Published: Dec 12, 2023 by Isaac Johnson

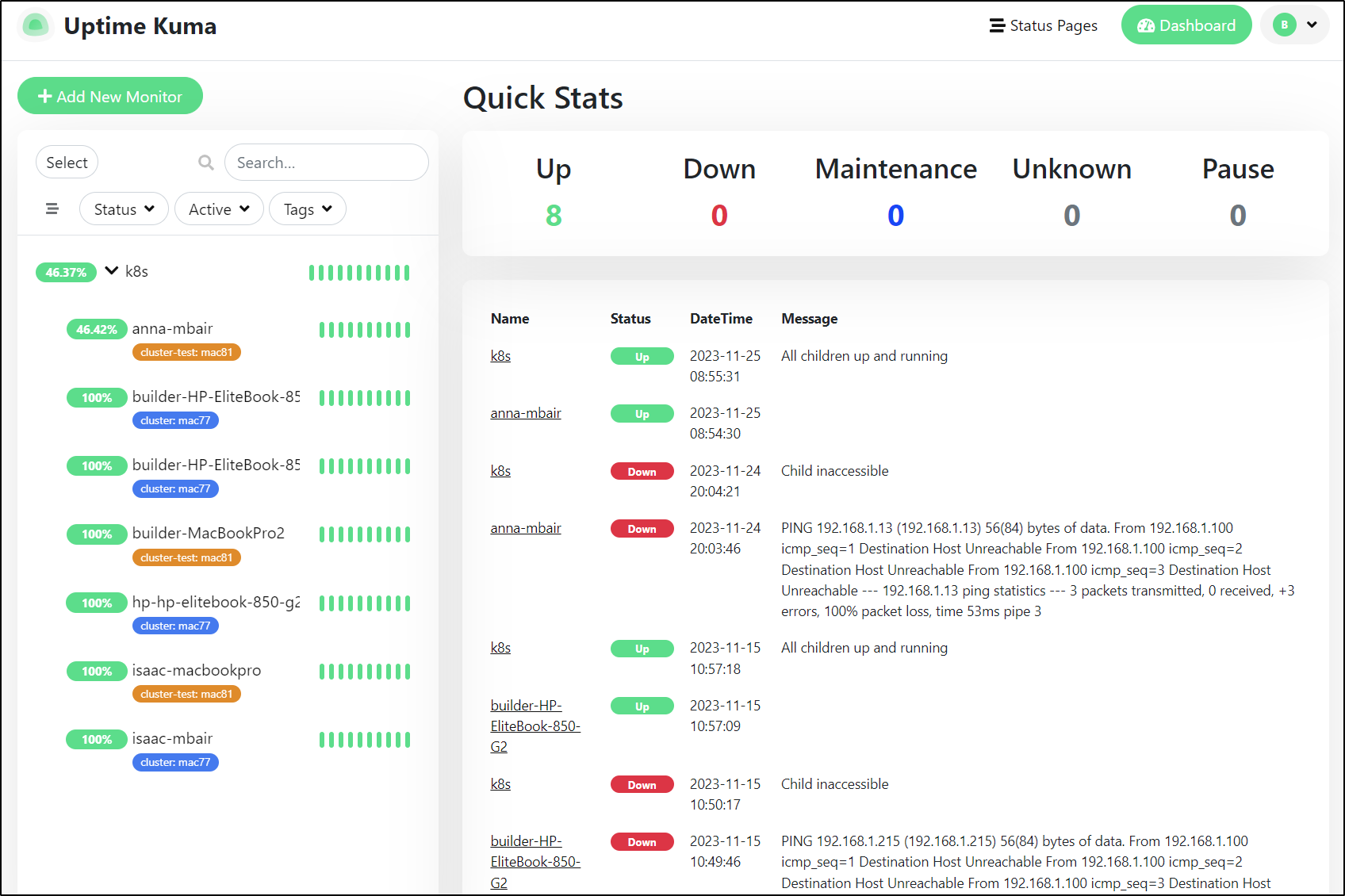

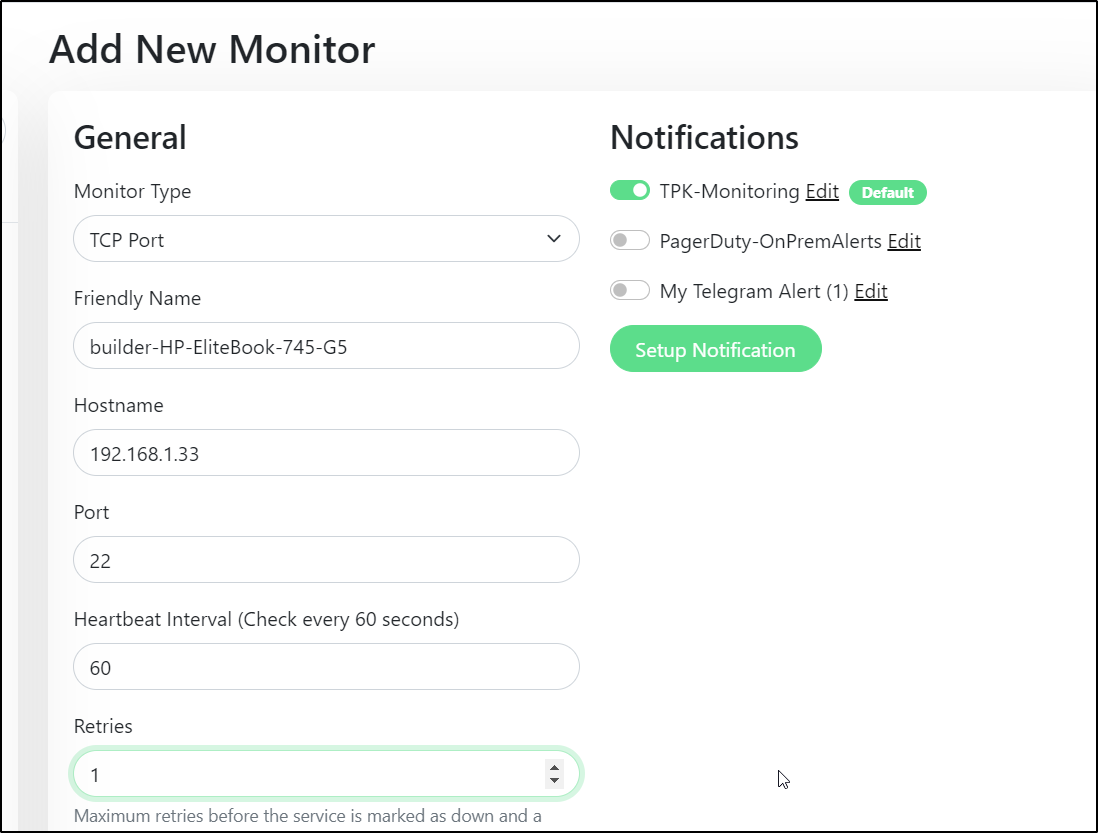

We’ll cover two key areas today. The first came up when I discovered a physical utility machine was offline. I powered it up easily enough (it has an on button), but I decided I needed to properly monitor it with Uptime Kuma. It also gave a good opportunity to illustrate tying Uptime Kuma to Matrix rooms for monitoring.

The other improvement we’ll cover is upgrading Gitea. I upgraded it not that long ago and it got totally hosed. This time we’ll do a bugfix update, then proceed to a minor release. The unforeseen problem I had to deal with this time was a crashing Redis cluster. We’ll go over the many things I tried, then how I succeeded in bringing Redis (and thus Gitea) back online.

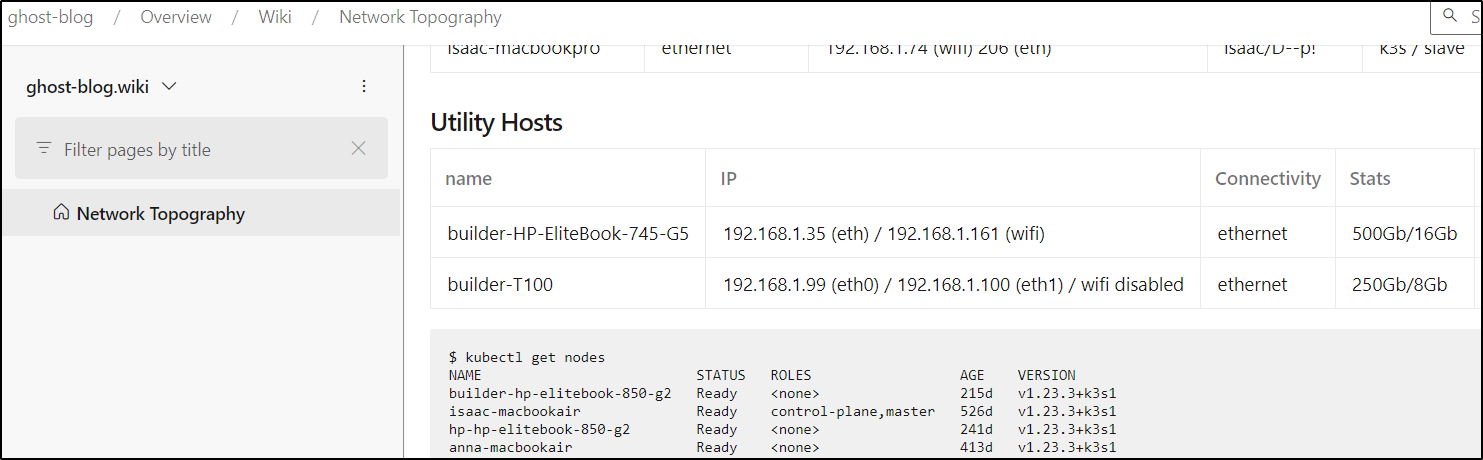

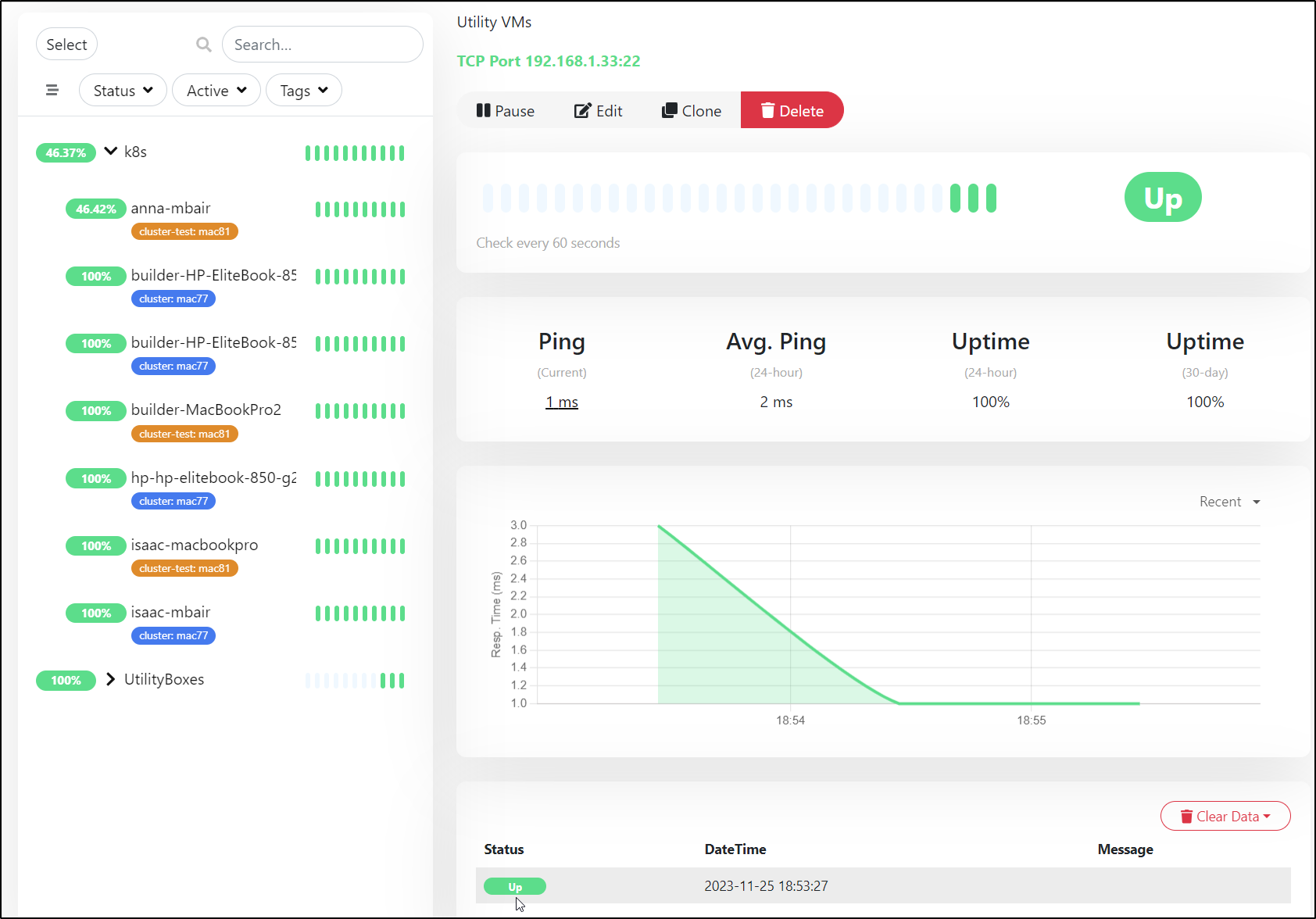

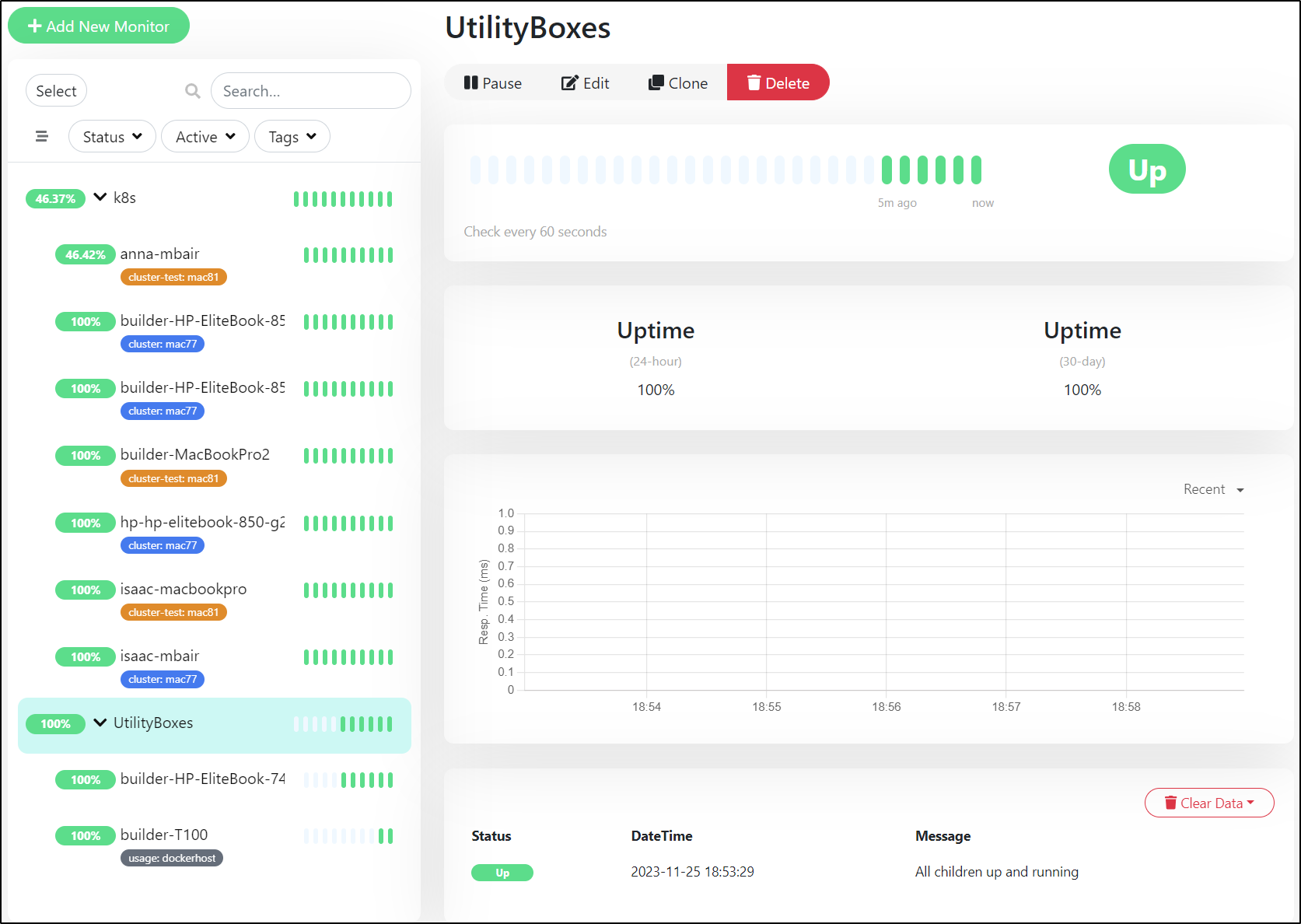

Utility VMs

I found that one of my utility boxes was down.

One of the reasons I never noticed was that Uptime Kuma was really only monitoring my Kubernetes clusters.

I’ll now add the host on its eth0 address (.33).

I can see ping tests are now responding.

I also added my DockerHost to the group and used a label to denote its usage.

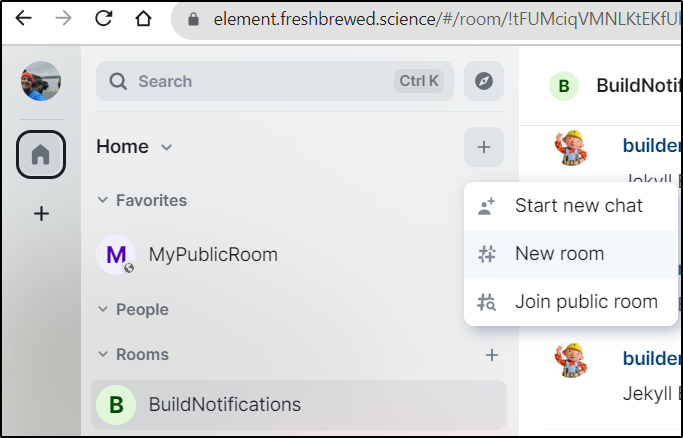

Matrix Room

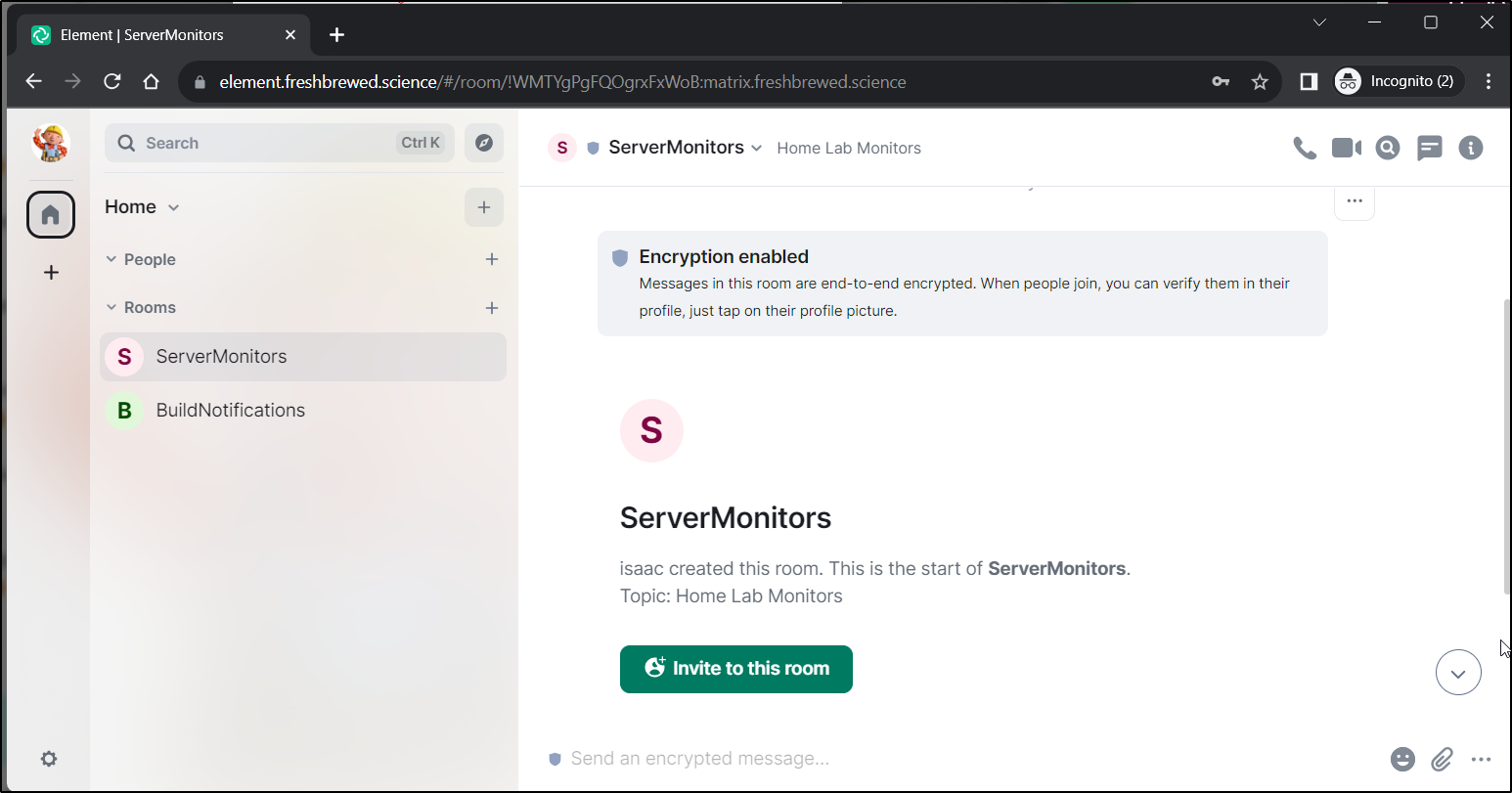

Let’s first add a Room

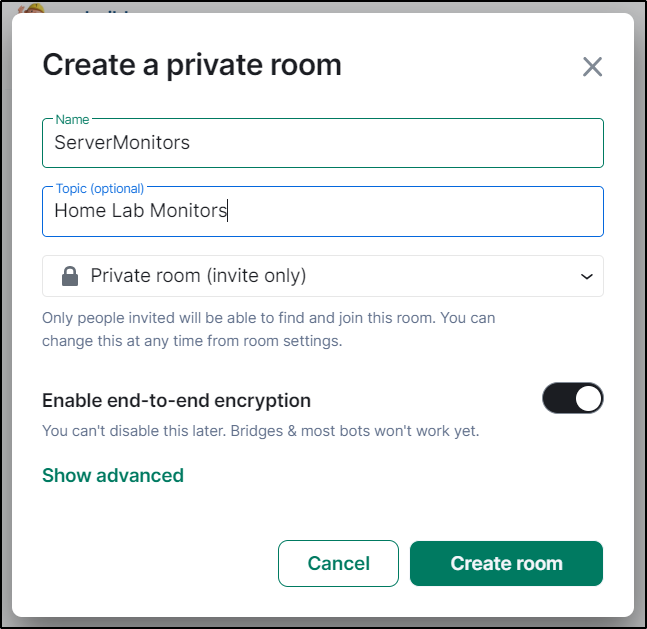

I’ll give it a name and create the room

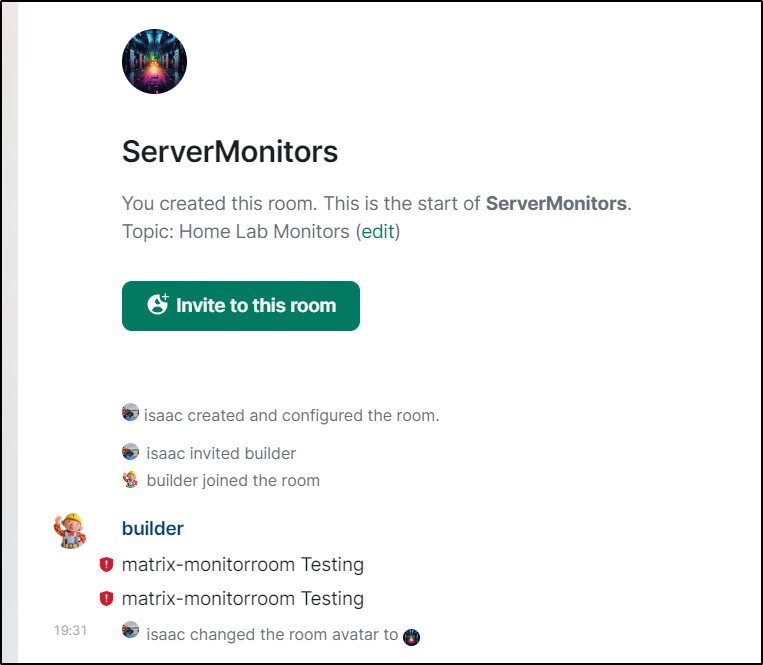

I needed to not only invite my ‘builder’ user but also login (via element) to accept the invite. Once I was able to see the room as builder

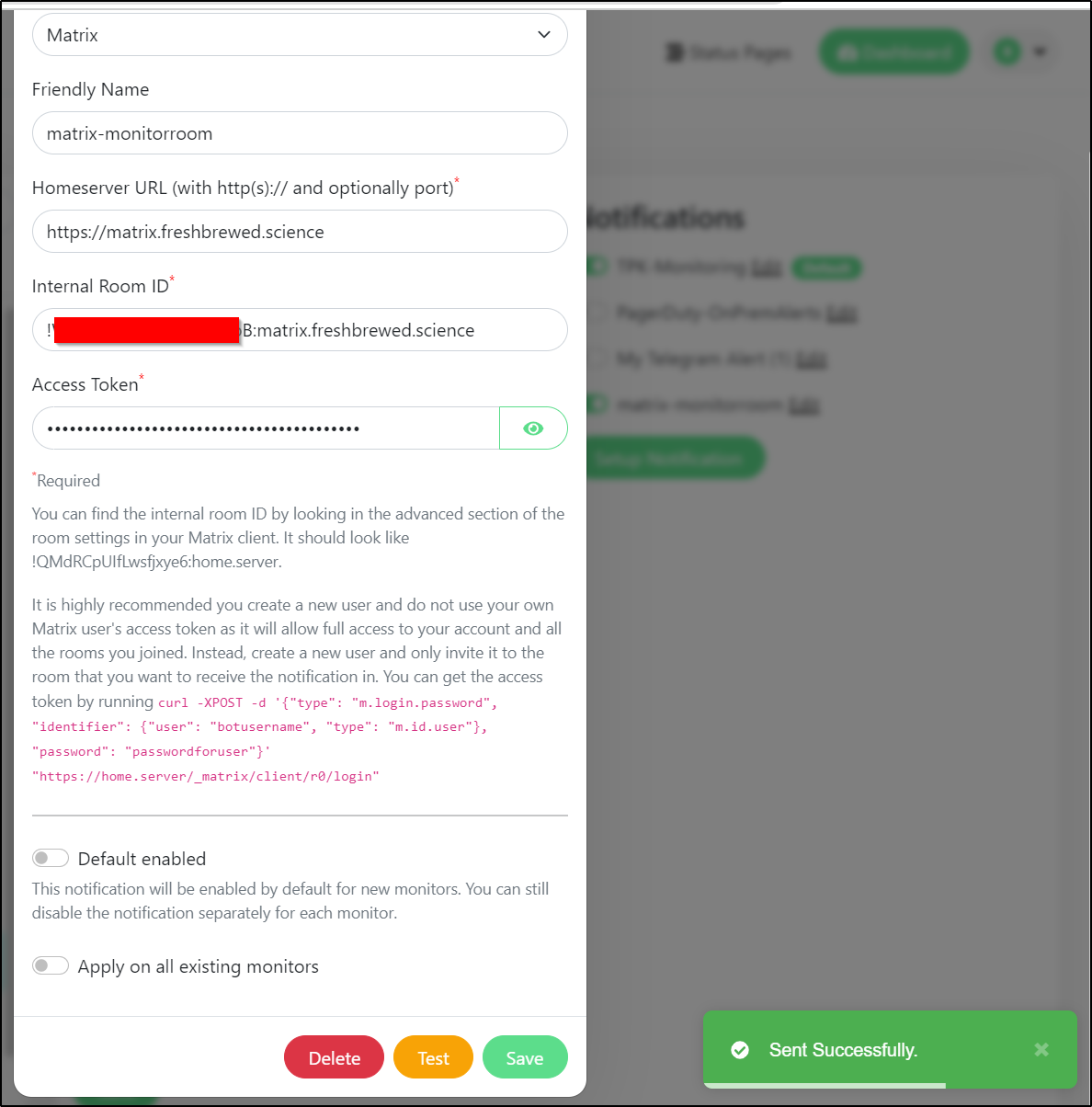

then my Matrix test worked

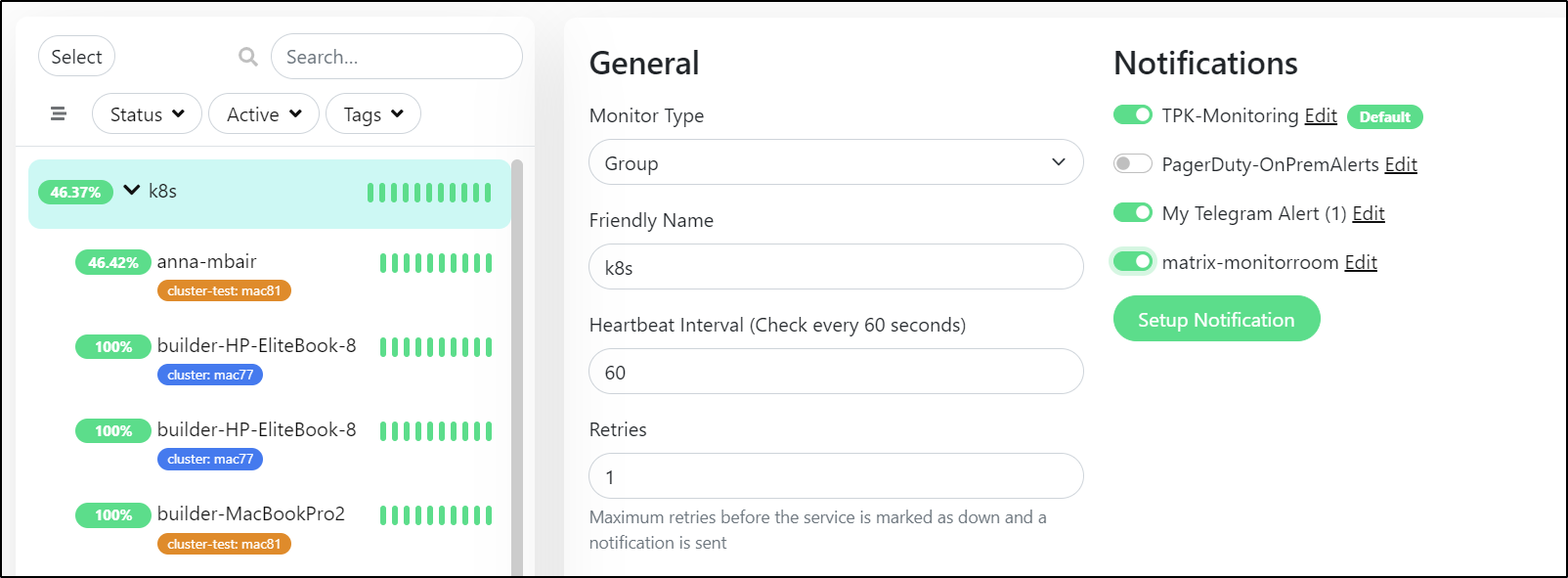

Lastly, I enabled the same monitoring for K8s.

I changed the room icon and could see the test pushes from Uptime Kuma in the room.
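For the curious: as far as I can tell, Uptime Kuma’s Matrix notification asks for a homeserver URL, an internal room ID and an access token. Those line up with the Matrix client-server API’s send-message endpoint. This is also why builder had to accept the invite first, since only joined members can post to a room. Below is a minimal sketch of sending the same kind of test message by hand with curl. The homeserver, room ID and token are placeholders, and the helper names are hypothetical. I’m not claiming this is byte-for-byte what Uptime Kuma sends.

```shell
# Hypothetical helpers; homeserver, room ID and token are placeholders.

# Build the client-server endpoint for sending a room message.
# Room IDs look like !opaque:server, so "!" and ":" are percent-encoded.
matrix_send_url() {
  local homeserver="${1%/}"   # drop a trailing slash, if any
  local encoded
  encoded=$(printf '%s' "$2" | sed 's/!/%21/g; s/:/%3A/g')
  printf '%s/_matrix/client/v3/rooms/%s/send/m.room.message/%s\n' \
    "$homeserver" "$encoded" "$3"
}

# Send a plain-text message as the bot user. The body is pasted into JSON
# verbatim, so keep it free of quotes and backslashes in this sketch.
matrix_send() {
  # A fresh transaction ID; reusing one makes the server treat it as a retry.
  local txn_id="test-$(date +%s)"
  curl -sS -X PUT \
    -H "Authorization: Bearer $3" \
    -H 'Content-Type: application/json' \
    --data "{\"msgtype\":\"m.text\",\"body\":\"$4\"}" \
    "$(matrix_send_url "$1" "$2" "$txn_id")"
}

# Usage (placeholders):
#   matrix_send https://matrix.example.com '!roomid:example.com' "$TOKEN" 'Hello from the shell'
```

If that call returns an error about the user not being in the room, the bot account still needs to accept its invite, just as builder did above.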

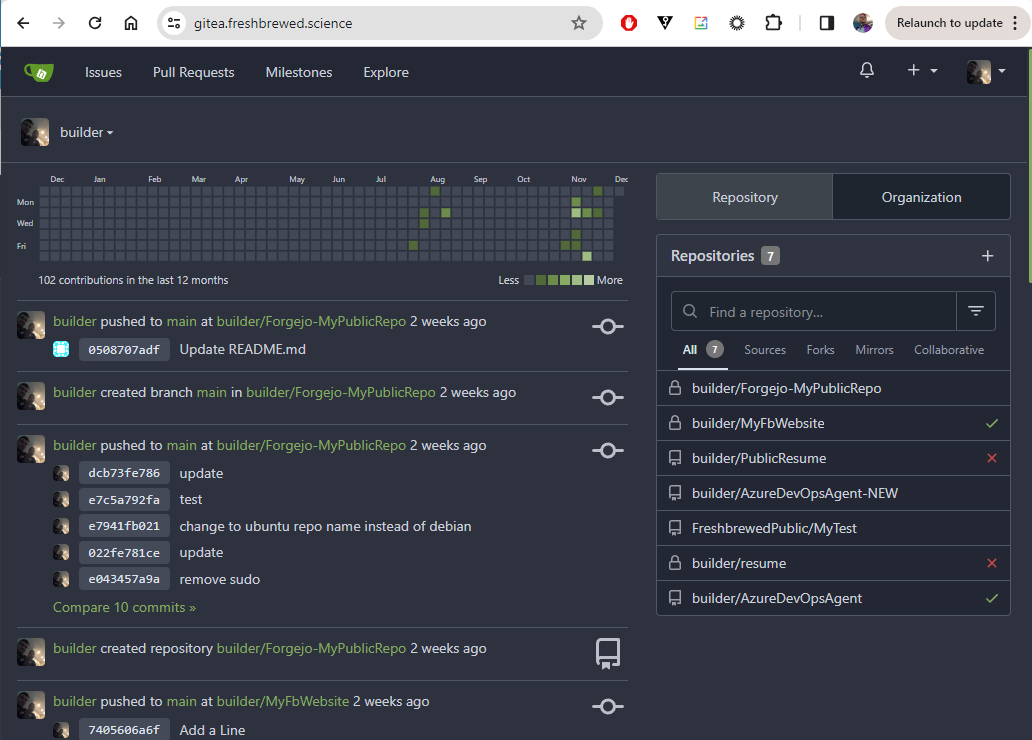

Upgrading Gitea

Let’s talk about two kinds of upgrades.

The first is a bugfix update: we have a Gitea instance and just want to keep it up to date with the security and bugfix updates for our release.

We can see our Gitea chart is at 9.5.1:

```
$ helm list
NAME        NAMESPACE  REVISION  UPDATED                                  STATUS    CHART             APP VERSION
argo-cd     default    1         2022-10-03 14:55:02.238016 -0500 CDT     deployed  argo-cd-1.0.1
azure-vote  default    1         2022-07-27 06:14:33.598900543 -0500 CDT  deployed  azure-vote-0.1.1
gitea       default    11        2023-11-07 08:10:24.849544239 -0600 CST  deployed  gitea-9.5.1       1.20.5
```

We’ll want to grab our current values:

```
$ helm get values gitea -o yaml > gitea.values.passwordsandall.yaml
```
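That file holds secrets (hence the name), so it’s worth writing it with owner-only permissions. Below is a small sketch, assuming a Linux shell. backup_values and the HELM override are my own hypothetical helpers, not part of Helm.

```shell
# Save a release's user-supplied values with owner-only permissions, since the
# file contains passwords. HELM overrides the binary (defaults to helm).
# Note: umask only affects newly created files; an existing file keeps its mode.
backup_values() {
  local release="$1"
  local out="${2:-$release.values.$(date +%Y%m%d%H%M%S).yaml}"
  # Subshell so the stricter umask does not leak into the caller's shell.
  ( umask 077 && "${HELM:-helm}" get values "$release" -o yaml > "$out" ) || return 1
  printf '%s\n' "$out"
}

# Usage:
#   backup_values gitea gitea.values.passwordsandall.yaml
```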

There are now two approaches we can take: download the chart, or point at the chart repo and specify the version.

In the first approach, I can download the 9.5.1 chart directly from the Gitea charts site:

```
builder@DESKTOP-QADGF36:~$ cd giteachart/
builder@DESKTOP-QADGF36:~/giteachart$ wget https://dl.gitea.com/charts/gitea-9.5.1.tgz
--2023-12-02 06:32:13-- https://dl.gitea.com/charts/gitea-9.5.1.tgz
Resolving dl.gitea.com (dl.gitea.com)... 18.160.249.54, 18.160.249.45, 18.160.249.44, ...
Connecting to dl.gitea.com (dl.gitea.com)|18.160.249.54|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 288892 (282K) [application/x-tar]
Saving to: ‘gitea-9.5.1.tgz’

gitea-9.5.1.tgz 100%[==========================================================================================================================>] 282.12K --.-KB/s in 0.07s

2023-12-02 06:32:13 (3.85 MB/s) - ‘gitea-9.5.1.tgz’ saved [288892/288892]

builder@DESKTOP-QADGF36:~/giteachart$ tar -xzf ./gitea-9.5.1.tgz
builder@DESKTOP-QADGF36:~/giteachart$ ls -ltra
total 304
-rw-r--r--  1 builder builder 288892 Oct 17 12:27 gitea-9.5.1.tgz
drwxr-xr-x 59 builder builder  12288 Dec  2 06:32 ..
drwxr-xr-x  5 builder builder   4096 Dec  2 06:32 gitea
drwxr-xr-x  3 builder builder   4096 Dec  2 06:32 .
builder@DESKTOP-QADGF36:~/giteachart$ ls -ltra gitea
total 140
-rw-r--r-- 1 builder builder 20792 Oct 17 12:27 values.yaml
-rw-r--r-- 1 builder builder   981 Oct 17 12:27 renovate.json5
-rw-r--r-- 1 builder builder 63376 Oct 17 12:27 README.md
-rw-r--r-- 1 builder builder  1174 Oct 17 12:27 LICENSE
-rw-r--r-- 1 builder builder  1108 Oct 17 12:27 Chart.yaml
-rw-r--r-- 1 builder builder   422 Oct 17 12:27 Chart.lock
-rw-r--r-- 1 builder builder   293 Oct 17 12:27 .yamllint
-rw-r--r-- 1 builder builder    10 Oct 17 12:27 .prettierignore
-rw-r--r-- 1 builder builder   488 Oct 17 12:27 .helmignore
-rw-r--r-- 1 builder builder   230 Oct 17 12:27 .editorconfig
drwxr-xr-x 4 builder builder  4096 Dec  2 06:32 templates
drwxr-xr-x 2 builder builder  4096 Dec  2 06:32 docs
drwxr-xr-x 3 builder builder  4096 Dec  2 06:32 ..
drwxr-xr-x 5 builder builder  4096 Dec  2 06:32 .
drwxr-xr-x 5 builder builder  4096 Dec  2 06:32 charts
```

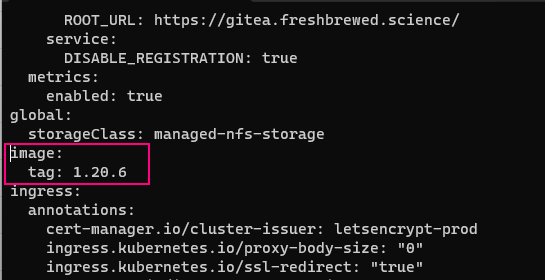

Now, with my values, I can upgrade by passing the new tag and pointing at the locally downloaded chart:

```
builder@DESKTOP-QADGF36:~/giteachart$ helm upgrade --dry-run gitea -f ./gitea.values.passwordsandall.yaml ./gitea | grep -i image
image: "busybox:latest"
image: "gitea/gitea:1.20.5-rootless"
imagePullPolicy: Always
image: "gitea/gitea:1.20.5-rootless"
imagePullPolicy: Always
image: "gitea/gitea:1.20.5-rootless"
imagePullPolicy: Always
image: "gitea/gitea:1.20.5-rootless"
imagePullPolicy: Always
image: docker.io/bitnami/redis-cluster:7.2.1-debian-11-r26
imagePullPolicy: "IfNotPresent"

# Now with the image.tag specified
builder@DESKTOP-QADGF36:~/giteachart$ helm upgrade --dry-run gitea -f ./gitea.values.passwordsandall.yaml --set image.tag=1.20.6 ./gitea | grep -i image
image: "busybox:latest"
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: Always
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: Always
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: Always
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: Always
image: docker.io/bitnami/redis-cluster:7.2.1-debian-11-r26
imagePullPolicy: "IfNotPresent"
```
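Grepping for image also catches the imagePullPolicy lines. Below is a slightly tighter sketch that lists only the distinct images a dry run would deploy. rendered_images is a hypothetical helper name, and it assumes GNU grep and sed.

```shell
# List each distinct image a rendered chart would run, one per line.
# Reads manifests on stdin (for example helm upgrade --dry-run output).
rendered_images() {
  grep -E '^[[:space:]]*(- )?image:' |
    sed -E 's/^[[:space:]]*(- )?image:[[:space:]]*//; s/^"(.*)"$/\1/' |
    LC_ALL=C sort -u
}

# Usage:
#   helm upgrade --dry-run gitea -f ./gitea.values.passwordsandall.yaml \
#     --set image.tag=1.20.6 ./gitea | rendered_images
```

Piping the dry run through it should give one line per image, instead of the repeated image/imagePullPolicy pairs above.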

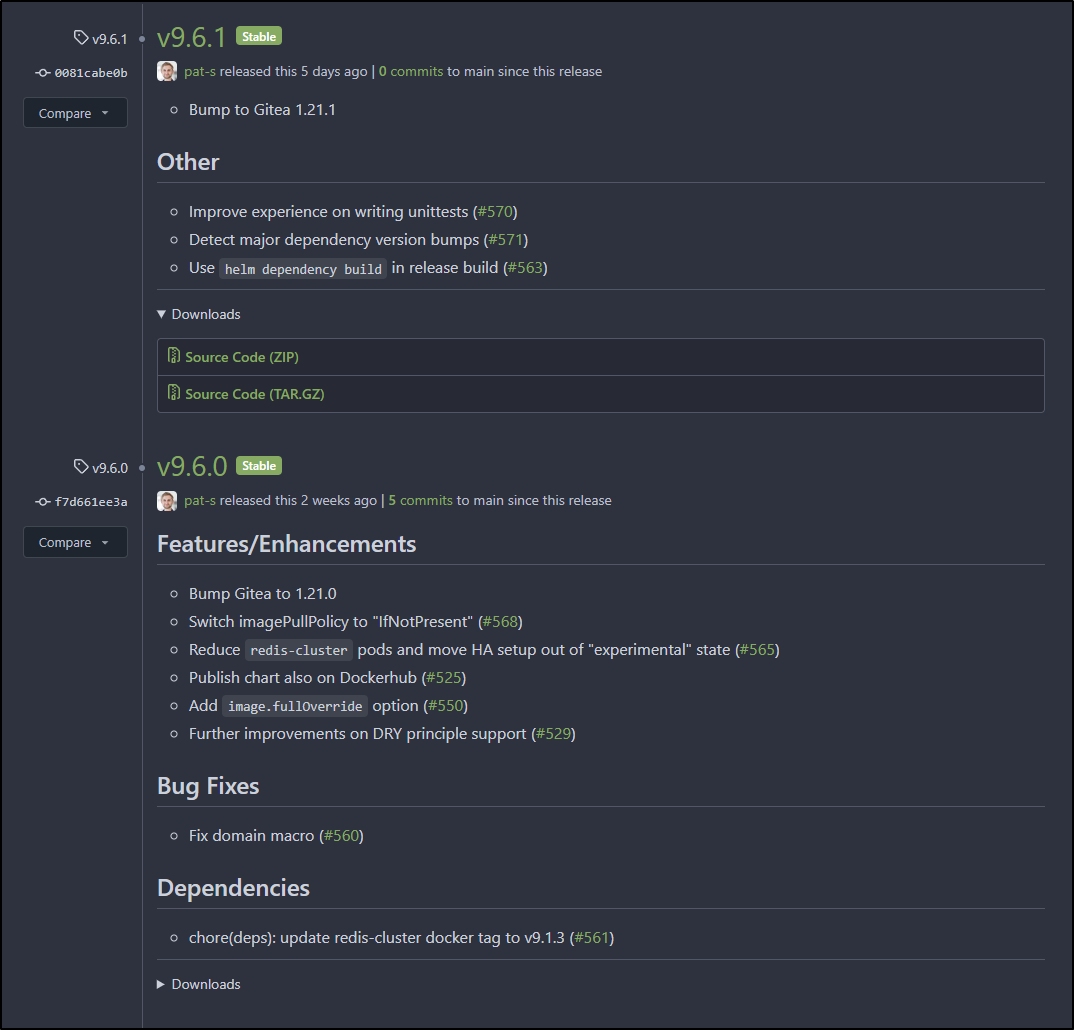

Had there been a chart version that referenced 1.20.6, we could simply have upgraded to that chart version. However, chart versions 9.6.0 and 9.6.1 move to Gitea 1.21.
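One way to check that before picking a route is to map chart versions to app versions from the repo index. helm search repo with --versions prints CHART VERSION and APP VERSION columns. The awk filter below assumes that column layout, and charts_for_app is a hypothetical helper.

```shell
# Given `helm search repo <chart> --versions` on stdin, print the chart
# versions whose APP VERSION starts with the prefix in $1.
charts_for_app() {
  # NR > 1 skips the header; $2 is CHART VERSION, $3 is APP VERSION.
  awk -v want="$1" 'NR > 1 && index($3, want) == 1 { print $2 }'
}

# Usage:
#   helm search repo gitea-charts/gitea --versions | charts_for_app 1.20.6
```

Empty output means no published chart ships that app version. In that case, the image.tag route below is the way to go.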

Bugfix release upgrade

Let’s perform this upgrade using the image tag:

```
$ wget https://dl.gitea.com/charts/gitea-9.5.1.tgz
$ tar -xzf ./gitea-9.5.1.tgz
$ helm get values gitea -o yaml > gitea.values.passwordsandall.yaml
$ helm upgrade gitea -f ./gitea.values.passwordsandall.yaml --set image.tag=1.20.6 ./gitea
```
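If you want a safety net, you can wrap the same upgrade so that it renders first and asks Helm to roll back if the new pods never become ready. Below is a sketch under those assumptions. bugfix_upgrade and the HELM override are hypothetical; --dry-run and --atomic are regular helm upgrade flags.

```shell
# Sketch: render first, then upgrade with automatic rollback.
# HELM overrides the binary (defaults to helm); all names are parameters.
bugfix_upgrade() {
  local release="$1" chart="$2" tag="$3" values="$4"
  local helm="${HELM:-helm}"
  # Dry run: fails fast on a bad chart path, values file or --set value.
  "$helm" upgrade --dry-run "$release" -f "$values" --set "image.tag=$tag" "$chart" >/dev/null ||
    return 1
  # --atomic implies --wait and rolls the release back if it never becomes ready.
  "$helm" upgrade --atomic "$release" -f "$values" --set "image.tag=$tag" "$chart"
}

# Usage:
#   bugfix_upgrade gitea ./gitea 1.20.6 ./gitea.values.passwordsandall.yaml
```

Keep in mind that a Helm rollback only reverts Kubernetes objects. Data already written to PVCs (such as Redis’ AOF files) is not restored.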

Minor release upgrade

Going from one minor release to another means one should really read the release notes. Last time, I neglected to do this, didn’t take note that they dropped MySQL, and had a bad day.

Note: MySQL/MariaDB was not dropped. However, I would find that there are some major changes between the 8.x and 9.x charts; read more about that here.

The chart’s release page details the differences from the chart’s perspective.

We can see the only real thing to watch is the Redis cluster dependency bump to 9.1.3. This means the Redis cluster might also bounce.

To be honest, I’ve had one of the Redis pods in a crashloop the whole time and the app has been fine:

```
$ kubectl get pods -l app.kubernetes.io/instance=gitea
NAME                     READY   STATUS             RESTARTS           AGE
gitea-redis-cluster-0    1/1     Running            1 (16d ago)        16d
gitea-redis-cluster-3    1/1     Running            1 (16d ago)        16d
gitea-redis-cluster-2    1/1     Running            0                  24d
gitea-redis-cluster-1    1/1     Running            0                  24d
gitea-redis-cluster-4    1/1     Running            4 (15d ago)        24d
gitea-5898bd5c79-pgt5q   1/1     Running            0                  7m7s
gitea-redis-cluster-5    0/1     CrashLoopBackOff   4764 (3m52s ago)   16d
```
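CrashLoopBackOff is a container waiting reason, not a pod phase. The pod’s phase stays Running, so a status.phase field selector would not catch it. Checking the READY column does. Below is a small sketch (not_ready_pods is a hypothetical helper) that reads plain kubectl get pods output.

```shell
# Print pods from `kubectl get pods` output (stdin) that are not fully ready
# or whose STATUS column is not Running.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")               # READY is "<ready>/<total>"
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}

# Usage:
#   kubectl get pods -l app.kubernetes.io/instance=gitea | not_ready_pods
```

Against the listing above, this flags only gitea-redis-cluster-5.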

Download approach

First, we can do this with a downloaded chart.

I’ll move my 9.5.1 chart out of the way and get the latest, 9.6.1:

```
builder@DESKTOP-QADGF36:~/giteachart$ ls
gitea  gitea-9.5.1.tgz  gitea.values.passwordsandall.yaml  o
builder@DESKTOP-QADGF36:~/giteachart$ mv gitea gitea_951
builder@DESKTOP-QADGF36:~/giteachart$ wget https://dl.gitea.com/charts/gitea-9.6.1.tgz
--2023-12-02 06:59:49-- https://dl.gitea.com/charts/gitea-9.6.1.tgz
Resolving dl.gitea.com (dl.gitea.com)... 18.160.181.93, 18.160.181.70, 18.160.181.23, ...
Connecting to dl.gitea.com (dl.gitea.com)|18.160.181.93|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 290634 (284K) [application/x-tar]
Saving to: ‘gitea-9.6.1.tgz’

gitea-9.6.1.tgz 100%[================================================>] 283.82K --.-KB/s in 0.06s

2023-12-02 06:59:49 (4.81 MB/s) - ‘gitea-9.6.1.tgz’ saved [290634/290634]

builder@DESKTOP-QADGF36:~/giteachart$ tar -xzf gitea-9.6.1.tgz
builder@DESKTOP-QADGF36:~/giteachart$ ls -l
total 604
drwxr-xr-x 5 builder builder   4096 Dec  2 06:59 gitea
-rw-r--r-- 1 builder builder 288892 Oct 17 12:27 gitea-9.5.1.tgz
-rw-r--r-- 1 builder builder 290634 Nov 27 15:02 gitea-9.6.1.tgz
-rw-r--r-- 1 builder builder   1700 Dec  2 06:33 gitea.values.passwordsandall.yaml
drwxr-xr-x 5 builder builder   4096 Dec  2 06:32 gitea_951
-rw-r--r-- 1 builder builder  21985 Dec  2 06:39 o
```

Before we continue, be aware that IF we set the image tag in our prior steps, the new chart won’t override it, because helm get values returns that setting along with the rest of our values:

```
# Using our values with a set image
builder@DESKTOP-QADGF36:~/giteachart$ helm get values gitea -o yaml > gitea.values.passwordsandall.yaml.NEW
builder@DESKTOP-QADGF36:~/giteachart$ helm upgrade --dry-run gitea -f ./gitea.values.passwordsandall.yaml.NEW ./gitea | grep -i Image
image: "busybox:latest"
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.20.6-rootless"
imagePullPolicy: IfNotPresent
image: docker.io/bitnami/redis-cluster:7.2.3-debian-11-r1
imagePullPolicy: "IfNotPresent"

# Using the values where we didn't set an image
builder@DESKTOP-QADGF36:~/giteachart$ helm upgrade --dry-run gitea -f ./gitea.values.passwordsandall.yaml ./gitea | grep -i Image
image: "busybox:latest"
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: docker.io/bitnami/redis-cluster:7.2.3-debian-11-r1
imagePullPolicy: "IfNotPresent"
```

To correct that, just remove the ‘image.tag’ block from the values file, or force the chart to determine the right image by adding --set image.tag="" when invoking it:

```
builder@DESKTOP-QADGF36:~/giteachart$ helm upgrade --dry-run gitea -f ./gitea.values.passwordsandall.yaml.NEW --set image.tag="" ./gitea | grep -i Image
image: "busybox:latest"
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: docker.io/bitnami/redis-cluster:7.2.3-debian-11-r1
imagePullPolicy: "IfNotPresent"
```
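To spot a leftover pin before it bites, you can check the saved values file for a tag under the top-level image key. Below is a sketch; pinned_image_tag is a hypothetical helper. It is a plain-text scan, not a real YAML parser, so it assumes the block-style YAML that helm get values -o yaml emits.

```shell
# Print the image tag pinned in a Helm values file, if any. Only "tag:"
# entries under the top-level "image:" key count; nested blocks such as
# redis-cluster.image are ignored. An explicit empty tag prints as "".
pinned_image_tag() {
  awk '
    /^[^ \t#]/ { in_image = ($0 ~ /^image:/) }   # a new top-level key starts
    in_image && /^[ \t]+tag:/ {
      sub(/^[ \t]+tag:[ \t]*/, "")
      print
      exit
    }
  ' "$1"
}

# Usage:
#   helm get values gitea -o yaml > current.yaml
#   pinned_image_tag current.yaml
```

After the bugfix upgrade above, this should report 1.20.6. That is the value that kept the 9.6.1 chart on the old image.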

From Chart repo

Knowing that we want the latest chart, we can also just upgrade using their chart repo:

```
builder@DESKTOP-QADGF36:~/giteachart$ helm repo add gitea-charts https://dl.gitea.com/charts/
"gitea-charts" already exists with the same configuration, skipping
builder@DESKTOP-QADGF36:~/giteachart$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Unable to get an update from the "freshbrewed" chart repository (https://harbor.freshbrewed.science/chartrepo/library):
        failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
...Unable to get an update from the "myharbor" chart repository (https://harbor.freshbrewed.science/chartrepo/library): failed to fetch https://harbor.freshbrewed.science/chartrepo/library/index.yaml : 404 Not Found
...Successfully got an update from the "nfs" chart repository
...Successfully got an update from the "azure-samples" chart repository
...Successfully got an update from the "jfelten" chart repository
...Successfully got an update from the "ngrok" chart repository
...Successfully got an update from the "adwerx" chart repository
...Successfully got an update from the "akomljen-charts" chart repository
...Successfully got an update from the "kube-state-metrics" chart repository
...Successfully got an update from the "confluentinc" chart repository
...Successfully got an update from the "hashicorp" chart repository
...Successfully got an update from the "kuma" chart repository
...Successfully got an update from the "btungut" chart repository
...Successfully got an update from the "sonarqube" chart repository
...Successfully got an update from the "elastic" chart repository
...Successfully got an update from the "rook-release" chart repository
...Successfully got an update from the "harbor" chart repository
...Successfully got an update from the "sumologic" chart repository
...Successfully got an update from the "nginx-stable" chart repository
...Successfully got an update from the "kiwigrid" chart repository
...Successfully got an update from the "datadog" chart repository
...Successfully got an update from the "rancher-latest" chart repository
...Successfully got an update from the "argo-cd" chart repository
...Successfully got an update from the "incubator" chart repository
...Successfully got an update from the "newrelic" chart repository
...Successfully got an update from the "prometheus-community" chart repository
...Successfully got an update from the "opencost" chart repository
...Successfully got an update from the "portainer" chart repository
...Successfully got an update from the "zabbix-community" chart repository
...Successfully got an update from the "actions-runner-controller" chart repository
...Successfully got an update from the "dapr" chart repository
...Successfully got an update from the "novum-rgi-helm" chart repository
...Successfully got an update from the "gitea-charts" chart repository
...Successfully got an update from the "rhcharts" chart repository
...Successfully got an update from the "castai-helm" chart repository
...Successfully got an update from the "open-telemetry" chart repository
...Successfully got an update from the "longhorn" chart repository
...Successfully got an update from the "lifen-charts" chart repository
...Successfully got an update from the "kubecost" chart repository
...Unable to get an update from the "epsagon" chart repository (https://helm.epsagon.com):
        Get "https://helm.epsagon.com/index.yaml": dial tcp: lookup helm.epsagon.com on 172.22.64.1:53: no such host
...Successfully got an update from the "signoz" chart repository
...Successfully got an update from the "openzipkin" chart repository
...Successfully got an update from the "bitnami" chart repository
...Successfully got an update from the "grafana" chart repository
...Successfully got an update from the "crossplane-stable" chart repository
...Successfully got an update from the "uptime-kuma" chart repository
...Successfully got an update from the "gitlab" chart repository
Update Complete. ⎈Happy Helming!⎈
```

Then, instead of a local path, we use their repo/chart name to upgrade:

```
builder@DESKTOP-QADGF36:~/giteachart$ helm upgrade --dry-run gitea -f ./gitea.values.passwordsandall.yaml.NEW --set image.tag="" gitea-charts/gitea | grep -i Image
image: "busybox:latest"
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: "gitea/gitea:1.21.1-rootless"
imagePullPolicy: IfNotPresent
image: docker.io/bitnami/redis-cluster:7.2.3-debian-11-r1
imagePullPolicy: "IfNotPresent"
```
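Upgrading straight from the repo floats to whatever chart is newest at the time you run it. Pinning --version makes a re-run install the same chart. Recent Helm 3 releases also let helm repo update take repo names, which would skip the noisy failures from unrelated repos above. On older Helm versions, drop that argument. Below is a sketch; repo_upgrade and the HELM override are hypothetical.

```shell
# Sketch: refresh one repo, then upgrade from it with the chart version pinned.
# HELM overrides the binary (defaults to helm).
repo_upgrade() {
  local release="$1" chart_ref="$2" version="$3" values="$4"
  local helm="${HELM:-helm}"
  # Refresh just the repo named before the slash (e.g. gitea-charts).
  "$helm" repo update "${chart_ref%%/*}" || return 1
  # --version pins the chart; image.tag= clears a tag pinned by an earlier --set.
  "$helm" upgrade "$release" "$chart_ref" --version "$version" \
    -f "$values" --set image.tag=
}

# Usage (add --dry-run to the upgrade line while testing):
#   repo_upgrade gitea gitea-charts/gitea 9.6.1 ./gitea.values.passwordsandall.yaml.NEW
```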

Issues: Redis Crashes

I got stuck with Redis cluster crashes:

```
kubectl get pods -l app.kubernetes.io/instance=gitea          DESKTOP-QADGF36: Sun Dec 3 07:08:27 2023
NAME                     READY   STATUS             RESTARTS          AGE
gitea-5898bd5c79-pgt5q   1/1     Running            0                 24h
gitea-redis-cluster-2    1/1     Running            0                 23h
gitea-redis-cluster-0    0/1     CrashLoopBackOff   285 (2m42s ago)   23h
gitea-dfccfb879-k77qq    0/1     CrashLoopBackOff   282 (43s ago)     23h
gitea-redis-cluster-1    0/1     CrashLoopBackOff   285 (5s ago)      23h
```

Luckily, the old (1.20.6) Gitea pod was still running, but the new Redis cluster seemed to be stuck in an endless restart loop:

```
$ kubectl logs gitea-redis-cluster-0
redis-cluster 13:05:46.80 INFO ==>
redis-cluster 13:05:46.80 INFO ==> Welcome to the Bitnami redis-cluster container
redis-cluster 13:05:46.80 INFO ==> Subscribe to project updates by watching https://github.com/bitnami/containers
redis-cluster 13:05:46.81 INFO ==> Submit issues and feature requests at https://github.com/bitnami/containers/issues
redis-cluster 13:05:46.81 INFO ==>
redis-cluster 13:05:46.81 INFO ==> ** Starting Redis setup **
redis-cluster 13:05:46.83 WARN ==> You set the environment variable ALLOW_EMPTY_PASSWORD=yes. For safety reasons, do not use this flag in a production environment.
redis-cluster 13:05:46.84 INFO ==> Initializing Redis
redis-cluster 13:05:46.85 INFO ==> Setting Redis config file
redis-cluster 13:05:46.92 INFO ==> Changing old IP 10.42.3.30 by the new one 10.42.3.30
redis-cluster 13:05:46.97 INFO ==> Changing old IP 10.42.1.48 by the new one 10.42.1.48
redis-cluster 13:05:47.00 INFO ==> Changing old IP 10.42.0.183 by the new one 10.42.0.183
redis-cluster 13:05:47.01 INFO ==> ** Redis setup finished! **
1:C 03 Dec 2023 13:05:47.057 # WARNING: Changing databases number from 16 to 1 since we are in cluster mode
1:C 03 Dec 2023 13:05:47.057 * oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
1:C 03 Dec 2023 13:05:47.057 * Redis version=7.2.3, bits=64, commit=00000000, modified=0, pid=1, just started
1:C 03 Dec 2023 13:05:47.057 * Configuration loaded
1:M 03 Dec 2023 13:05:47.057 * monotonic clock: POSIX clock_gettime
[Redis ASCII-art banner: Redis 7.2.3 (00000000/0) 64 bit, Running in cluster mode, Port: 6379, PID: 1, https://redis.io]
1:M 03 Dec 2023 13:05:47.062 * Node configuration loaded, I'm eae710660c9ba961343e4ca9f78ae5721bb4b408
1:M 03 Dec 2023 13:05:47.062 * Server initialized
1:M 03 Dec 2023 13:05:47.133 # Unable to obtain the AOF file appendonly.aof.2.base.rdb length. stat: Permission denied
```
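The last log line is the real clue: Redis could not stat its own AOF base file. stat needs search permission on every parent directory, not just access to the file. So, from a root shell on the volume (such as the ‘fix’ pod in this post), it’s worth listing everything in the data directory that the Redis UID does not own. Below is a sketch. The UID 1001 in the usage line is my assumption about the Bitnami image’s non-root user, so check the pod’s securityContext for the real value.

```shell
# List files and directories under $1 that the numeric UID in $2 does not own.
files_not_owned_by() {
  find "$1" ! -user "$2" -print
}

# Usage from a root shell with the PVC mounted (UID 1001 is an assumption):
#   files_not_owned_by /bitnami/redis/data 1001
# A possible follow-up, not something I ran (NFS root_squash may block it):
#   chown -R 1001 /bitnami/redis/data
```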

Spoiler: as you’ll see at the end of this section, I ended up needing to remove the PVCs and the StatefulSet (STS) and then recreate them, but I wanted to show you how I got there.

I’ll create a ‘fix’ pod that mounts the same data volume and config as redis-cluster-0:

```
$ cat fixRedis0.yaml
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-pod-redis0
spec:
  containers:
    - name: ubuntu-container
      image: ubuntu
      command: ["tail", "-f", "/dev/null"]
      volumeMounts:
        - mountPath: /scripts
          name: scripts
        - mountPath: /bitnami/redis/data
          name: redis-data
        - mountPath: /opt/bitnami/redis/etc/redis-default.conf
          name: default-config
          subPath: redis-default.conf
        - mountPath: /opt/bitnami/redis/etc/
          name: redis-tmp-conf
  volumes:
    - name: redis-data
      persistentVolumeClaim:
        claimName: redis-data-gitea-redis-cluster-0
    - configMap:
        defaultMode: 493
        name: gitea-redis-cluster-scripts
      name: scripts
    - configMap:
        defaultMode: 420
        name: gitea-redis-cluster-default
      name: default-config
    - emptyDir: {}
      name: redis-tmp-conf

$ kubectl apply -f fixRedis0.yaml
pod/ubuntu-pod-redis0 created
```

I looked at the contents of the AOF base RDB file in the busted redis-cluster-0:

```
root@ubuntu-pod-redis0:/bitnami/redis/data/appendonlydir# cat /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb
REDIS0011� redis-ver7.2.1�
redis-bits�@�ctime��Teused-mem�`�aof-base����⸮�ԋ?commits-count-5-commit-b2abd6e99f93a5b66d9477a3c2824d31b0382c3c���^ԋ11fffeee7da0ad41�@Y@m����] string
uidint64�uname� builder�
_old_ >�1�<3x؋3e498fded5a5a418�@Y@m����] string
uname� builder�uidint64�
_old_ �1��'6520b7f1ef543fbc�@Z@m����] string
_old_uid�1�
int64�uname� builder���xԋ'system.setting.picture.disable_gravatarfalse��c�?commits-count-4-c�}root@ubuntu-pod-redis0:/bitnami/redis/data/appendonlydir#
root@ubuntu-pod-redis0:/bitnami/redis/data/appendonlydir# cp /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb > /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb.old
cp: missing destination file operand after '/bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb'
Try 'cp --help' for more information.
```

Perhaps if I move that out of the way and copy a generally empty one I saw from my working Redis cluster 2, that might work?

```
root@ubuntu-pod-redis0:/bitnami/redis/data/appendonlydir# cp /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb.old
root@ubuntu-pod-redis0:/bitnami/redis/data/appendonlydir# echo L2JpdG5hbWkvcmVkaXMvZGF0YS9hcHBlbmRvbmx5ZGlyL2FwcGVuZG9ubHkuYW9mLjEuYmFzZS5yZGIK | base64 --decode > /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb
root@ubuntu-pod-redis0:/bitnami/redis/data/appendonlydir# chmod 666 /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb
```

(In hindsight, that base64 string decodes to the path /bitnami/redis/data/appendonlydir/appendonly.aof.1.base.rdb, not to a file’s contents. This step wrote that path as text into the RDB file rather than copying cluster 2’s data.)
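For the record, moving a file through base64 only works if you encode the file’s bytes (base64 < file), not its path. Below is a minimal sketch of the mechanics with hypothetical helper names, plus a base64-free cross-pod pipe. The pipe assumes the Bitnami image has cat and that the paths match this post. It only fixes the copy itself. Whether another node’s AOF is a sensible replacement is a separate question, since Redis Cluster nodes can own different hash slots.

```shell
# Copy a file's bytes (not its path) through base64.
encode_file() { base64 < "$1" | tr -d '\n'; }   # single line, no wrapping
decode_to() { base64 -d > "$1"; }

# Hypothetical cross-pod copy without base64 (pod names from this post):
#   kubectl exec gitea-redis-cluster-2 -- cat /bitnami/redis/data/appendonlydir/appendonly.aof.1.base.rdb \
#     | kubectl exec -i ubuntu-pod-redis0 -- sh -c 'cat > /bitnami/redis/data/appendonlydir/appendonly.aof.2.base.rdb'
```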

I saw other guides that suggested doing a BGREWRITEAOF. Perhaps that would sort us out?

```
builder@DESKTOP-QADGF36:~$ kubectl exec -it gitea-redis-cluster-0 -- /bin/bash
I have no name!@gitea-redis-cluster-0:/$ which redis
I have no name!@gitea-redis-cluster-0:/$ which redis-cli
/opt/bitnami/redis/bin/redis-cli
I have no name!@gitea-redis-cluster-0:/$ redis-cli -a bgrewriteaof
Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.
AUTH failed: ERR AUTH <password> called without any password configured for the default user. Are you sure your configuration is correct?
127.0.0.1:6379>
127.0.0.1:6379> bgrewriteaof
Background append only file rewriting started
```

(The -a flag expects a password, so redis-cli took bgrewriteaof as the password, failed to authenticate, and dropped into the interactive prompt, where I then ran the command by hand.)
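Without -a, the command can be sent directly, and you can poll INFO persistence until the rewrite finishes. Below is a small sketch of that check; aof_rewrite_idle is a hypothetical helper. redis-cli prints INFO replies with CRLF line endings, hence the tr.

```shell
# Succeeds when `redis-cli INFO persistence` output on stdin shows no AOF
# rewrite in progress.
aof_rewrite_idle() {
  tr -d '\r' | grep -qx 'aof_rewrite_in_progress:0'
}

# Usage (no -a, which expects a password):
#   kubectl exec gitea-redis-cluster-0 -- redis-cli BGREWRITEAOF
#   until kubectl exec gitea-redis-cluster-0 -- redis-cli INFO persistence | aof_rewrite_idle; do
#     sleep 2
#   done
#   kubectl exec gitea-redis-cluster-0 -- redis-cli INFO persistence | grep aof_last_bgrewrite_status
```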

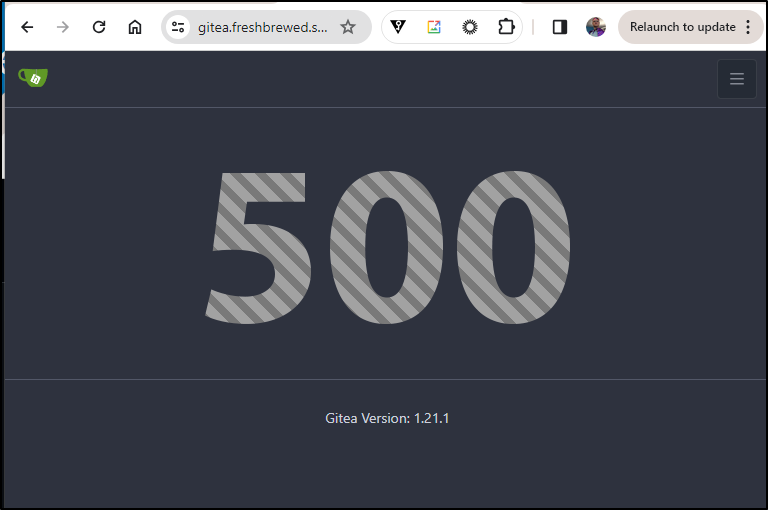

Even though I could get it partially back up, the Redis cluster was in a bad way: logging in would return a 500 error.

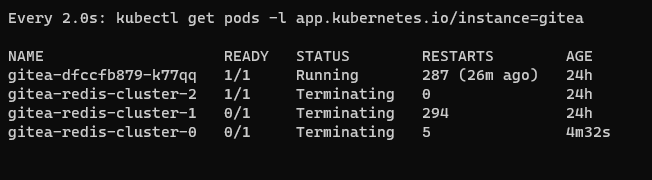

I decided to take a more nuclear approach: delete the StatefulSet and recreate it.

```
$ kubectl get sts gitea-redis-cluster -o yaml > save.sts.yaml
$ kubectl delete sts gitea-redis-cluster
statefulset.apps "gitea-redis-cluster" deleted
```

But the new pods were just as broken:

```
Every 2.0s: kubectl get pods -l app.kubernetes.io/instance=gitea          DESKTOP-QADGF36: Sun Dec 3 07:57:58 2023
NAME                    READY   STATUS             RESTARTS        AGE
gitea-dfccfb879-k77qq   1/1     Running            287 (27m ago)   24h
gitea-redis-cluster-2   0/1     Running            0               32s
gitea-redis-cluster-0   0/1     CrashLoopBackOff   2 (8s ago)      33s
gitea-redis-cluster-1   0/1     CrashLoopBackOff   2 (8s ago)      33s
```

I decided to do it again, this time bumping the replicas from 3 to 5. Perhaps some new replicas would gloss over the crashing ones?

The StatefulSet came up, but I still got 500 errors logging in:

```
kubectl get pods -l app.kubernetes.io/instance=gitea          DESKTOP-QADGF36: Sun Dec 3 08:03:06 2023
NAME                    READY   STATUS             RESTARTS        AGE
gitea-dfccfb879-k77qq   1/1     Running            287 (32m ago)   24h
gitea-redis-cluster-2   0/1     Running            0               2m39s
gitea-redis-cluster-3   1/1     Running            0               2m39s
gitea-redis-cluster-4   1/1     Running            0               2m39s
gitea-redis-cluster-1   0/1     CrashLoopBackOff   4 (66s ago)     2m39s
gitea-redis-cluster-0   0/1     CrashLoopBackOff   4 (48s ago)     2m39s
```

My last idea was to kill the Redis StatefulSet, scrub the PVCs, then create it again.

I removed the StatefulSet:

```
$ kubectl delete sts gitea-redis-cluster
statefulset.apps "gitea-redis-cluster" deleted
```

I checked which volumes were there:

```
$ kubectl get pvc | grep gitea | grep redis
redis-data-gitea-redis-cluster-0   Bound   pvc-068e7a7a-0cae-413f-85d3-7b98cc15c14c   8Gi   RWO   managed-nfs-storage   26d
redis-data-gitea-redis-cluster-1   Bound   pvc-ca977aa4-decd-44ef-9919-14aa73b7b53b   8Gi   RWO   managed-nfs-storage   26d
redis-data-gitea-redis-cluster-2   Bound   pvc-4859c13f-b647-4faa-a3de-be1609f7b278   8Gi   RWO   managed-nfs-storage   26d
redis-data-gitea-redis-cluster-4   Bound   pvc-8a148d27-3264-41c0-9c21-d995fb6184af   8Gi   RWO   managed-nfs-storage   26d
redis-data-gitea-redis-cluster-3   Bound   pvc-d3926a7e-f2a9-41eb-aadd-b4d9aca6060c   8Gi   RWO   managed-nfs-storage   26d
redis-data-gitea-redis-cluster-5   Bound   pvc-da65f09e-7fab-4a59-8d08-4406f51b192e   8Gi   RWO   managed-nfs-storage   26d
```

Then I scrubbed them out:

```
$ kubectl delete pvc redis-data-gitea-redis-cluster-0 & \
kubectl delete pvc redis-data-gitea-redis-cluster-1 & \
kubectl delete pvc redis-data-gitea-redis-cluster-2 & \
kubectl delete pvc redis-data-gitea-redis-cluster-3 & \
kubectl delete pvc redis-data-gitea-redis-cluster-4 & \
kubectl delete pvc redis-data-gitea-redis-cluster-5
```
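StatefulSet PVC names follow the pattern (claim template)-(StatefulSet)-(ordinal), and kubectl delete accepts several names at once. That means the list above can be generated rather than typed, without background jobs. Below is a sketch; sts_pvc_names is a hypothetical helper.

```shell
# Print the PVC names a StatefulSet's volumeClaimTemplate creates:
# <template>-<statefulset>-<ordinal>, for ordinals 0..replicas-1.
sts_pvc_names() {
  local template="$1" sts="$2" replicas="$3" i=0
  while [ "$i" -lt "$replicas" ]; do
    printf '%s-%s-%d\n' "$template" "$sts" "$i"
    i=$((i + 1))
  done
}

# Usage (deletes all six claims in one call):
#   kubectl delete pvc $(sts_pvc_names redis-data gitea-redis-cluster 6)
```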

Quick note: one PVC got stuck because I forgot I had created that temporary pod to scrub files and debug redis-cluster-0. Once I removed that pod, I could get the last PVC deleted:

```
$ kubectl delete pod ubuntu-pod-redis0
pod "ubuntu-pod-redis0" deleted
$ kubectl delete pvc redis-data-gitea-redis-cluster-0
persistentvolumeclaim "redis-data-gitea-redis-cluster-0" deleted
```
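That hang is Kubernetes’ storage-object-in-use protection at work: the kubernetes.io/pvc-protection finalizer keeps a PVC in Terminating until no pod uses it. Below is a sketch for finding the culprit pod. pods_using_pvc is a hypothetical helper, and the JSONPath in the usage comment relies on kubectl’s default of tolerating missing keys for volumes that are not PVCs.

```shell
# Print pods whose volumes reference the claim in $1. Reads lines of
# "<pod><TAB><claim> <claim> ..." on stdin (see the kubectl usage below).
pods_using_pvc() {
  awk -F '\t' -v claim="$1" '{
    n = split($2, claims, " ")          # " " splits on runs of blanks
    for (i = 1; i <= n; i++) if (claims[i] == claim) { print $1; break }
  }'
}

# Usage:
#   kubectl get pods -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{range .spec.volumes[*]}{.persistentVolumeClaim.claimName}{" "}{end}{"\n"}{end}' \
#     | pods_using_pvc redis-data-gitea-redis-cluster-0
```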

Next, I changed save.sts.yaml back to 3 replicas, then applied it to recreate the StatefulSet:

```
$ kubectl apply -f save.sts.yaml
statefulset.apps/gitea-redis-cluster created
$ kubectl get pods -l app.kubernetes.io/instance=gitea          DESKTOP-QADGF36: Sun Dec 3 08:11:07 2023
NAME                    READY   STATUS    RESTARTS        AGE
gitea-dfccfb879-k77qq   1/1     Running   287 (40m ago)   25h
gitea-redis-cluster-2   1/1     Running   0               72s
gitea-redis-cluster-1   1/1     Running   0               73s
gitea-redis-cluster-0   1/1     Running   0               73s
```

This time I could sign in! Whew!

(Checking later)

Four hours later, all is still well, so I would call the issue sorted:

```
$ kubectl get pods -l app.kubernetes.io/instance=gitea
NAME                    READY   STATUS    RESTARTS          AGE
gitea-dfccfb879-k77qq   1/1     Running   287 (4h50m ago)   29h
gitea-redis-cluster-2   1/1     Running   0                 4h10m
gitea-redis-cluster-1   1/1     Running   0                 4h10m
gitea-redis-cluster-0   1/1     Running   0                 4h10m

$ kubectl get pvc | grep redis | grep gitea
redis-data-gitea-redis-cluster-0   Bound   pvc-901e54c1-84e5-49cd-852e-5fcbd9f12ff6   8Gi   RWO   managed-nfs-storage   4h10m
redis-data-gitea-redis-cluster-1   Bound   pvc-1b355407-d1b0-4cbd-80a9-792aeda23dbc   8Gi   RWO   managed-nfs-storage   4h10m
redis-data-gitea-redis-cluster-2   Bound   pvc-501c8106-bd42-473b-8f3a-2efe034b6dec   8Gi   RWO   managed-nfs-storage   4h10m
```
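Before calling it sorted, it’s worth asking Redis itself whether the cluster formed, not just whether the pods are Running. Below is a sketch that checks CLUSTER INFO. redis_cluster_ok is a hypothetical helper. The loop assumes the three pod names above and the empty-password setting seen in the earlier logs, so it does not use -a.

```shell
# Succeeds when `redis-cli CLUSTER INFO` output on stdin reports
# cluster_state:ok. redis-cli prints the reply with CRLF line endings.
redis_cluster_ok() {
  tr -d '\r' | grep -qx 'cluster_state:ok'
}

# Usage:
#   for i in 0 1 2; do
#     if kubectl exec "gitea-redis-cluster-$i" -- redis-cli CLUSTER INFO | redis_cluster_ok; then
#       echo "redis-cluster-$i ok"
#     else
#       echo "redis-cluster-$i NOT ok"
#     fi
#   done
```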

The takeaway might be that Redis clusters should be mere caching servers for things like sessions and temporary form data: they really should be safe to delete and recreate.

Some time back I got into a bit of a heated discussion with an SME from an APM vendor over whether Redis is a “database”. While it stores data, if you’re using it as a database, you’re going to have a bad day.

Summary

Well, buckle up, because today was quite the rollercoaster ride in the world of home lab maintenance! We started off by playing matchmaker, introducing Uptime Kuma to a bunch of boxes for some quality monitoring time. Then, we decided to throw a party in a Matrix room and guess who we invited? That’s right, Uptime Kuma!

But wait, there’s more: We then turned our attention to Gitea, not once, but twice! It was like a double feature movie night, but with upgrades. The sequel, however, had a surprise twist - a Redis Cluster crash. It was like a popcorn spill in the middle of the movie. But fear not, we tackled it head-on by deleting the STS and PVCs, and then re-launching from a saved STS YAML. It was a classic case of “If at first you don’t succeed, delete and relaunch!”

Now, with Forgejo joining our tech family, I’m feeling much more secure when it comes to upgrading Gitea. It’s like having a tech superhero on standby! With two reasonably HA systems that can use each other for DR, it’s like we’ve built our own tech fortress. And who knows? In the future, we might just add some more bells and whistles to this setup, including PSQL backups. Stay tuned for the next episode of our tech adventures where we’ll check out “Process Compose” and more.

Full disclosure: I asked Copilot to spruce up my summary statement above, as it was really drab. I think it did a good job, though I did trim a bit of the folksy yuk-yuks.