Published: Dec 26, 2023 by Isaac Johnson

Plane.so was started by Vamsi and Vihar Kurama, who have worked together for seven years, and launched in December 2022. The first dev release came in January 2023. It has been on my list of open-source suites to check out for some time.

At just a year old, how good is Plane? It has both a SaaS option and a self-hosted one. Let’s give both a try and see how easy they are to set up and use. Today, we’ll explore Docker, on-prem Kubernetes (K3s) and Azure Kubernetes Service (AKS), as well as the cloud-hosted option (SaaS).

Self-Hosted Docker

I’ll pull down the source, switch to the develop branch and run the setup script:

```
builder@builder-T100:~$ git clone https://github.com/makeplane/plane.git
Cloning into 'plane'...
remote: Enumerating objects: 58418, done.
remote: Counting objects: 100% (13984/13984), done.
remote: Compressing objects: 100% (3986/3986), done.
remote: Total 58418 (delta 11060), reused 11884 (delta 9927), pack-reused 44434
Receiving objects: 100% (58418/58418), 57.14 MiB | 35.94 MiB/s, done.
Resolving deltas: 100% (41815/41815), done.
builder@builder-T100:~$ cd plane/
builder@builder-T100:~/plane$ git checkout develop
Branch 'develop' set up to track remote branch 'develop' from 'origin'.
Switched to a new branch 'develop'
builder@builder-T100:~/plane$ ./setup.sh
```

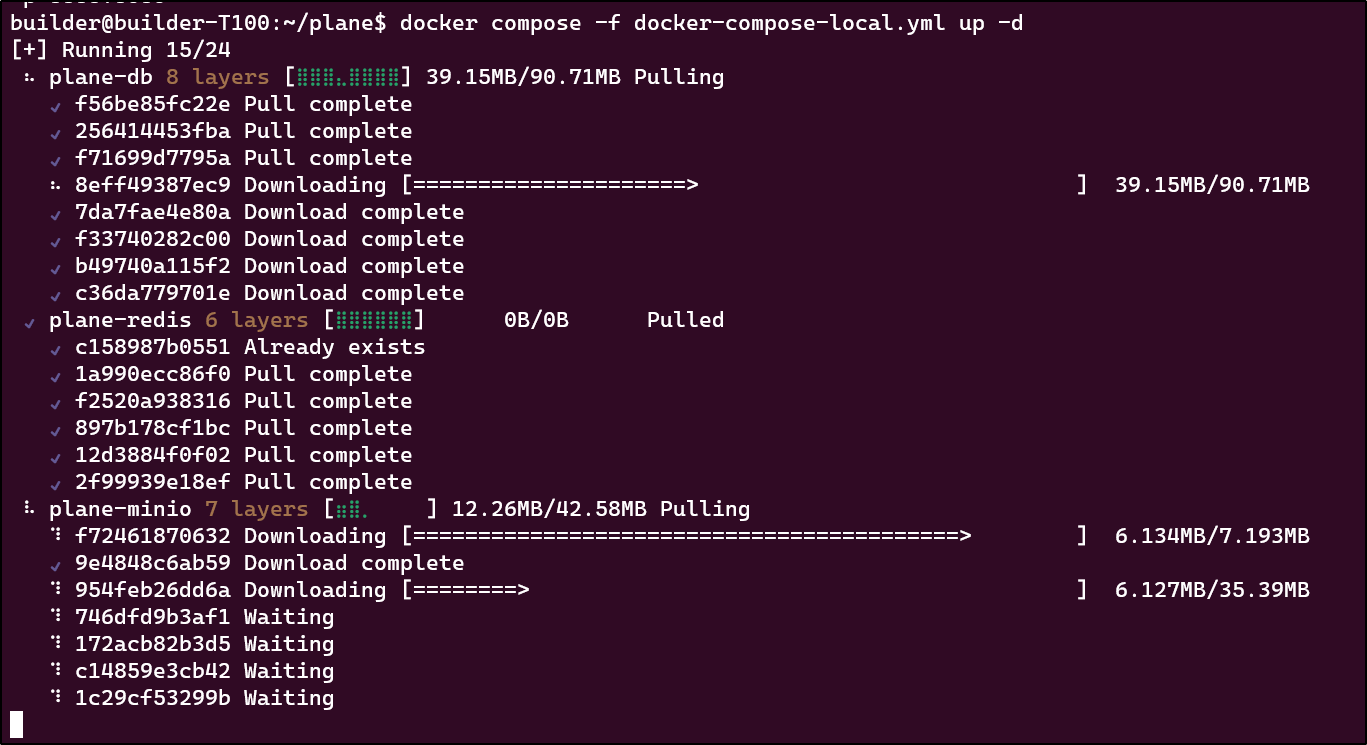

Then I ran `docker compose -f ./docker-compose-local.yml up -d` to launch the stack.

It took a while, but I saw it starting:

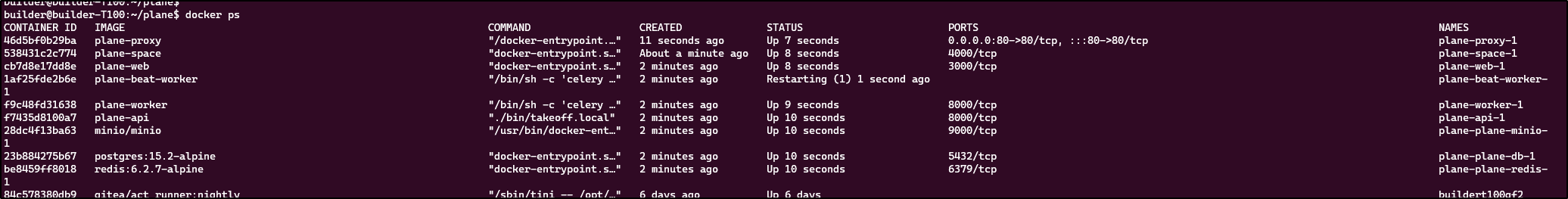

I noticed it launched quite a lot of containers.

My next step is to add an external reference so I can route traffic from K3s to this host, as well as test it from my home network (192.168.1.100:80).

For that, I’ll need to change NGINX_PORT in the .env file from the default of 80 and restart. First, I bring the stack down:

```
builder@builder-T100:~/plane$ docker compose -f ./docker-compose-local.yml down
[+] Running 10/10
✔ Container plane-proxy-1 Removed 0.6s
✔ Container plane-plane-minio-1 Removed 0.6s
✔ Container plane-beat-worker-1 Removed 0.8s
✔ Container plane-space-1 Removed 0.3s
✔ Container plane-web-1 Removed 0.2s
✔ Container plane-worker-1 Removed 2.2s
✔ Container plane-api-1 Removed 10.2s
✔ Container plane-plane-redis-1 Removed 0.3s
✔ Container plane-plane-db-1 Removed 0.2s
✔ Network plane_dev_env Removed
```

Then I change the port, keeping a backup of the original .env:

```
builder@builder-T100:~/plane$ cp .env .env.backup
builder@builder-T100:~/plane$ vi .env
builder@builder-T100:~/plane$ diff .env .env.backup
35c35
< NGINX_PORT=8885
---
> NGINX_PORT=80
```
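Rather than editing .env by hand in vi, the same change can be scripted. The sketch below is a hypothetical `set_env_var` helper (not part of Plane’s setup.sh) that backs the file up to `.env.backup`, as above, and then rewrites or appends a `KEY=VALUE` line with plain sed.

```bash
#!/usr/bin/env bash
# Sketch: set KEY=VALUE in a dotenv-style file such as Plane's .env,
# keeping a .backup copy first (mirrors the cp/vi/diff steps above).
# set_env_var is a hypothetical helper, not part of Plane's setup.sh.
set_env_var() {
  local file="$1" key="$2" value="$3"
  cp "$file" "$file.backup"
  if grep -q "^${key}=" "$file.backup"; then
    # Rewrite the existing KEY= line from the backup; '|' as the sed
    # delimiter so values may contain '/'
    sed "s|^${key}=.*|${key}=${value}|" "$file.backup" > "$file"
  else
    # Key not present yet: append it
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, `set_env_var .env NGINX_PORT 8885` reproduces the diff shown above. Writing from the backup through sed, rather than using `sed -i`, sidesteps the GNU/BSD difference in `-i` syntax.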

Now relaunch it

```
builder@builder-T100:~/plane$ docker compose -f ./docker-compose-local.yml up -d
[+] Building 0.0s (0/0)
[+] Running 10/10
✔ Network plane_dev_env Created 0.1s
✔ Container plane-plane-minio-1 Started 60.8s
✔ Container plane-plane-db-1 Started 60.9s
✔ Container plane-plane-redis-1 Started 60.8s
✔ Container plane-api-1 Started 61.2s
✔ Container plane-beat-worker-1 Started 61.7s
✔ Container plane-worker-1 Started 61.8s
✔ Container plane-web-1 Started 62.1s
✔ Container plane-space-1 Started 30.4s
✔ Container plane-proxy-1 Started 3.5s
```

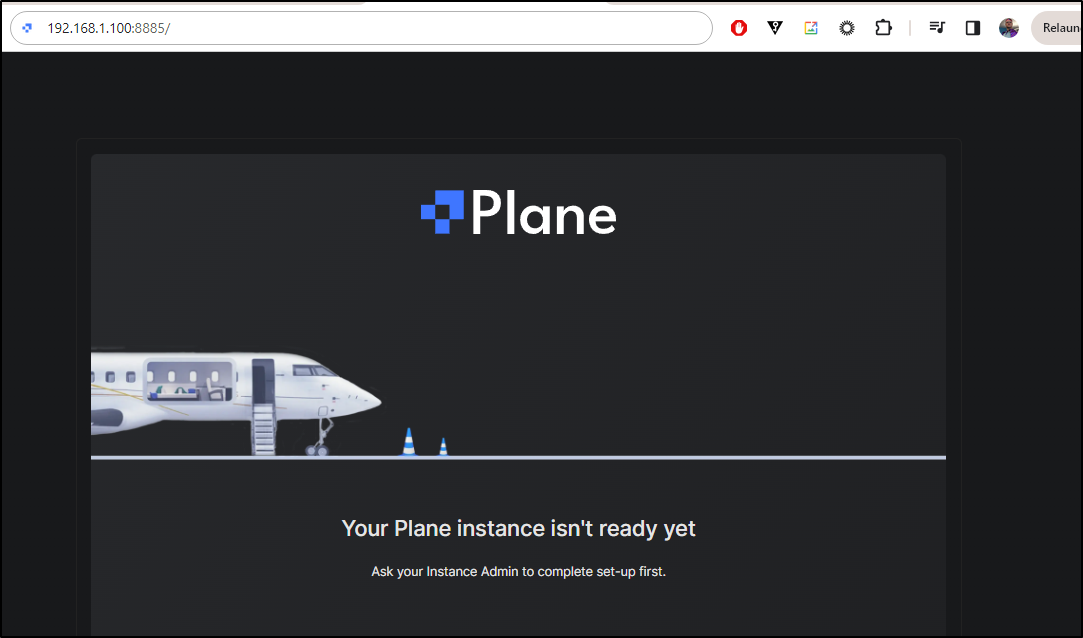

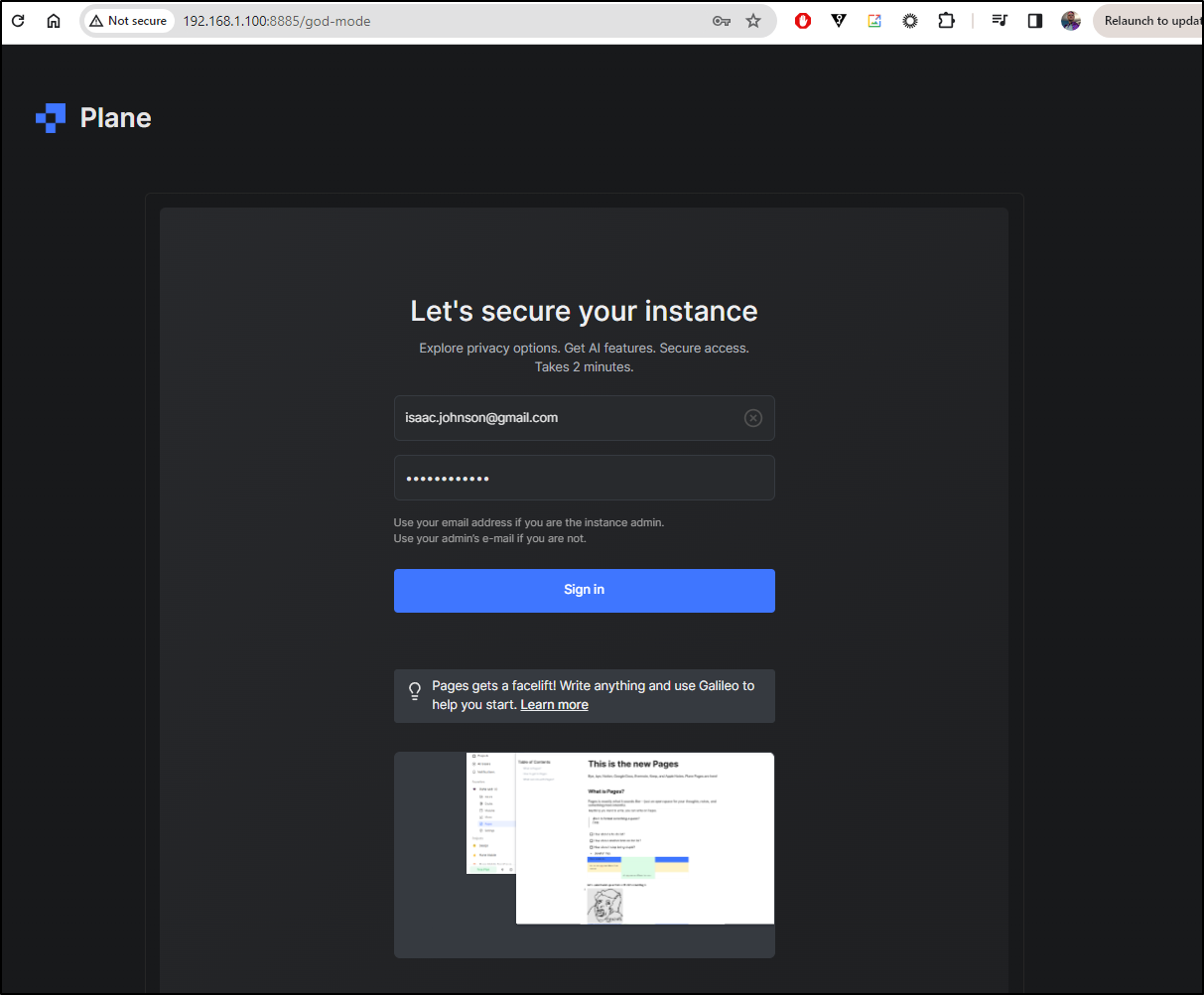

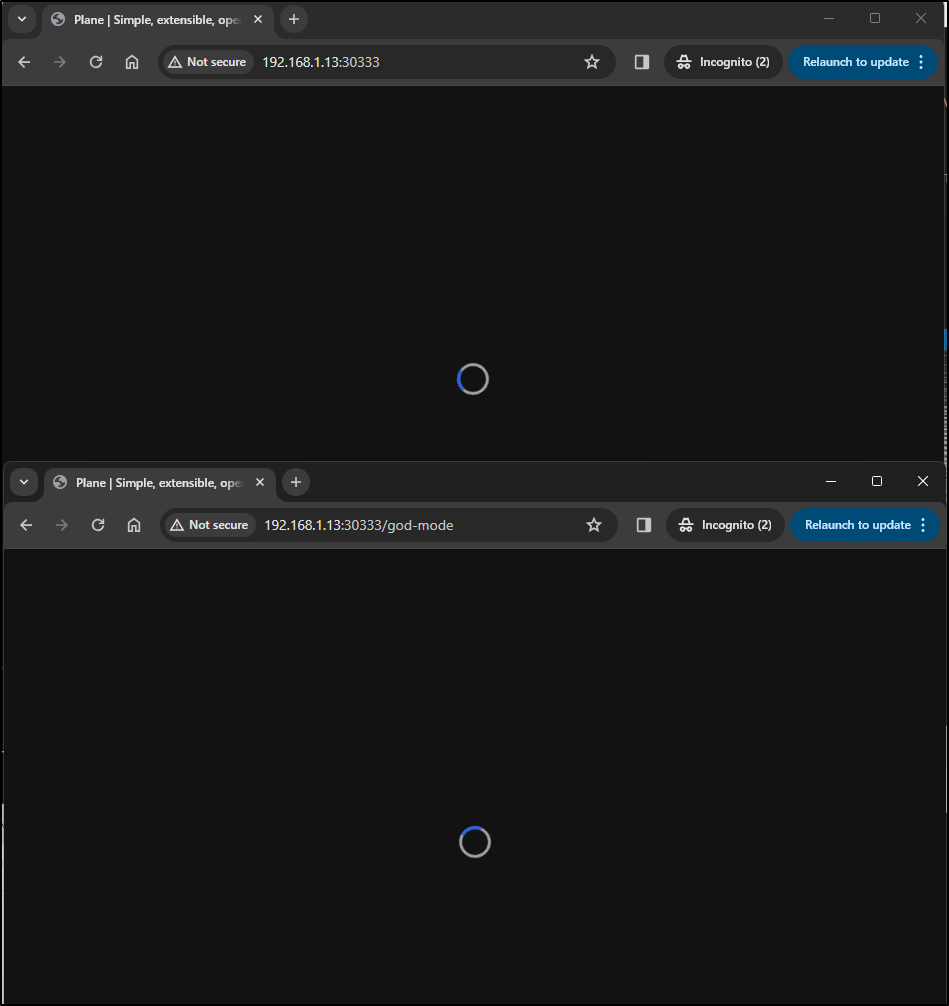

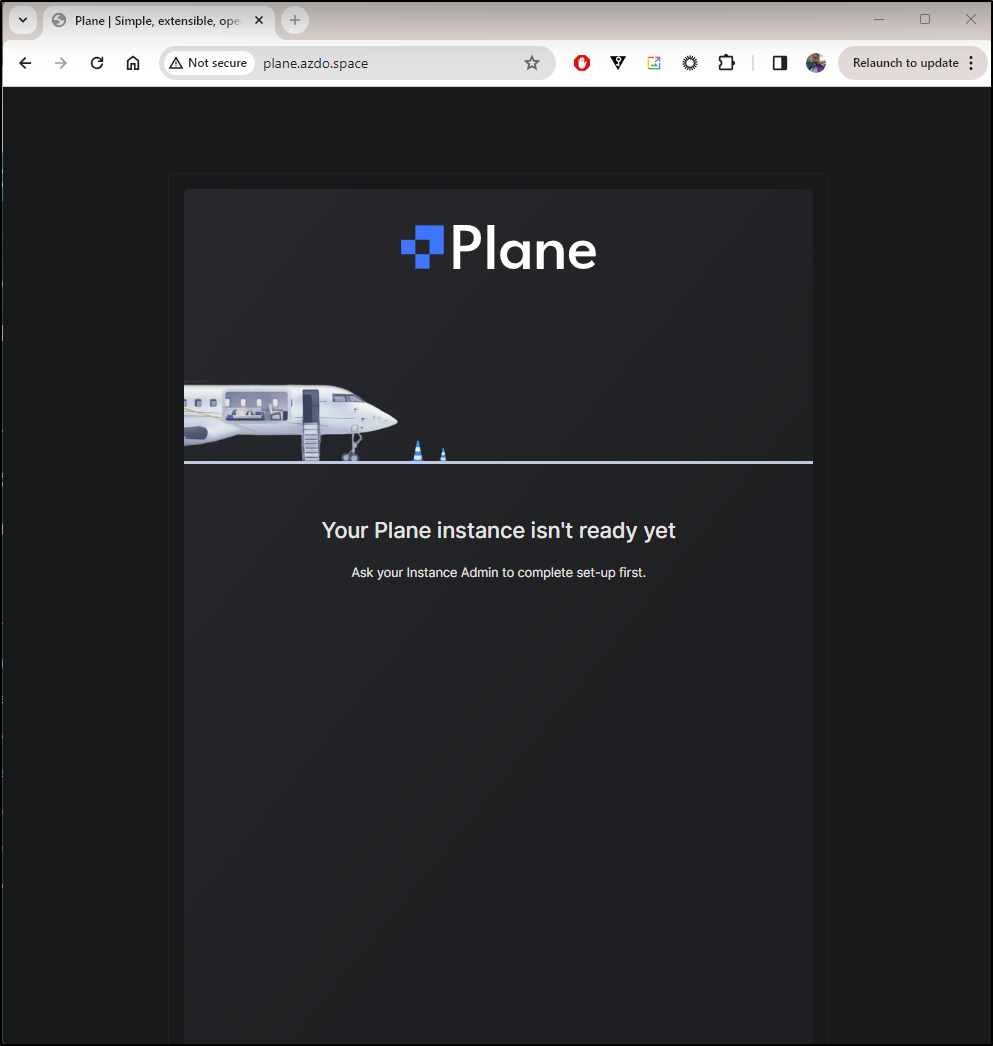

At first, I just see a window saying the instance isn’t ready

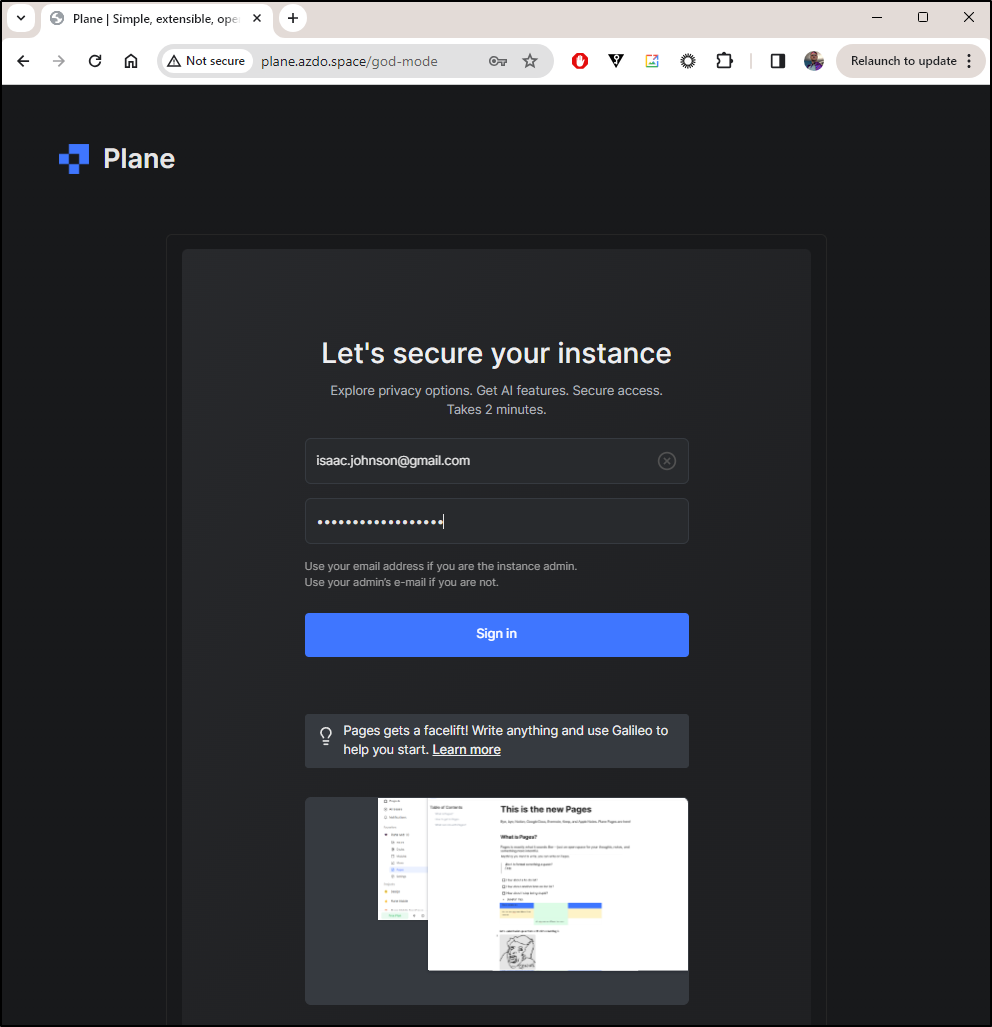

To set this up, we first need to go to God Mode:

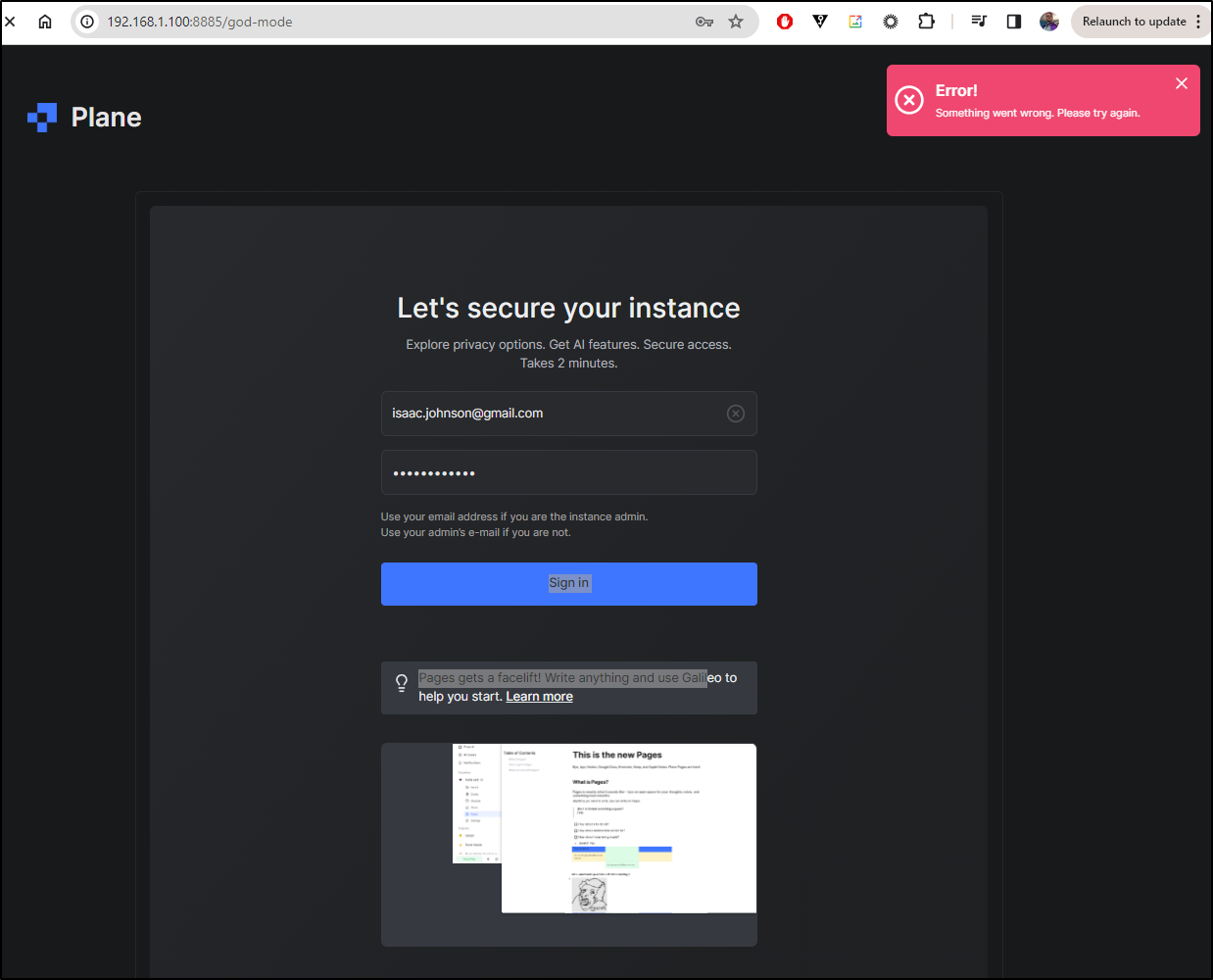

The thing is, it seems to crash the Docker host.

After crashing the host a few times, I tore the stack down:

```
$ docker compose -f ./docker-compose-local.yml down
[+] Running 10/10
✔ Container plane-plane-minio-1 Removed 0.5s
✔ Container plane-proxy-1 Removed 0.6s
✔ Container plane-beat-worker-1 Removed 1.1s
✔ Container plane-space-1 Removed 0.4s
✔ Container plane-web-1 Removed 0.5s
✔ Container plane-worker-1 Removed 3.2s
✔ Container plane-api-1 Removed 10.7s
✔ Container plane-plane-redis-1 Removed 0.8s
✔ Container plane-plane-db-1 Removed 0.8s
✔ Network plane_dev_env Removed 0.3s
```

We’ve tried Docker Compose. Let’s move on to on-prem kubernetes.

On-Prem Kubernetes (K3s)

Let’s add the Helm chart repository:

```
$ helm repo add makeplane https://helm.plane.so/
"makeplane" has been added to your repositories
```

Then deploy

```
$ helm install \
    --create-namespace \
    --namespace plane-ns \
    --set ingress.host="plane.freshbrewed.science" \
    my-plane makeplane/plane-ce
W1216 20:48:59.040804 25834 warnings.go:70] unknown field "spec.backend"
NAME: my-plane
LAST DEPLOYED: Sat Dec 16 20:48:57 2023
NAMESPACE: plane-ns
STATUS: deployed
REVISION: 1
TEST SUITE: None
```

However, some pods stay Pending and others end up in CrashLoopBackOff:

```
Every 2.0s: kubectl get pods -n plane-ns DESKTOP-QADGF36: Sat Dec 16 20:52:35 2023
NAME READY STATUS RESTARTS AGE
my-plane-minio-wl-0 0/1 Pending 0 3m36s
my-plane-pgdb-wl-0 0/1 Pending 0 3m36s
my-plane-redis-wl-0 0/1 Pending 0 3m36s
my-plane-minio-bucket-rgvgl 0/1 Init:0/1 0 3m36s
my-plane-space-wl-699dcb8f4-8lcqb 1/1 Running 0 3m36s
my-plane-web-wl-5967775d5b-hfv8n 1/1 Running 0 3m36s
my-plane-space-wl-699dcb8f4-qg8rf 1/1 Running 0 3m36s
my-plane-web-wl-5967775d5b-rs69h 1/1 Running 0 3m36s
my-plane-space-wl-699dcb8f4-lzwpr 1/1 Running 0 3m36s
my-plane-web-wl-5967775d5b-swdb2 1/1 Running 0 3m36s
my-plane-worker-wl-854687c594-qgr7n 1/1 Running 0 3m36s
my-plane-api-wl-599bd48dc8-qf57b 0/1 CrashLoopBackOff 4 (61s ago) 3m36s
my-plane-beat-worker-wl-68dbfdb544-tjpq9 0/1 CrashLoopBackOff 4 (58s ago) 3m36s
my-plane-api-wl-599bd48dc8-j9rt4 0/1 CrashLoopBackOff 3 (18s ago) 3m36s
my-plane-api-wl-599bd48dc8-qhtxb 1/1 Running 4 (45s ago) 3m36s
```
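With this many replicas, it helps to filter the list down to the pods that aren’t ready. The hypothetical `not_ready_pods` function below is a minimal awk sketch that assumes the default `kubectl get pods` column order (NAME, READY, STATUS, …); it prints any pod whose ready count is short and skips Job pods that have `Completed`.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get pods` output on stdin and print NAME and STATUS
# for pods whose READY count (e.g. 0/1) is below the desired count.
# Lines without an N/M READY column (headers, watch banners) are ignored,
# as are finished Job pods in "Completed" state.
# not_ready_pods is a hypothetical helper name.
not_ready_pods() {
  awk '$2 ~ /^[0-9]+\/[0-9]+$/ && $3 != "Completed" {
    split($2, r, "/")
    if (r[1] != r[2]) print $1, $3
  }'
}
```

Piping `kubectl get pods -n plane-ns` through it on the listing above would surface the three Pending stateful pods, the minio-bucket init pod and the three CrashLoopBackOff pods. For the Pending ones, `kubectl describe pvc -n plane-ns` is a reasonable next stop.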

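Let me fix the first issue: the PVCs request the ‘longhorn’ storage class, which isn’t set up in this cluster. I’ll install again, pointing Postgres, Redis and MinIO at ‘local-path’ instead: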

```
$ helm install --create-namespace --namespace plane-ns \
    --set ingress.host="plane.freshbrewed.science" \
    --set postgres.storageClass='local-path' \
    --set redis.storageClass='local-path' \
    --set minio.storageClass='local-path' \
    my-plane makeplane/plane-ce
W1216 20:59:12.400540 31543 warnings.go:70] unknown field "spec.backend"
NAME: my-plane
LAST DEPLOYED: Sat Dec 16 20:59:10 2023
NAMESPACE: plane-ns
STATUS: deployed
REVISION: 1
TEST SUITE: None
```
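The same overrides can also live in a values file, which is easier to reuse between clusters. A minimal sketch, assuming the chart keys are exactly the ones passed with `--set` above; `write_plane_values` and `plane-values.yaml` are hypothetical names.

```bash
#!/usr/bin/env bash
# Sketch: write a Helm values file equivalent to the --set flags above
# (ingress.host plus the postgres/redis/minio storageClass overrides).
# write_plane_values is a hypothetical helper.
write_plane_values() {
  local out="$1" host="$2" storage_class="$3"
  cat > "$out" <<EOF
ingress:
  host: "${host}"
postgres:
  storageClass: ${storage_class}
redis:
  storageClass: ${storage_class}
minio:
  storageClass: ${storage_class}
EOF
}
```

Usage would be `write_plane_values plane-values.yaml plane.freshbrewed.science local-path` followed by `helm install --create-namespace --namespace plane-ns -f plane-values.yaml my-plane makeplane/plane-ce`. Running `kubectl get storageclass` first shows which class names a cluster actually offers.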

After a bit, I saw the PVCs bind and the pods come up:

```
builder@DESKTOP-QADGF36:~$ kubectl get pvc -n plane-ns
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
pvc-my-plane-pgdb-vol-my-plane-pgdb-wl-0 Bound pvc-43f1336e-94e8-4527-910d-3106ee8e106c 5Gi RWO local-path 109s
pvc-my-plane-minio-vol-my-plane-minio-wl-0 Bound pvc-d14a5115-fdbd-4f21-a814-6ec7747ede7b 5Gi RWO local-path 109s
pvc-my-plane-redis-vol-my-plane-redis-wl-0 Bound pvc-77d9f82d-30f5-4f79-b59f-b1c4e7f90b74 1Gi RWO local-path 109s
builder@DESKTOP-QADGF36:~$ kubectl get pods -n plane-ns
NAME READY STATUS RESTARTS AGE
my-plane-worker-wl-854687c594-dwzt8 1/1 Running 0 118s
my-plane-space-wl-699dcb8f4-dbgq9 1/1 Running 0 118s
my-plane-web-wl-5967775d5b-hthhc 1/1 Running 0 118s
my-plane-web-wl-5967775d5b-gdmjz 1/1 Running 0 118s
my-plane-space-wl-699dcb8f4-ppcpc 1/1 Running 0 118s
my-plane-api-wl-599bd48dc8-hmt7h 1/1 Running 1 (104s ago) 118s
my-plane-pgdb-wl-0 1/1 Running 0 118s
my-plane-minio-wl-0 1/1 Running 0 118s
my-plane-web-wl-5967775d5b-khv2j 1/1 Running 0 118s
my-plane-space-wl-699dcb8f4-v59n6 1/1 Running 0 118s
my-plane-api-wl-599bd48dc8-rwdvd 1/1 Running 3 (50s ago) 118s
my-plane-beat-worker-wl-68dbfdb544-b2r49 1/1 Running 1 (60s ago) 119s
my-plane-api-wl-599bd48dc8-28bvh 1/1 Running 1 (50s ago) 118s
my-plane-redis-wl-0 1/1 Running 0 118s
my-plane-minio-bucket-llfc6 0/1 Completed 0 118s
```

I’ll create a NodePort service

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-plane-web-np
  namespace: plane-ns
spec:
  ports:
  - targetPort: 30008   # targetPort is listed twice; pinning the node port would use nodePort
    port: 3000
    targetPort: 3000
  selector:
    app.name: plane-ns-my-plane-web
  type: NodePort
```

Then I apply it from the YAML file:

```
$ kubectl apply -f ./nodeport.yaml
service/my-plane-web-np created
```
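The manifest above sets `targetPort` twice, and the 30008 value did not end up as the node port (Kubernetes picked 32332, as the listing below shows). Pinning the port is what the `nodePort` field is for. The sketch below prints such a Service; the selector is copied from the original manifest, the `plane_nodeport_manifest` helper is hypothetical, and the value must fall inside the cluster’s NodePort range (30000-32767 by default).

```bash
#!/usr/bin/env bash
# Sketch: print a NodePort Service for the Plane web pods with the node
# port pinned via the nodePort field (defaults to 30008).
# Selector copied from the manifest above; plane_nodeport_manifest is a
# hypothetical helper.
plane_nodeport_manifest() {
  local node_port="${1:-30008}"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: my-plane-web-np
  namespace: plane-ns
spec:
  type: NodePort
  selector:
    app.name: plane-ns-my-plane-web
  ports:
  - port: 3000
    targetPort: 3000
    nodePort: ${node_port}
EOF
}
```

It could be applied with `plane_nodeport_manifest 30008 | kubectl apply -f -`.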

I can now see the NodePort:

```
builder@DESKTOP-QADGF36:~$ kubectl get svc -n plane-ns
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
my-plane-minio ClusterIP None <none> 9000/TCP,9090/TCP 10m
my-plane-pgdb ClusterIP None <none> 5432/TCP 10m
my-plane-web ClusterIP None <none> 3000/TCP 10m
my-plane-redis ClusterIP None <none> 6379/TCP 10m
my-plane-api ClusterIP None <none> 8000/TCP 10m
my-plane-space ClusterIP None <none> 3000/TCP 10m
my-plane-pgdb-cli-connect NodePort 10.43.203.46 <none> 5432:30000/TCP 10m
my-plane-web-np NodePort 10.43.15.33 <none> 3000:32332/TCP 60s
```

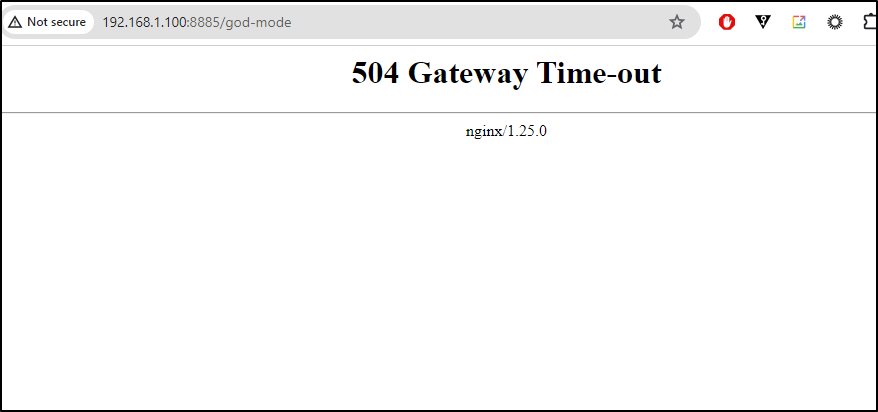

However, requests to it seem to time out, and port-forwarding failed as well:

```
$ kubectl get pods -l app.name=plane-ns-my-plane-web -n plane-ns
NAME READY STATUS RESTARTS AGE
my-plane-web-wl-5967775d5b-gdmjz 1/1 Running 0 15m
my-plane-web-wl-5967775d5b-khv2j 1/1 Running 0 15m
$ kubectl port-forward my-plane-web-wl-5967775d5b-gdmjz -n plane-ns 6543:80
Forwarding from 127.0.0.1:6543 -> 80
Forwarding from [::1]:6543 -> 80
Handling connection for 6543
Handling connection for 6543
E1216 21:17:20.488016 7108 portforward.go:409] an error occurred forwarding 6543 -> 80: error forwarding port 80 to pod 60beecac1c4b26774ff8736c3800fa1ca0155e8886dce1825f86805b74322d11, uid : failed to execute portforward in network namespace "/var/run/netns/cni-8afccb7e-6d01-44bf-e57a-d13ebec9bea1": failed to connect to localhost:80 inside namespace "60beecac1c4b26774ff8736c3800fa1ca0155e8886dce1825f86805b74322d11", IPv4: dial tcp4 127.0.0.1:80: connect: connection refused IPv6 dial tcp6: address localhost: no suitable address found
error: lost connection to pod
```

I fetched the logs, which show the frontend listening on port 3000, and then tried forwarding to port 3000 instead:

```
builder@DESKTOP-QADGF36:~$ kubectl logs my-plane-web-wl-5967775d5b-khv2j -n plane-ns
+ echo 'Starting Plane Frontend..'
Starting Plane Frontend..
+ node web/server.js
▲ Next.js 14.0.3
- Local: http://my-plane-web-wl-5967775d5b-khv2j:3000
- Network: http://10.42.2.27:3000
✓ Ready in 11.6s
builder@DESKTOP-QADGF36:~$ kubectl port-forward my-plane-web-wl-5967775d5b-khv2j -n plane-ns 6543:3000
Forwarding from 127.0.0.1:6543 -> 3000
Forwarding from [::1]:6543 -> 3000
Handling connection for 6543
Handling connection for 6543
E1216 21:19:23.941310 8000 portforward.go:409] an error occurred forwarding 6543 -> 3000: error forwarding port 3000 to pod cb9c9d09ea63478b563d4bfde43d6326407c45aa72b8e581bb55767732c2d447, uid : failed to execute portforward in network namespace "/var/run/netns/cni-d06b3ff2-cb63-f7b5-91a3-131f3dacbb4e": failed to connect to localhost:3000 inside namespace "cb9c9d09ea63478b563d4bfde43d6326407c45aa72b8e581bb55767732c2d447", IPv4: dial tcp4 127.0.0.1:3000: connect: connection refused IPv6 dial tcp6: address localhost: no suitable address found
error: lost connection to pod
```
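Note that the log reports the "Local" address using the pod’s hostname rather than localhost. One possible explanation, which I have not verified against this chart: the Next.js standalone server listens on the address in the `HOSTNAME` environment variable, and Kubernetes sets `HOSTNAME` to the pod name, so the server would bind only to the pod IP. `kubectl port-forward` dials 127.0.0.1 inside the pod, which would then be refused, matching the errors above (it would not by itself explain the NodePort timeouts). A way to check is to pull the "Network" URL out of the logs and request it from another pod; `next_network_url` below is a hypothetical helper for the first step.

```bash
#!/usr/bin/env bash
# Sketch: read Next.js startup logs on stdin and print the URL from the
# "- Network:" line (the address reachable from other pods).
# next_network_url is a hypothetical helper name.
next_network_url() {
  sed -n 's/^[[:space:]]*- Network:[[:space:]]*//p'
}
```

For example, `url="$(kubectl logs my-plane-web-wl-5967775d5b-khv2j -n plane-ns | next_network_url)"` and then `kubectl run web-probe -n plane-ns --rm -i --restart=Never --image=busybox -- wget -qO- "$url"`. If that succeeds while port-forward fails, setting `HOSTNAME=0.0.0.0` on the web deployment (for example with `kubectl set env`) would be the usual workaround to try, though a later `helm upgrade` may revert it.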

I tried creating the NodePort again:

```
$ kubectl get svc -n plane-ns
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
my-plane-minio ClusterIP None <none> 9000/TCP,9090/TCP 22m
my-plane-pgdb ClusterIP None <none> 5432/TCP 22m
my-plane-web ClusterIP None <none> 3000/TCP 22m
my-plane-redis ClusterIP None <none> 6379/TCP 22m
my-plane-api ClusterIP None <none> 8000/TCP 22m
my-plane-space ClusterIP None <none> 3000/TCP 22m
my-plane-pgdb-cli-connect NodePort 10.43.203.46 <none> 5432:30000/TCP 22m
my-plane-web-np NodePort 10.43.12.170 <none> 3000:30333/TCP 11s
$ kubectl get pods -n plane-ns
NAME READY STATUS RESTARTS AGE
my-plane-worker-wl-854687c594-dwzt8 1/1 Running 0 24m
my-plane-space-wl-699dcb8f4-dbgq9 1/1 Running 0 24m
my-plane-web-wl-5967775d5b-gdmjz 1/1 Running 0 24m
my-plane-space-wl-699dcb8f4-ppcpc 1/1 Running 0 24m
my-plane-api-wl-599bd48dc8-hmt7h 1/1 Running 1 (23m ago) 24m
my-plane-pgdb-wl-0 1/1 Running 0 24m
my-plane-minio-wl-0 1/1 Running 0 24m
my-plane-web-wl-5967775d5b-khv2j 1/1 Running 0 24m
my-plane-api-wl-599bd48dc8-rwdvd 1/1 Running 3 (22m ago) 24m
my-plane-beat-worker-wl-68dbfdb544-b2r49 1/1 Running 1 (23m ago) 24m
my-plane-api-wl-599bd48dc8-28bvh 1/1 Running 1 (22m ago) 24m
my-plane-redis-wl-0 1/1 Running 0 24m
my-plane-minio-bucket-llfc6 0/1 Completed 0 24m
```

But nothing came up

I tried the ports on all three nodes

I tried 11 hours later from both WSL and Windows, still without success

```
$ kubectl get svc -n plane-ns
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
my-plane-minio ClusterIP None <none> 9000/TCP,9090/TCP 11h
my-plane-pgdb ClusterIP None <none> 5432/TCP 11h
my-plane-web ClusterIP None <none> 3000/TCP 11h
my-plane-redis ClusterIP None <none> 6379/TCP 11h
my-plane-api ClusterIP None <none> 8000/TCP 11h
my-plane-space ClusterIP None <none> 3000/TCP 11h
my-plane-pgdb-cli-connect NodePort 10.43.203.46 <none> 5432:30000/TCP 11h
my-plane-web-np NodePort 10.43.12.170 <none> 3000:30333/TCP 11h

PS C:\Users\isaac> kubectl get nodes
NAME STATUS ROLES AGE VERSION
builder-macbookpro2 Ready <none> 4d10h v1.27.6+k3s1
isaac-macbookpro Ready <none> 4d10h v1.27.6+k3s1
anna-macbookair Ready control-plane,master 4d11h v1.27.6+k3s1
PS C:\Users\isaac> kubectl port-forward svc/my-plane-web -n plane-ns 3500:3000
Forwarding from 127.0.0.1:3500 -> 3000
Forwarding from [::1]:3500 -> 3000
Handling connection for 3500
Handling connection for 3500
E1217 08:46:33.673148 29828 portforward.go:406] an error occurred forwarding 3500 -> 3000: error forwarding port 3000 to pod 60beecac1c4b26774ff8736c3800fa1ca0155e8886dce1825f86805b74322d11, uid : failed to execute portforward in network namespace "/var/run/netns/cni-8afccb7e-6d01-44bf-e57a-d13ebec9bea1": failed to connect to localhost:3000 inside namespace "60beecac1c4b26774ff8736c3800fa1ca0155e8886dce1825f86805b74322d11", IPv4: dial tcp4 127.0.0.1:3000: connect: connection refused IPv6 dial tcp6: address localhost: no suitable address found
E1217 08:46:33.673670 29828 portforward.go:234] lost connection to pod
Handling connection for 3500
E1217 08:46:33.674206 29828 portforward.go:346] error creating error stream for port 3500 -> 3000: EOF
PS C:\Users\isaac>
PS C:\Users\isaac> kubectl port-forward my-plane-web-wl-5967775d5b-gdmjz -n plane-ns 3500:3000
Forwarding from 127.0.0.1:3500 -> 3000
Forwarding from [::1]:3500 -> 3000
Handling connection for 3500
Handling connection for 3500
E1217 08:48:02.557787 26684 portforward.go:406] an error occurred forwarding 3500 -> 3000: error forwarding port 3000 to pod 60beecac1c4b26774ff8736c3800fa1ca0155e8886dce1825f86805b74322d11, uid : failed to execute portforward in network namespace "/var/run/netns/cni-8afccb7e-6d01-44bf-e57a-d13ebec9bea1": failed to connect to localhost:3000 inside namespace "60beecac1c4b26774ff8736c3800fa1ca0155e8886dce1825f86805b74322d11", IPv4: dial tcp4 127.0.0.1:3000: connect: connection refused IPv6 dial tcp6: address localhost: no suitable address found
E1217 08:48:02.558815 26684 portforward.go:234] lost connection to pod
Handling connection for 3500
E1217 08:48:02.559345 26684 portforward.go:346] error creating error stream for port 3500 -> 3000: EOF
```

I can see the web server is running, however

```
PS C:\Users\isaac> kubectl logs my-plane-web-wl-5967775d5b-gdmjz -n plane-ns
+ echo 'Starting Plane Frontend..'
Starting Plane Frontend..
+ node web/server.js
▲ Next.js 14.0.3
- Local: http://my-plane-web-wl-5967775d5b-gdmjz:3000
- Network: http://10.42.1.21:3000
✓ Ready in 14s
```

Azure Kubernetes Service (AKS)

Let’s do this in Azure.

I’ll create a resource group and a service principal (SP):

```
$ az group create --name planesorg --location centralus
{
  "id": "/subscriptions/8defc61d-657a-453d-a6ff-cb9f91289a61/resourceGroups/planesorg",
  "location": "centralus",
  "managedBy": null,
  "name": "planesorg",
  "properties": {
    "provisioningState": "Succeeded"
  },
  "tags": null,
  "type": "Microsoft.Resources/resourceGroups"
}
$ az ad sp create-for-rbac -n idjaksupg01sp --skip-assignment --output json > my_sp.json
WARNING: Option '--skip-assignment' has been deprecated and will be removed in a future release.
WARNING: Found an existing application instance: (id) 34fede26-2ae7-4ccd-9c29-3bc220b9784a. We will patch it.
WARNING: The output includes credentials that you must protect. Be sure that you do not include these credentials in your code or check the credentials into your source control. For more information, see https://aka.ms/azadsp-cli
$ export SP_PASS=`cat my_sp.json | jq -r .password`
$ export SP_ID=`cat my_sp.json | jq -r .appId`
```

Now I’ll create the AKS cluster

```
$ az aks create --resource-group planesorg --name idjplanetest --location centralus \
    --node-count 3 --enable-cluster-autoscaler --min-count 2 --max-count 4 \
    --generate-ssh-keys --network-plugin azure --network-policy azure \
    --service-principal $SP_ID --client-secret $SP_PASS
docker_bridge_cidr is not a known attribute of class <class 'azure.mgmt.containerservice.v2023_10_01.models._models_py3.ContainerServiceNetworkProfile'> and will be ignored
\ Running ..
```

Now let’s get the admin credentials:

```
$ az aks get-credentials -n idjplanetest -g planesorg --admin
```

I need a basic Nginx ingress controller

```
$ NAMESPACE=ingress-basic
$ helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
"ingress-nginx" has been added to your repositories
$ helm repo update
$ helm install ingress-nginx ingress-nginx/ingress-nginx \
    --create-namespace \
    --namespace $NAMESPACE \
    --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-health-probe-request-path"=/healthz
```

This will really need cert-manager as well, so I’ll add the Jetstack repo:

```
$ helm repo add jetstack https://charts.jetstack.io
$ helm repo update
```

Then install with CRDs

```
$ helm install \
    cert-manager jetstack/cert-manager \
    --namespace cert-manager \
    --create-namespace \
    --version v1.13.3 \
    --set installCRDs=true
```

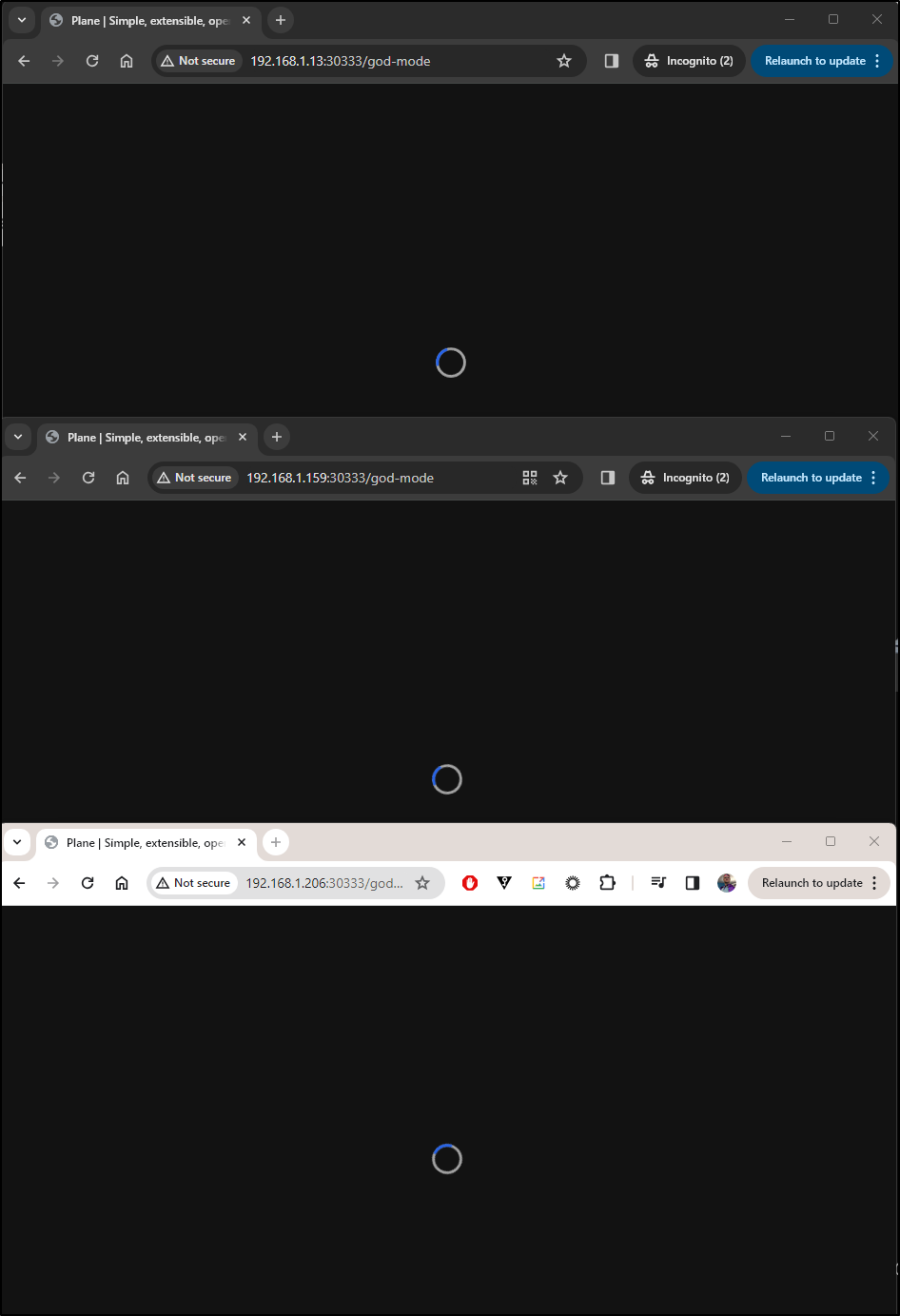

I’ll want to get the external IP of the Nginx LB:

```
$ kubectl get svc --all-namespaces | grep Load
ingress-basic ingress-nginx-controller LoadBalancer 10.0.60.100 20.98.176.239 80:31488/TCP,443:31468/TCP 3m16s
```

And use it in a new DNS A record for plane.azdo.space.
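Instead of grepping for "Load", a small awk filter can print just the external IP of each LoadBalancer service to paste into the DNS record. A minimal sketch, assuming the default `kubectl get svc --all-namespaces` columns (NAMESPACE, NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, …); `lb_external_ips` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: read `kubectl get svc --all-namespaces` output on stdin and print
# "namespace/name EXTERNAL-IP" for every LoadBalancer service.
# lb_external_ips is a hypothetical helper name.
lb_external_ips() {
  awk '$3 == "LoadBalancer" { print $1 "/" $2, $5 }'
}
```

On the cluster above, `kubectl get svc --all-namespaces | lb_external_ips` should print `ingress-basic/ingress-nginx-controller 20.98.176.239`. For a single known service, `kubectl get svc ingress-nginx-controller -n ingress-basic -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` returns the IP alone.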

Finally, I should have the basics set up for a Helm deploy of Plane.so into AKS:

```
$ helm install --create-namespace --namespace plane-ns \
    --set ingress.appHost="plane.azdo.space" \
    --set ingress.host="plane.azdo.space" \
    --set postgres.storageClass=default \
    --set redis.storageClass=default \
    --set minio.storageClass=default \
    my-plane makeplane/plane-ce
W1217 16:57:46.340037 26259 warnings.go:70] unknown field "spec.backend"
NAME: my-plane
LAST DEPLOYED: Sun Dec 17 16:57:43 2023
NAMESPACE: plane-ns
STATUS: deployed
REVISION: 1
TEST SUITE: None
```

I have a few crashing pods, but it seems basically up:

```
$ kubectl get pods -n plane-ns
NAME READY STATUS RESTARTS AGE
my-plane-api-wl-599bd48dc8-c59ll 1/1 Running 0 102s
my-plane-api-wl-599bd48dc8-d75dr 0/1 CrashLoopBackOff 2 (20s ago) 102s
my-plane-api-wl-599bd48dc8-v6x55 0/1 CrashLoopBackOff 2 (20s ago) 102s
my-plane-beat-worker-wl-68dbfdb544-zt8js 1/1 Running 3 (34s ago) 102s
my-plane-minio-bucket-hfrc2 0/1 Completed 0 102s
my-plane-minio-wl-0 1/1 Running 0 102s
my-plane-pgdb-wl-0 1/1 Running 0 102s
my-plane-redis-wl-0 1/1 Running 0 102s
my-plane-space-wl-699dcb8f4-75fnm 1/1 Running 0 102s
my-plane-space-wl-699dcb8f4-cd5dp 1/1 Running 0 102s
my-plane-space-wl-699dcb8f4-fljc9 1/1 Running 0 102s
my-plane-web-wl-5967775d5b-mndh9 1/1 Running 0 102s
my-plane-web-wl-5967775d5b-n7vj2 1/1 Running 0 102s
my-plane-web-wl-5967775d5b-nbx77 1/1 Running 0 102s
my-plane-worker-wl-854687c594-g2v9g 1/1 Running 0 102s
```

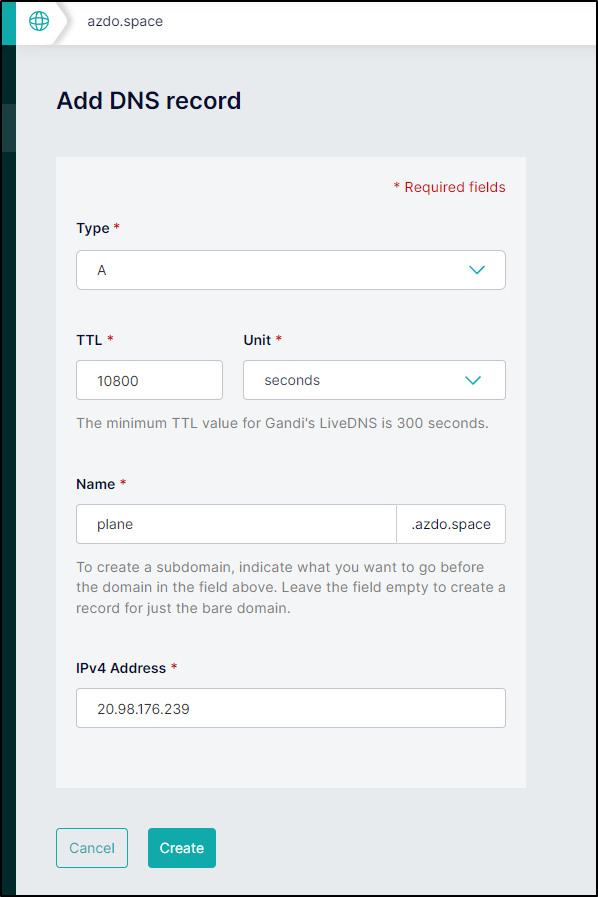

I’ll try God Mode, albeit over HTTP:

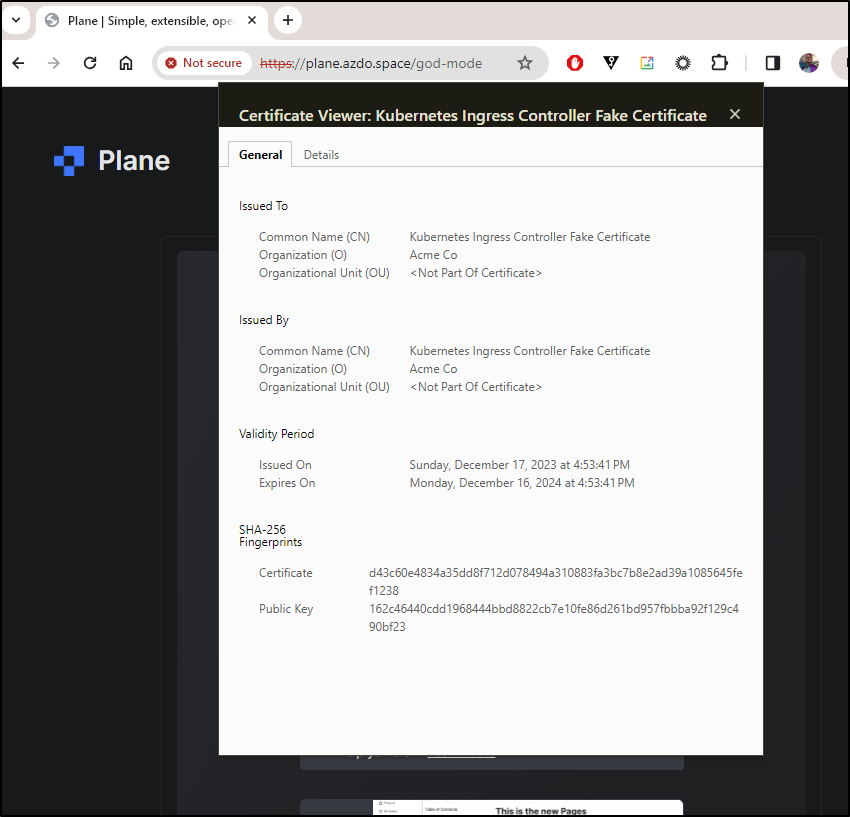

The certificate isn’t valid over HTTPS. I’m guessing we need to add a Let’s Encrypt ACME issuer.
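A hedged sketch of such an issuer, assuming the cert-manager v1 API installed above and the ingress-nginx controller’s default `nginx` ingress class. The issuer name `letsencrypt-prod` and the `letsencrypt_issuer` helper are hypothetical, and the email is a placeholder to replace.

```bash
#!/usr/bin/env bash
# Sketch: print a cert-manager ClusterIssuer for Let's Encrypt production,
# solving HTTP-01 challenges through the nginx ingress class.
# letsencrypt_issuer and the default name "letsencrypt-prod" are hypothetical.
letsencrypt_issuer() {
  local email="$1" name="${2:-letsencrypt-prod}"
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: ${name}
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ${email}
    privateKeySecretRef:
      name: ${name}
    solvers:
    - http01:
        ingress:
          class: nginx
EOF
}
```

`letsencrypt_issuer you@example.com | kubectl apply -f -` would create it. The Plane Ingress would then still need a `cert-manager.io/cluster-issuer: letsencrypt-prod` annotation and a `tls` section; whether the plane-ce chart exposes values for those is an assumption I haven’t checked.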

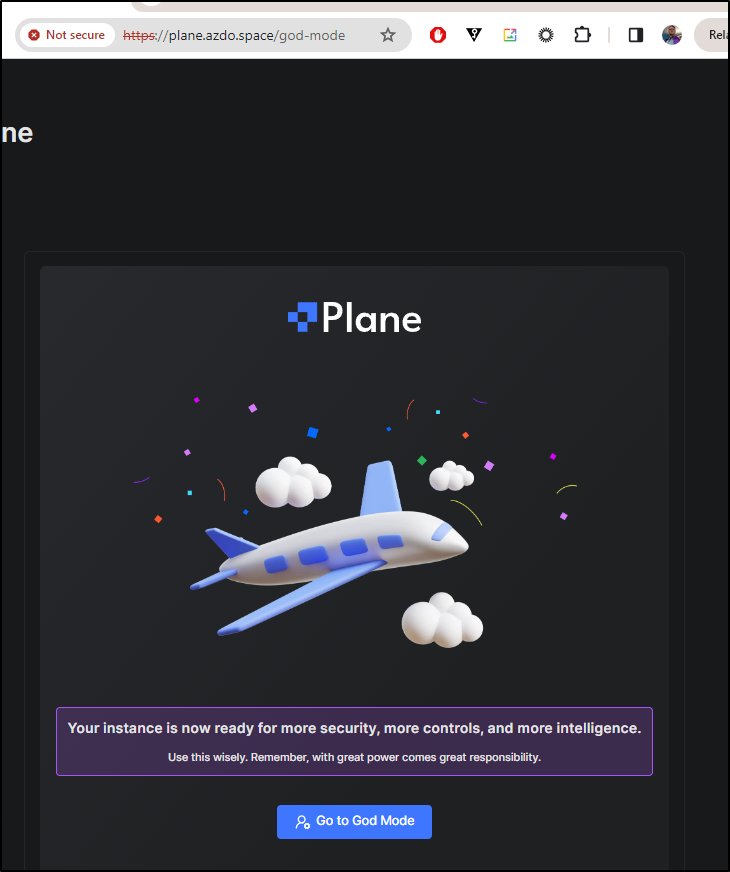

This time initial setup did work

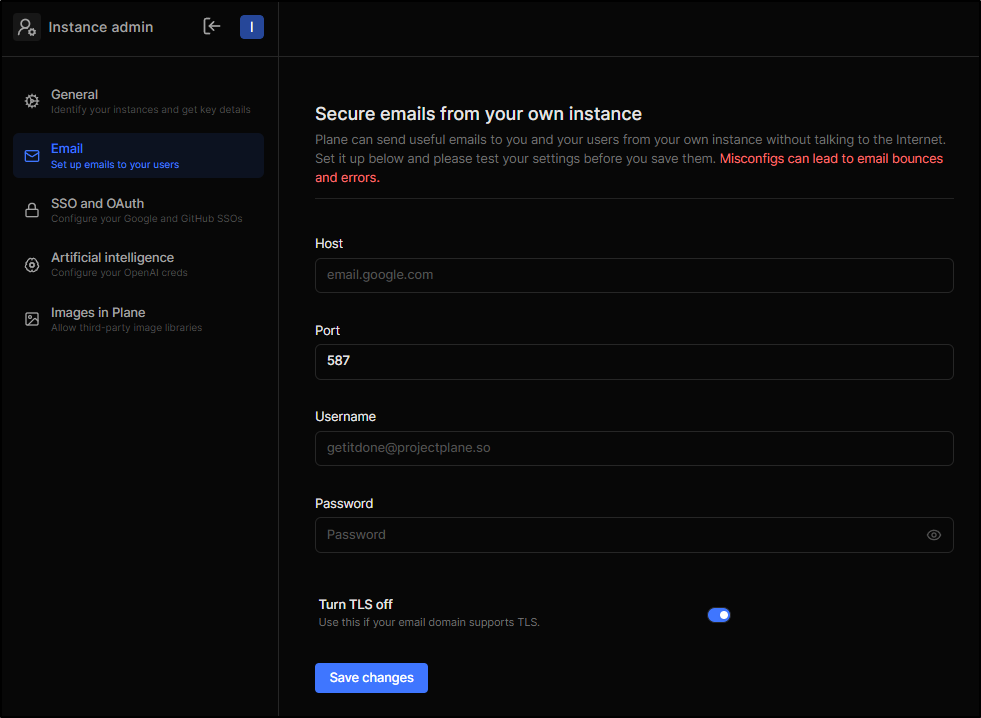

To use it, there are a few things we need to handle…

1. Email SMTP:

Here we could set the outgoing mail server host and credentials

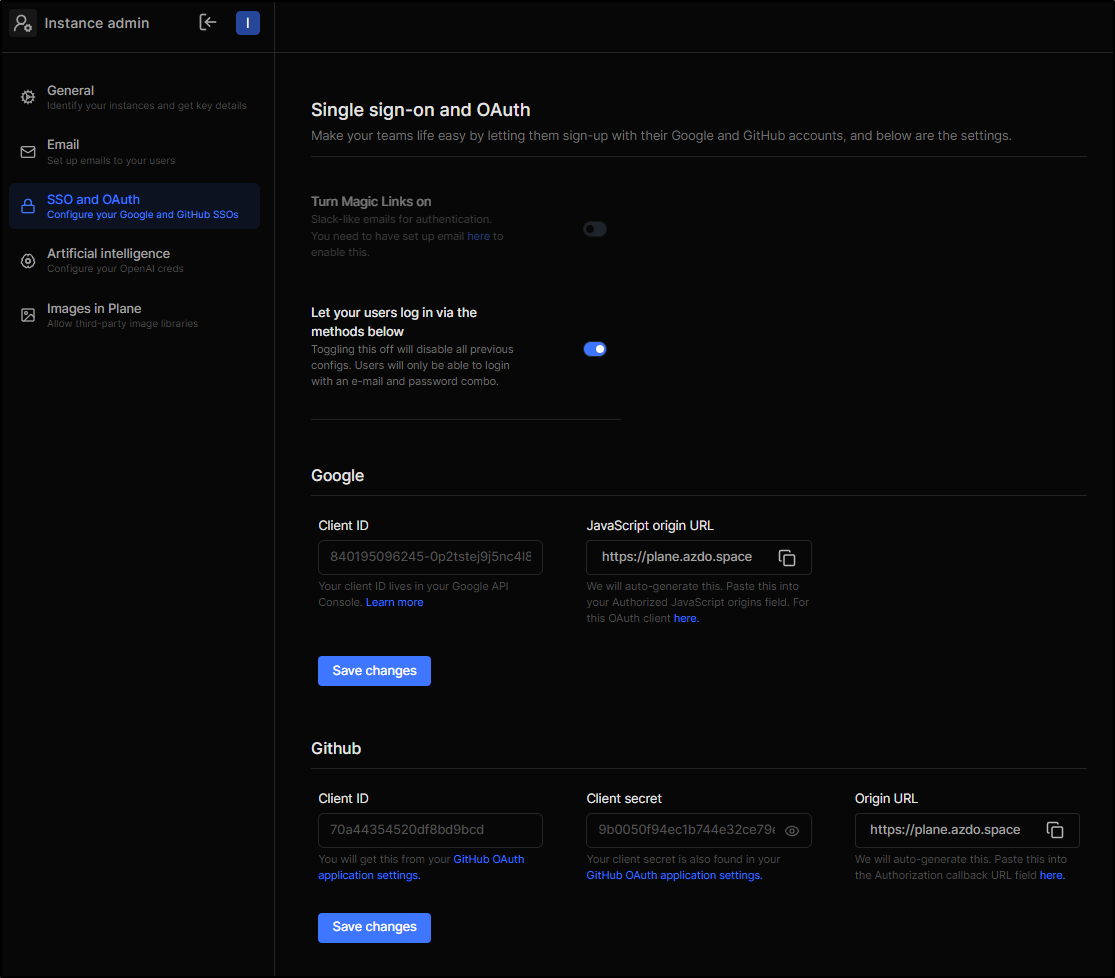

2. SSO / OAuth

Out of the box, Plane supports Google and GitHub as federated IdPs. We could configure that here.

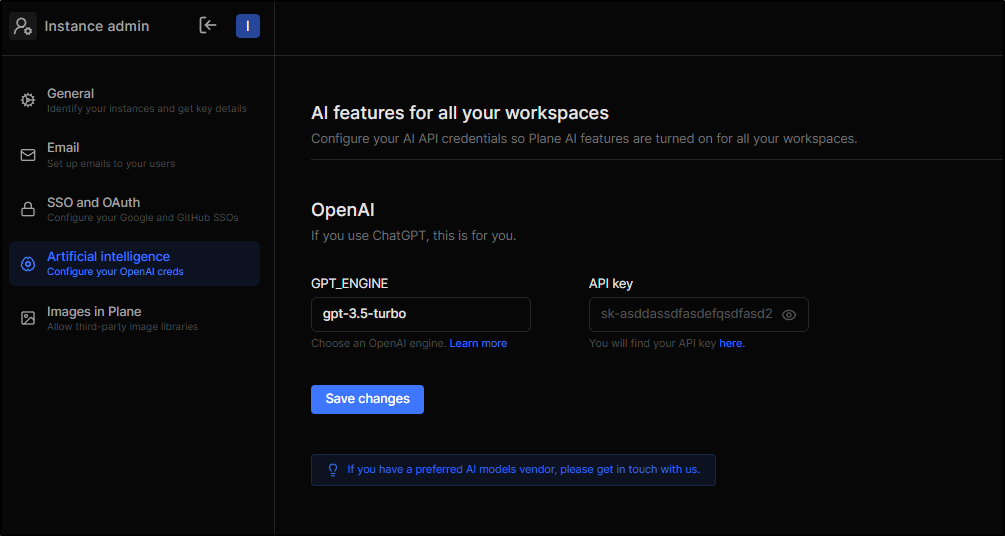

3. AI

AI setup is used for the “AI” issue description generation, if you want that.

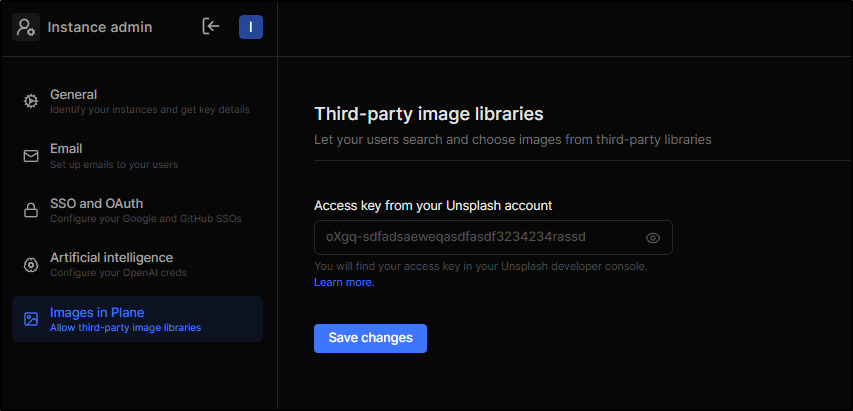

4. Unsplash Libraries

To add some nice backgrounds and images, Plane uses Unsplash, which requires an access key.

As for the setup steps above: at this time I don’t have a GPT key, but I could use Resend.dev or SendGrid for email and set up proper OAuth, at least through GitHub.

Since I do not plan to keep this instance up, I’ll skip over it for now and do a tour of what we get just out-of-the-box.

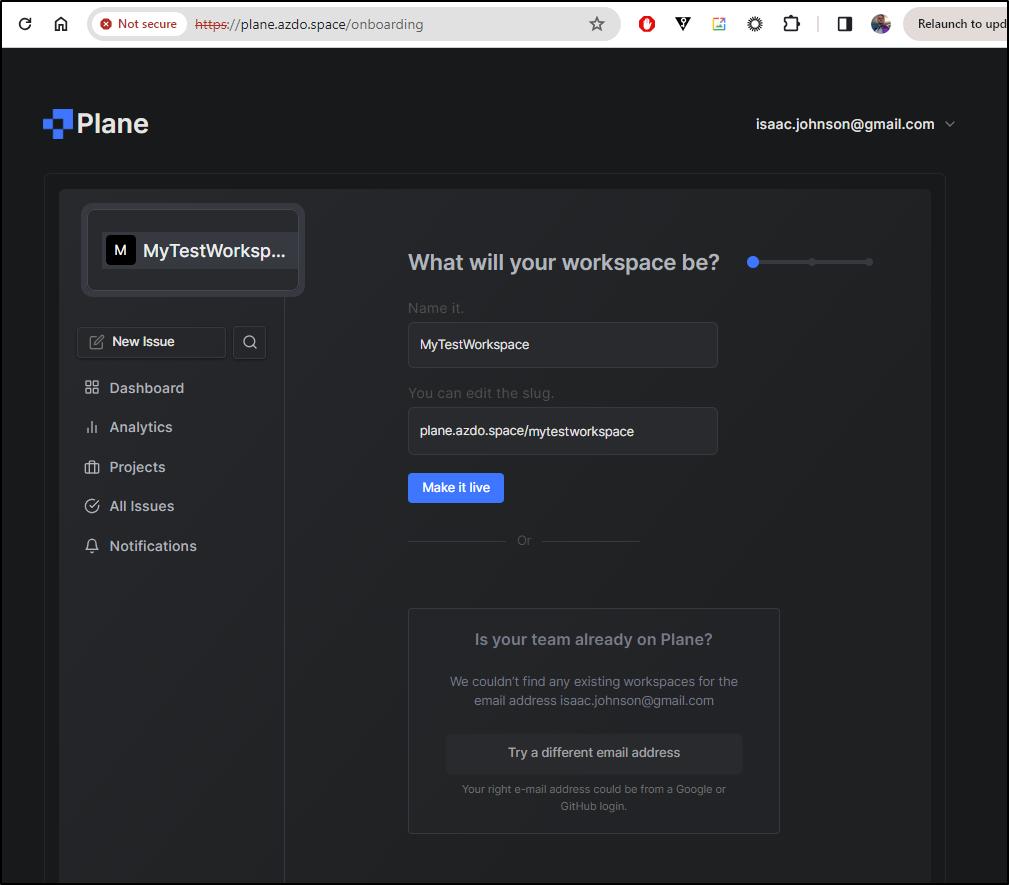

User mode

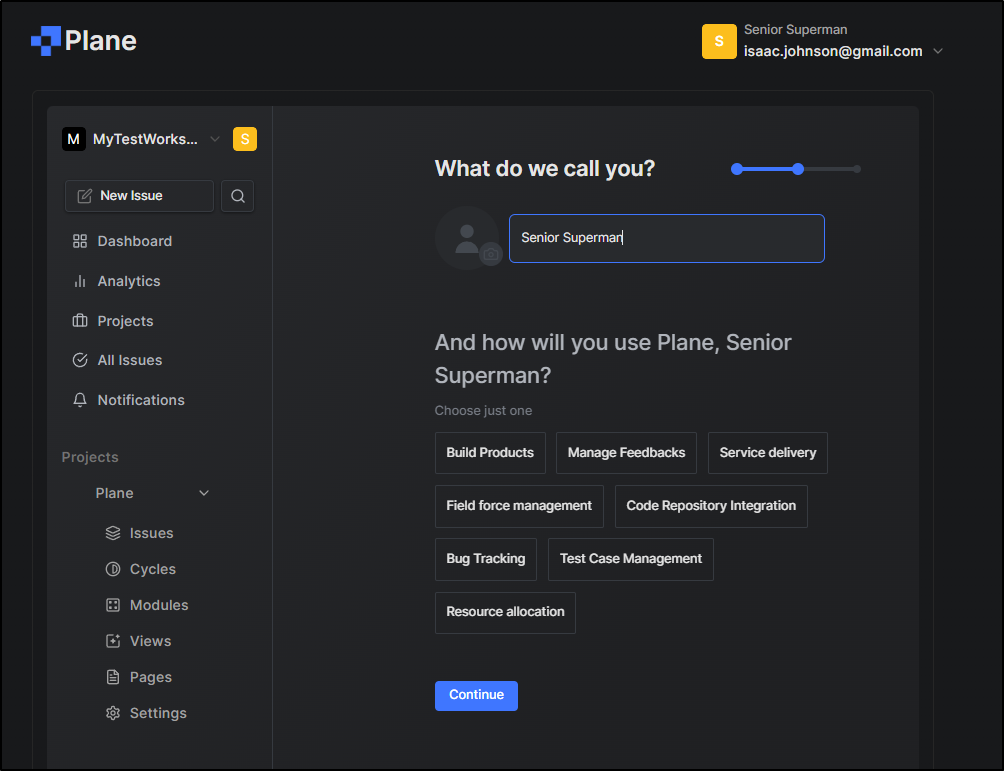

Since we are already logged in, we can create a workspace and give it a title.

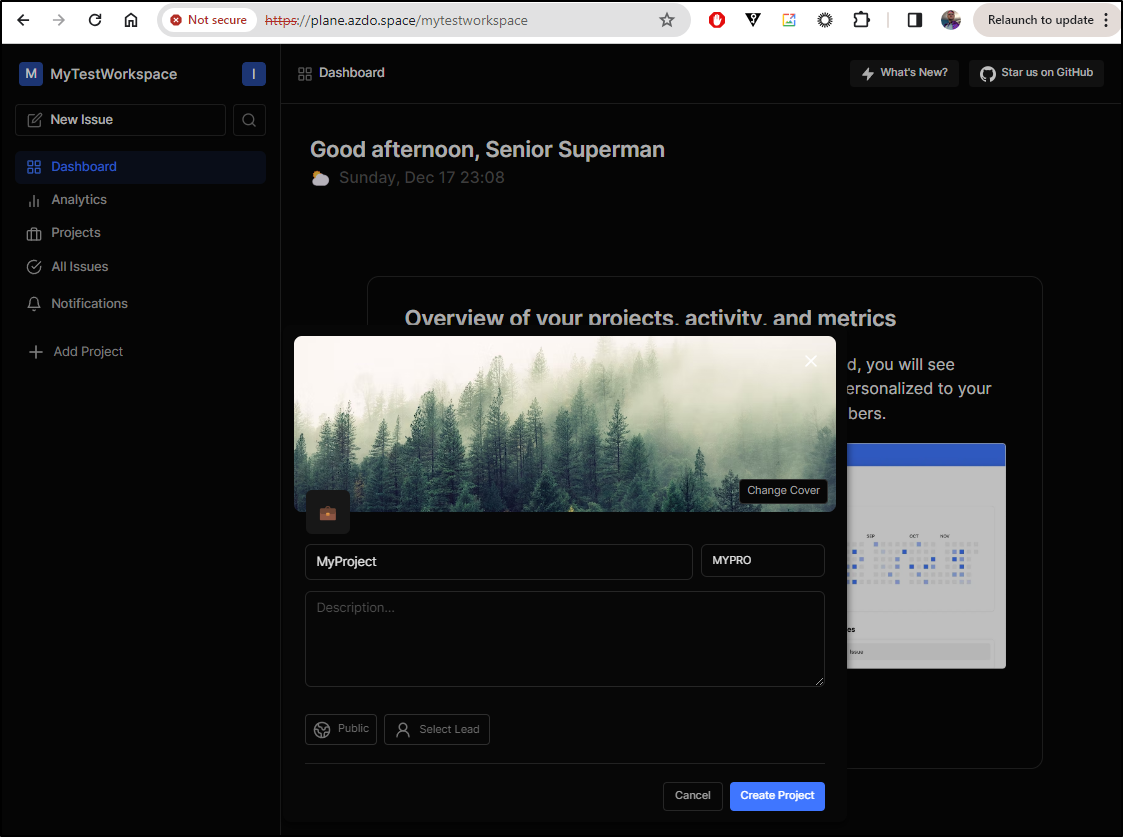

Then move on to creating a Project

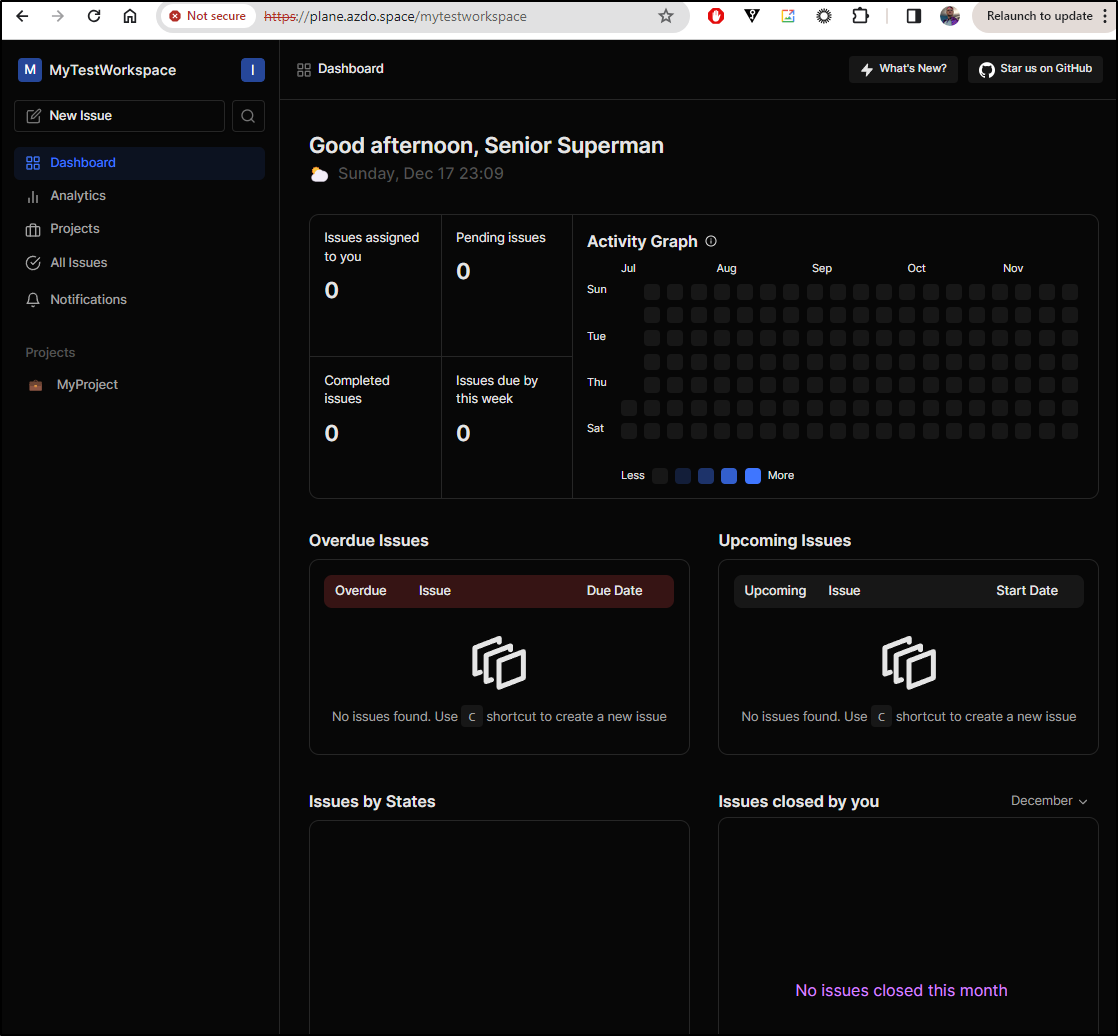

And here it is once created.

Since I’m paying for AKS, I don’t want to spend too much time here. The point was to see that I could self-host if I wanted to; instead, let’s pivot to the SaaS instance.

As always, before we do, let’s clean up first:

```
$ az aks delete -n idjplanetest -g planesorg
Are you sure you want to perform this operation? (y/n): y
$ az group delete -n planesorg
Are you sure you want to perform this operation? (y/n): y
```

Plane.so Cloud SaaS

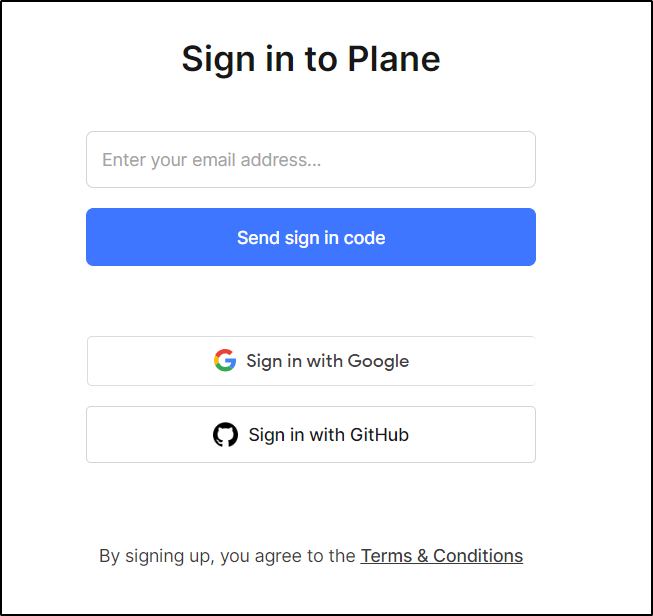

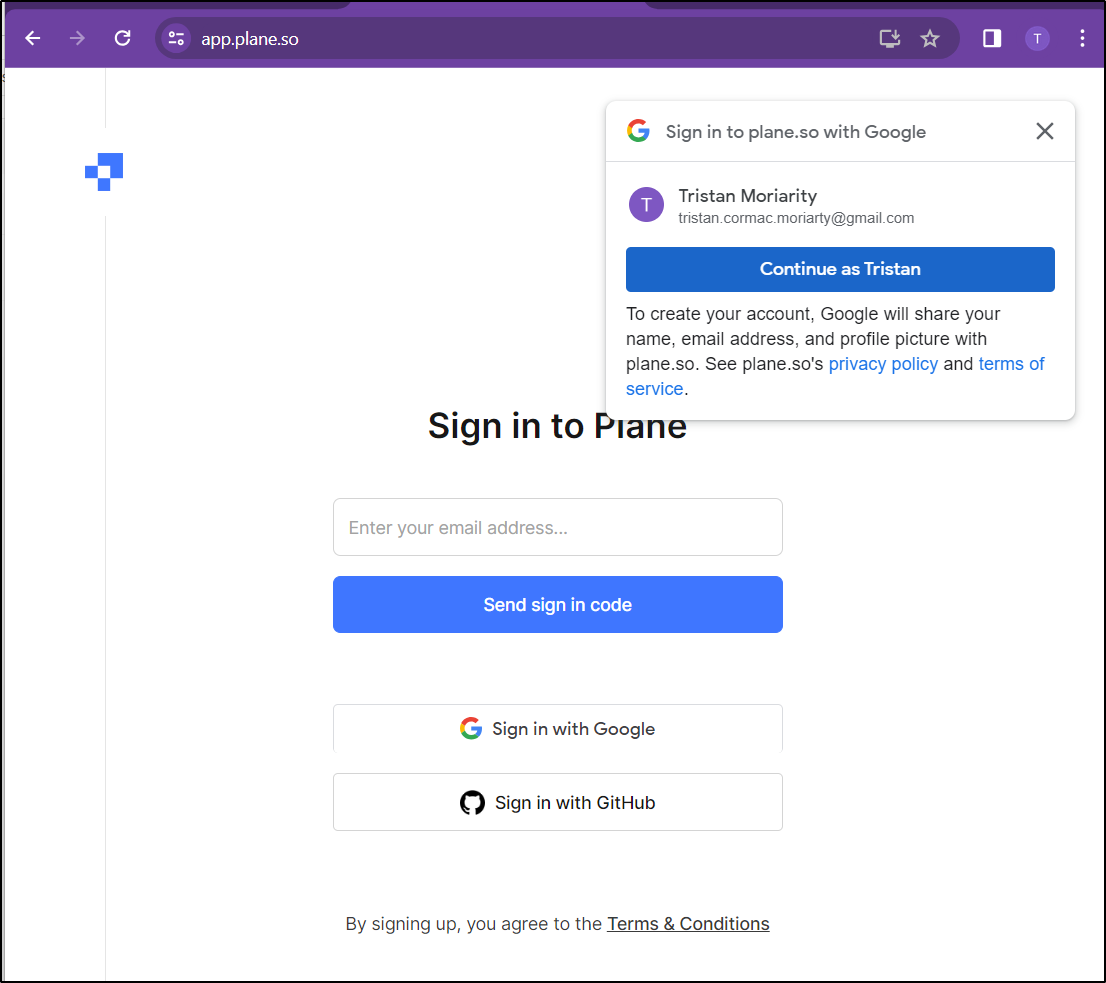

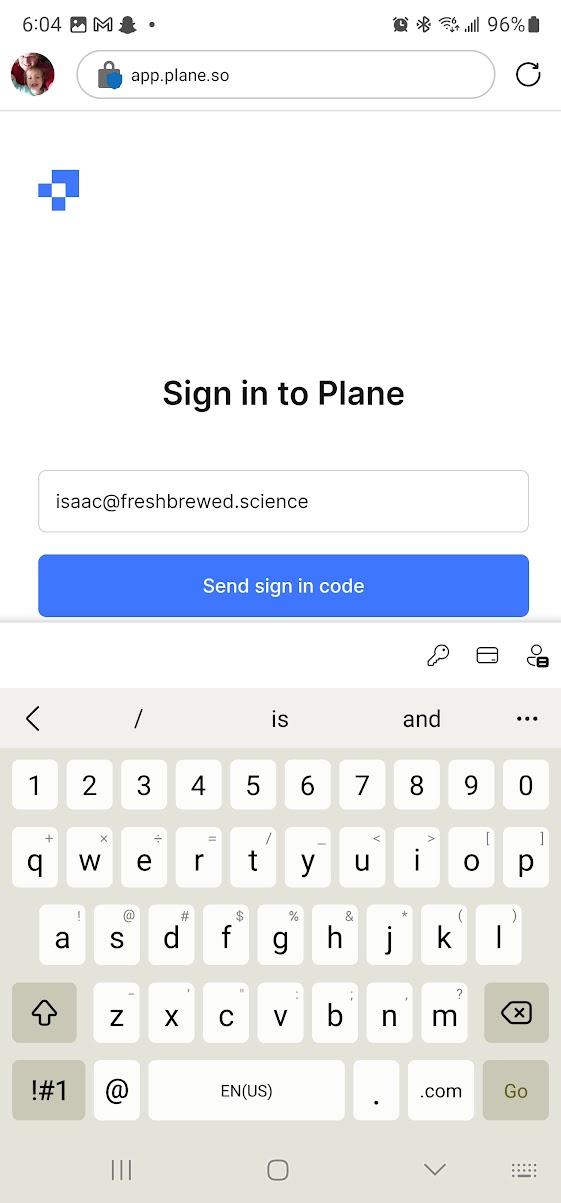

To sign up for the cloud-hosted option, we can go to https://app.plane.so/, where we can currently use GitHub or Google as federated IdPs, or create a local account.

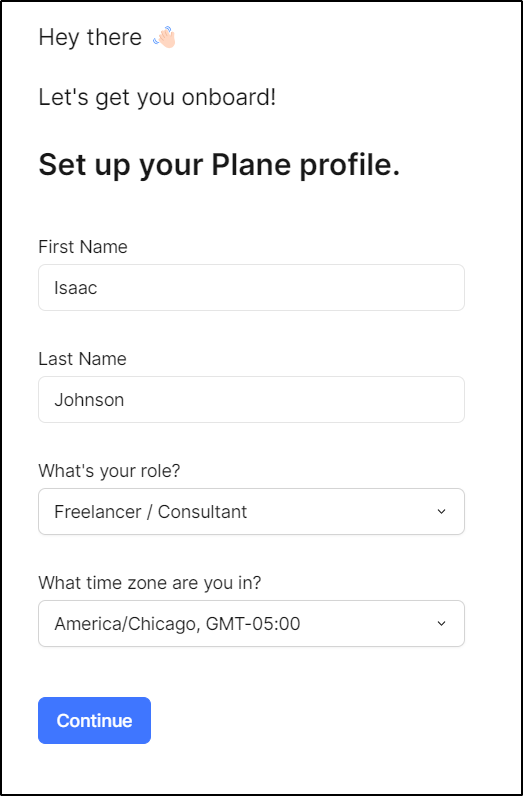

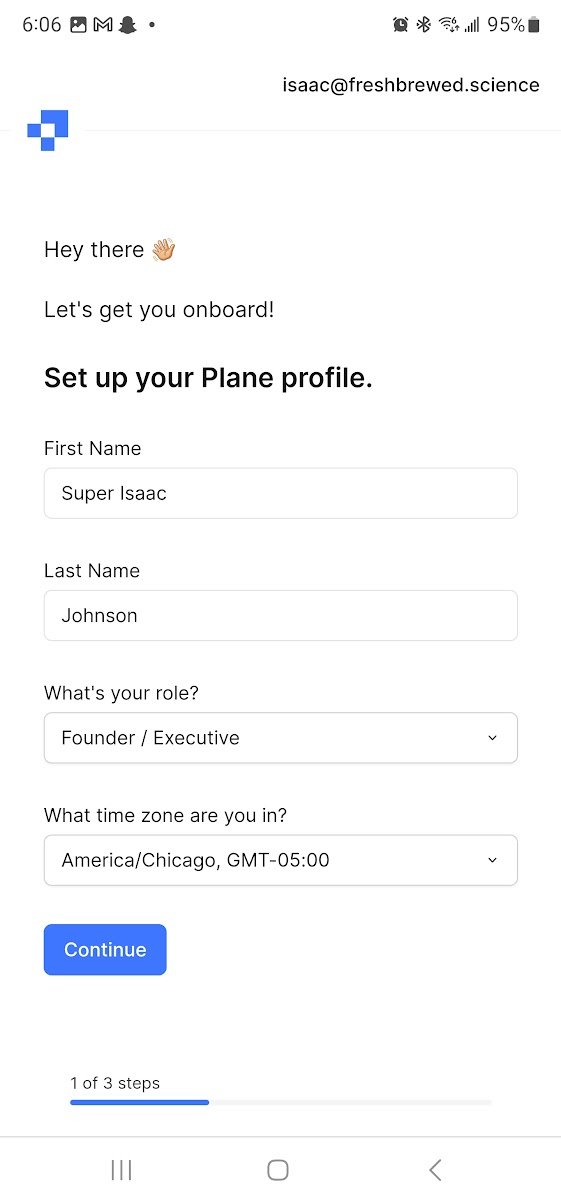

That then takes me to a page to set name details, role and time zone

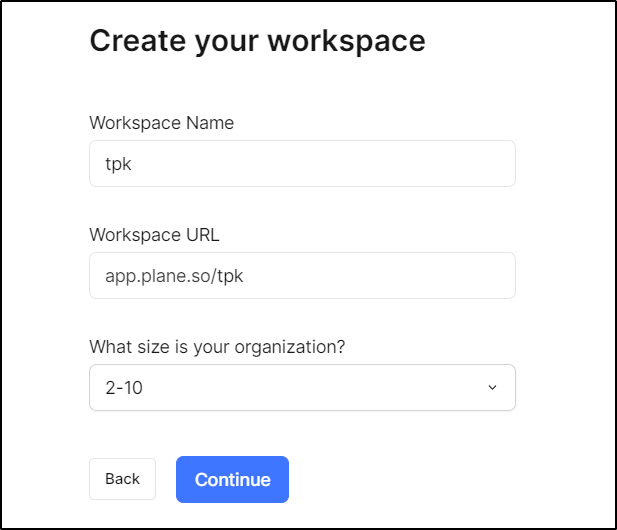

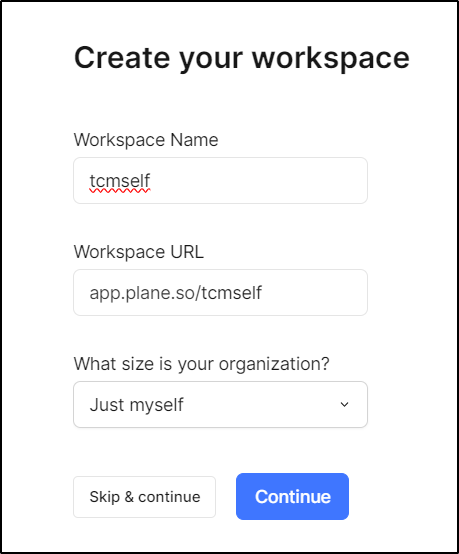

I’ll then have a chance to name my workspace and org size

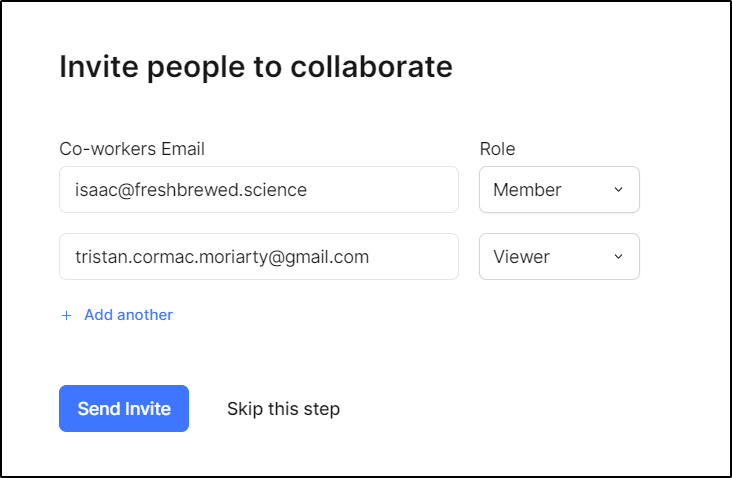

I can invite people into my org. I’ll choose some different roles so we can see how they behave

Projects

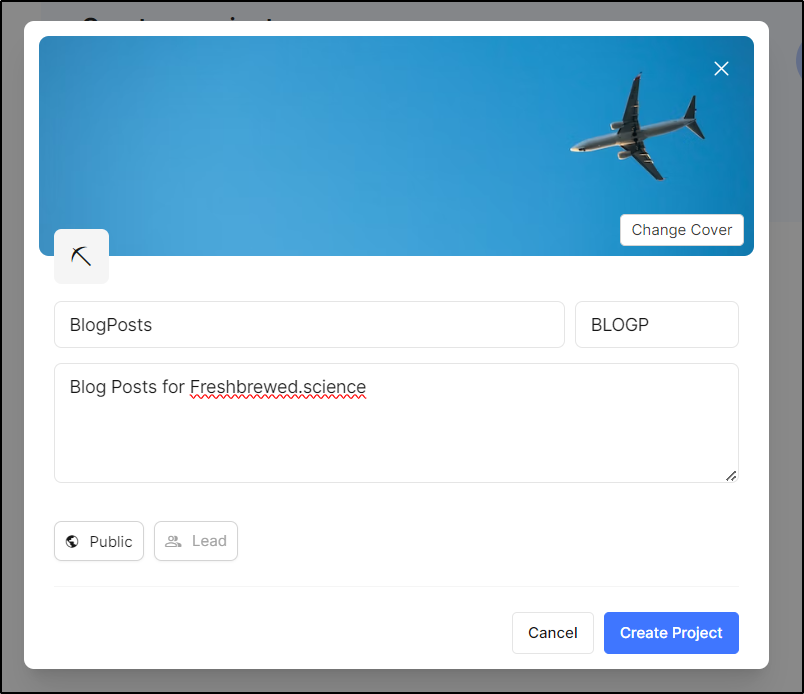

My first step will be to create a project

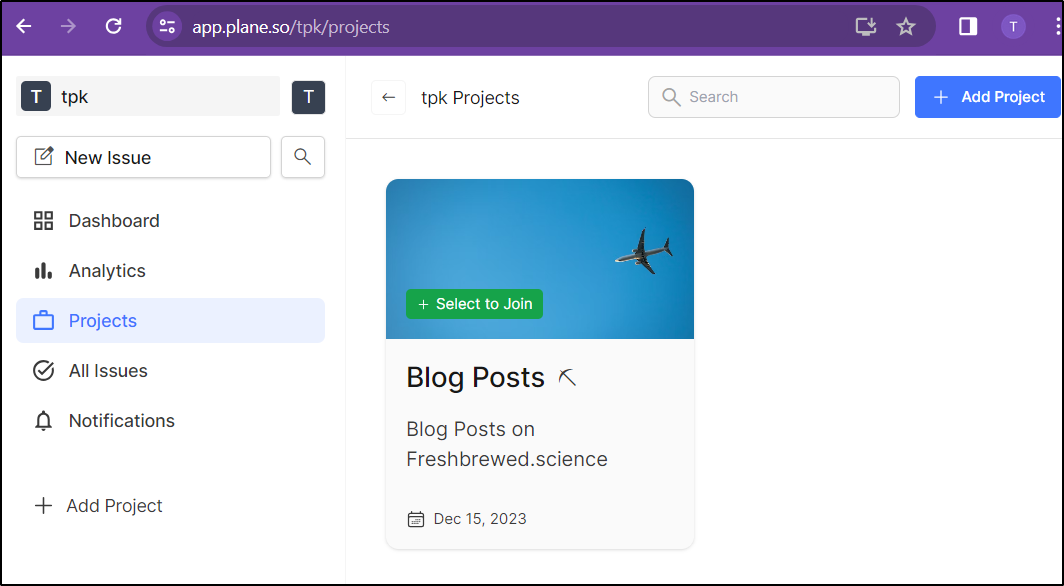

I’ll start with a public facing “Blog Posts” project (BLOGP)

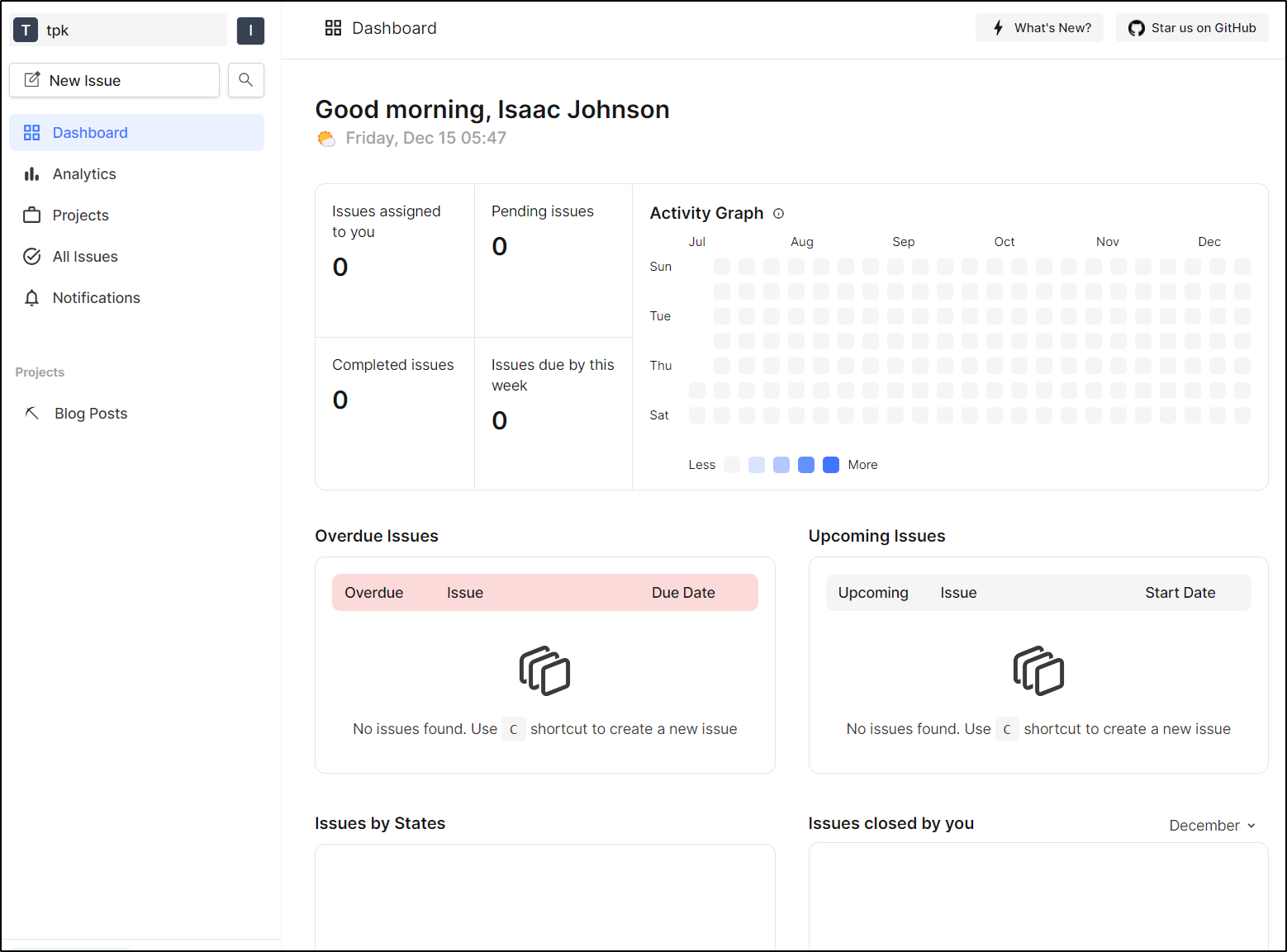

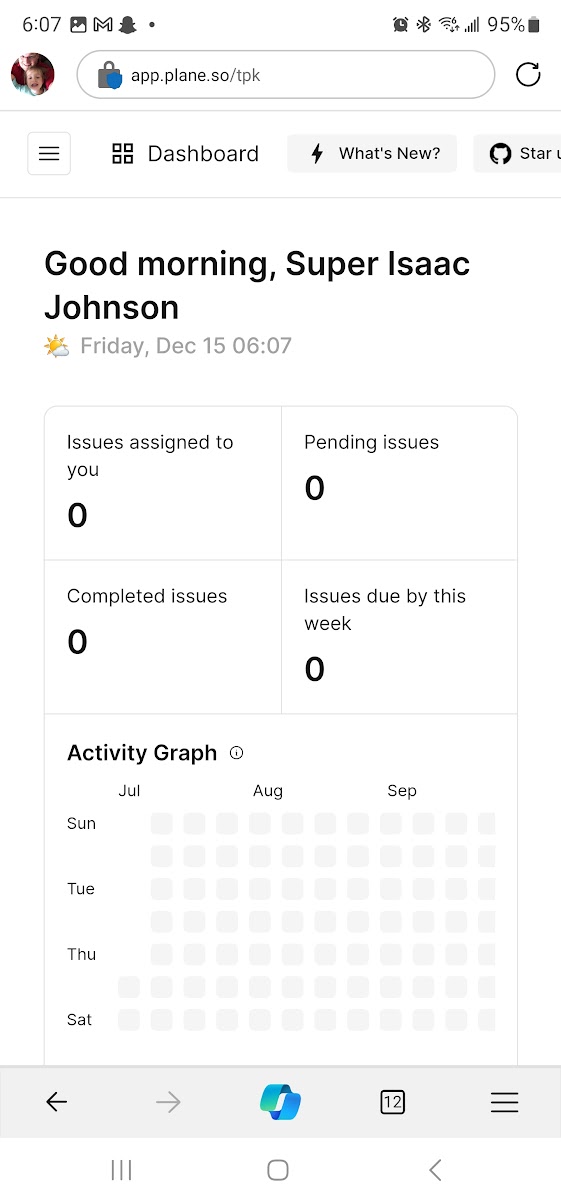

This returns us to a nice-looking activity dashboard for the project

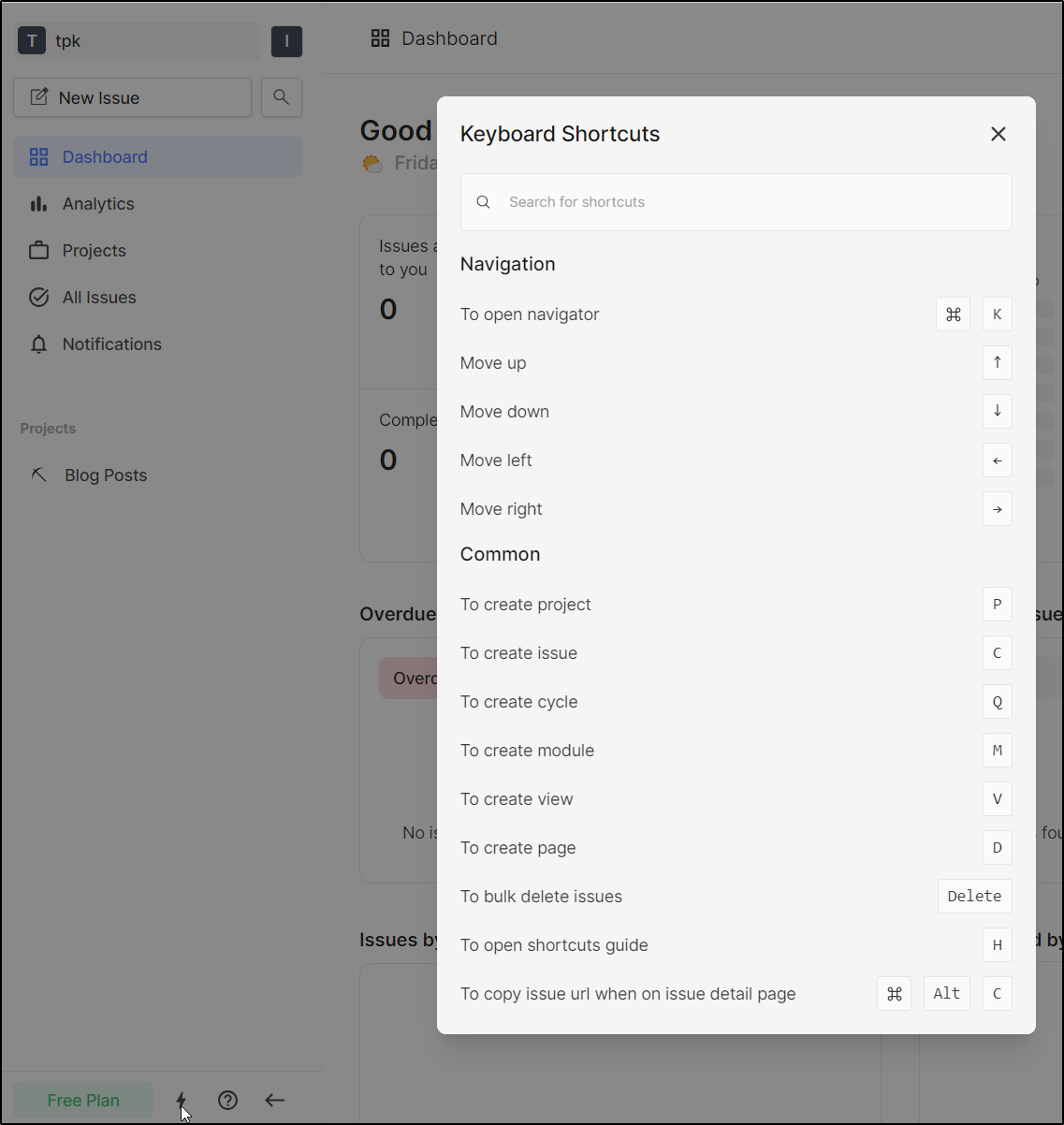

One handy feature of Plane.so is its slick keyboard shortcuts. We can see the list by clicking the lightning bolt icon in the lower left.

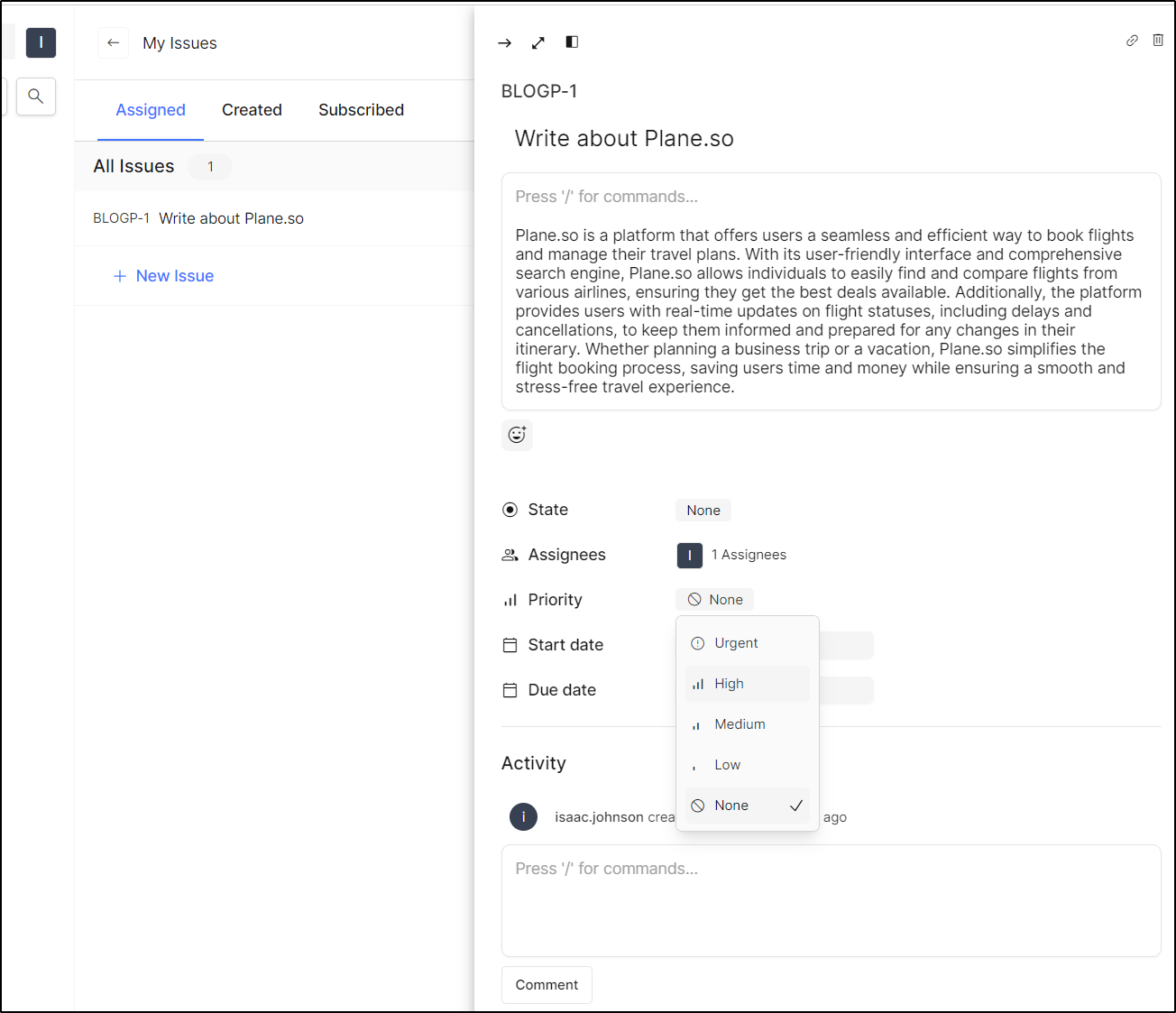

I’ll enter ‘c’ to bring up the create window for an issue. We can even try “AI”, why not.

We can now view the issue and set fields like Priority

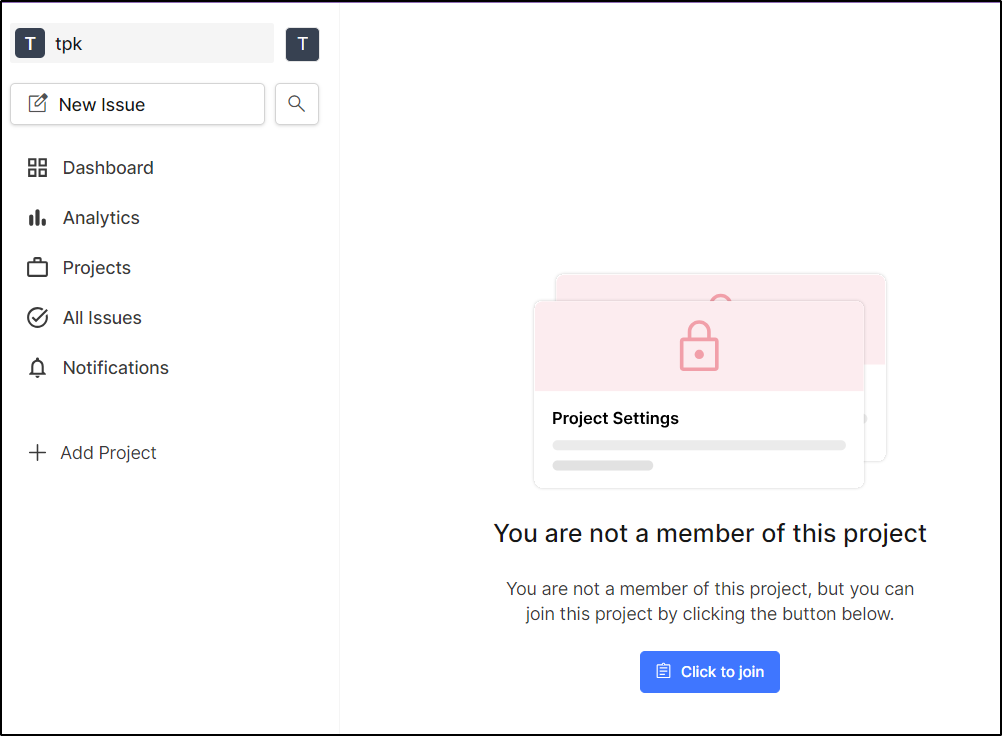

Interestingly, when I tried to view this issue in a different browser, it told me I wasn’t a member of the workspace. So we’ll have to review what “public” means, or perhaps I’m using it incorrectly.

Other Users

Simply visiting the site as a different user prompted me to sign in with my IdP.

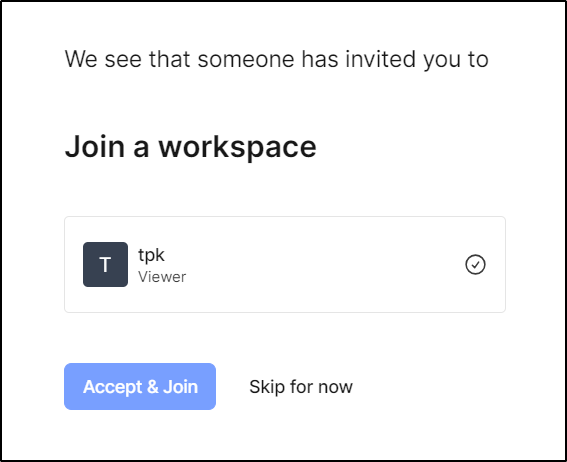

I confirmed my time zone, and it then noticed an invite was awaiting this identity.

Even though Tristan is just a user/engineer, it would seem everyone gets their own “space”, so we’ll create a ‘tcmself’ space.

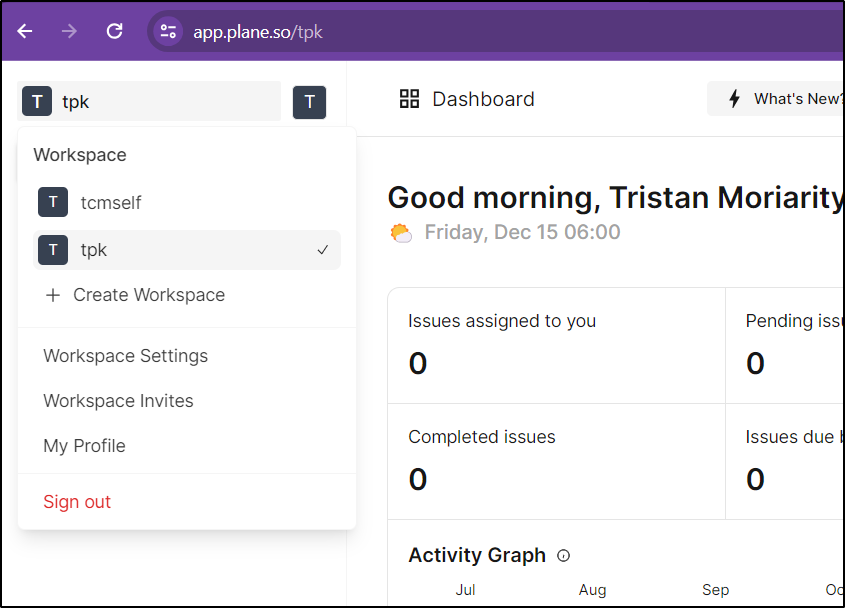

While they were dropped into TPK, they can hop back to the ‘tcmself’ space from the Workspaces dropdown

Just because Tristan is a user in TPK doesn’t make them a member of all projects. To contribute, Tristan must “join” the Blog Posts project.

They’ll click the join button

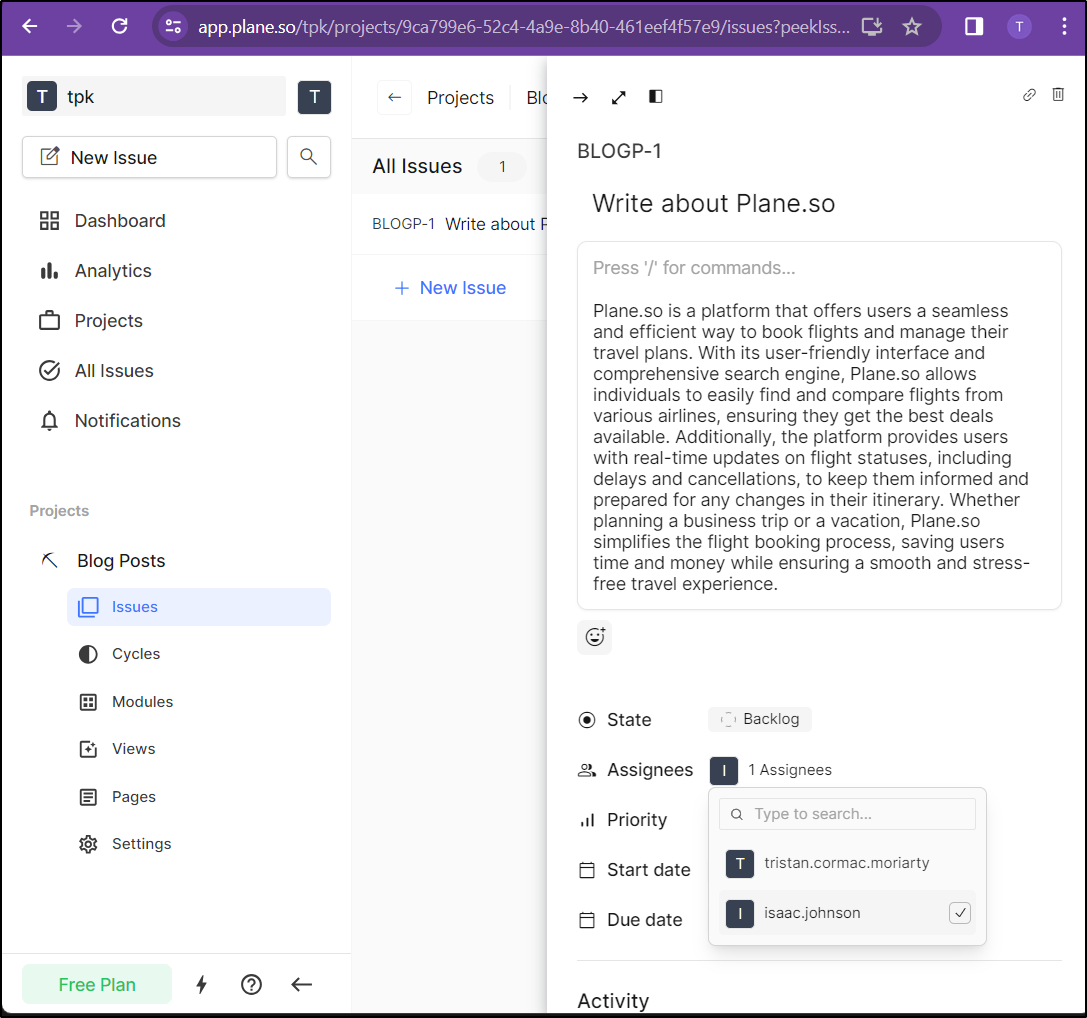

Now, the assignee list has two choices

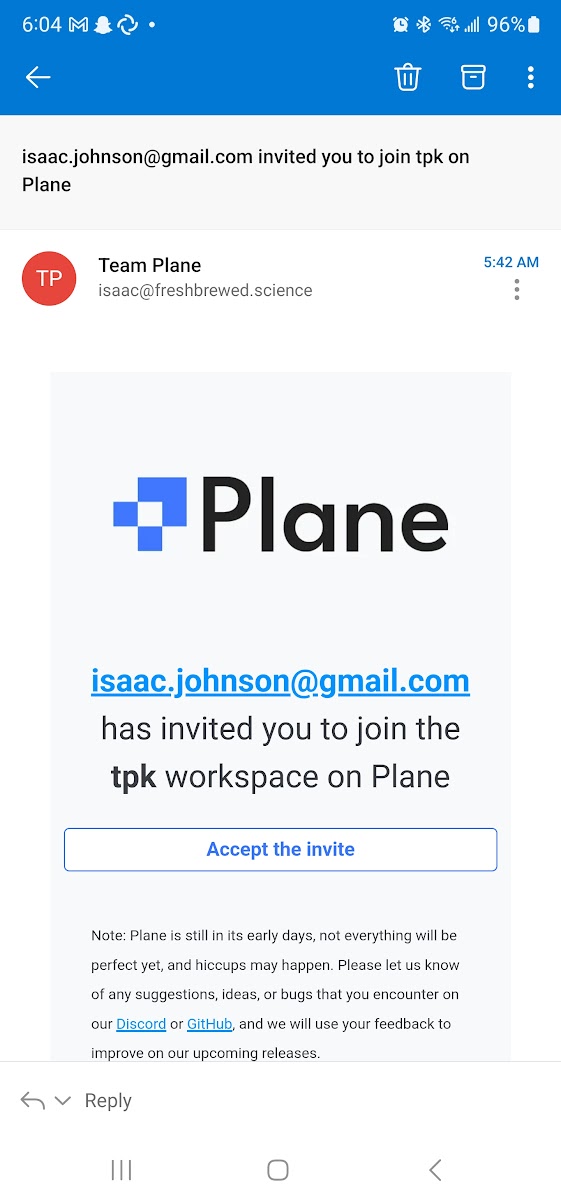

Let’s see how this looks in mobile for my last user who doesn’t use Google IdP.

The executive gets an email in Outlook.

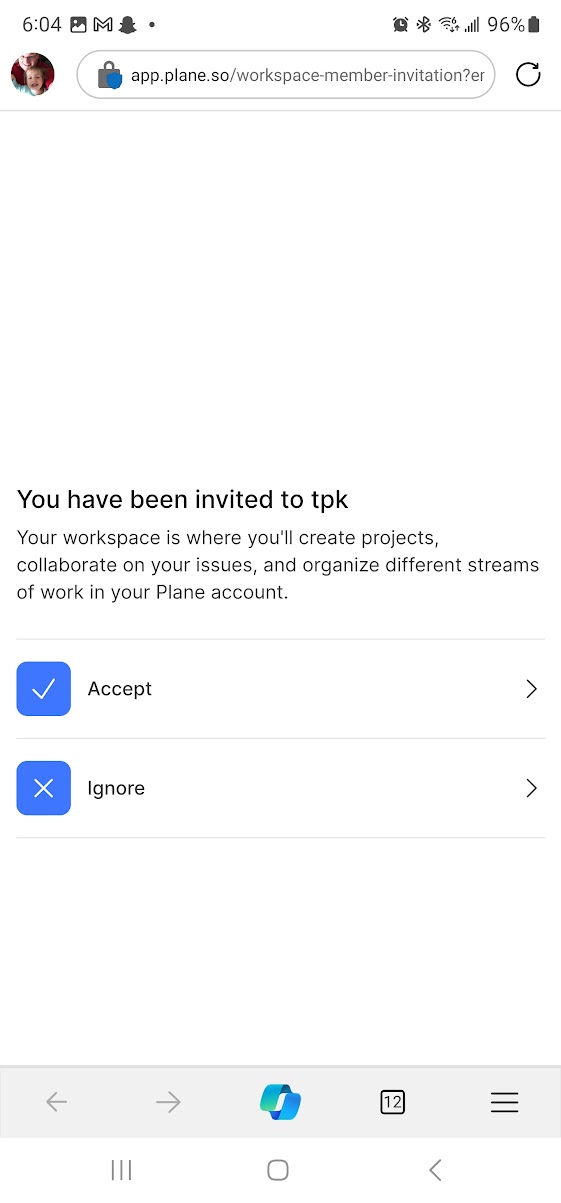

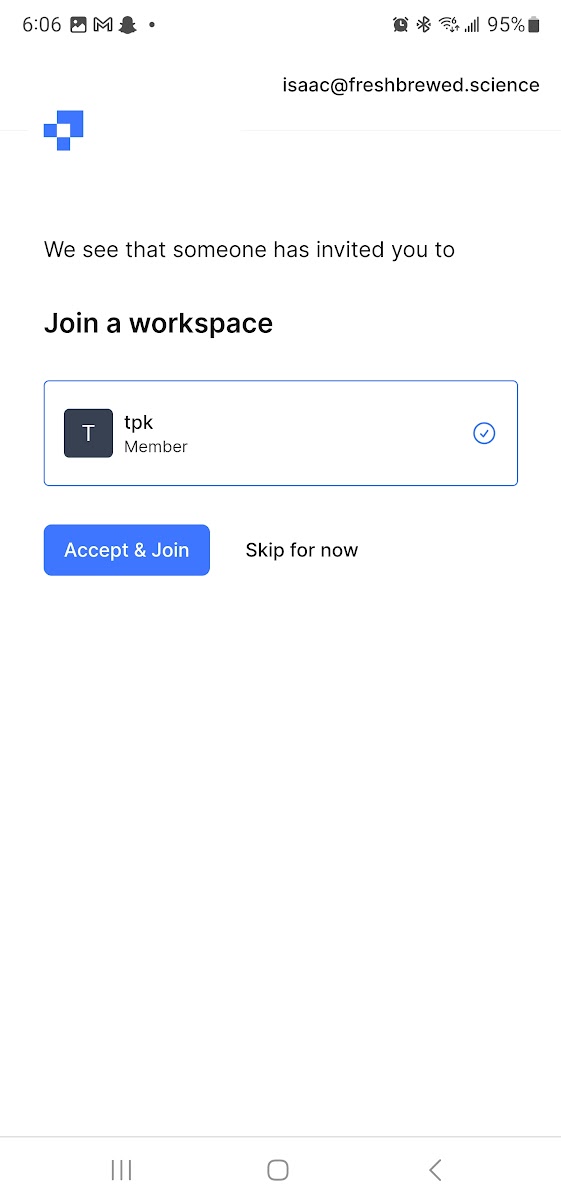

He sees he is invited to the workspace already

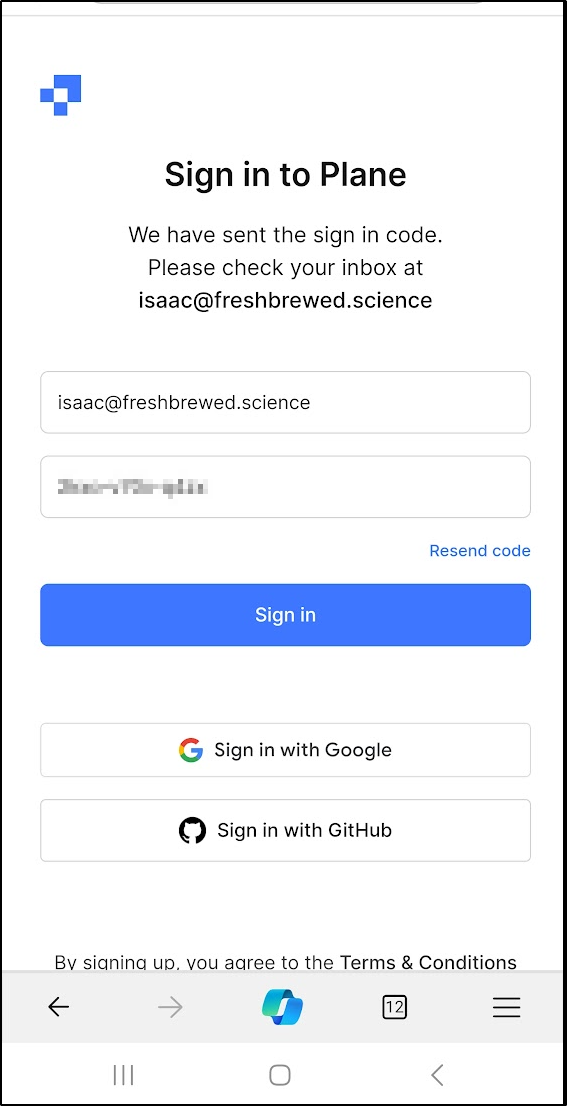

In this flow, the user is sent a code

They put in the code

Then it’s the normal flow of TZ and role

One last accept

and we can see the mobile version of the site

Summary

We gave it a decent shot at running on Docker and on-prem Kubernetes. Clearly it’s a bit hefty for my current cluster, as moving to Azure Kubernetes Service (AKS) sorted things out. We did a quick tour of the self-hosted version before pivoting to the cloud SaaS option.

We’ve only scratched the surface: setting up a workspace, a project and users of various levels. We touched on AI descriptions and keyboard shortcuts. I’m rather excited to do more with Plane.so. In our next article, we’ll follow up with a dive into GitHub integrations (it supposedly can sync with GitHub issues), API usage and how the open-source/free tier scales to the paid plans.