Published: Mar 28, 2024 by Isaac Johnson

With a new cluster comes the need to set up a new GitHub Actions runner controller. The newer method uses an “ARC” setup that can scale to zero.

We’ll start with the traditional runners, as this unblocks our workflows and is the more common pattern.

In this writeup, we will also cover Azure DevOps, Forgejo, and Gitea.

Helm setup

Before we can set up a RunnerDeployment, we need the Actions Runner Controller and its CRDs.

```bash
$ helm repo add actions-runner-controller https://actions-runner-controller.github.io/actions-runner-controller
"actions-runner-controller" has been added to your repositories
$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "actions-runner-controller" chart repository
...Successfully got an update from the "openfunction" chart repository
...Successfully got an update from the "backstage" chart repository
...Successfully got an update from the "spacelift" chart repository
...Successfully got an update from the "sonatype" chart repository
...Successfully got an update from the "gitea-charts" chart repository
...Successfully got an update from the "harbor" chart repository
...Successfully got an update from the "datadog" chart repository
...Successfully got an update from the "jetstack" chart repository
...Successfully got an update from the "openproject" chart repository
...Successfully got an update from the "frappe" chart repository
...Successfully got an update from the "ananace-charts" chart repository
...Successfully got an update from the "deliveryhero" chart repository
...Successfully got an update from the "bitnami" chart repository
Update Complete. ⎈Happy Helming!⎈
```

Next, I need to fetch a GitHub token:

```bash
$ export GHPAT=`getpass.sh GHRunnerPAT idjakv | tr -d '\n'`
$ helm upgrade --install --namespace actions-runner-system --create-namespace \
  --set=authSecret.create=true \
  --set=authSecret.github_token="$GHPAT" \
  --wait actions-runner-controller actions-runner-controller/actions-runner-controller
Release "actions-runner-controller" does not exist. Installing it now.
NAME: actions-runner-controller
LAST DEPLOYED: Mon Mar 4 18:58:01 2024
NAMESPACE: actions-runner-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Get the application URL by running these commands:
  export POD_NAME=$(kubectl get pods --namespace actions-runner-system -l "app.kubernetes.io/name=actions-runner-controller,app.kubernetes.io/instance=actions-runner-controller" -o jsonpath="{.items[0].metadata.name}")
  export CONTAINER_PORT=$(kubectl get pod --namespace actions-runner-system $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")
  echo "Visit http://127.0.0.1:8080 to use your application"
  kubectl --namespace actions-runner-system port-forward $POD_NAME 8080:$CONTAINER_PORT
```
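If `getpass.sh` ever returns nothing, the chart still installs, just with an empty token in the auth secret, and the controller fails later. A small guard can stop the install early. This is a sketch; `require_var` is a hypothetical helper, not part of the chart:

```shell
# Hypothetical helper: fail if the named variable is unset or empty.
require_var() {
  # Indirect lookup via eval so this also works in plain POSIX sh (no ${!name}).
  eval "_require_val=\${$1:-}"
  if [ -z "$_require_val" ]; then
    echo "error: $1 is empty" >&2
    return 1
  fi
}

# Usage, in front of the helm command shown above:
#   require_var GHPAT && helm upgrade --install ...
```

In an interactive session, the built-in `: "${GHPAT:?GHPAT is empty}"` expansion does the same check in one line.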

I need to apply a Secret that holds the AWS credentials the runner uses for copying to S3. The rest is straightforward:

```bash
$ cat DONTADDaddMyRunner.yml
---
apiVersion: v1
kind: Secret
metadata:
  name: awsjekyll
type: Opaque
data:
  PASSWORD: xxxxxxxxxxxxxxxxxxxxxxxxxxxx
  USER_NAME: xxxxxxxxxxxxxxxxxxxxxxxxxxxx
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: RunnerDeployment
metadata:
  name: my-jekyllrunner-deployment
spec:
  template:
    spec:
      repository: idjohnson/jekyll-blog
      image: harbor.freshbrewed.science/freshbrewedprivate/myghrunner:1.1.16
      imagePullSecrets:
      - name: myharborreg
      imagePullPolicy: IfNotPresent
      dockerEnabled: true
      env:
      - name: AWS_DEFAULT_REGION
        value: "us-east-1"
      - name: AWS_ACCESS_KEY_ID
        valueFrom:
          secretKeyRef:
            name: awsjekyll
            key: USER_NAME
      - name: AWS_SECRET_ACCESS_KEY
        valueFrom:
          secretKeyRef:
            name: awsjekyll
            key: PASSWORD
      labels:
      - my-jekyllrunner-deployment
---
apiVersion: actions.summerwind.dev/v1alpha1
kind: HorizontalRunnerAutoscaler
metadata:
  name: my-jekyllrunner-deployment-autoscaler
spec:
  # Runners in the targeted RunnerDeployment won't be scaled down for 5 minutes instead of the default 10 minutes now
  scaleDownDelaySecondsAfterScaleOut: 300
  scaleTargetRef:
    name: my-jekyllrunner-deployment
  minReplicas: 1
  maxReplicas: 3
  metrics:
  - type: PercentageRunnersBusy
    scaleUpThreshold: '0.75'
    scaleDownThreshold: '0.25'
    scaleUpFactor: '2'
    scaleDownFactor: '0.5'

$ kubectl apply -f ./DONTADDaddMyRunner.yml
secret/awsjekyll created
runnerdeployment.actions.summerwind.dev/my-jekyllrunner-deployment created
horizontalrunnerautoscaler.actions.summerwind.dev/my-jekyllrunner-deployment-autoscaler created
```
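One detail worth calling out: values under a Secret's `data:` key must be base64-encoded (plain strings belong under `stringData:` instead). A minimal sketch for producing the encoded values, assuming a `base64` binary on the PATH; `b64` and `awsjekyll_secret` are hypothetical helper names:

```shell
# Base64-encode one value; printf avoids encoding a trailing newline,
# and tr strips the line wrapping GNU base64 adds to long output.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical helper: print the awsjekyll Secret with encoded values.
# $1 = access key ID, $2 = secret access key
awsjekyll_secret() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: awsjekyll
type: Opaque
data:
  USER_NAME: $(b64 "$1")
  PASSWORD: $(b64 "$2")
EOF
}
```

Alternatively, `kubectl create secret generic awsjekyll --from-literal=USER_NAME="$KEY_ID" --from-literal=PASSWORD="$SECRET" --dry-run=client -o yaml` does the encoding for you (`KEY_ID` and `SECRET` are placeholder variables here).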

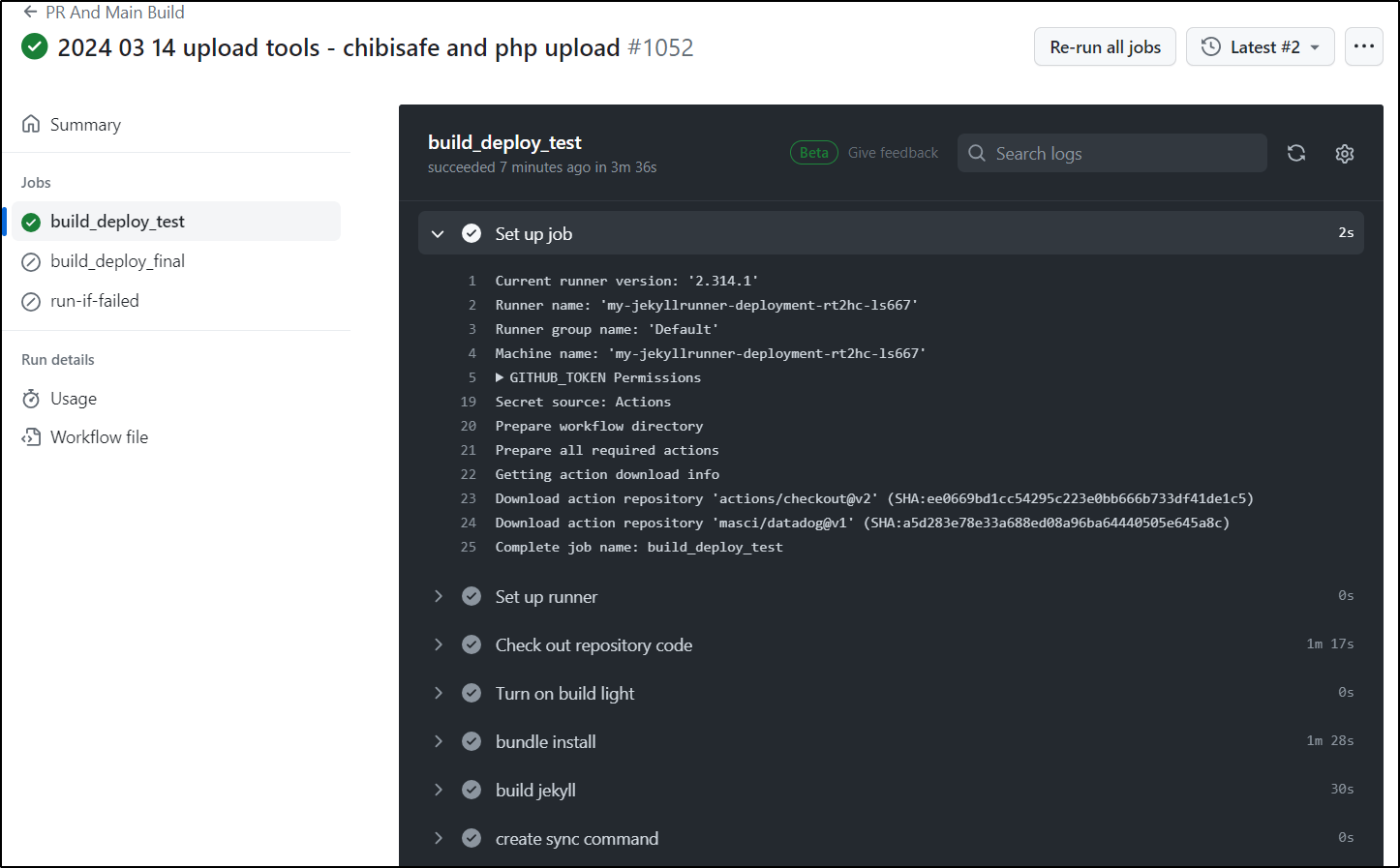

And I can see it working

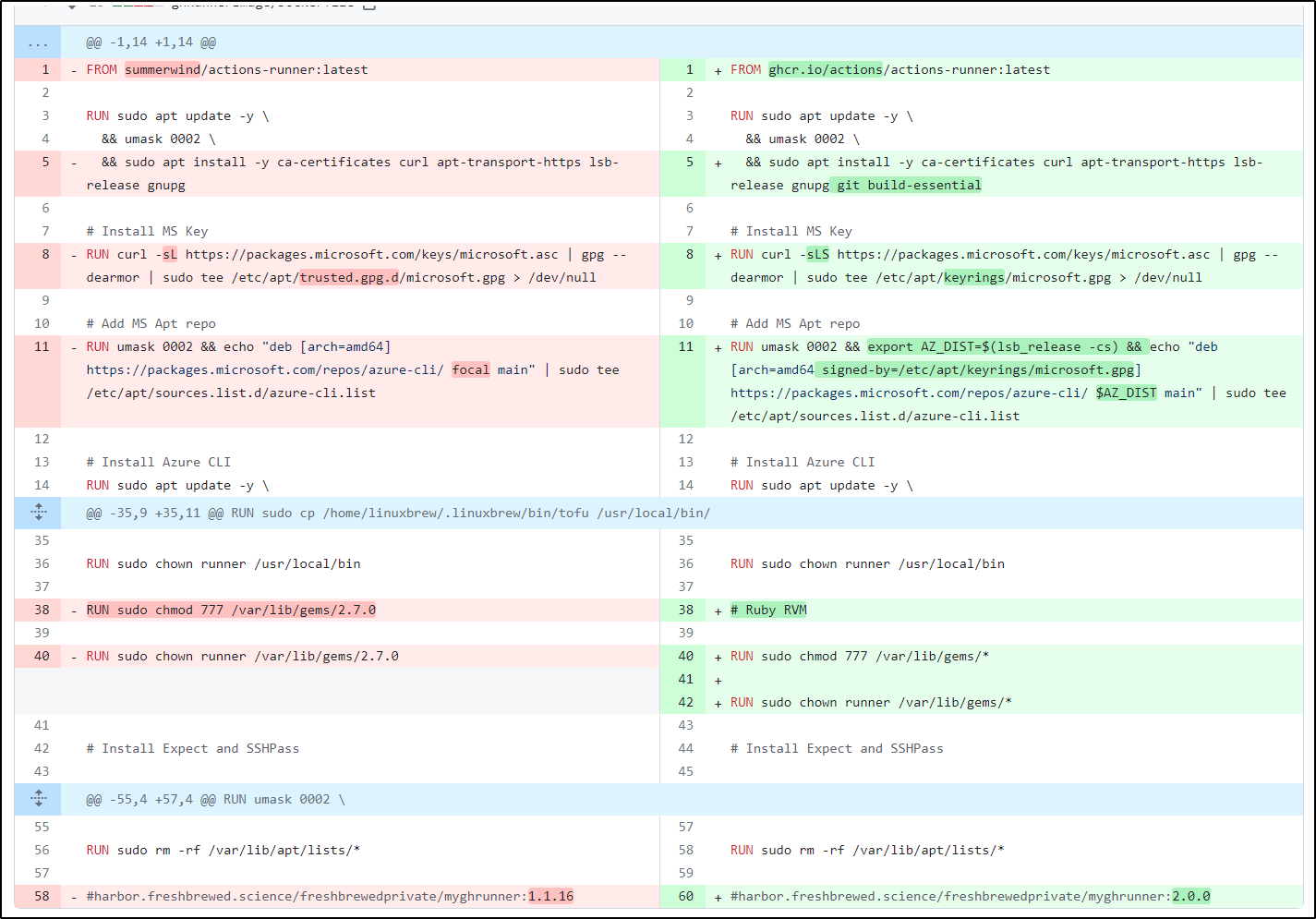
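To reason about the `PercentageRunnersBusy` settings in the autoscaler, here is a rough approximation of the arithmetic, assuming the controller works as its docs describe: at or above `scaleUpThreshold` busy, multiply the runner count by `scaleUpFactor` (rounding up); below `scaleDownThreshold`, multiply by `scaleDownFactor` (rounding down); otherwise keep the count; then clamp to `minReplicas`/`maxReplicas`. `desired_replicas` is a hypothetical helper, not controller code, and it ignores the scale-down delay:

```shell
# Hypothetical approximation of PercentageRunnersBusy scaling.
# $1 = busy runners, $2 = current runners; thresholds/factors/limits
# are the values from the HorizontalRunnerAutoscaler above.
desired_replicas() {
  awk -v busy="$1" -v cur="$2" \
      -v up=0.75 -v down=0.25 -v upf=2 -v downf=0.5 \
      -v min=1 -v max=3 'BEGIN {
    frac = (cur > 0) ? busy / cur : 0
    if (frac >= up) {
      d = cur * upf
      if (d > int(d)) d = int(d) + 1   # round up
    } else if (frac < down) {
      d = int(cur * downf)             # round down
    } else {
      d = cur                          # inside the band: no change
    }
    if (d < min) d = min
    if (d > max) d = max
    print d
  }'
}
```

With these values, one busy runner out of one gives `desired_replicas 1 1` = 2, while three idle runners give `desired_replicas 0 3` = 1.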

I tried using the new 2.0.0 image I use with the new ARC, but it failed with the old controller.

Azure DevOps

First, I added the Helm repo:

```bash
$ helm repo add nevertheless.space https://gitlab.com/api/v4/projects/24399618/packages/helm/stable
"nevertheless.space" has been added to your repositories
$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "spacelift" chart repository
...Successfully got an update from the "openfunction" chart repository
...Successfully got an update from the "backstage" chart repository
...Successfully got an update from the "actions-runner-controller" chart repository
...Successfully got an update from the "nevertheless.space" chart repository
...Successfully got an update from the "sonatype" chart repository
...Successfully got an update from the "gitea-charts" chart repository
...Successfully got an update from the "harbor" chart repository
...Successfully got an update from the "openproject" chart repository
...Successfully got an update from the "jetstack" chart repository
...Successfully got an update from the "datadog" chart repository
...Successfully got an update from the "frappe" chart repository
...Successfully got an update from the "ananace-charts" chart repository
...Successfully got an update from the "deliveryhero" chart repository
...Successfully got an update from the "bitnami" chart repository
Update Complete. ⎈Happy Helming!⎈
```

Then I set some values:

```bash
$ cat azdo.values.yaml
replicaCount: 1

azureDevOps:
  url: "https://dev.azure.com/princessking"
  pool: "K8s-onprem-int33"
  pat: "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"
  workingDir: "_work"

statefulSet:
  enabled: false
  storageClassName: "default"
  storageSize: "20Gi"

limits:
  enabled: false
  requestedMemory: "128Mi"
  requestedCPU: "250m"
  memoryLimit: "1Gi"
  CPULimit: "1"

nodeSelector:
  enabled: false
  key: "kubernetes.io/hostname"
  value: "eu-central-1.10.11.10.2"
```

Then I installed it with Helm:

```bash
$ helm install -f azdo.values.yaml --namespace="azdo" --create-namespace "azdo" nevertheless.space/azuredevops-agent --version="1.2.0"
NAME: azdo
LAST DEPLOYED: Mon Mar 4 20:47:56 2024
NAMESPACE: azdo
STATUS: deployed
REVISION: 1
TEST SUITE: None
```

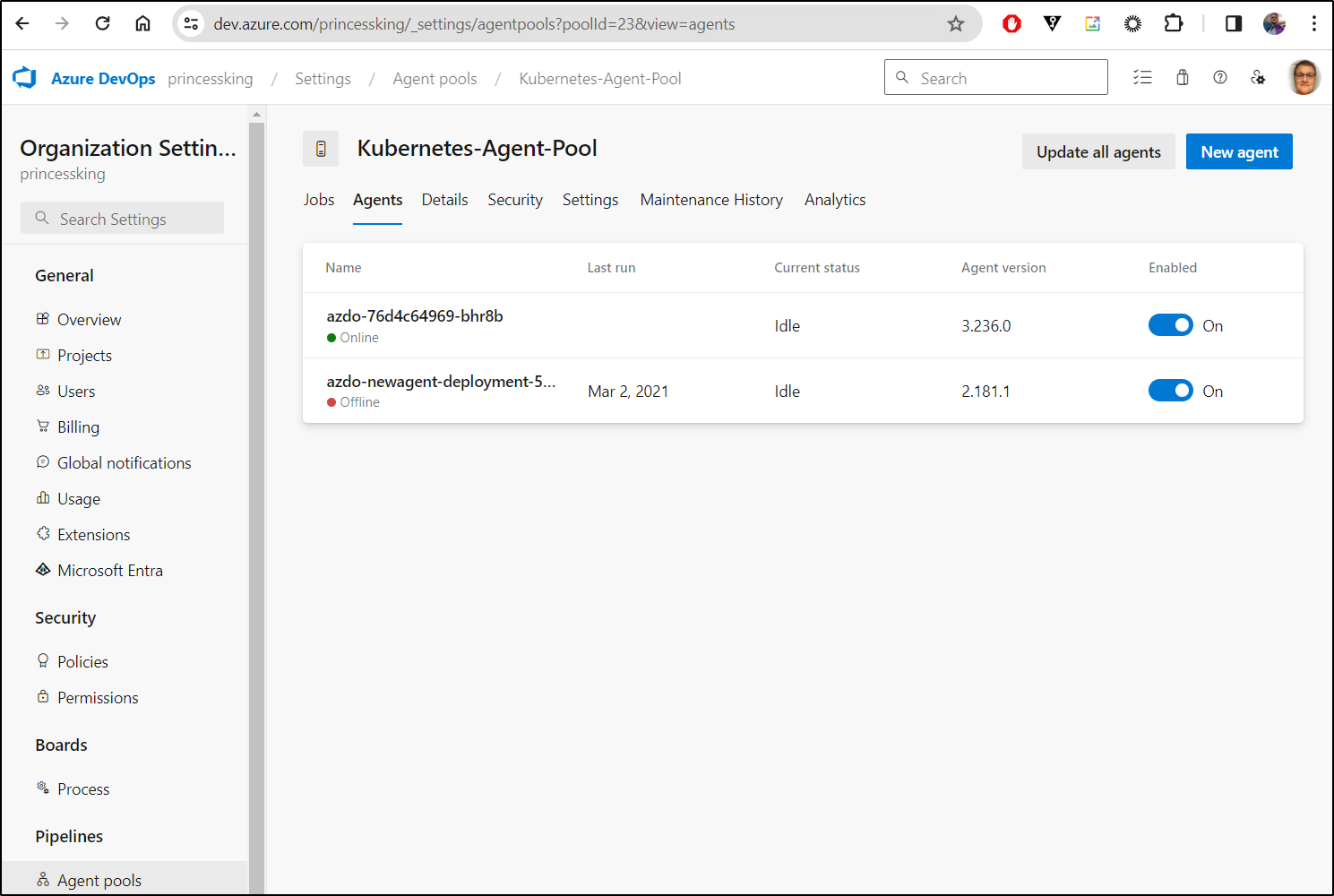

We can now see it running

```bash
$ kubectl get pods -n azdo
NAME                    READY   STATUS    RESTARTS   AGE
azdo-76d4c64969-bhr8b   1/1     Running   0          95s
```

and I can see it listed now

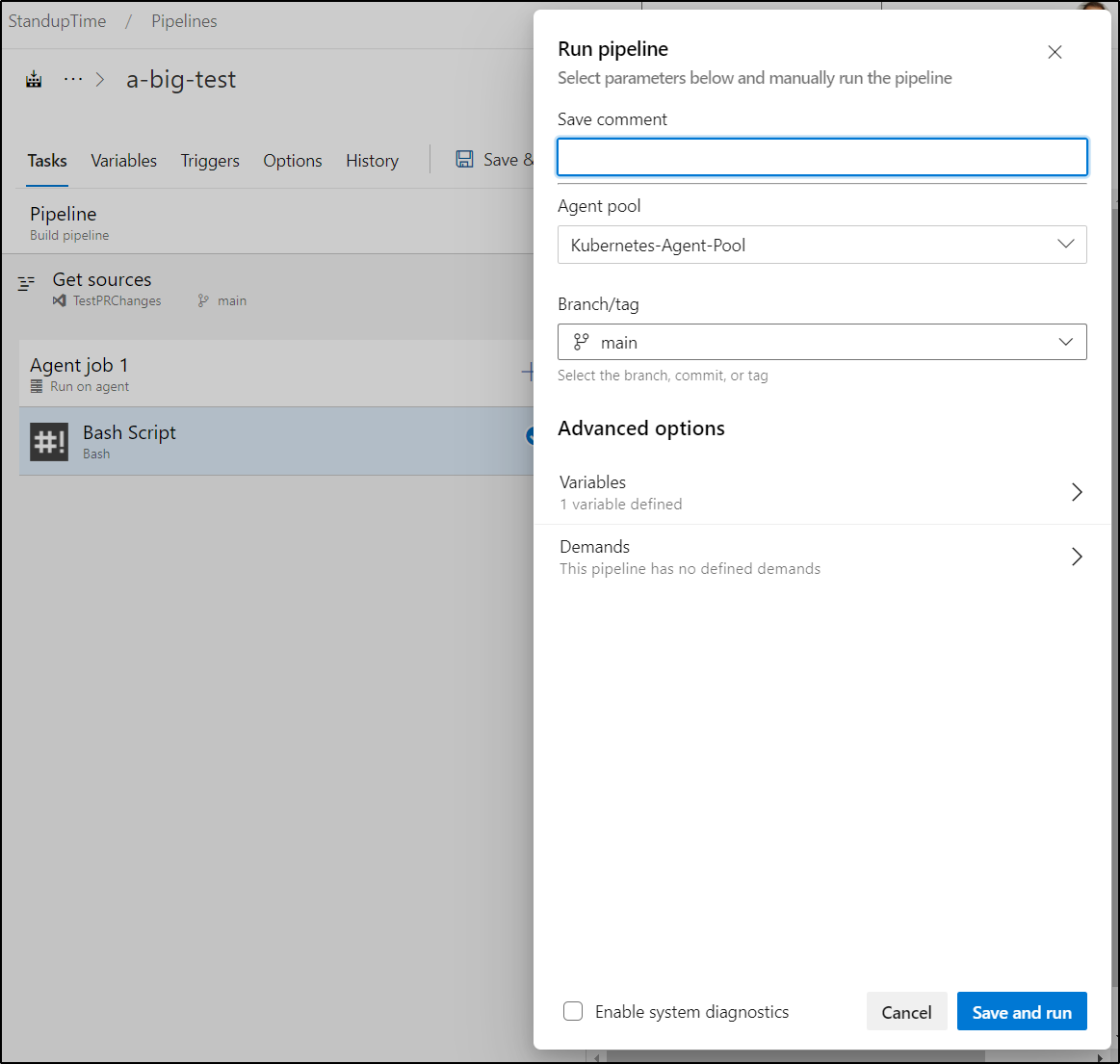

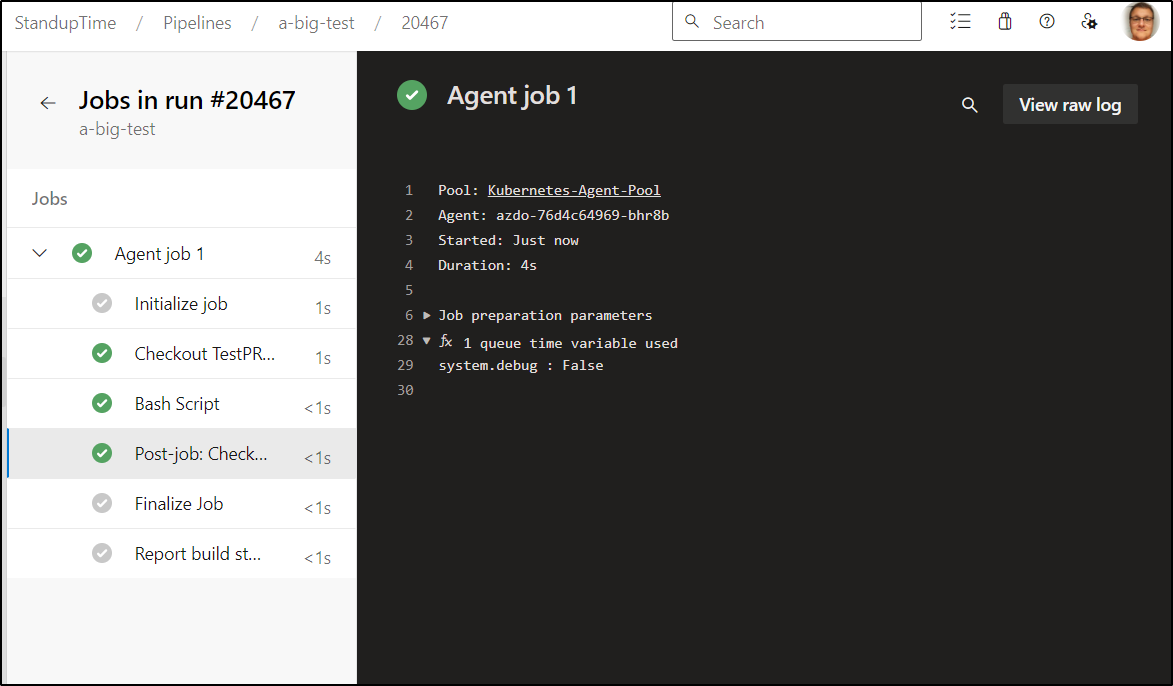

Now I can test it with a quick bash step.
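For reference, a minimal pipeline for that kind of test might look like the following. It is a sketch, assuming the repo's default branch is `main`; the pool name comes from the values file above, and `write_azdo_pipeline` is a hypothetical helper that just writes the file:

```shell
# Hypothetical helper: write a minimal azure-pipelines.yml into a directory.
write_azdo_pipeline() {
  dir=${1:-.}
  # Quoted 'EOF' keeps $(Agent.Name) literal, so Azure DevOps (not this
  # shell) expands it when the job runs on the agent.
  cat > "$dir/azure-pipelines.yml" <<'EOF'
trigger:
- main

pool:
  name: K8s-onprem-int33

steps:
- bash: |
    echo "Hello from agent $(Agent.Name)"
    uname -a
  displayName: Quick bash test
EOF
}
```

Running `write_azdo_pipeline .` at the root of a repo and committing the file is enough for Azure DevOps to pick it up when you create the pipeline.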

Forgejo Runner

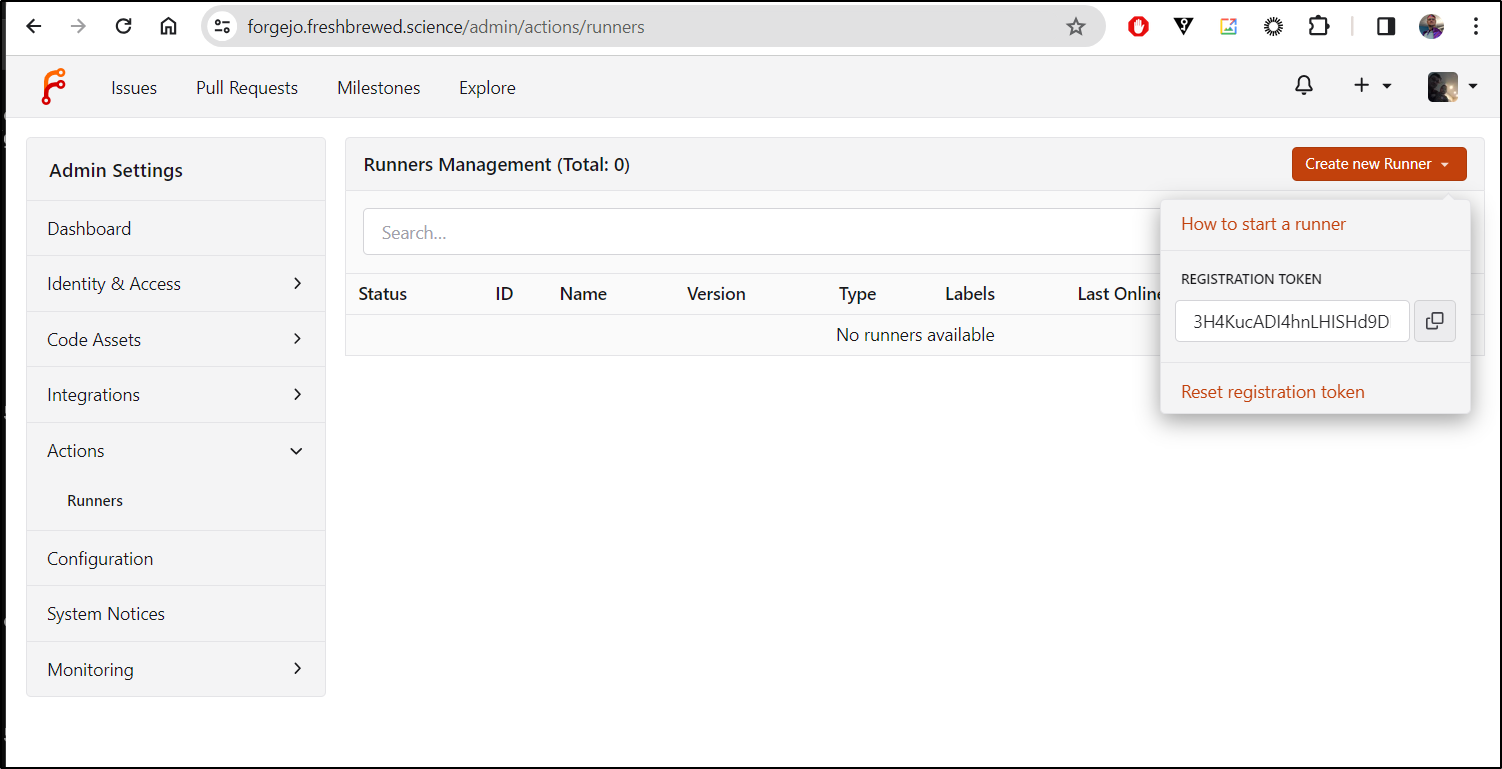

First, I need to create a runner token.

I can then pull the latest chart from Codeberg:

```bash
$ helm pull oci://codeberg.org/wrenix/helm-charts/forgejo-runner
Pulled: codeberg.org/wrenix/helm-charts/forgejo-runner:0.1.9
Digest: sha256:7d70a873ca26b312bafcbf24368e8db3f9fedebe2d2556357a42c550c09e6959
```

I can then install while passing the instance name, token and scaling details

```bash
$ helm install -n forgejo --create-namespace forgejo-runner-release \
  --set runner.config.instance='https://forgejo.freshbrewed.science' \
  --set runner.config.token=3H4KucADI4hnLHISHd9DR8VpPAmJo69jDTTezqnM \
  --set runner.config.name='int33-kubernetes' \
  --set autoscaling.minReplicas=1 \
  --set autoscaling.maxReplicas=3 \
  --set autoscaling.enabled=true \
  oci://codeberg.org/wrenix/helm-charts/forgejo-runner
```

This timed out


```bash
Pulled: codeberg.org/wrenix/helm-charts/forgejo-runner:0.1.9
Digest: sha256:7d70a873ca26b312bafcbf24368e8db3f9fedebe2d2556357a42c550c09e6959
Error: INSTALLATION FAILED: failed pre-install: 1 error occurred:
        * timed out waiting for the condition
```

However, I could see resources being created:

```bash
$ kubectl get pods -n forgejo
NAME                                           READY   STATUS              RESTARTS   AGE
forgejo-redis-cluster-1                        1/1     Running             0          26h
forgejo-redis-cluster-2                        1/1     Running             0          26h
forgejo-redis-cluster-0                        1/1     Running             0          26h
forgejo-556468bc5c-xhqt7                       1/1     Running             0          25h
forgejo-runner-release-config-generate-f4vzc   0/2     ContainerCreating   0          8m53s
```

The config-generate pod, though, was stuck:

```bash
Events:
  Type     Reason       Age               From               Message
  ----     ------       ----              ----               -------
  Normal   Scheduled    15s               default-scheduler  Successfully assigned forgejo/forgejo-runner-release-config-generate-ld8sq to hp-hp-elitebook-850-g2
  Warning  FailedMount  0s (x6 over 15s)  kubelet            MountVolume.SetUp failed for volume "runner-data" : secret "forgejo-runner-release-config" not found
```
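When a chart's pre-install hook hangs like this, it helps to pick out the pods that are not ready. A small awk filter over `kubectl get pods` output can do that. This is a sketch; `stuck_pods` is a hypothetical name, and it assumes the default column layout (NAME READY STATUS RESTARTS AGE):

```shell
# Hypothetical filter: print NAME and STATUS for pods that are not fully ready.
# Reads `kubectl get pods` output on stdin and skips the header row.
stuck_pods() {
  awk 'NR > 1 {
    if ($3 == "Completed") next      # finished Job pods are expected to be 0/N
    split($2, ready, "/")            # READY column, e.g. "0/2"
    if ($3 != "Running" || ready[1] != ready[2]) print $1, $3
  }'
}
```

Usage: `kubectl get pods -n forgejo | stuck_pods`, then `kubectl describe pod <name> -n forgejo` on anything it lists to see the events.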

Using a Gitea chart

```bash
$ helm repo add vquie https://vquie.github.io/helm-charts
"vquie" has been added to your repositories
$ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "vquie" chart repository
...Successfully got an update from the "actions-runner-controller" chart repository
...Successfully got an update from the "backstage" chart repository
...Successfully got an update from the "openfunction" chart repository
...Successfully got an update from the "spacelift" chart repository
...Successfully got an update from the "sonatype" chart repository
...Successfully got an update from the "nevertheless.space" chart repository
...Successfully got an update from the "openproject" chart repository
...Successfully got an update from the "jetstack" chart repository
...Successfully got an update from the "gitea-charts" chart repository
...Successfully got an update from the "harbor" chart repository
...Successfully got an update from the "datadog" chart repository
...Successfully got an update from the "frappe" chart repository
...Successfully got an update from the "ananace-charts" chart repository
...Successfully got an update from the "deliveryhero" chart repository
...Successfully got an update from the "bitnami" chart repository
Update Complete. ⎈Happy Helming!⎈
```

Then I installed the chart:

```bash
$ helm install act-runner -n forgejo \
  --set act_runner.storageclass="local-path" \
  --set act_runner.instance="https://forgejo.freshbrewed.science" \
  --set act_runner.token=3H4KucADI4hnLHISHd9DR8VpPAmJo69jDTTezqnM \
  vquie/act-runner
NAME: act-runner
LAST DEPLOYED: Mon Mar 4 21:40:18 2024
NAMESPACE: forgejo
STATUS: deployed
REVISION: 1
TEST SUITE: None
```

And I can see it running

```bash
$ kubectl get pods -n forgejo
NAME                                           READY   STATUS              RESTARTS   AGE
forgejo-runner-release-config-generate-ld8sq   0/2     ContainerCreating   0          21h
act-runner-f657f4cb-tq2bq                      2/2     Running             0          20h
forgejo-redis-cluster-0                        1/1     Running             0          12h
forgejo-redis-cluster-1                        1/1     Running             0          12h
forgejo-redis-cluster-2                        1/1     Running             0          12h
forgejo-798c6f4f4b-fxq6p                       1/1     Running             0          11h
```

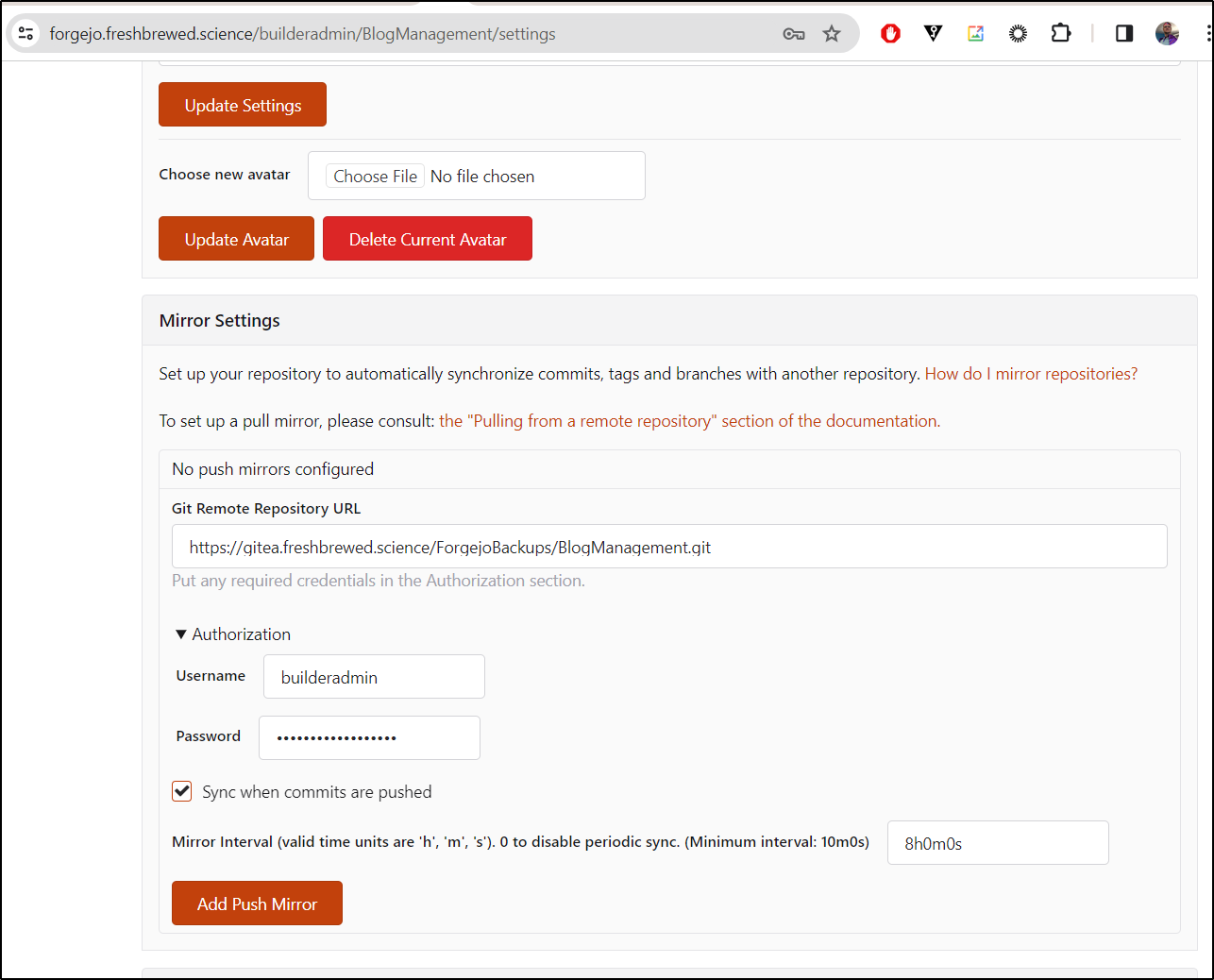

I have to admit I did something dumb. While testing, I mistakenly cleaned up what I thought was a bad Action Runner and managed to wipe out the whole Forgejo system. I had not made that many changes, but it was another illustration of why it’s important to sync to external systems for safety.

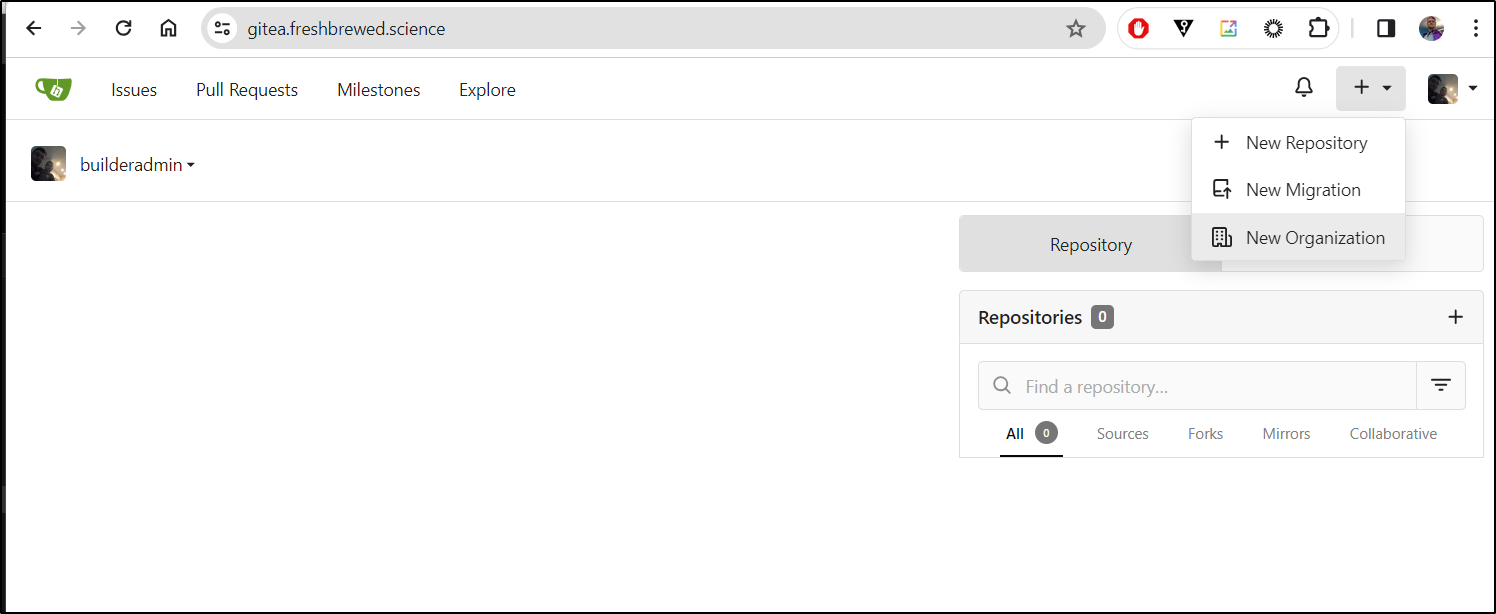

Gitea

This leads me to my respin of Gitea.

I had an active, albeit unused, Gitea instance on my Docker host (192.168.1.100).

I just needed to expose it with an external endpoint and an Ingress:

```bash
$ cat gitea.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: gitea-external-ip
  labels:
    app.kubernetes.io/instance: giteaingress
  name: giteaingress
spec:
  rules:
  - host: gitea.freshbrewed.science
    http:
      paths:
      - backend:
          service:
            name: gitea-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - gitea.freshbrewed.science
    secretName: gitea-tls
---
apiVersion: v1
kind: Service
metadata:
  annotations:
  name: gitea-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: giteap
    port: 80
    protocol: TCP
    targetPort: 4220
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  annotations:
  name: gitea-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: giteap
    port: 4220
    protocol: TCP

$ kubectl apply -f ./gitea.yaml
```
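The pattern here is a Service with no selector plus an Endpoints object with the same name, so Kubernetes routes traffic to the IP listed there instead of to pods. A parameterized sketch of that pattern, simplified to a single-stack ClusterIP Service rather than the dual-stack headless one above; `external_service` is a hypothetical helper:

```shell
# Hypothetical helper: emit a selector-less Service plus a matching Endpoints
# object that forwards cluster traffic on port 80 to an external host:port.
# $1 = name, $2 = external IP, $3 = external port
external_service() {
  name=$1 ip=$2 port=$3
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: $port
---
apiVersion: v1
kind: Endpoints
metadata:
  name: $name      # must match the Service name
subsets:
- addresses:
  - ip: $ip
  ports:
  - name: http     # must match the Service port name
    port: $port
    protocol: TCP
EOF
}
```

Usage: `external_service gitea-external-ip 192.168.1.100 4220 | kubectl apply -f -`. The two name matches are what tie the objects together, since there is no selector to do it.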

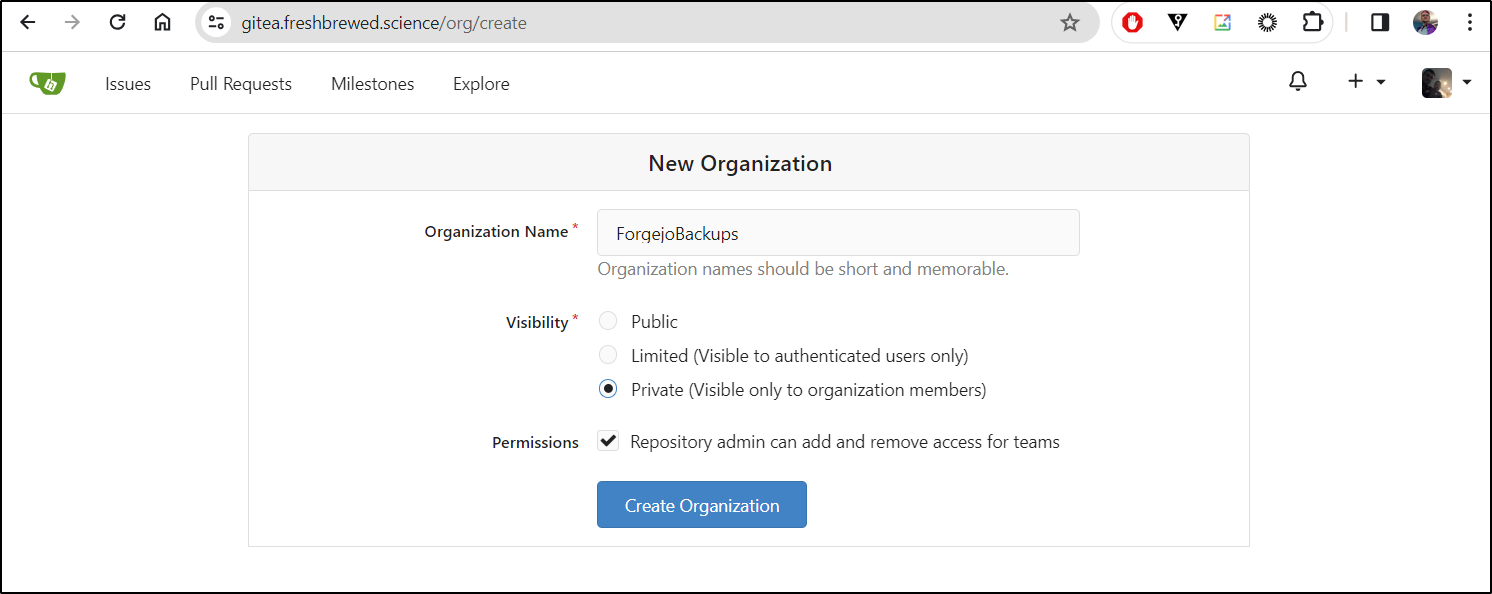

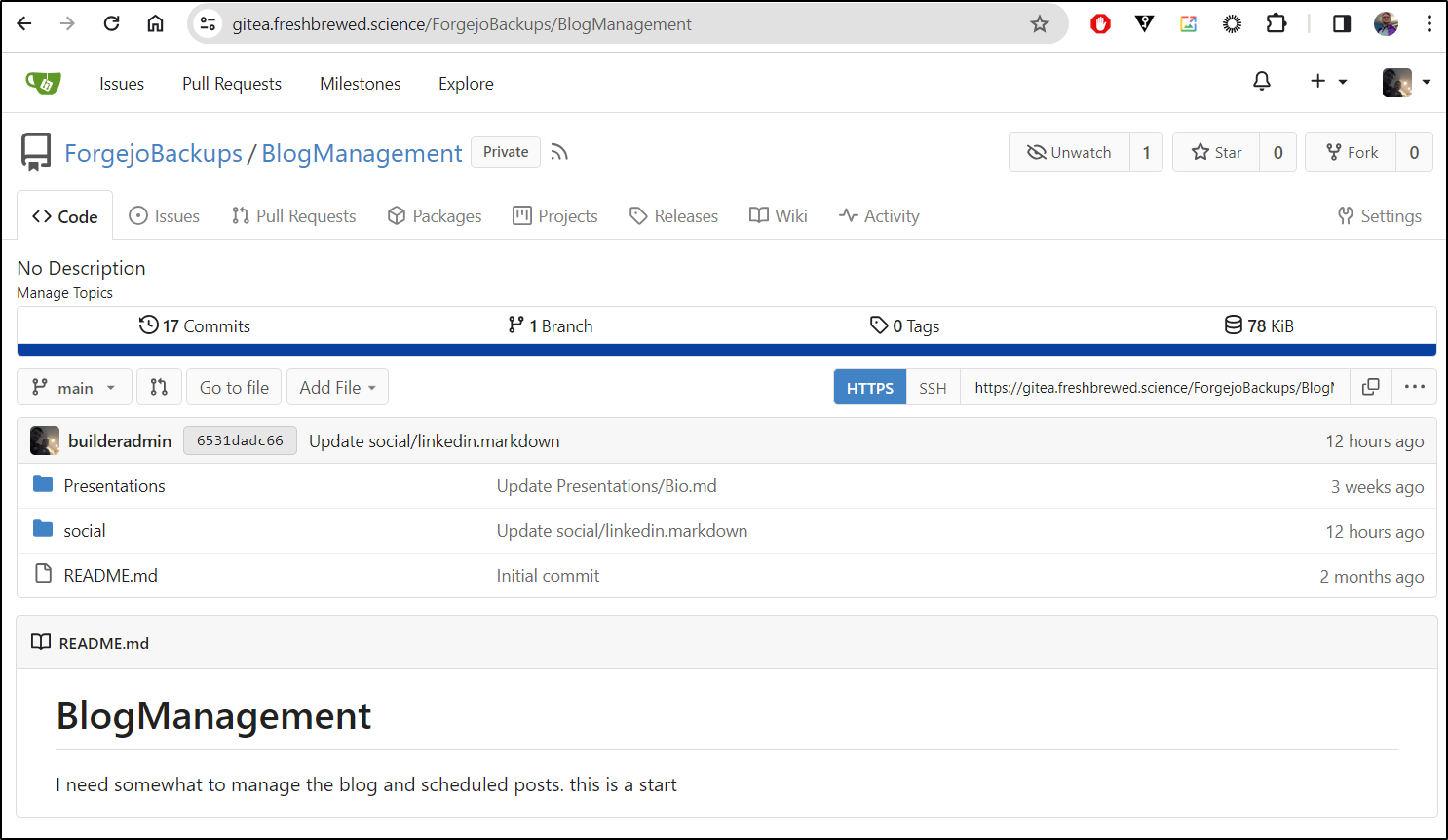

Next, I created a new Organization. This will be used for backups, so I called it ‘ForgejoBackups’.

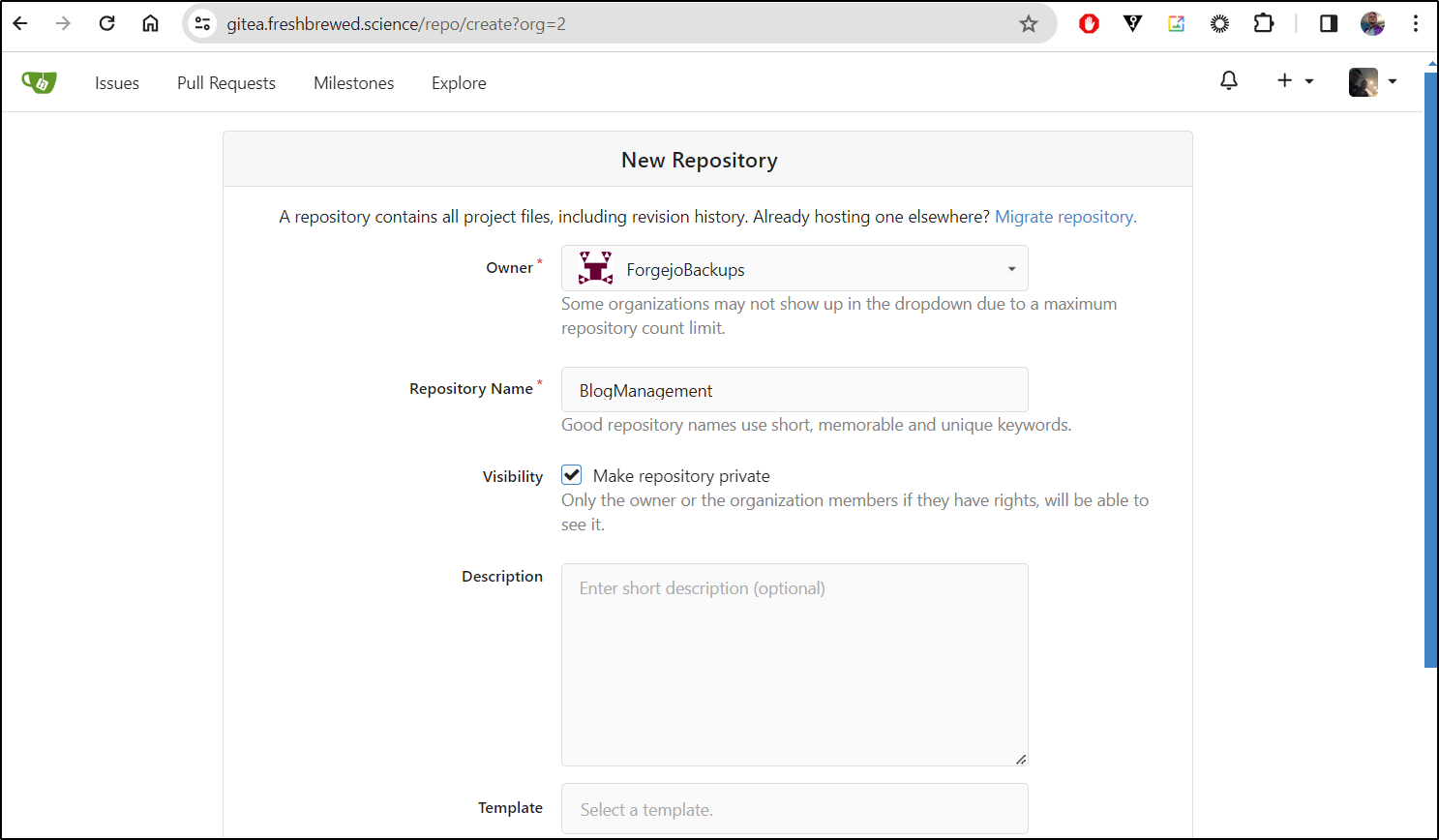

I then created the Repo

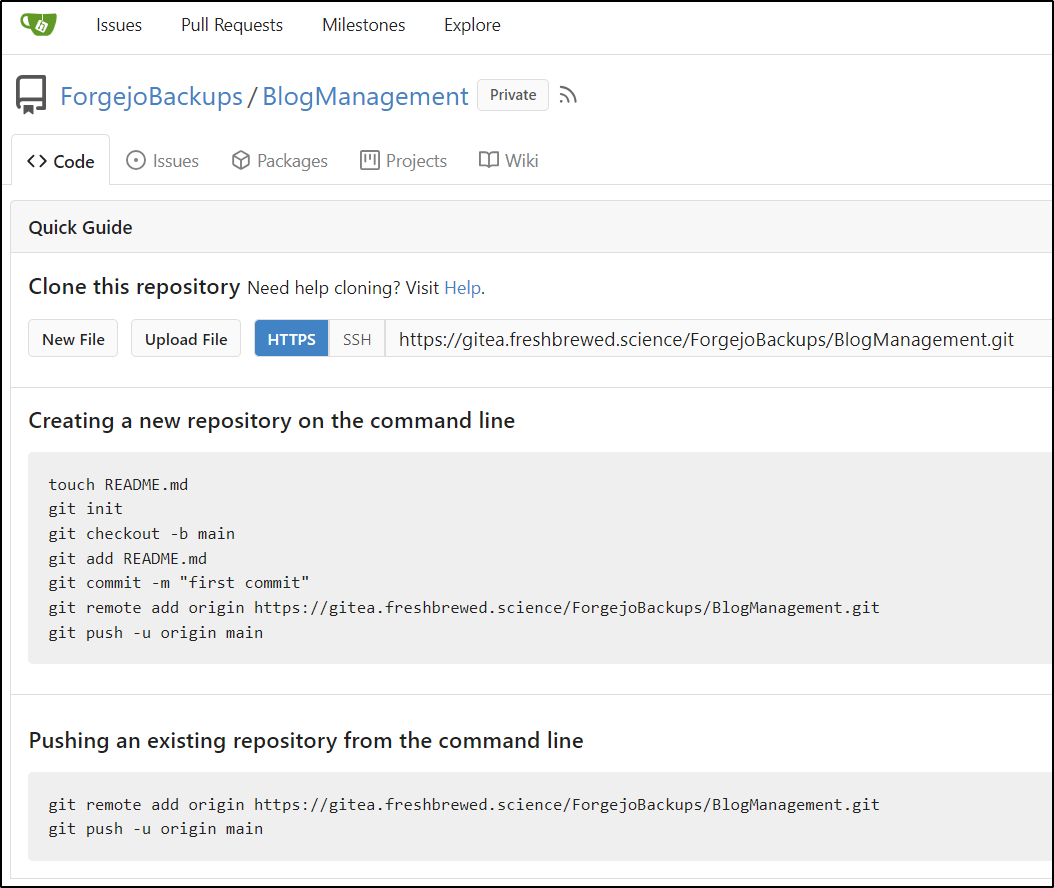

I now have a URL

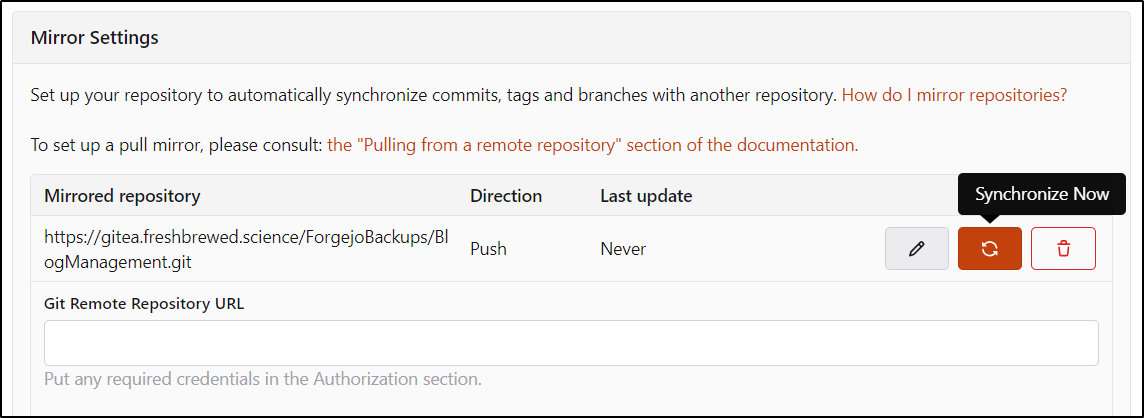

Now, back in Forgejo, I can set a remote mirror

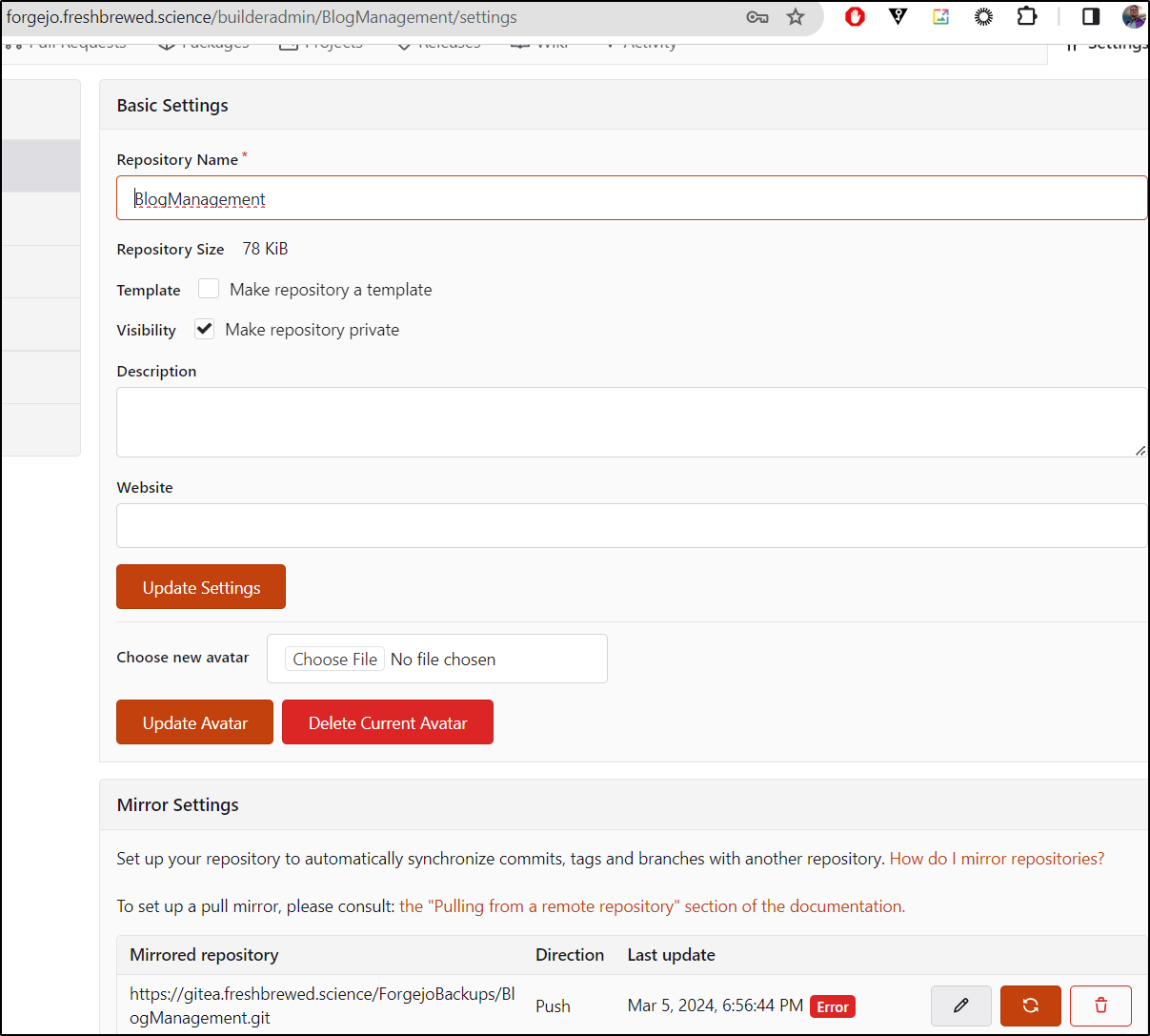

I then triggered a sync, and we can see it synced:

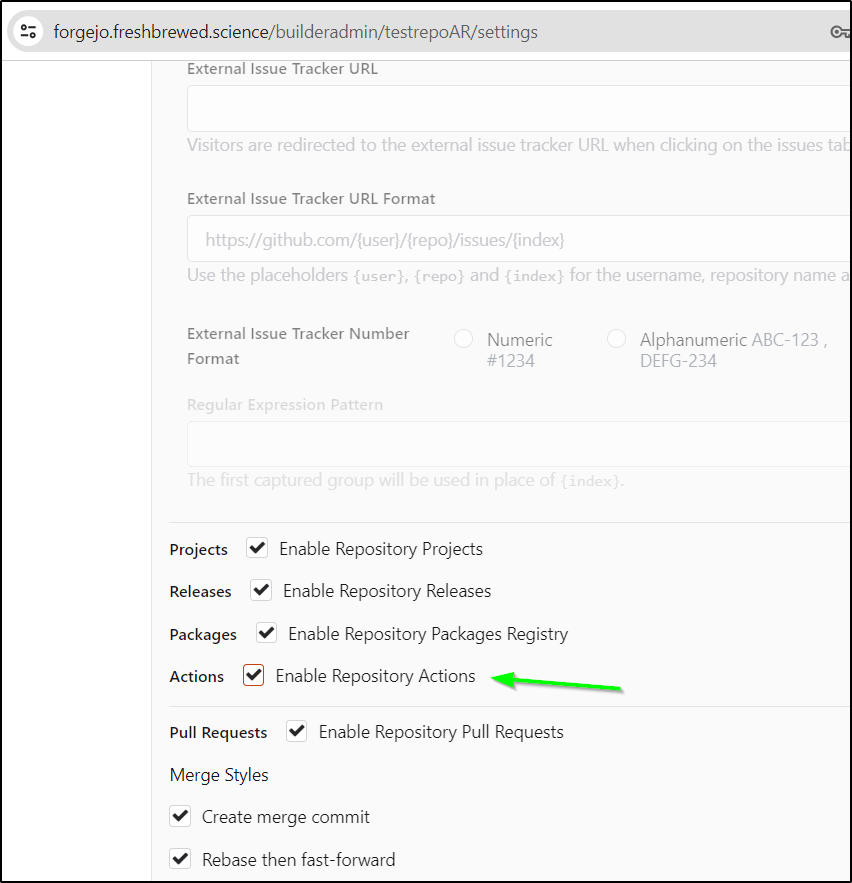
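Since the goal is to mirror every new repo, clicking through the UI each time gets tedious. Forgejo, like Gitea, exposes push mirrors through its API at `POST /api/v1/repos/{owner}/{repo}/push_mirrors` (check your version's `/api/swagger` page). A sketch, assuming an API token in a hypothetical `FORGEJO_TOKEN` variable and values without double quotes or backslashes; the 8-hour interval is an arbitrary choice, and both function names are hypothetical:

```shell
# Hypothetical helper: build the JSON body for a push mirror.
# $1 = remote repo URL, $2 = remote username, $3 = remote password/token
push_mirror_payload() {
  printf '{"remote_address":"%s","remote_username":"%s","remote_password":"%s","interval":"8h0m0s","sync_on_commit":true}\n' \
    "$1" "$2" "$3"
}

# Hypothetical helper: create the mirror on a Forgejo repo via the API.
# $1 = owner, $2 = repo, $3..$5 = same as push_mirror_payload
create_push_mirror() {
  push_mirror_payload "$3" "$4" "$5" |
    curl -sS -X POST \
      -H "Authorization: token $FORGEJO_TOKEN" \
      -H "Content-Type: application/json" \
      --data @- \
      "https://forgejo.freshbrewed.science/api/v1/repos/$1/$2/push_mirrors"
}
```

`curl --data @-` reads the request body from stdin, so the payload never lands in a temporary file.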

When testing Actions, make sure Actions are enabled on the test repo.

I added a quick workflow to test the runner:

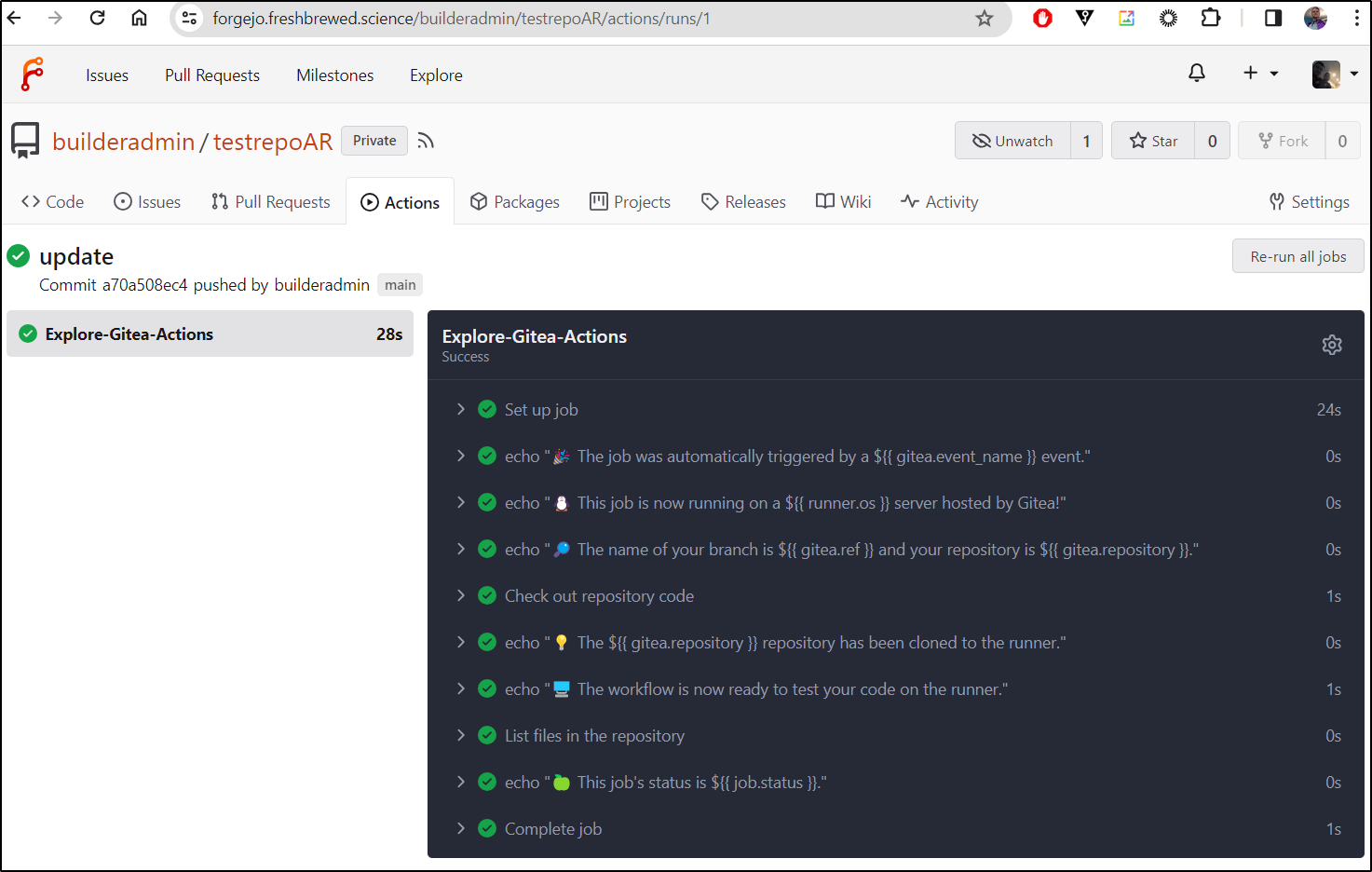

```bash
$ cat .gitea/workflows/demo.yaml
name: Gitea Actions Demo
run-name: ${{ gitea.actor }} is testing out Gitea Actions 🚀
on: [push]

jobs:
  Explore-Gitea-Actions:
    runs-on: ubuntu-latest
    steps:
      - run: echo "🎉 The job was automatically triggered by a ${{ gitea.event_name }} event."
      - run: echo "🐧 This job is now running on a ${{ runner.os }} server hosted by Gitea!"
      - run: echo "🔎 The name of your branch is ${{ gitea.ref }} and your repository is ${{ gitea.repository }}."
      - name: Check out repository code
        uses: actions/checkout@v3
      - run: echo "💡 The ${{ gitea.repository }} repository has been cloned to the runner."
      - run: echo "🖥️ The workflow is now ready to test your code on the runner."
      - name: List files in the repository
        run: |
          ls ${{ gitea.workspace }}
      - run: echo "🍏 This job's status is ${{ job.status }}."
```

Then I pushed it up:

```bash
builder@LuiGi17:~/Workspaces/testrepoAR$ vi .gitea/workflows/demo.yaml
builder@LuiGi17:~/Workspaces/testrepoAR$ git status
On branch main
Your branch is up to date with 'origin/main'.

Untracked files:
  (use "git add <file>..." to include in what will be committed)
        .gitea/

nothing added to commit but untracked files present (use "git add" to track)
builder@LuiGi17:~/Workspaces/testrepoAR$ git add .gitea/
builder@LuiGi17:~/Workspaces/testrepoAR$ git status
On branch main
Your branch is up to date with 'origin/main'.

Changes to be committed:
  (use "git restore --staged <file>..." to unstage)
        new file:   .gitea/workflows/demo.yaml

builder@LuiGi17:~/Workspaces/testrepoAR$ git commit -m update
[main a70a508] update
 1 file changed, 19 insertions(+)
 create mode 100644 .gitea/workflows/demo.yaml
builder@LuiGi17:~/Workspaces/testrepoAR$ git push
Enumerating objects: 6, done.
Counting objects: 100% (6/6), done.
Delta compression using up to 16 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (5/5), 795 bytes | 795.00 KiB/s, done.
Total 5 (delta 0), reused 0 (delta 0), pack-reused 0
remote: . Processing 1 references
remote: Processed 1 references in total
To https://forgejo.freshbrewed.science/builderadmin/testrepoAR.git
   0ceb65b..a70a508  main -> main
```

And it worked.

Summary

We covered a lot in this writeup, including GitHub runners, Azure DevOps agents, and the restoration of Forgejo. We also forwarded traffic to a containerized Gitea. After learning a lesson about running kubectl delete when tired, I set up push mirrors to the containerized Gitea for all new repos. Lastly, I did a quick test of Gitea Actions.