Published: May 22, 2025 by Isaac Johnson

Today we are going to dig into two very cool open-source containerized apps. The first, and much the smaller of the two, is Airstation, a very simple way to fire up a containerized app that serves a stream of music (or anything in MP3 form: audiobooks, podcasts, sermons, etc.).

The other app we’ll explore is much bigger: Nextcloud, which has many ways to install and configure. We’ll look at a few, including Kubernetes with Helm. I’ll cover some of the basics as well as mobile app usage, and wrap with some notes on costs and hosting options.

Let’s get some tunes going first…

Airstation

I saw a Marius Hosting article on Airstation recently and wanted to check it out.

We can see Airstation on GitHub, along with the Installation Docs.

We can see the docker-compose file in the GitHub repo:

builder@DESKTOP-QADGF36:~/Workspaces$ git clone https://github.com/cheatsnake/airstation.git

Cloning into 'airstation'...

remote: Enumerating objects: 1816, done.

remote: Counting objects: 100% (253/253), done.

remote: Compressing objects: 100% (165/165), done.

remote: Total 1816 (delta 110), reused 197 (delta 65), pack-reused 1563 (from 1)

Receiving objects: 100% (1816/1816), 2.89 MiB | 11.10 MiB/s, done.

Resolving deltas: 100% (1013/1013), done.

builder@DESKTOP-QADGF36:~/Workspaces$ cd airstation/

builder@DESKTOP-QADGF36:~/Workspaces/airstation$ cat docker-compose.yml

services:
  app:
    build:
      context: .
      dockerfile: Dockerfile
      args:
        - AIRSTATION_PLAYER_TITLE=${AIRSTATION_PLAYER_TITLE}
    ports:
      - "7331:7331"
    volumes:
      - database:/app/storage
      - ./static:/app/static
    restart: unless-stopped
    env_file:
      - .env

volumes:
  database:

Let’s now create some random strings for the two secrets in the .env file

builder@DESKTOP-QADGF36:~/Workspaces/airstation$ cat .env

AIRSTATION_SECRET_KEY=lzJtFwSMQzUKdBmAmq6b

AIRSTATION_JWT_SIGN=LUzCDDM5X2kMjrKvOHoclku
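If you would rather not hand-type those values, a small sketch like this could generate them. The two variable names come from the .env above; the `rand` helper, the lengths, and the use of /dev/urandom are just assumptions for illustration, not anything Airstation requires.

```shell
#!/bin/sh
# Sketch: write a .env with two random alphanumeric secrets.
# Assumption: only these two variables are needed; the lengths are arbitrary.

# rand N: print N random characters drawn from A-Z, a-z, 0-9
rand() {
  # 96 random bytes -> base64 text -> drop non-alphanumerics -> keep the first N characters
  head -c 96 /dev/urandom | base64 | tr -dc 'A-Za-z0-9' | head -c "$1"
}

umask 077   # files created from here on are readable by the owner only

cat > .env <<EOF
AIRSTATION_SECRET_KEY=$(rand 20)
AIRSTATION_JWT_SIGN=$(rand 23)
EOF
```

Run it from the airstation checkout, before `docker compose up -d`, so the file lands next to docker-compose.yml.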

Then we can fire up docker compose

builder@DESKTOP-QADGF36:~/Workspaces/airstation$ docker compose up -d

WARN[0000] The "AIRSTATION_PLAYER_TITLE" variable is not set. Defaulting to a blank string.

Compose can now delegate builds to bake for better performance.

To do so, set COMPOSE_BAKE=true.

[+] Building 45.8s (34/34) FINISHED docker:default

=> [app internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 830B 0.0s

=> [app internal] load metadata for docker.io/library/node:22-alpine 1.4s

=> [app internal] load metadata for docker.io/library/golang:1.24-alpine 1.4s

=> [app internal] load metadata for docker.io/library/alpine:latest 1.4s

=> [app auth] library/golang:pull token for registry-1.docker.io 0.0s

=> [app auth] library/alpine:pull token for registry-1.docker.io 0.0s

=> [app auth] library/node:pull token for registry-1.docker.io 0.0s

=> [app internal] load .dockerignore 0.0s

=> => transferring context: 85B 0.0s

=> [app server 1/7] FROM docker.io/library/golang:1.24-alpine@sha256:ef18ee7117463ac1055f5a370ed18b8750f01589f13ea0b48642f5792b234044 17.6s

=> => resolve docker.io/library/golang:1.24-alpine@sha256:ef18ee7117463ac1055f5a370ed18b8750f01589f13ea0b48642f5792b234044 0.0s

=> => sha256:ef18ee7117463ac1055f5a370ed18b8750f01589f13ea0b48642f5792b234044 10.29kB / 10.29kB 0.0s

=> => sha256:be1cf73ca9fbe9c5108691405b627cf68b654fb6838a17bc1e95cc48593e70da 1.92kB / 1.92kB 0.0s

=> => sha256:68d4da47fd566b8a125df912bff4ab337355b1f6b2c478f5e8593cb98b4d1563 2.08kB / 2.08kB 0.0s

=> => sha256:bcde94e77dfab30cceb8ba9b43d3c7ac5efb03bcd79b63cf02b60a0c23261582 294.91kB / 294.91kB 0.4s

=> => extracting sha256:bcde94e77dfab30cceb8ba9b43d3c7ac5efb03bcd79b63cf02b60a0c23261582 0.2s

=> => sha256:92b00dc8dfbaa6cd7e39d09d4f1c726259b4d9a29c697192955da032f472d642 78.98MB / 78.98MB 4.5s

=> => sha256:9b664c7c39c20f5e319a449219b9641bd2fdf7325727a3f69abe1e81bf0f726a 126B / 126B 0.7s

=> => sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 32B / 32B 0.8s

=> => extracting sha256:92b00dc8dfbaa6cd7e39d09d4f1c726259b4d9a29c697192955da032f472d642 12.3s

=> => extracting sha256:9b664c7c39c20f5e319a449219b9641bd2fdf7325727a3f69abe1e81bf0f726a 0.0s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.0s

=> [app stage-3 1/6] FROM docker.io/library/alpine:latest@sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88c 0.1s

=> => resolve docker.io/library/alpine:latest@sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88c 0.0s

=> => sha256:1c4eef651f65e2f7daee7ee785882ac164b02b78fb74503052a26dc061c90474 1.02kB / 1.02kB 0.0s

=> => sha256:aded1e1a5b3705116fa0a92ba074a5e0b0031647d9c315983ccba2ee5428ec8b 581B / 581B 0.0s

=> => sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88c 9.22kB / 9.22kB 0.0s

=> [app internal] load build context 0.1s

=> => transferring context: 764.21kB 0.1s

=> [app studio 1/6] FROM docker.io/library/node:22-alpine@sha256:152270cd4bd094d216a84cbc3c5eb1791afb05af00b811e2f0f04bdc6c473602 7.8s

=> => resolve docker.io/library/node:22-alpine@sha256:152270cd4bd094d216a84cbc3c5eb1791afb05af00b811e2f0f04bdc6c473602 0.0s

=> => sha256:97c5ed51c64a35c1695315012fd56021ad6b3135a30b6a82a84b414fd6f65851 6.21kB / 6.21kB 0.0s

=> => sha256:f3bde9621711f54e446f593ab1fb00d66aad452bb83a5417a77c5b37bbdb4874 50.42MB / 50.42MB 3.1s

=> => sha256:e7db676aa884bcad4c892a937041d52418dd738c1e0a8347868c9a3f996a9b6a 1.26MB / 1.26MB 0.4s

=> => sha256:28e4c8cb54c6966a37f792aa8b3fe9b8b4e6957eb2957dac0c99ade5fb2350b2 447B / 447B 0.3s

=> => sha256:152270cd4bd094d216a84cbc3c5eb1791afb05af00b811e2f0f04bdc6c473602 6.41kB / 6.41kB 0.0s

=> => sha256:d1068d8b737ffed2b8e9d0e9313177a2e2786c36780c5467ac818232e603ccd0 1.72kB / 1.72kB 0.0s

=> => extracting sha256:f3bde9621711f54e446f593ab1fb00d66aad452bb83a5417a77c5b37bbdb4874 4.2s

=> => extracting sha256:e7db676aa884bcad4c892a937041d52418dd738c1e0a8347868c9a3f996a9b6a 0.1s

=> => extracting sha256:28e4c8cb54c6966a37f792aa8b3fe9b8b4e6957eb2957dac0c99ade5fb2350b2 0.0s

=> [app stage-3 2/6] WORKDIR /app 0.1s

=> [app stage-3 3/6] RUN apk add --no-cache ffmpeg 8.8s

=> [app studio 2/6] WORKDIR /app 1.1s

=> [app player 3/6] COPY ./web/player/package*.json ./ 0.1s

=> [app studio 3/6] COPY ./web/studio/package*.json ./ 0.1s

=> [app player 4/6] RUN npm install 10.7s

=> [app studio 4/6] RUN npm install 13.6s

=> [app server 2/7] WORKDIR /app 1.3s

=> [app server 3/7] COPY go.mod go.sum ./ 0.1s

=> [app server 4/7] RUN go mod download 8.1s

=> [app player 5/6] COPY ./web/player . 0.0s

=> [app player 6/6] RUN npm run build 11.0s

=> [app studio 5/6] COPY ./web/studio . 0.1s

=> [app studio 6/6] RUN npm run build 16.2s

=> [app server 5/7] COPY cmd/ ./cmd/ 0.0s

=> [app server 6/7] COPY internal/ ./internal/ 0.0s

=> [app server 7/7] RUN CGO_ENABLED=0 GOOS=linux go build -ldflags="-s -w" -o /app/bin/main ./cmd/main.go 15.8s

=> [app stage-3 4/6] COPY --from=server /app/bin/main . 0.1s

=> [app stage-3 5/6] COPY --from=player /app/dist ./web/player/dist 0.0s

=> [app stage-3 6/6] COPY --from=studio /app/dist ./web/studio/dist 0.0s

=> [app] exporting to image 0.9s

=> => exporting layers 0.9s

=> => writing image sha256:905fd2440aae135199581fb7a9b22c18e2621b5688e74a3e573dc913fc45dc6e 0.0s

=> => naming to docker.io/library/airstation-app 0.0s

=> [app] resolving provenance for metadata file 0.0s

[+] Running 4/4

✔ app Built 0.0s

✔ Network airstation_default Created 0.1s

✔ Volume "airstation_database" Created 0.0s

✔ Container airstation-app-1 Started

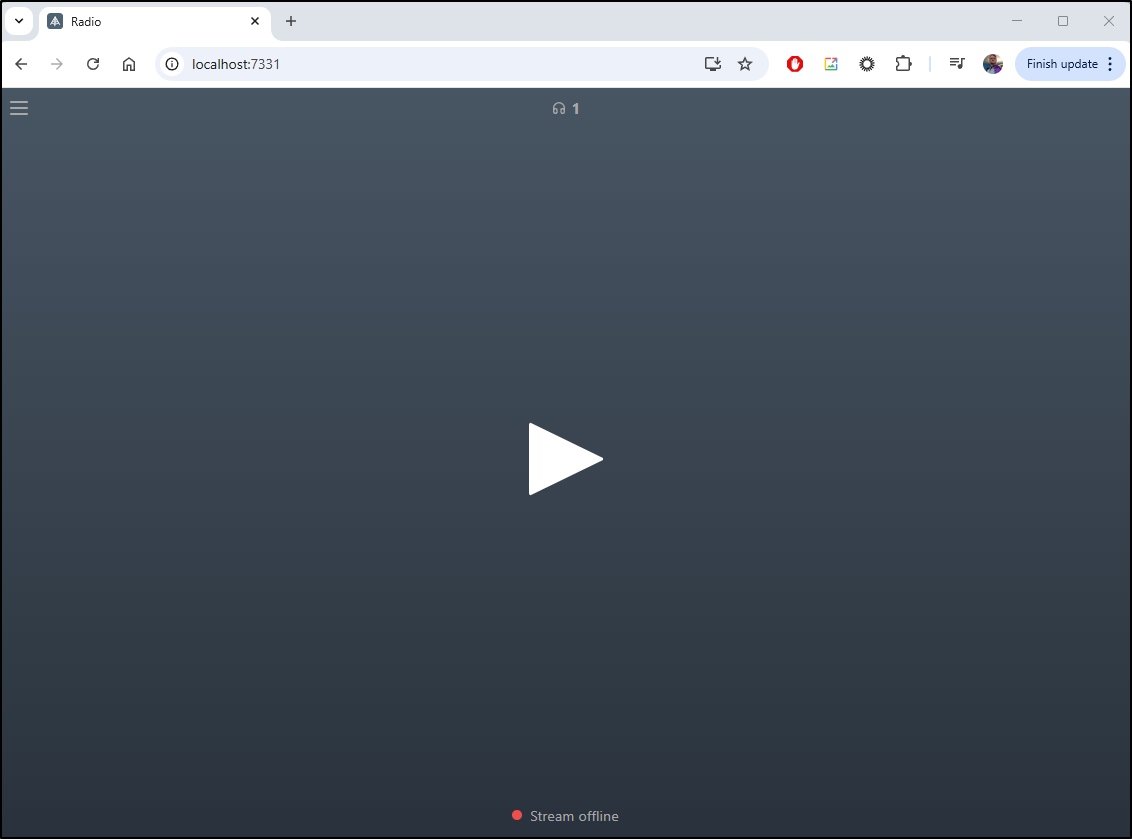

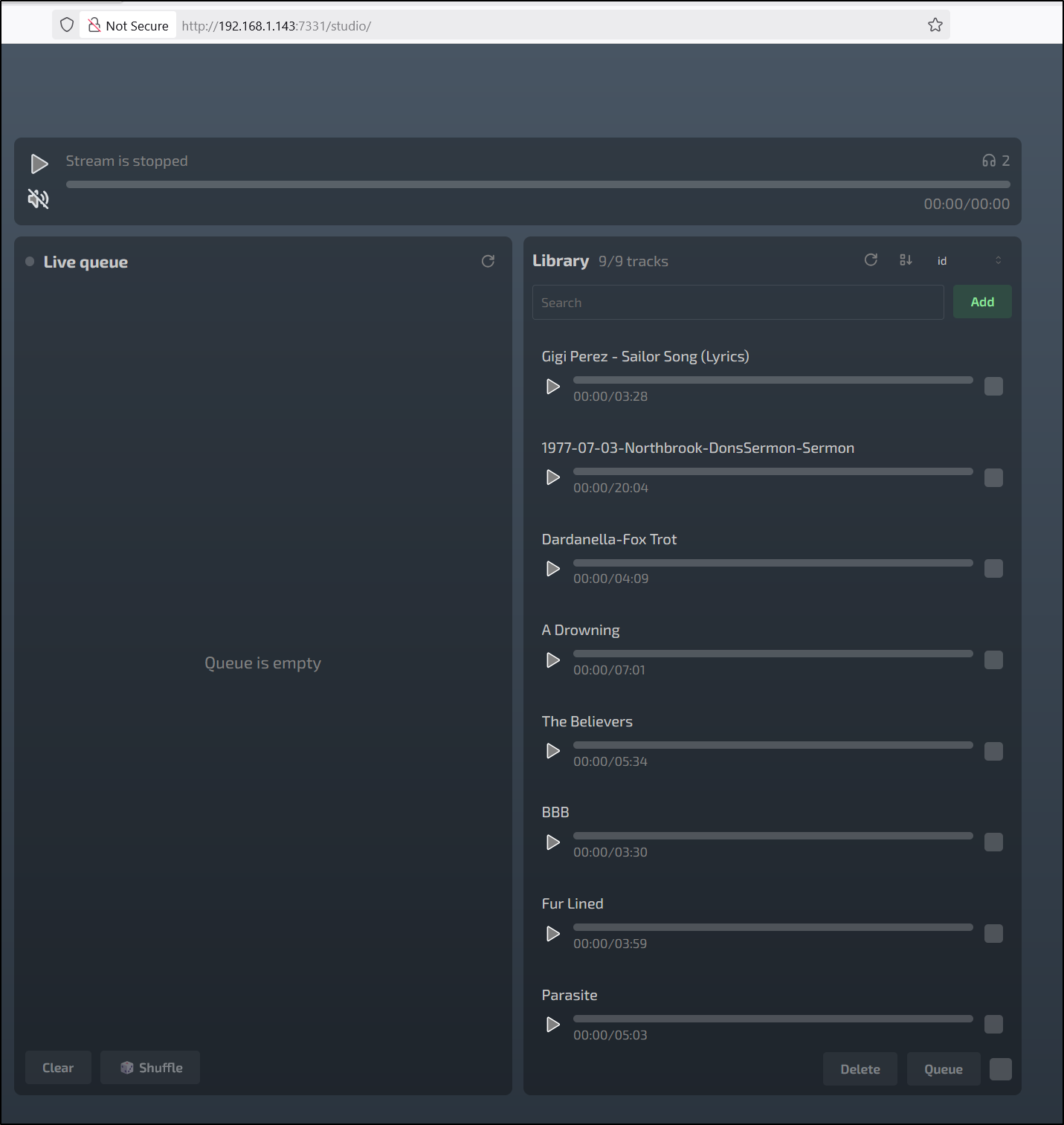

I can now access it on port 7331

I’ll stop it

builder@DESKTOP-QADGF36:~/Workspaces/airstation$ docker stop airstation-app-1

airstation-app-1

builder@DESKTOP-QADGF36:~/Workspaces/airstation$ docker rm airstation-app-1

airstation-app-1

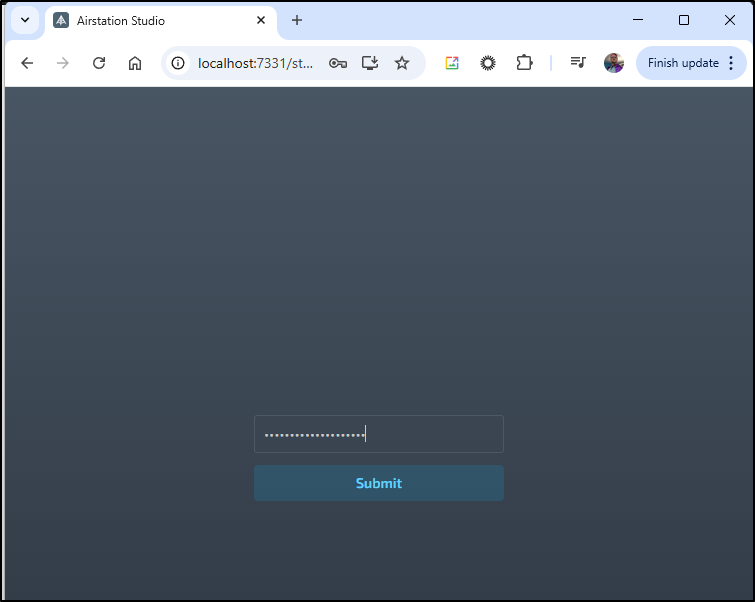

We can log in to the admin page

I added some MP3s (from an album I bought on vinyl, which is also on Bandcamp, by the way)

builder@DESKTOP-QADGF36:~/Workspaces/airstation$ cp /mnt/c/Users/isaac/MP3/*.mp3 ./static/tracks/

cp: cannot create regular file './static/tracks/01-01 KAZU Salty.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-02 KAZU Come_Behind_Me_So_Good.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-03 KAZU Meo.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-04 KAZU Adult_Baby.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-05 KAZU Place_of_Birth.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-06 KAZU Name_and_Age.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-07 KAZU Unsure_in_Waves.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-08 KAZU Undo.mp3': Permission denied

cp: cannot create regular file './static/tracks/01-09 KAZU Coyote.mp3': Permission denied

builder@DESKTOP-QADGF36:~/Workspaces/airstation$ sudo cp /mnt/c/Users/isaac/MP3/*.mp3 ./static/tracks/

[sudo] password for builder:
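My guess at the cause of those Permission denied errors is that ./static/tracks ended up owned by root, since Docker usually creates missing bind-mount directories as root. That is an assumption, not something I confirmed. A quick check like this sketch (the helper name is made up) would tell you before copying whether sudo or a chown is needed.

```shell
#!/bin/sh
# ensure_writable_dir DIR: create DIR if it is missing, then report whether
# the current user can write to it. Returns non-zero when it is not writable.
ensure_writable_dir() {
  dir=$1
  mkdir -p "$dir" 2>/dev/null
  if [ -d "$dir" ] && [ -w "$dir" ]; then
    echo "ok: $dir is writable"
  else
    echo "not writable: $dir (owned by another user?)" >&2
    return 1
  fi
}
```

For example, `ensure_writable_dir ./static/tracks && cp /mnt/c/Users/isaac/MP3/*.mp3 ./static/tracks/`. If the check fails, `sudo chown -R "$(id -un)" ./static` is one way to take ownership back instead of using sudo for every copy.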

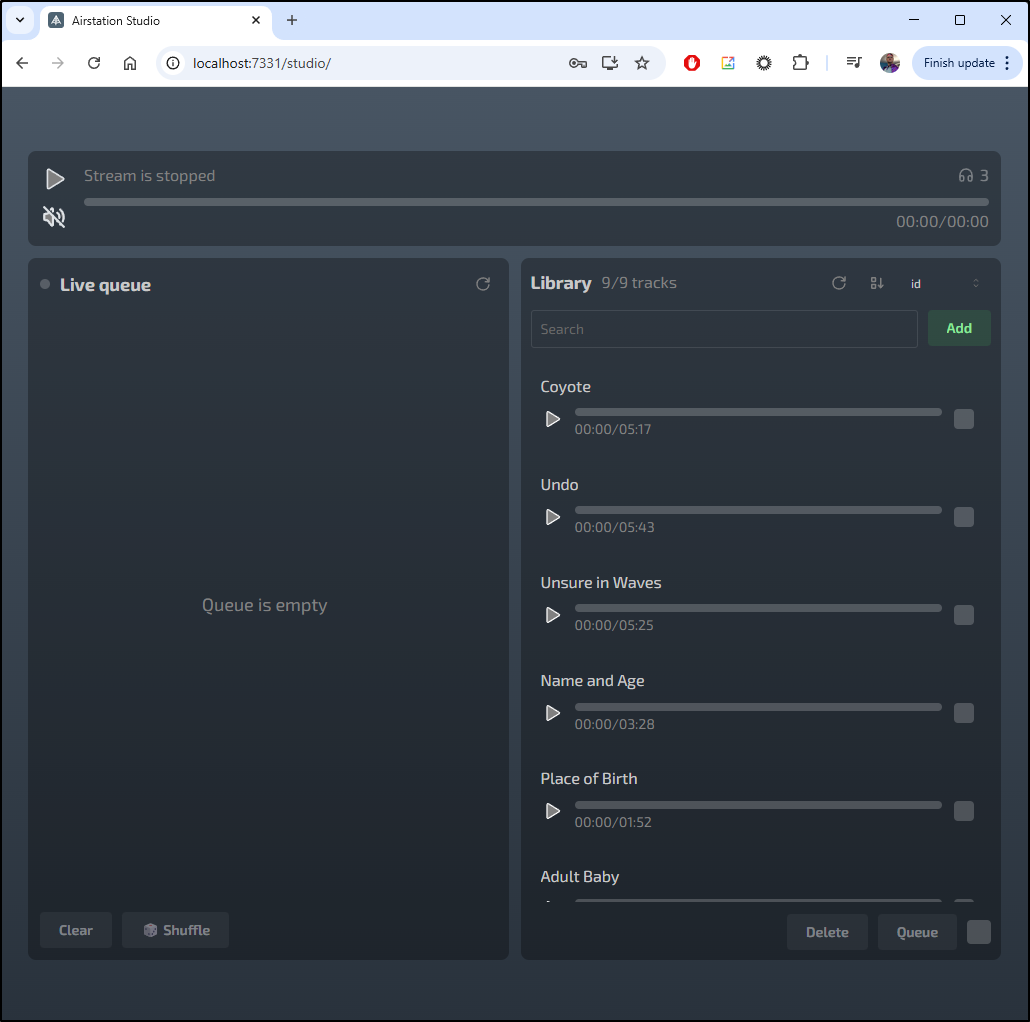

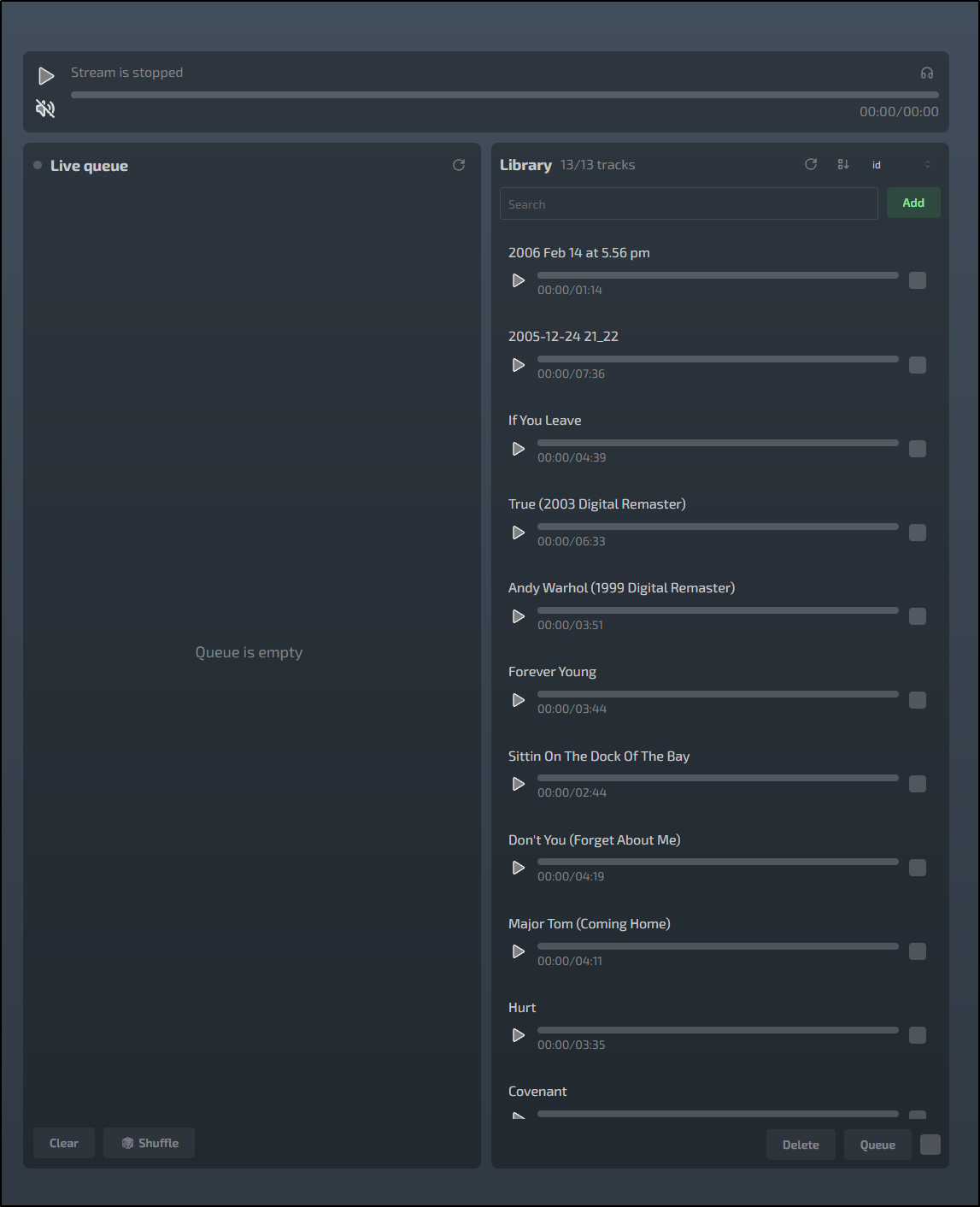

I can then see the tracks in the UI

I can queue up some tracks

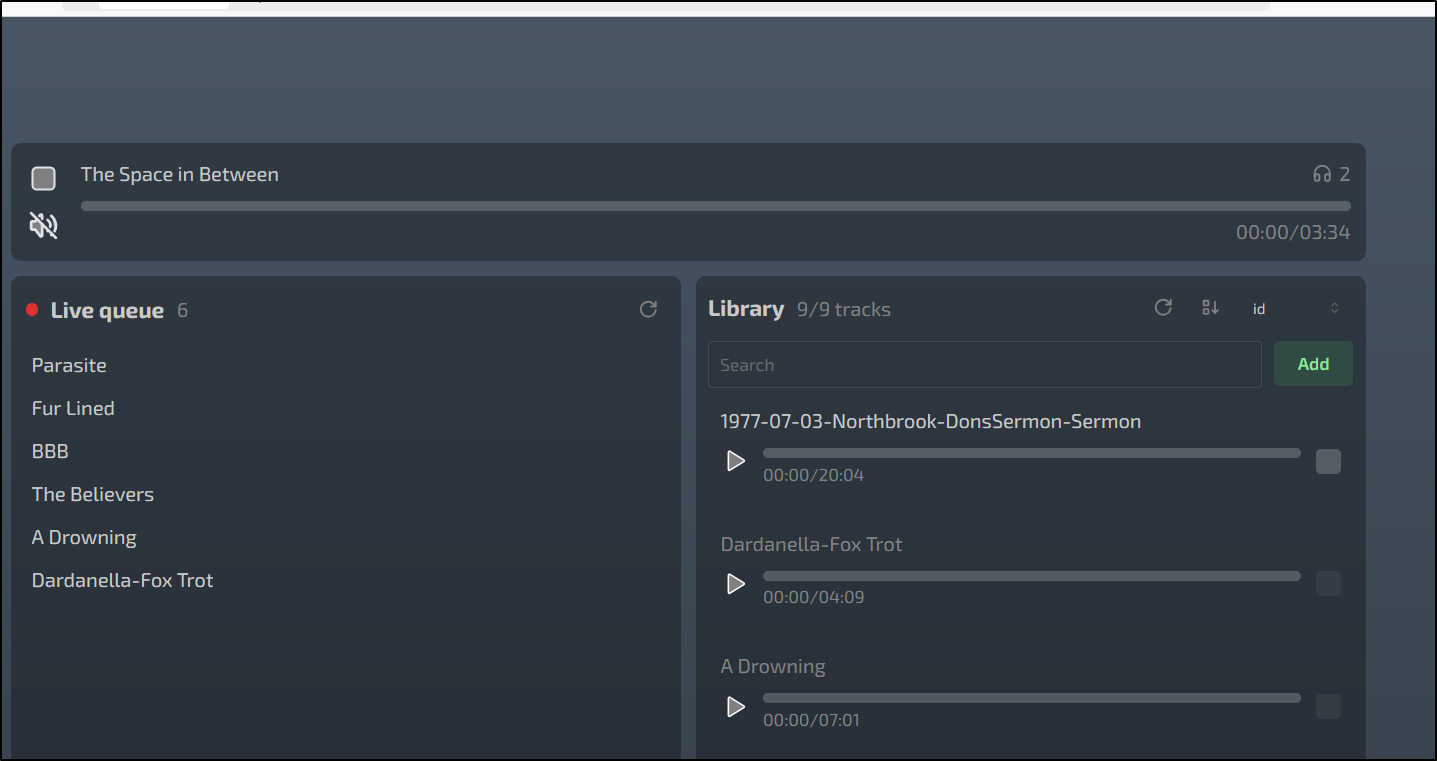

which now show in the queue

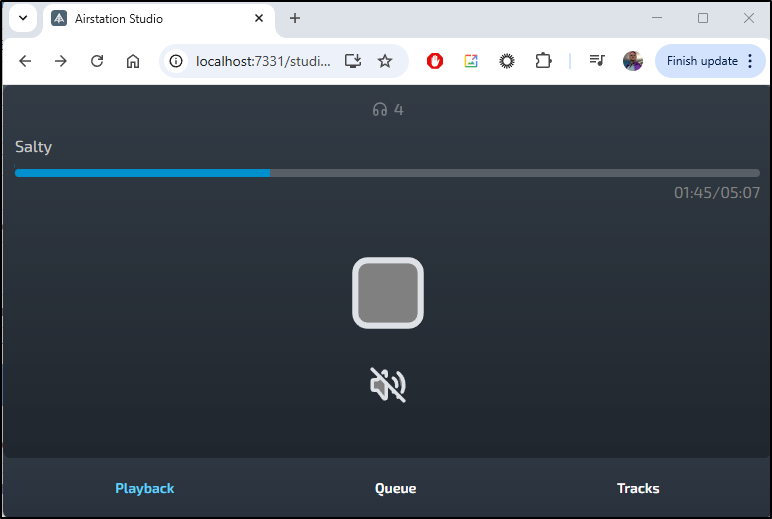

I can then play the track

I can then press play to connect to the track

If the window is small enough, the Admin portal will show the current track playing

Ingress

Let’s create an A record

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n airstation

{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "9ba9e07f-4bc6-4018-bc1f-7a6f7427f672",
  "fqdn": "airstation.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/airstation",
  "name": "airstation",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}

I’ll go to the docker host to run this.

builder@builder-T100:~/airstation$ docker compose up -d

WARN[0000] The "AIRSTATION_PLAYER_TITLE" variable is not set. Defaulting to a blank string.

[+] Building 64.4s (33/33) FINISHED

=> [app internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 830B 0.0s

=> [app internal] load metadata for docker.io/library/alpine:latest 1.0s

=> [app internal] load metadata for docker.io/library/node:22-alpine 1.0s

=> [app internal] load metadata for docker.io/library/golang:1.24-alpine 1.0s

=> [app auth] library/golang:pull token for registry-1.docker.io 0.0s

=> [app auth] library/alpine:pull token for registry-1.docker.io 0.0s

=> [app auth] library/node:pull token for registry-1.docker.io 0.0s

=> [app internal] load .dockerignore 0.0s

=> => transferring context: 85B 0.0s

=> [app server 1/7] FROM docker.io/library/golang:1.24-alpine@sha256:ef18ee7117463ac1055f5a370ed18b8750f01589f13ea0b48642f5792b234044 8.0s

=> => resolve docker.io/library/golang:1.24-alpine@sha256:ef18ee7117463ac1055f5a370ed18b8750f01589f13ea0b48642f5792b234044 0.0s

=> => sha256:be1cf73ca9fbe9c5108691405b627cf68b654fb6838a17bc1e95cc48593e70da 1.92kB / 1.92kB 0.0s

=> => sha256:68d4da47fd566b8a125df912bff4ab337355b1f6b2c478f5e8593cb98b4d1563 2.08kB / 2.08kB 0.0s

=> => sha256:bcde94e77dfab30cceb8ba9b43d3c7ac5efb03bcd79b63cf02b60a0c23261582 294.91kB / 294.91kB 0.2s

=> => sha256:92b00dc8dfbaa6cd7e39d09d4f1c726259b4d9a29c697192955da032f472d642 78.98MB / 78.98MB 2.1s

=> => sha256:9b664c7c39c20f5e319a449219b9641bd2fdf7325727a3f69abe1e81bf0f726a 126B / 126B 0.2s

=> => sha256:ef18ee7117463ac1055f5a370ed18b8750f01589f13ea0b48642f5792b234044 10.29kB / 10.29kB 0.0s

=> => extracting sha256:bcde94e77dfab30cceb8ba9b43d3c7ac5efb03bcd79b63cf02b60a0c23261582 0.1s

=> => sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 32B / 32B 0.3s

=> => extracting sha256:92b00dc8dfbaa6cd7e39d09d4f1c726259b4d9a29c697192955da032f472d642 5.4s

=> => extracting sha256:9b664c7c39c20f5e319a449219b9641bd2fdf7325727a3f69abe1e81bf0f726a 0.0s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.0s

=> [app stage-3 1/6] FROM docker.io/library/alpine:latest@sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88c 0.1s

=> => resolve docker.io/library/alpine:latest@sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88c 0.0s

=> => sha256:1c4eef651f65e2f7daee7ee785882ac164b02b78fb74503052a26dc061c90474 1.02kB / 1.02kB 0.0s

=> => sha256:aded1e1a5b3705116fa0a92ba074a5e0b0031647d9c315983ccba2ee5428ec8b 581B / 581B 0.0s

=> => sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88c 9.22kB / 9.22kB 0.0s

=> [app studio 1/6] FROM docker.io/library/node:22-alpine@sha256:152270cd4bd094d216a84cbc3c5eb1791afb05af00b811e2f0f04bdc6c473602 4.3s

=> => resolve docker.io/library/node:22-alpine@sha256:152270cd4bd094d216a84cbc3c5eb1791afb05af00b811e2f0f04bdc6c473602 0.0s

=> => sha256:152270cd4bd094d216a84cbc3c5eb1791afb05af00b811e2f0f04bdc6c473602 6.41kB / 6.41kB 0.0s

=> => sha256:d1068d8b737ffed2b8e9d0e9313177a2e2786c36780c5467ac818232e603ccd0 1.72kB / 1.72kB 0.0s

=> => sha256:97c5ed51c64a35c1695315012fd56021ad6b3135a30b6a82a84b414fd6f65851 6.21kB / 6.21kB 0.0s

=> => sha256:f3bde9621711f54e446f593ab1fb00d66aad452bb83a5417a77c5b37bbdb4874 50.42MB / 50.42MB 1.9s

=> => sha256:e7db676aa884bcad4c892a937041d52418dd738c1e0a8347868c9a3f996a9b6a 1.26MB / 1.26MB 0.7s

=> => sha256:28e4c8cb54c6966a37f792aa8b3fe9b8b4e6957eb2957dac0c99ade5fb2350b2 447B / 447B 0.8s

=> => extracting sha256:f3bde9621711f54e446f593ab1fb00d66aad452bb83a5417a77c5b37bbdb4874 1.7s

=> => extracting sha256:e7db676aa884bcad4c892a937041d52418dd738c1e0a8347868c9a3f996a9b6a 0.0s

=> => extracting sha256:28e4c8cb54c6966a37f792aa8b3fe9b8b4e6957eb2957dac0c99ade5fb2350b2 0.0s

=> [app internal] load build context 0.0s

=> => transferring context: 764.21kB 0.0s

=> [app stage-3 2/6] WORKDIR /app 0.2s

=> [app stage-3 3/6] RUN apk add --no-cache ffmpeg 14.8s

=> [app studio 2/6] WORKDIR /app 0.6s

=> [app player 3/6] COPY ./web/player/package*.json ./ 0.3s

=> [app studio 3/6] COPY ./web/studio/package*.json ./ 0.3s

=> [app studio 4/6] RUN npm install 14.1s

=> [app player 4/6] RUN npm install 12.1s

=> [app server 2/7] WORKDIR /app 0.6s

=> [app server 3/7] COPY go.mod go.sum ./ 0.1s

=> [app server 4/7] RUN go mod download 9.0s

=> [app player 5/6] COPY ./web/player . 0.4s

=> [app server 5/7] COPY cmd/ ./cmd/ 0.1s

=> [app player 6/6] RUN npm run build 20.4s

=> [app server 6/7] COPY internal/ ./internal/ 0.2s

=> [app server 7/7] RUN CGO_ENABLED=0 GOOS=linux go build -ldflags="-s -w" -o /app/bin/main ./cmd/main.go 44.2s

=> [app studio 5/6] COPY ./web/studio . 0.1s

=> [app studio 6/6] RUN npm run build 31.2s

=> [app stage-3 4/6] COPY --from=server /app/bin/main . 0.1s

=> [app stage-3 5/6] COPY --from=player /app/dist ./web/player/dist 0.1s

=> [app stage-3 6/6] COPY --from=studio /app/dist ./web/studio/dist 0.0s

=> [app] exporting to image 0.6s

=> => exporting layers 0.6s

=> => writing image sha256:53501a11caf6a4714e67626e5f411171db381cf3dbe2427c0eeb55c834e302f1 0.0s

=> => naming to docker.io/library/airstation-app 0.0s

[+] Running 3/3

✔ Network airstation_default Created 0.2s

✔ Volume "airstation_database" Created 0.0s

✔ Container airstation-app-1 Started

And then use a Kubernetes manifest (Endpoints, Service, and Ingress) to route traffic to it

$ cat ./airstation.yaml

apiVersion: v1
kind: Endpoints
metadata:
  name: airstation-external-ip
subsets:
- addresses:
  - ip: 192.168.1.100
  ports:
  - name: airstationint
    port: 7331
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: airstation-external-ip
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  - IPv6
  ipFamilyPolicy: RequireDualStack
  ports:
  - name: airstation
    port: 80
    protocol: TCP
    targetPort: 7331
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: airstation-external-ip
  generation: 1
  name: airstationingress
spec:
  rules:
  - host: airstation.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: airstation-external-ip
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - airstation.tpk.pw
    secretName: airstation-tls

$ kubectl apply -f ./airstation.yaml

endpoints/airstation-external-ip created

service/airstation-external-ip created

ingress.networking.k8s.io/airstationingress created

Once I see the cert is satisfied

$ kubectl get cert airstation-tls

NAME READY SECRET AGE

airstation-tls False airstation-tls 63s

$ kubectl get cert airstation-tls

NAME READY SECRET AGE

airstation-tls True airstation-tls 90s
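Rather than re-running `kubectl get cert` by hand, the wait can be scripted. This is only a sketch: `retry_until` and `cert_ready` are hypothetical helper names, and it assumes cert-manager reports a `Ready` condition on the Certificate, which is what the READY column above reflects.

```shell
#!/bin/sh
# retry_until TRIES DELAY CMD...: run CMD until it succeeds, at most TRIES times,
# sleeping DELAY seconds between attempts. Returns 1 if every attempt fails.
retry_until() {
  tries=$1
  delay=$2
  shift 2
  n=1
  while ! "$@"; do
    [ "$n" -ge "$tries" ] && return 1
    n=$((n + 1))
    sleep "$delay"
  done
}

# cert_ready NAME: succeeds once the Certificate's Ready condition is "True".
cert_ready() {
  [ "$(kubectl get certificate "$1" -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}')" = "True" ]
}
```

Usage would look like `retry_until 30 10 cert_ready airstation-tls`, which polls every 10 seconds for up to roughly five minutes. `kubectl wait --for=condition=Ready certificate/airstation-tls --timeout=300s` should get the same result with a built-in command.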

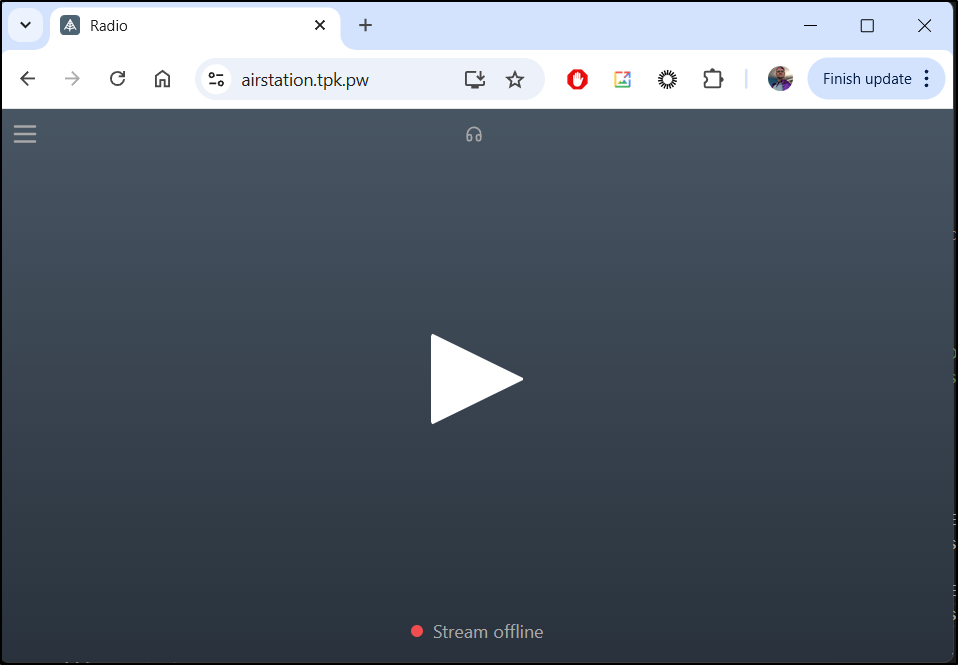

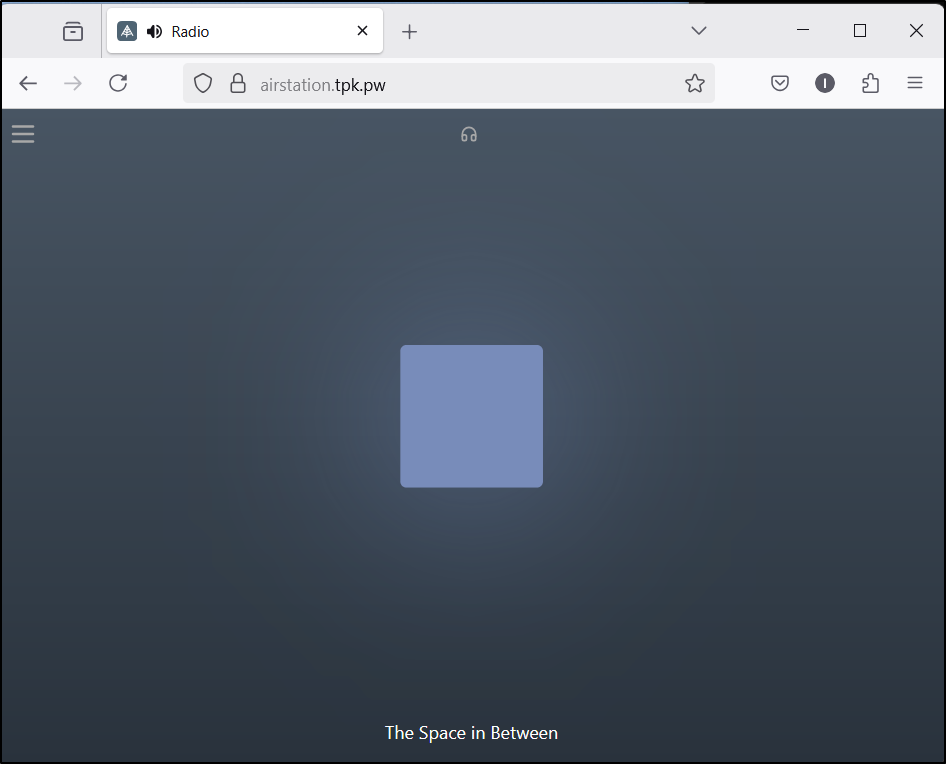

I can now view my station

I realized it was a bit slow on the older Docker host, so I moved to the Ryzen box.

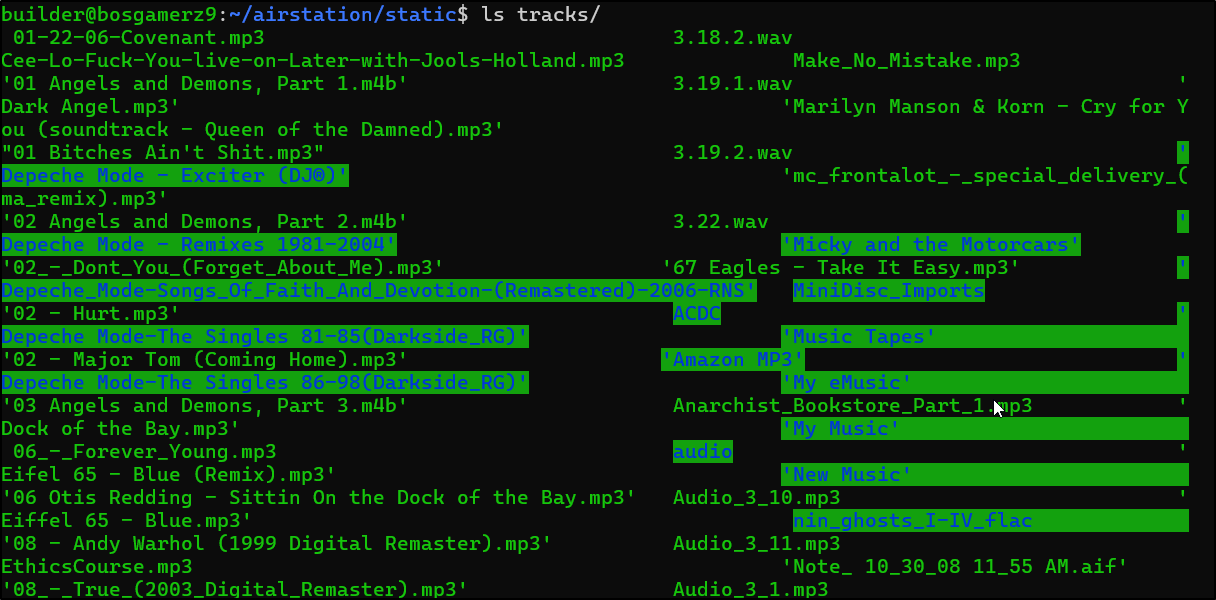

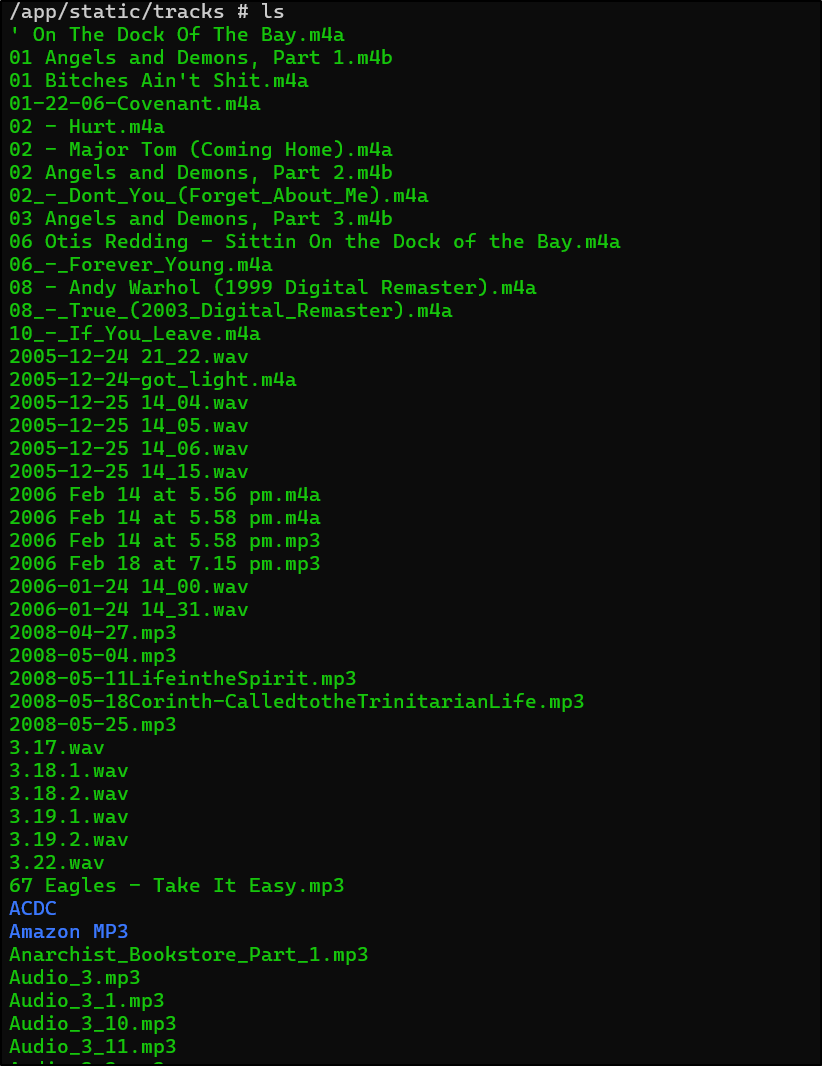

While doing so, I took a moment to mount an old share that had music and MP3s

I fired it up with docker compose up but did not see the tracks

I get different results on some subsequent refreshes.

It doesn’t seem to like subfolders.
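If subfolders really are the problem, one workaround is to copy everything into a single flat tracks folder first. This is a sketch under that assumption; `flatten_mp3s` is a made-up helper, and files with the same name in different folders would overwrite each other.

```shell
#!/bin/sh
# flatten_mp3s SRC DEST: copy every .mp3 found under SRC (at any depth) into DEST.
# Real files are copied rather than symlinked, so the container sees them directly.
flatten_mp3s() {
  src=$1
  dest=$2
  mkdir -p "$dest" || return 1
  # -type f skips directories and symlinks; -iname matches .mp3 and .MP3
  find "$src" -type f -iname '*.mp3' -exec cp {} "$dest"/ \;
}
```

For example, `flatten_mp3s /path/to/share ./static/tracks`, where the share path is a placeholder for wherever the music is mounted.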

I even hopped in the container and could clearly see the files

I tried a different mount and symlinking the files, but that seemed to hang the container while it was indexing them. From the logs:

2025-05-20 20:55:53 WARN trackservice: Failed to prepare a track for streaming: triming audio failed: exit status 254

Output: ffmpeg version 6.1.2 Copyright (c) 2000-2024 the FFmpeg developers

built with gcc 14.2.0 (Alpine 14.2.0)

configuration: --prefix=/usr --disable-librtmp --disable-lzma --disable-static --disable-stripping --enable-avfilter --enable-gpl --enable-ladspa --enable-libaom --enable-libass --enable-libbluray --enable-libdav1d --enable-libdrm --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-libharfbuzz --enable-libmp3lame --enable-libopenmpt --enable-libopus --enable-libplacebo --enable-libpulse --enable-librav1e --enable-librist --enable-libsoxr --enable-libsrt --enable-libssh --enable-libtheora --enable-libv4l2 --enable-libvidstab --enable-libvorbis --enable-libvpx --enable-libwebp --enable-libx264 --enable-libx265 --enable-libxcb --enable-libxml2 --enable-libxvid --enable-libzimg --enable-libzmq --enable-lto=auto --enable-lv2 --enable-openssl --enable-pic --enable-postproc --enable-pthreads --enable-shared --enable-vaapi --enable-vdpau --enable-version3 --enable-vulkan --optflags=-O3 --enable-libjxl --enable-libsvtav1 --enable-libvpl

libavutil 58. 29.100 / 58. 29.100

libavcodec 60. 31.102 / 60. 31.102

libavformat 60. 16.100 / 60. 16.100

libavdevice 60. 3.100 / 60. 3.100

libavfilter 9. 12.100 / 9. 12.100

libswscale 7. 5.100 / 7. 5.100

libswresample 4. 12.100 / 4. 12.100

libpostproc 57. 3.100 / 57. 3.100

[in#0 @ 0x77784065a940] Error opening input: No such file or directory

Error opening input file static/tracks/01-The-Space-In-Between.mp3.

Error opening input files: No such file or directory

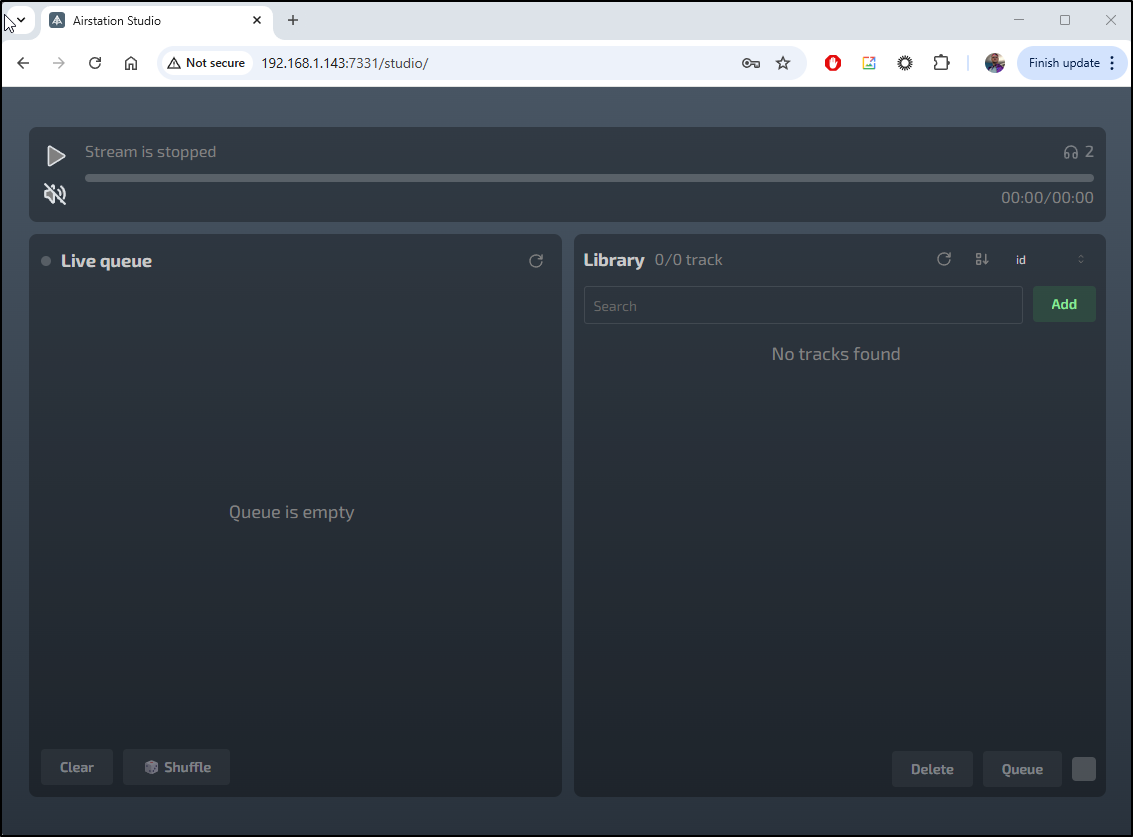

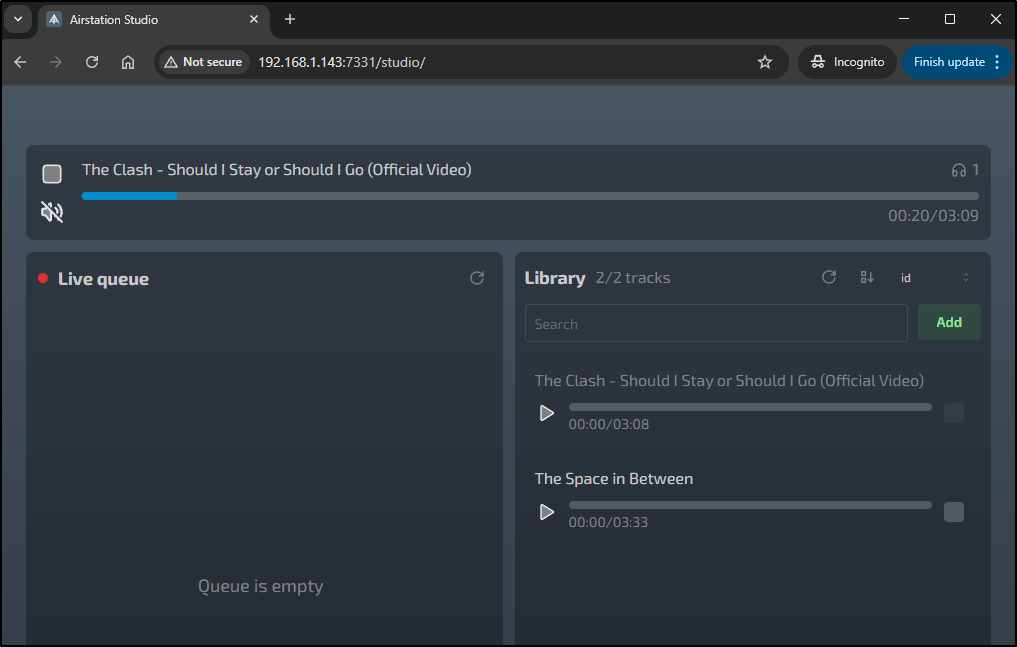

I copied some files and started the station and queued one track.

I found that it did indeed repeat the track over and over, so the queue loops indefinitely

I swapped servers by just changing the Host IP in the Endpoint

$ cat airstation.yaml | head -n 15

apiVersion: v1
kind: Endpoints
metadata:
  name: airstation-external-ip
subsets:
- addresses:
  - ip: 192.168.1.143
  ports:
  - name: airstationint
    port: 7331
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:

$ kubectl apply -f airstation.yaml

endpoints/airstation-external-ip configured

service/airstation-external-ip unchanged

ingress.networking.k8s.io/airstationingress unchanged
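The IP swap itself is a one-line edit, and it can be scripted if you bounce between hosts often. A minimal sketch, assuming the manifest keeps the address on a `- ip:` line as above; `set_endpoint_ip` is a hypothetical helper, not part of any tool.

```shell
#!/bin/sh
# set_endpoint_ip FILE NEW_IP: rewrite every "- ip: ..." line in FILE to NEW_IP.
# All other lines are copied through unchanged.
set_endpoint_ip() {
  file=$1
  new_ip=$2
  tmpfile=$(mktemp) || return 1
  # Keep the indentation and "- ip:" prefix (group 1), replace only the value
  sed -E "s/^([[:space:]]*- ip:[[:space:]]*).*/\1${new_ip}/" "$file" > "$tmpfile" \
    && cat "$tmpfile" > "$file"
  status=$?
  rm -f "$tmpfile"
  return "$status"
}
```

Then `set_endpoint_ip airstation.yaml 192.168.1.143 && kubectl apply -f airstation.yaml` repeats the swap shown above.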

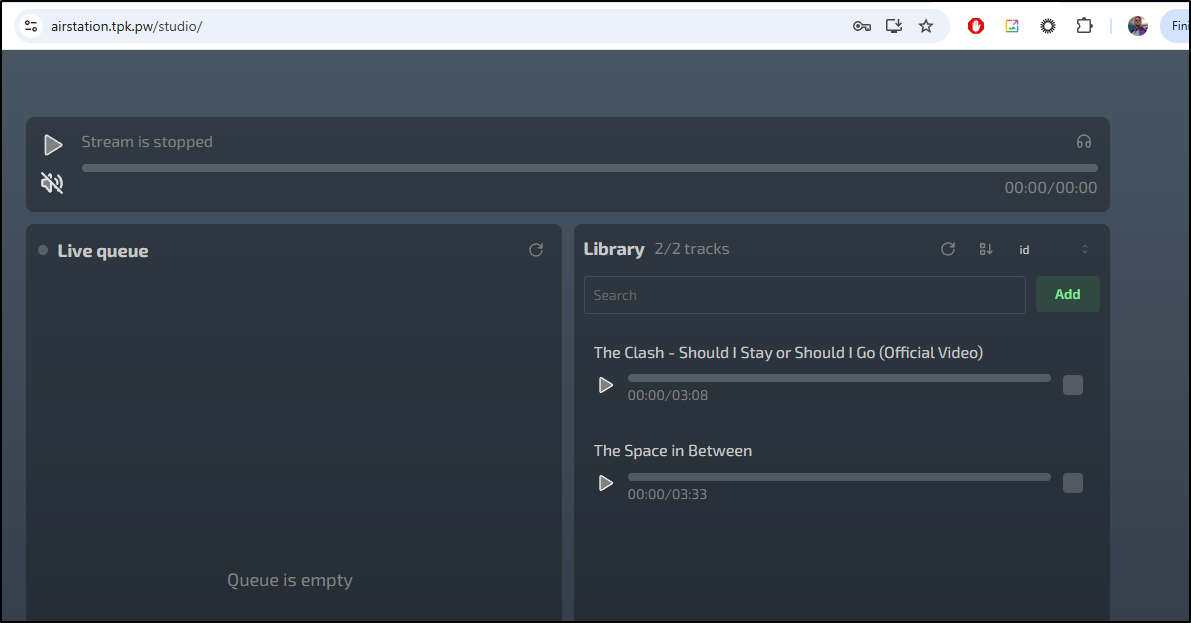

And now the Airstation looks like the one on the Ryzen

I ran into an issue where a uniqueness constraint kept failing

builder@bosgamerz9:~/airstation/static/tracks$ sudo docker logs 5d777a950376

2025-05-20 21:00:16 INFO storage: Sqlite database connected.

2025-05-20 21:00:16 WARN http: Auto start playing failed: playback queue is empty

2025-05-20 21:00:16 INFO http: Server starts on http://localhost:7331

2025-05-20 21:00:21 WARN trackservice: Failed to save track to database: tmptuduf7tq.mp3 is too short for streaming

2025-05-20 21:23:32 INFO http: New login succeed from airstation.tpk.pw with secureCookie=false

2025-05-20 21:30:13 WARN trackservice: Failed to save track to database: failed to insert track: constraint failed: UNIQUE constraint failed: tracks.name (2067)

2025-05-20 21:32:35 WARN trackservice: Failed to save track to database: failed to insert track: constraint failed: UNIQUE constraint failed: tracks.name (2067)

2025-05-20 21:33:20 WARN trackservice: Failed to save track to database: failed to insert track: constraint failed: UNIQUE constraint failed: tracks.name (2067)

Should you need to reset things, remember to wipe the DB volume too, or it will be all for naught

builder@bosgamerz9:~/airstation$ sudo docker compose down

WARN[0000] The "AIRSTATION_PLAYER_TITLE" variable is not set. Defaulting to a blank string.

[+] Running 2/2

✔ Container airstation-app-1 Removed 0.2s

✔ Network airstation_default Removed 0.2s

builder@bosgamerz9:~/airstation$ sudo docker volume remove airstation_database

airstation_database

This time it came up without issue

I then queued the free 2010 How to Destroy Angels NIN EP and a 1908 phonograph recording I made, neither of which should get me in trouble for streaming.

Then started the stream

I can join the stream to listen at https://airstation.tpk.pw/

Nextcloud

I had come across a few good tools from this article, including n8n. The other tool the author wrote about was Nextcloud.

The setup involved a docker compose on a Hetzner VM.

services:
  nextcloud:
    depends_on:
      - postgres
    image: nextcloud:apache
    environment:
      - POSTGRES_HOST=postgres
      - POSTGRES_PASSWORD=nextcloud
      - POSTGRES_DB=nextcloud
      - POSTGRES_USER=nextcloud
    ports:
      - 8000:80
    restart: always
    volumes:
      - nc_data:/var/www/html
  postgres:
    image: postgres:alpine
    environment:
      - POSTGRES_PASSWORD=nextcloud
      - POSTGRES_DB=nextcloud
      - POSTGRES_USER=nextcloud
    restart: always
    volumes:
      - db_data:/var/lib/postgresql/data
    expose:
      - 5432

volumes:
  db_data:
  nc_data:

This seemed overly complicated to me. I was about to convert the Docker Compose file to a Kubernetes manifest when I did a quick search and found this chart repo.

We can add the repo and update

helm repo add nextcloud https://nextcloud.github.io/helm/

helm repo update

I can now install with helm install my-release nextcloud/nextcloud

$ helm install my-nc-release nextcloud/nextcloud

NAME: my-nc-release

LAST DEPLOYED: Mon May 19 19:12:06 2025

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

#######################################################################################################

## WARNING: You did not provide an external database host in your 'helm install' call ##

## Running Nextcloud with the integrated sqlite database is not recommended for production instances ##

#######################################################################################################

For better performance etc. you have to configure nextcloud with a resolvable database

host. To configure nextcloud to use and external database host:

1. Complete your nextcloud deployment by running:

export APP_HOST=127.0.0.1

export APP_PASSWORD=$(kubectl get secret --namespace default my-nc-release-nextcloud -o jsonpath="{.data.nextcloud-password}" | base64 --decode)

## PLEASE UPDATE THE EXTERNAL DATABASE CONNECTION PARAMETERS IN THE FOLLOWING COMMAND AS NEEDED ##

helm upgrade my-nc-release nextcloud/nextcloud \

--set nextcloud.password=$APP_PASSWORD,nextcloud.host=$APP_HOST,service.type=ClusterIP,mariadb.enabled=false,externalDatabase.user=nextcloud,externalDatabase.database=nextcloud,externalDatabase.host=YOUR_EXTERNAL_DATABASE_HOST

I checked on the pod

$ kubectl get po -l app.kubernetes.io/name=nextcloud

NAME READY STATUS RESTARTS AGE

my-nc-release-nextcloud-8b479f744-vjk7m 0/1 Running 0 74s

$ kubectl get po -l app.kubernetes.io/name=nextcloud

NAME READY STATUS RESTARTS AGE

my-nc-release-nextcloud-8b479f744-vjk7m 1/1 Running 0 99s

I can see a service created

$ kubectl get svc my-nc-release-nextcloud

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-nc-release-nextcloud ClusterIP 10.43.203.50 <none> 8080/TCP 2m9s

$ kubectl port-forward svc/my-nc-release-nextcloud 8888:8080

Forwarding from 127.0.0.1:8888 -> 80

Forwarding from [::1]:8888 -> 80

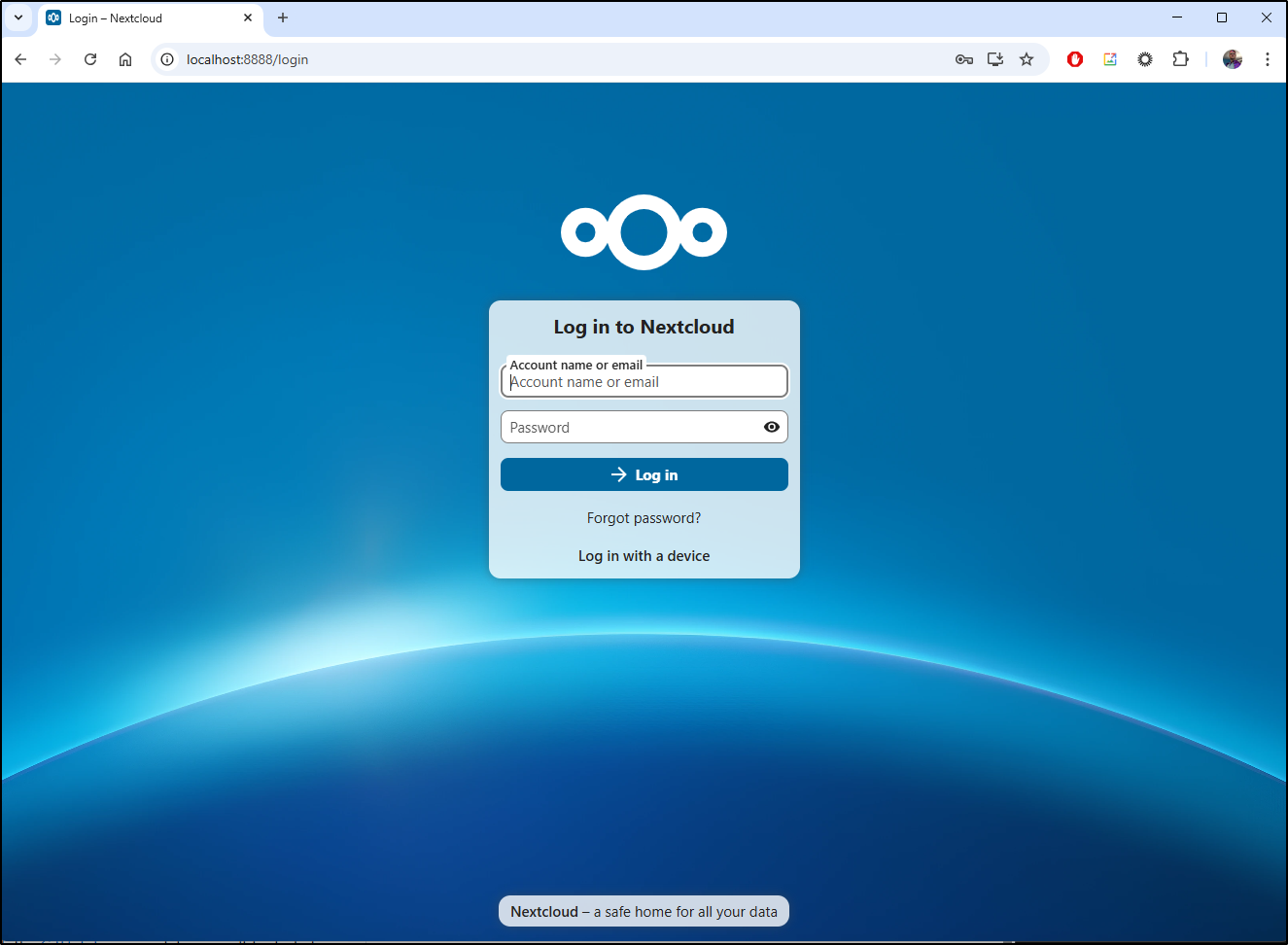

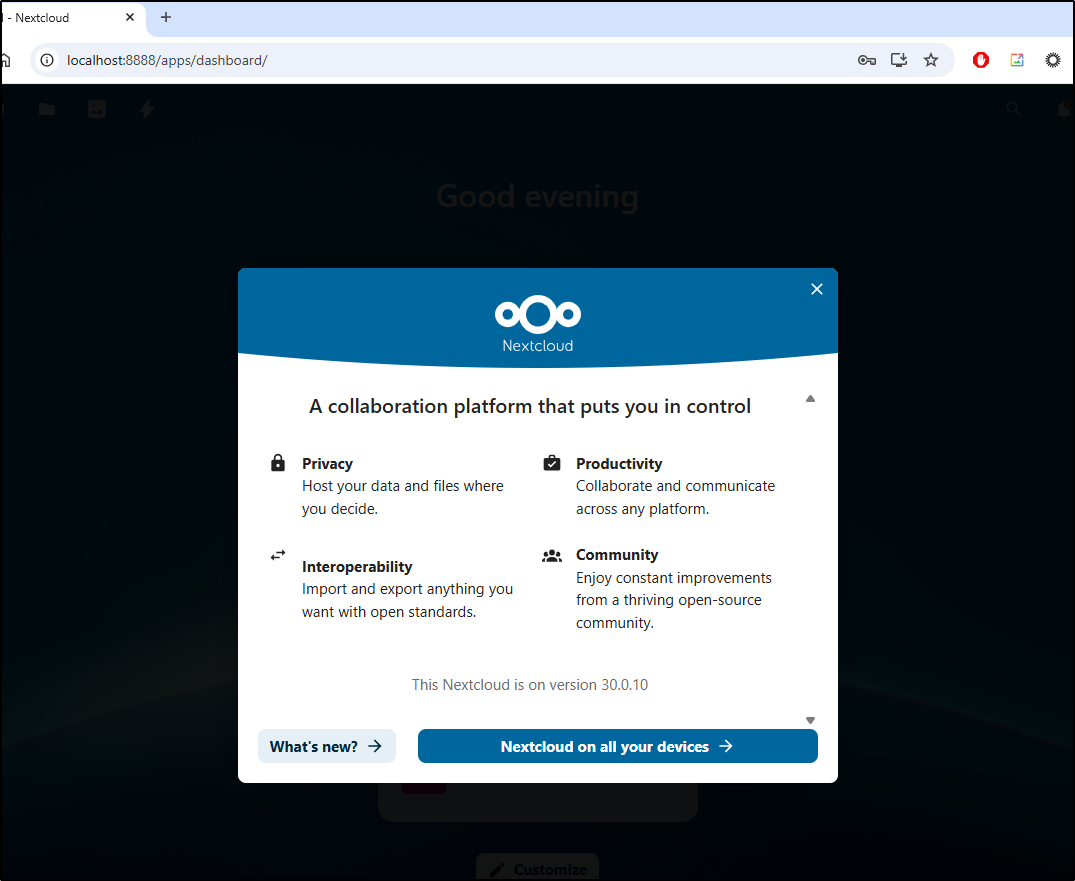

I can now try the web ui

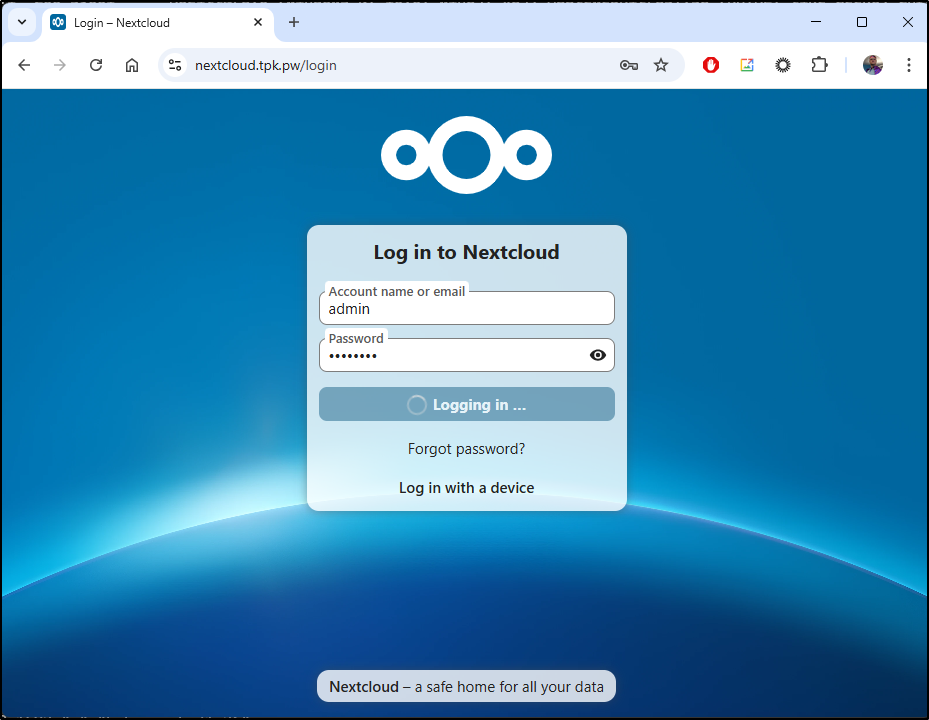

I logged in with admin and the password from the kubectl command (changeme)

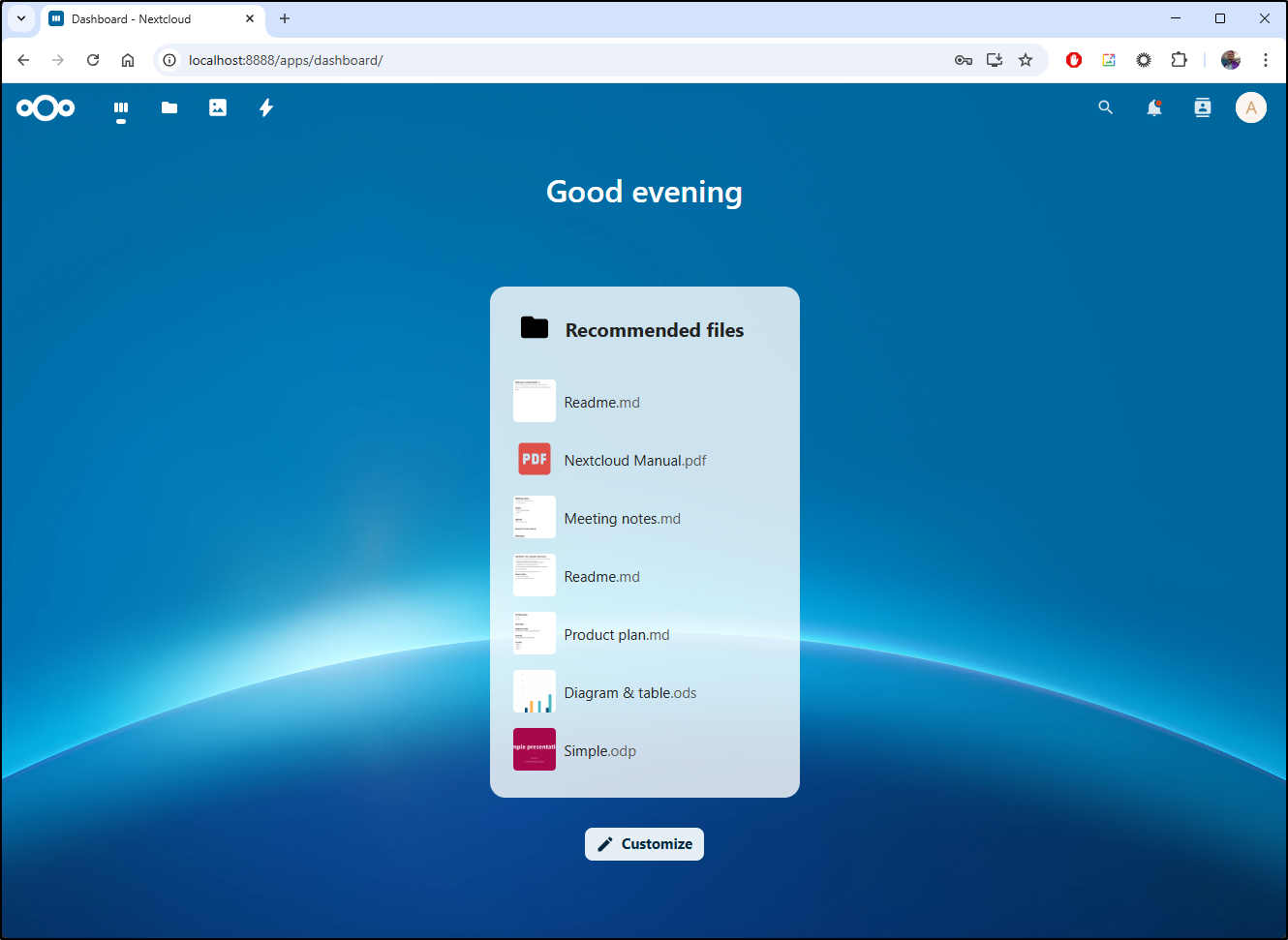

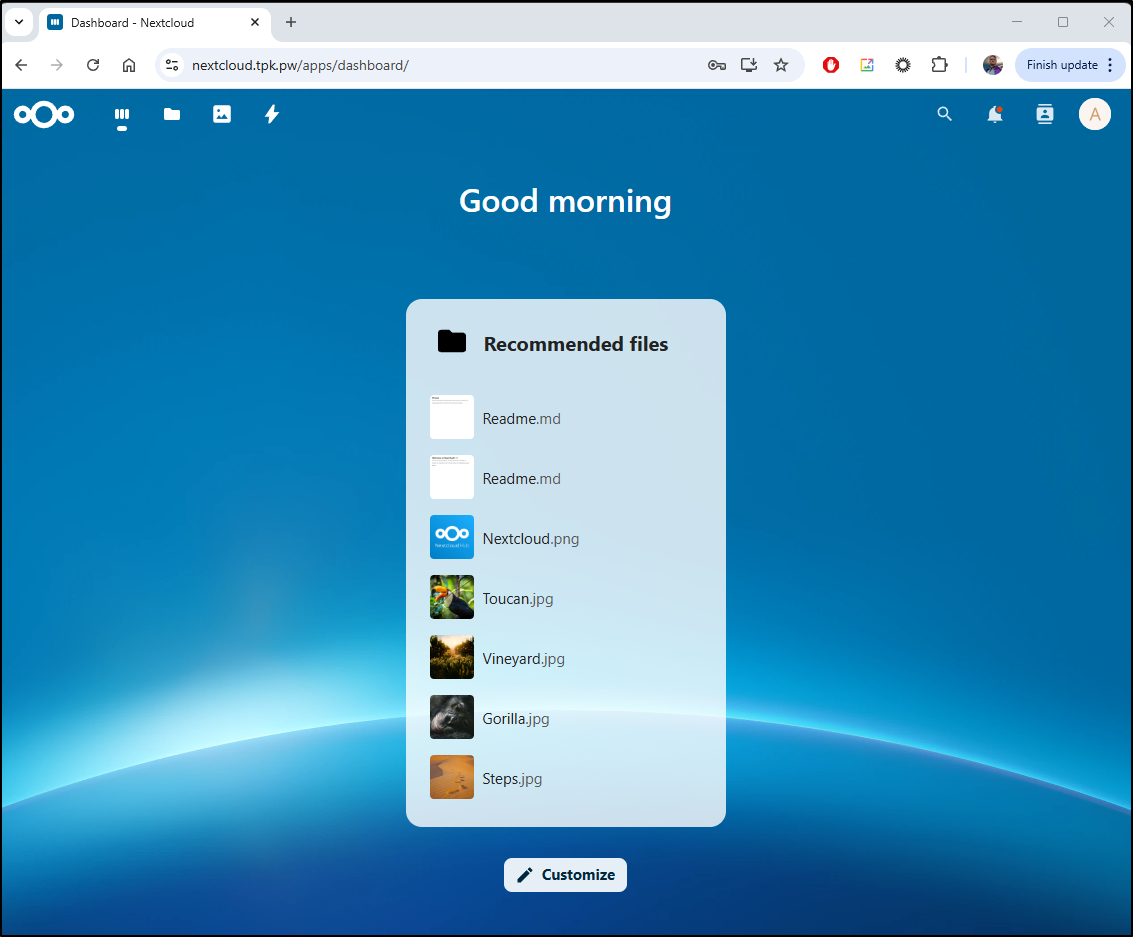

Once through a bit of a slide show I was presented with the dashboard

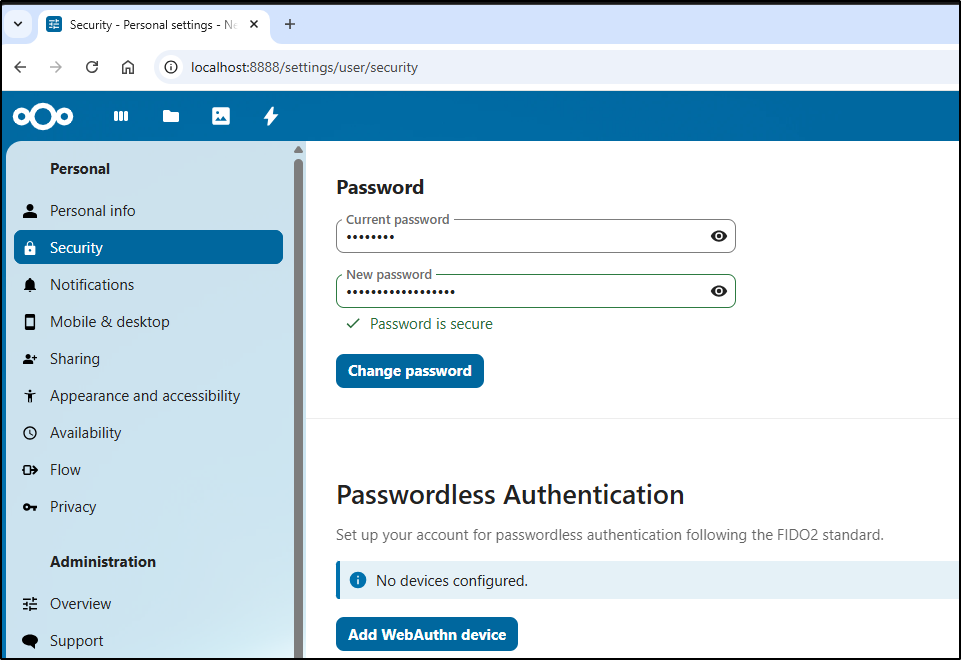

My first step was to change the password

Once I verified that, I decided to pivot to setting up the ingress.

$ az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.73.224.240 -n nextcloud

{
  "ARecords": [
    {
      "ipv4Address": "75.73.224.240"
    }
  ],
  "TTL": 3600,
  "etag": "9a9ec82c-b78b-4a3c-bc50-635adfcc535b",
  "fqdn": "nextcloud.tpk.pw.",
  "id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/nextcloud",
  "name": "nextcloud",
  "provisioningState": "Succeeded",
  "resourceGroup": "idjdnsrg",
  "targetResource": {},
  "trafficManagementProfile": {},
  "type": "Microsoft.Network/dnszones/A"
}

Since we already have a ClusterIP service, adding an Ingress is easy

$ cat ./nextcloud.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
    nginx.org/websocket-services: my-nc-release-nextcloud
  name: nextcloudingress
spec:
  rules:
  - host: nextcloud.tpk.pw
    http:
      paths:
      - backend:
          service:
            name: my-nc-release-nextcloud
            port:
              number: 8080
        path: /
        pathType: ImplementationSpecific
  tls:
  - hosts:
    - nextcloud.tpk.pw
    secretName: nextcloud-tls

$ kubectl apply -f ./nextcloud.yaml

ingress.networking.k8s.io/nextcloudingress created

Once I saw the cert was satisfied

$ kubectl get cert nextcloud-tls

NAME READY SECRET AGE

nextcloud-tls False nextcloud-tls 43s

$ kubectl get cert nextcloud-tls

NAME READY SECRET AGE

nextcloud-tls True nextcloud-tls 2m29s

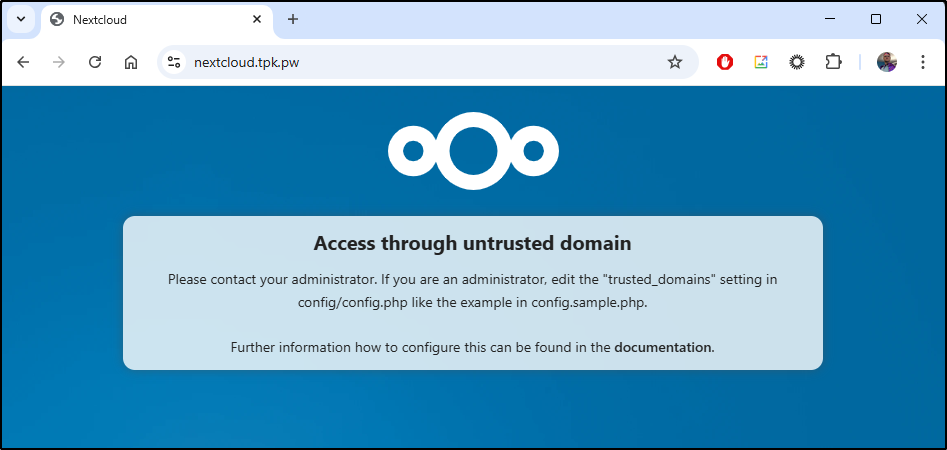

However, it seems we need to set a trusted domain setting somewhere to make this work

I’m going to try a quick upgrade via the helm chart

$ helm upgrade my-nc-release --set nextcloud.trustedDomains[0]=nextcloud.tpk.pw nextcloud/nextcloud

Release "my-nc-release" has been upgraded. Happy Helming!

NAME: my-nc-release

LAST DEPLOYED: Mon May 19 19:29:15 2025

NAMESPACE: default

STATUS: deployed

REVISION: 3

TEST SUITE: None

NOTES:

#######################################################################################################

## WARNING: You did not provide an external database host in your 'helm install' call ##

## Running Nextcloud with the integrated sqlite database is not recommended for production instances ##

#######################################################################################################

For better performance etc. you have to configure nextcloud with a resolvable database

host. To configure nextcloud to use and external database host:

1. Complete your nextcloud deployment by running:

export APP_HOST=127.0.0.1

export APP_PASSWORD=$(kubectl get secret --namespace default my-nc-release-nextcloud -o jsonpath="{.data.nextcloud-password}" | base64 --decode)

## PLEASE UPDATE THE EXTERNAL DATABASE CONNECTION PARAMETERS IN THE FOLLOWING COMMAND AS NEEDED ##

helm upgrade my-nc-release nextcloud/nextcloud \

--set nextcloud.password=$APP_PASSWORD,nextcloud.host=$APP_HOST,service.type=ClusterIP,mariadb.enabled=false,externalDatabase.user=nextcloud,externalDatabase.database=nextcloud,externalDatabase.host=YOUR_EXTERNAL_DATABASE_HOST

Even though the values look right

$ helm get values my-nc-release

USER-SUPPLIED VALUES:

nextcloud:
  trustedDomains:
  - nextcloud.tpk.pw

the pod seems to just keep restarting.

$ kubectl get po -l app.kubernetes.io/name=nextcloud

NAME READY STATUS RESTARTS AGE

my-nc-release-nextcloud-68d944595b-qhbcb 0/1 Running 5 (33s ago) 4m14s

$ kubectl logs my-nc-release-nextcloud-68d944595b-qhbcb --previous

=> Searching for hook scripts (*.sh) to run, located in the folder "/docker-entrypoint-hooks.d/before-starting"

==> Skipped: the "before-starting" folder is empty (or does not exist)

AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 10.42.1.192. Set the 'ServerName' directive globally to suppress this message

AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 10.42.1.192. Set the 'ServerName' directive globally to suppress this message

[Tue May 20 00:33:03.363636 2025] [mpm_prefork:notice] [pid 1:tid 1] AH00163: Apache/2.4.62 (Debian) PHP/8.3.21 configured -- resuming normal operations

[Tue May 20 00:33:03.363683 2025] [core:notice] [pid 1:tid 1] AH00094: Command line: 'apache2 -D FOREGROUND'

10.42.1.1 - - [20/May/2025:00:33:21 +0000] "GET /status.php HTTP/1.1" 400 1416 "-" "kube-probe/1.26"

10.42.1.1 - - [20/May/2025:00:33:21 +0000] "GET /status.php HTTP/1.1" 400 1422 "-" "kube-probe/1.26"

10.42.1.1 - - [20/May/2025:00:33:31 +0000] "GET /status.php HTTP/1.1" 400 1420 "-" "kube-probe/1.26"

10.42.1.1 - - [20/May/2025:00:33:31 +0000] "GET /status.php HTTP/1.1" 400 1424 "-" "kube-probe/1.26"

10.42.1.1 - - [20/May/2025:00:33:41 +0000] "GET /status.php HTTP/1.1" 400 1412 "-" "kube-probe/1.26"

10.42.1.1 - - [20/May/2025:00:33:41 +0000] "GET /status.php HTTP/1.1" 400 1410 "-" "kube-probe/1.26"

[Tue May 20 00:33:41.727923 2025] [mpm_prefork:notice] [pid 1:tid 1] AH00170: caught SIGWINCH, shutting down gracefully

10.42.1.1 - - [20/May/2025:00:33:41 +0000] "GET /status.php HTTP/1.1" 400 1416 "-" "kube-probe/1.26"

I’ll try a delete and restart

$ helm delete my-nc-release

release "my-nc-release" uninstalled

builder@LuiGi:~/Workspaces/jekyll-blog$ helm upgrade --install my-nc-release --set nextcloud.trustedDomains[0]=nextcloud.tpk.pw nextcloud/nextcloud

Release "my-nc-release" does not exist. Installing it now.

NAME: my-nc-release

LAST DEPLOYED: Mon May 19 19:35:18 2025

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

#######################################################################################################

## WARNING: You did not provide an external database host in your 'helm install' call ##

## Running Nextcloud with the integrated sqlite database is not recommended for production instances ##

#######################################################################################################

For better performance etc. you have to configure nextcloud with a resolvable database

host. To configure nextcloud to use and external database host:

1. Complete your nextcloud deployment by running:

export APP_HOST=127.0.0.1

export APP_PASSWORD=$(kubectl get secret --namespace default my-nc-release-nextcloud -o jsonpath="{.data.nextcloud-password}" | base64 --decode)

## PLEASE UPDATE THE EXTERNAL DATABASE CONNECTION PARAMETERS IN THE FOLLOWING COMMAND AS NEEDED ##

helm upgrade my-nc-release nextcloud/nextcloud \

--set nextcloud.password=$APP_PASSWORD,nextcloud.host=$APP_HOST,service.type=ClusterIP,mariadb.enabled=false,externalDatabase.user=nextcloud,externalDatabase.database=nextcloud,externalDatabase.host=YOUR_EXTERNAL_DATABASE_HOST

The install itself went through, but the pod was not becoming ready and had already restarted twice

$ kubectl get po -l app.kubernetes.io/name=nextcloud

NAME READY STATUS RESTARTS AGE

my-nc-release-nextcloud-68d944595b-wk9wn 0/1 Running 2 (19s ago) 100s

I tried fixing the values, including the host, and then installing again. I also defined my ingress in the values file and deleted the one I had created manually

$ helm upgrade --install my-nc-release --values nc.values.yaml nextcloud/nextcloud

Release "my-nc-release" does not exist. Installing it now.

NAME: my-nc-release

LAST DEPLOYED: Mon May 19 20:21:31 2025

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

#######################################################################################################

## WARNING: You did not provide an external database host in your 'helm install' call ##

## Running Nextcloud with the integrated sqlite database is not recommended for production instances ##

#######################################################################################################

For better performance etc. you have to configure nextcloud with a resolvable database

host. To configure nextcloud to use and external database host:

1. Complete your nextcloud deployment by running:

export APP_HOST=127.0.0.1

export APP_PASSWORD=$(kubectl get secret --namespace default my-nc-release-nextcloud -o jsonpath="{.data.nextcloud-password}" | base64 --decode)

## PLEASE UPDATE THE EXTERNAL DATABASE CONNECTION PARAMETERS IN THE FOLLOWING COMMAND AS NEEDED ##

helm upgrade my-nc-release nextcloud/nextcloud \

--set nextcloud.password=$APP_PASSWORD,nextcloud.host=$APP_HOST,service.type=ClusterIP,mariadb.enabled=false,externalDatabase.user=nextcloud,externalDatabase.database=nextcloud,externalDatabase.host=YOUR_EXTERNAL_DATABASE_HOST

$ kubectl get po -l app.kubernetes.io/name=nextcloud

NAME READY STATUS RESTARTS AGE

my-nc-release-nextcloud-6947bd695-jgw4x 1/1 Running 0 50s

Logging in, however, just seemed to time out.

I actually had to fight this for a bit to get the helm values to work, since most tips I found online dealt with the local VM install.

My values ended up as:

$ cat values.yaml

affinity: {}

collabora:

autoscaling:

enabled: false

collabora:

aliasgroups: []

existingSecret:

enabled: false

passwordKey: password

secretName: ""

usernameKey: username

extra_params: --o:ssl.enable=false

password: examplepass

server_name: null

username: admin

enabled: false

ingress:

annotations: {}

className: ""

enabled: false

hosts:

- host: chart-example.local

paths:

- path: /

pathType: ImplementationSpecific

tls: []

resources: {}

cronjob:

command:

- /cron.sh

enabled: false

lifecycle: {}

resources: {}

securityContext: {}

deploymentAnnotations: {}

deploymentLabels: {}

dnsConfig: {}

externalDatabase:

database: nextcloud

enabled: false

existingSecret:

enabled: false

passwordKey: db-password

usernameKey: db-username

host: ""

password: ""

type: mysql

user: nextcloud

fullnameOverride: ""

hpa:

cputhreshold: 60

enabled: false

maxPods: 10

minPods: 1

image:

flavor: apache

pullPolicy: IfNotPresent

repository: nextcloud

tag: null

imaginary:

enabled: false

image:

pullPolicy: IfNotPresent

pullSecrets: []

registry: docker.io

repository: h2non/imaginary

tag: 1.2.4

livenessProbe:

enabled: true

failureThreshold: 3

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

nodeSelector: {}

podAnnotations: {}

podLabels: {}

podSecurityContext: {}

readinessProbe:

enabled: true

failureThreshold: 3

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

replicaCount: 1

resources: {}

securityContext:

runAsNonRoot: true

runAsUser: 1000

service:

annotations: {}

labels: {}

loadBalancerIP: null

nodePort: null

type: ClusterIP

tolerations: []

ingress:

annotations:

cert-manager.io/cluster-issuer: azuredns-tpkpw

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-body-size: 4G

enabled: true

labels: {}

path: /

pathType: Prefix

tls:

- hosts:

- nextcloud.tpk.pw

secretName: nextcloud-tls

internalDatabase:

enabled: true

name: nextcloud

lifecycle: {}

livenessProbe:

enabled: true

failureThreshold: 3

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

mariadb:

architecture: standalone

auth:

database: nextcloud

existingSecret: ""

password: changeme

username: nextcloud

enabled: false

global:

defaultStorageClass: ""

primary:

persistence:

accessMode: ReadWriteOnce

enabled: false

existingClaim: ""

size: 8Gi

storageClass: ""

metrics:

affinity: {}

enabled: false

https: false

image:

pullPolicy: IfNotPresent

repository: xperimental/nextcloud-exporter

tag: 0.6.2

info:

apps: false

nodeSelector: {}

podAnnotations: {}

podLabels: {}

podSecurityContext: {}

replicaCount: 1

resources: {}

securityContext:

runAsNonRoot: true

runAsUser: 1000

server: ""

service:

annotations:

prometheus.io/port: "9205"

prometheus.io/scrape: "true"

labels: {}

loadBalancerIP: null

type: ClusterIP

serviceMonitor:

enabled: false

interval: 30s

jobLabel: ""

labels: {}

namespace: ""

namespaceSelector: null

scrapeTimeout: ""

timeout: 5s

tlsSkipVerify: false

token: ""

tolerations: []

nameOverride: ""

nextcloud:

configs:

proxies.config.php: |-

<?php

$CONFIG = array (

'overwriteprotocol' => 'https'

);

containerPort: 80

datadir: /var/www/html/data

defaultConfigs:

.htaccess: true

apache-pretty-urls.config.php: true

apcu.config.php: true

apps.config.php: true

autoconfig.php: true

imaginary.config.php: false

redis.config.php: true

reverse-proxy.config.php: true

s3.config.php: true

smtp.config.php: true

swift.config.php: true

upgrade-disable-web.config.php: true

existingSecret:

enabled: false

passwordKey: nextcloud-password

smtpHostKey: smtp-host

smtpPasswordKey: smtp-password

smtpUsernameKey: smtp-username

tokenKey: ""

usernameKey: nextcloud-username

extraEnv: null

extraInitContainers: []

extraSidecarContainers: []

extraVolumeMounts: null

extraVolumes: null

hooks:

before-starting: null

post-installation: null

post-upgrade: null

pre-installation: null

pre-upgrade: null

host: nextcloud.tpk.pw

mail:

domain: domain.com

enabled: false

fromAddress: user

smtp:

authtype: LOGIN

host: domain.com

name: user

password: pass

port: 465

secure: ssl

mariaDbInitContainer:

resources: {}

securityContext: {}

objectStore:

s3:

accessKey: ""

autoCreate: false

bucket: ""

enabled: false

existingSecret: ""

host: ""

legacyAuth: false

port: "443"

prefix: ""

region: eu-west-1

secretKey: ""

secretKeys:

accessKey: ""

bucket: ""

host: ""

secretKey: ""

sse_c_key: ""

sse_c_key: ""

ssl: true

storageClass: STANDARD

usePathStyle: false

swift:

autoCreate: false

container: ""

enabled: false

project:

domain: Default

name: ""

region: ""

service: swift

url: ""

user:

domain: Default

name: ""

password: ""

password: changeme

persistence:

subPath: null

phpConfigs: {}

podSecurityContext: {}

postgreSqlInitContainer:

resources: {}

securityContext: {}

securityContext: {}

strategy:

type: Recreate

trustedDomains:

- nextcloud.tpk.pw

update: 0

username: admin

nginx:

config:

custom: null

default: true

headers: null

containerPort: 80

enabled: false

extraEnv: []

image:

pullPolicy: IfNotPresent

repository: nginx

tag: alpine

ipFamilies:

- IPv4

resources: {}

securityContext: {}

nodeSelector: {}

persistence:

accessMode: ReadWriteOnce

annotations: {}

enabled: false

nextcloudData:

accessMode: ReadWriteOnce

annotations: {}

enabled: false

size: 8Gi

subPath: null

size: 8Gi

phpClientHttpsFix:

enabled: false

protocol: https

podAnnotations: {}

postgresql:

enabled: false

global:

postgresql:

auth:

database: nextcloud

existingSecret: ""

password: changeme

secretKeys:

adminPasswordKey: ""

replicationPasswordKey: ""

userPasswordKey: ""

username: nextcloud

primary:

persistence:

enabled: false

rbac:

enabled: false

serviceaccount:

annotations: {}

create: true

name: nextcloud-serviceaccount

readinessProbe:

enabled: true

failureThreshold: 3

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

redis:

auth:

enabled: true

existingSecret: ""

existingSecretPasswordKey: ""

password: changeme

enabled: false

global:

storageClass: ""

master:

persistence:

enabled: true

replica:

persistence:

enabled: true

replicaCount: 1

resources: {}

securityContext: {}

service:

annotations: {}

loadBalancerIP: ""

nodePort: null

port: 8080

type: ClusterIP

startupProbe:

enabled: false

failureThreshold: 30

initialDelaySeconds: 30

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 5

tolerations: []

But if it helps, here is just the diff with the default helm values:

$ diff myvalues defaultvalues

97,102c97,98

< annotations:

< cert-manager.io/cluster-issuer: azuredns-tpkpw

< kubernetes.io/ingress.class: nginx

< kubernetes.io/tls-acme: "true"

< nginx.ingress.kubernetes.io/proxy-body-size: 4G

< enabled: true

---

> annotations: {}

> enabled: false

106,109d101

< tls:

< - hosts:

< - nextcloud.tpk.pw

< secretName: nextcloud-tls

179,184c171

< configs:

< proxies.config.php: |-

< <?php

< $CONFIG = array (

< 'overwriteprotocol' => 'https'

< );

---

> configs: {}

219c206

< host: nextcloud.tpk.pw

---

> host: nextcloud.kube.home

282,283c269

< trustedDomains:

< - nextcloud.tpk.pw

---

> trustedDomains: []

290c276,284

< headers: null

---

> headers:

> Referrer-Policy: no-referrer

> Strict-Transport-Security: ""

> X-Content-Type-Options: nosniff

> X-Download-Options: noopen

> X-Frame-Options: SAMEORIGIN

> X-Permitted-Cross-Domain-Policies: none

> X-Robots-Tag: noindex, nofollow

> X-XSS-Protection: 1; mode=block

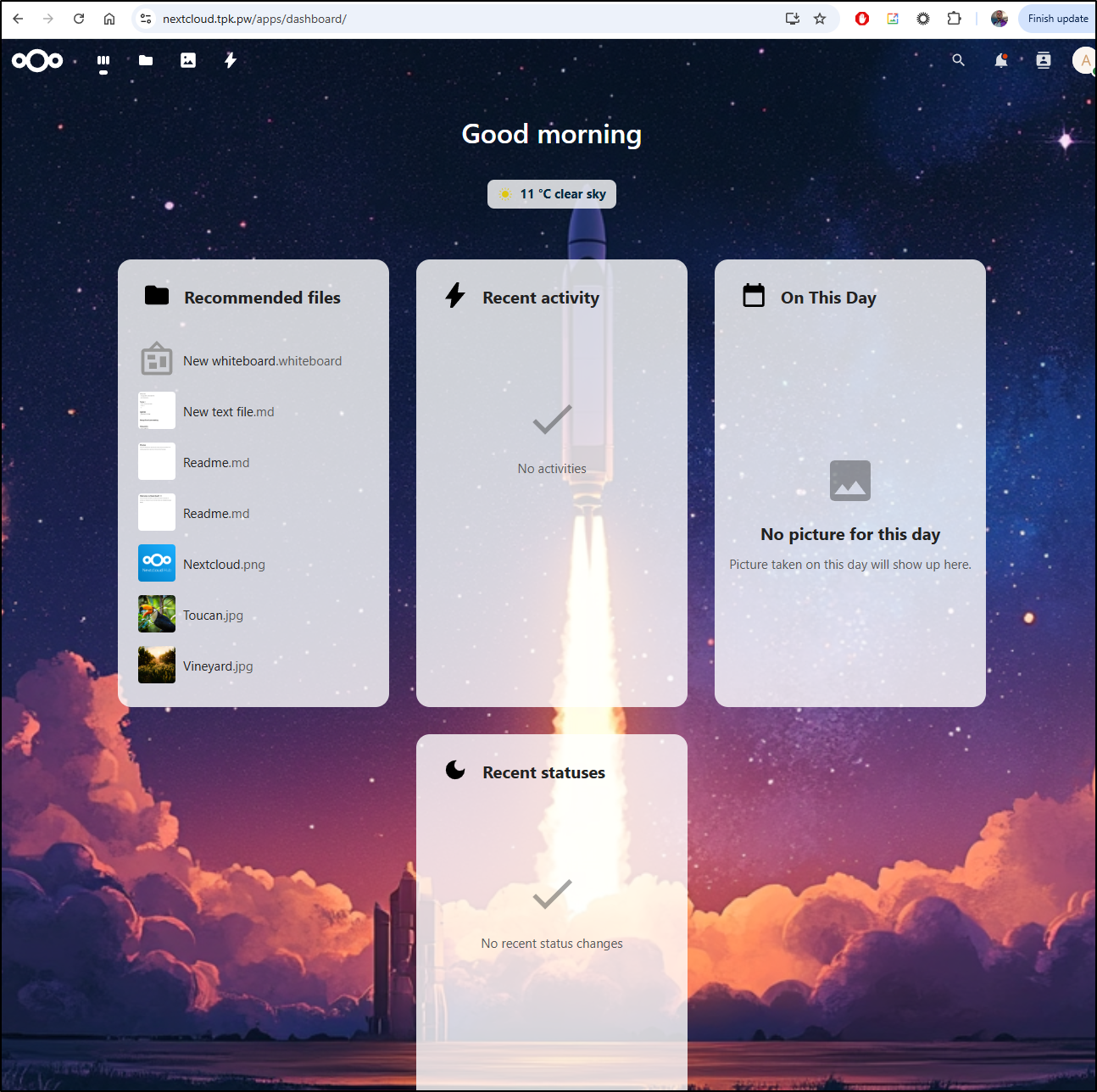

Once in, and having changed the admin password, we can see the landing page

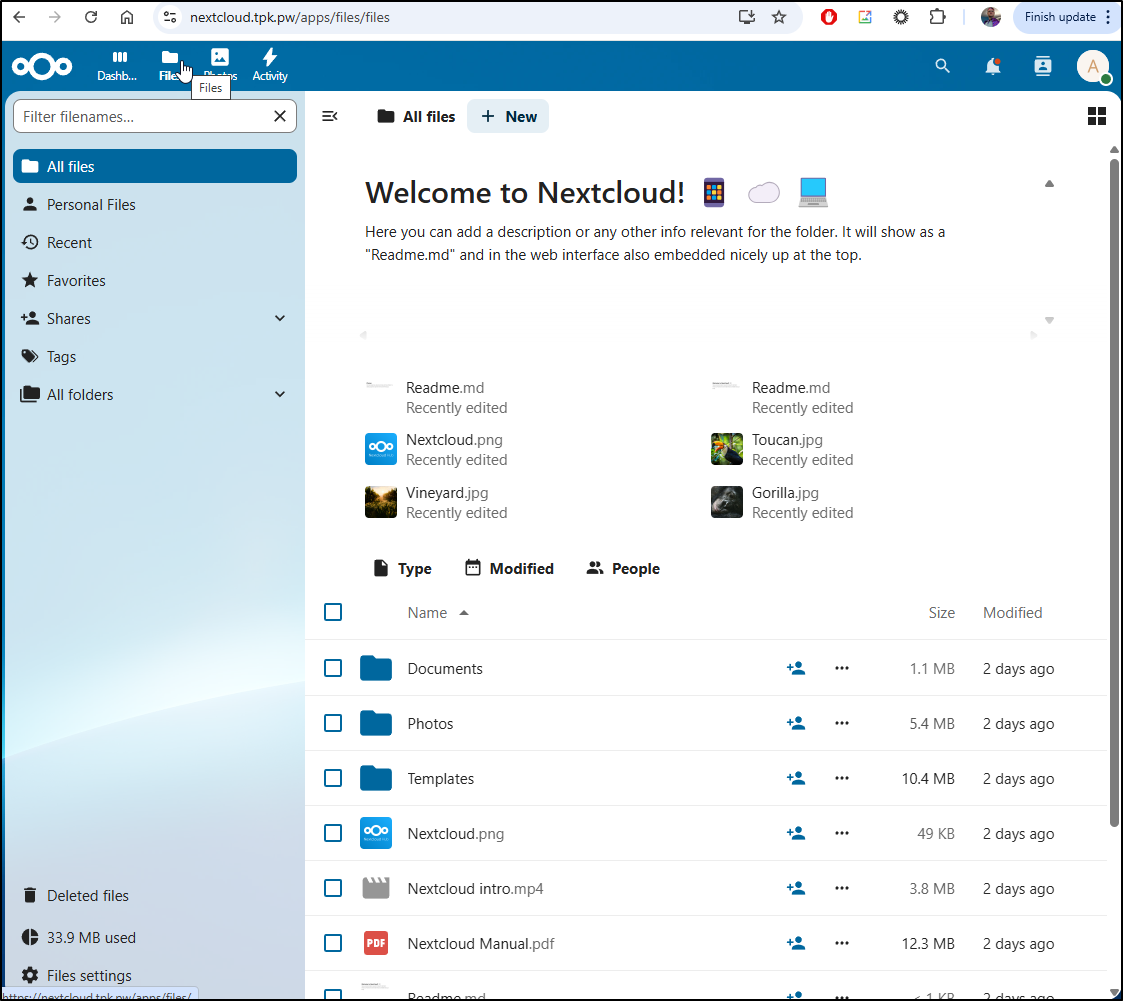

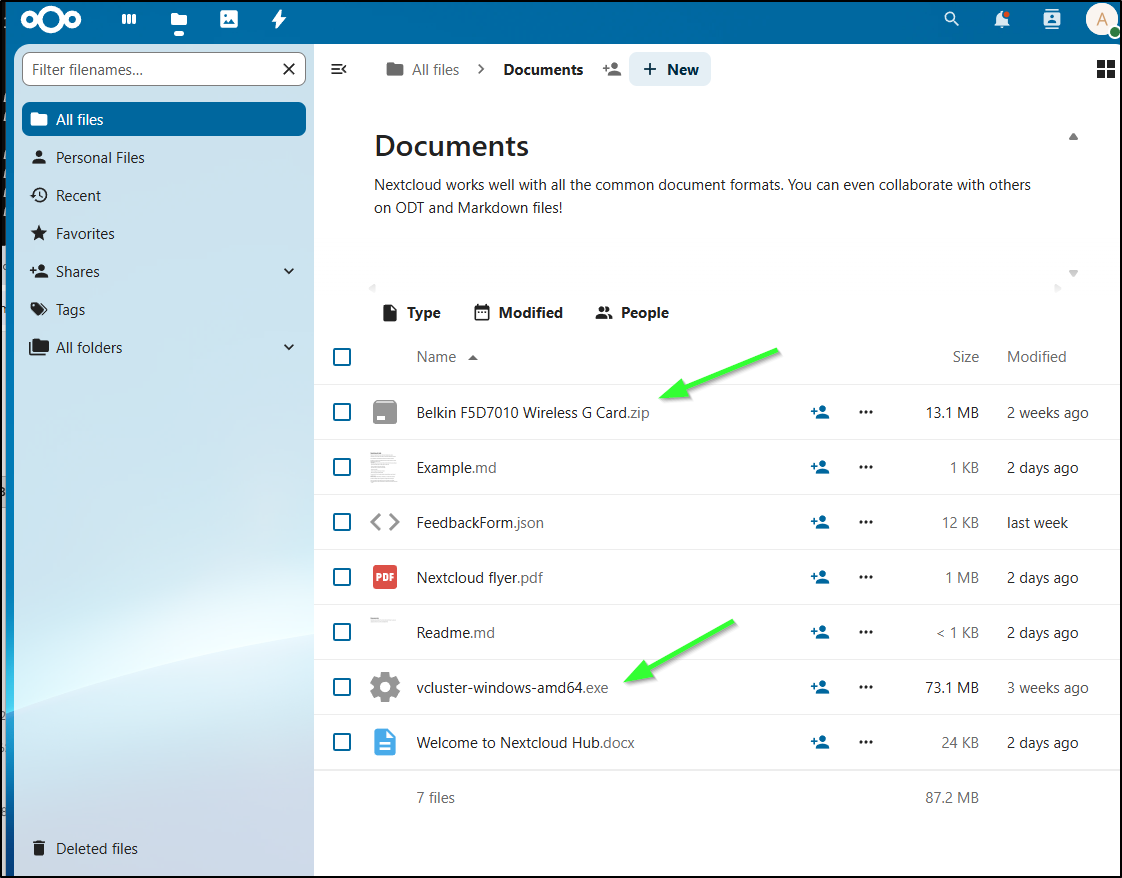

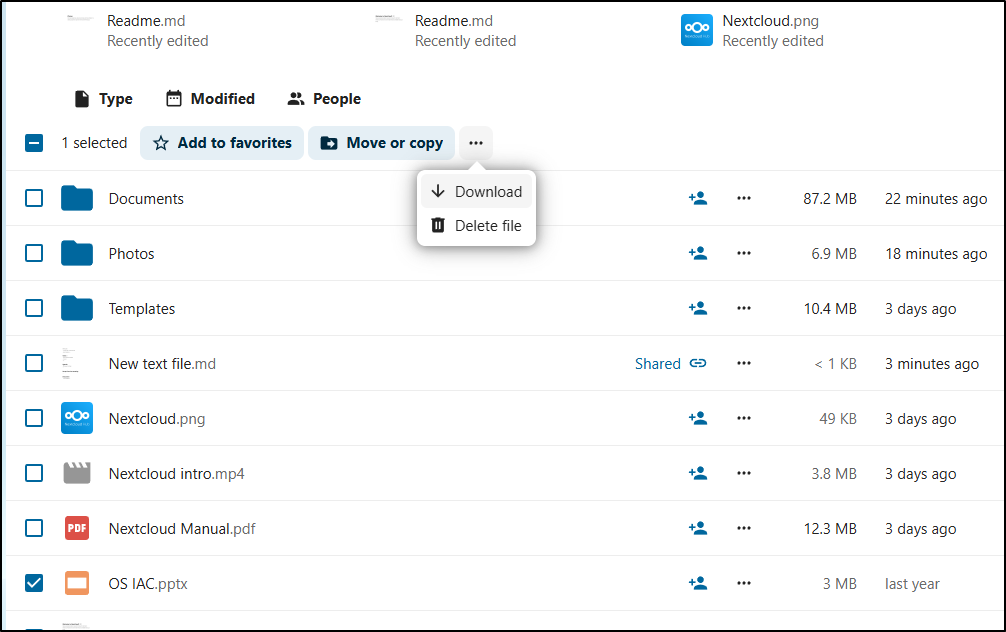

If I click on Files, we can see some default files that are there for us

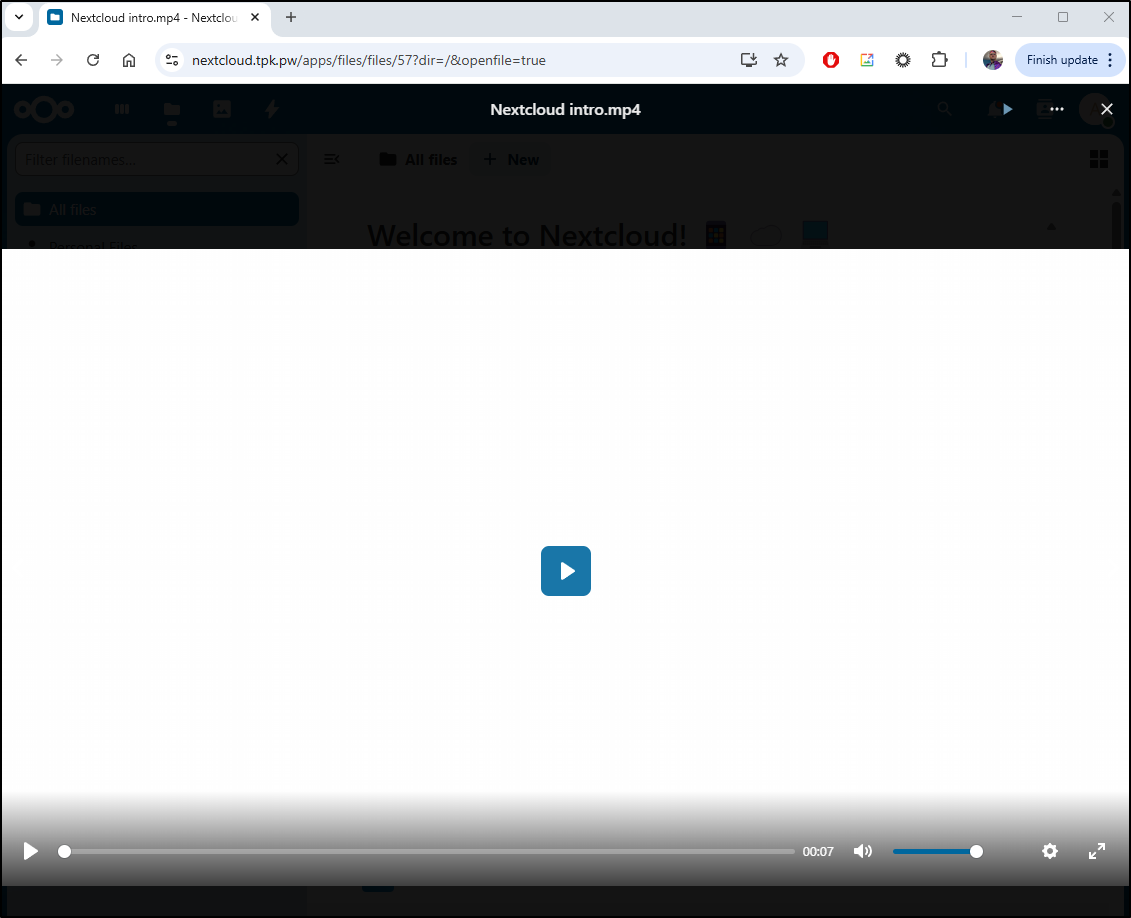

Video files, when clicked play in browser

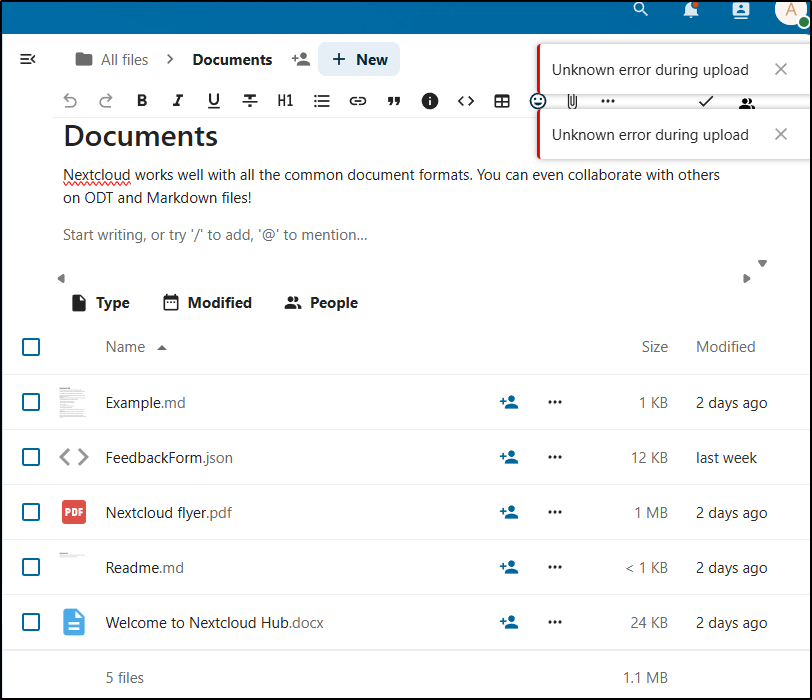

I ran into issues uploading, however. Small files (like the “FeedbackForm.json” from my n8n demo last week) were fine, but I tried a small 13MB zip file and a 78MB exe and both failed on upload

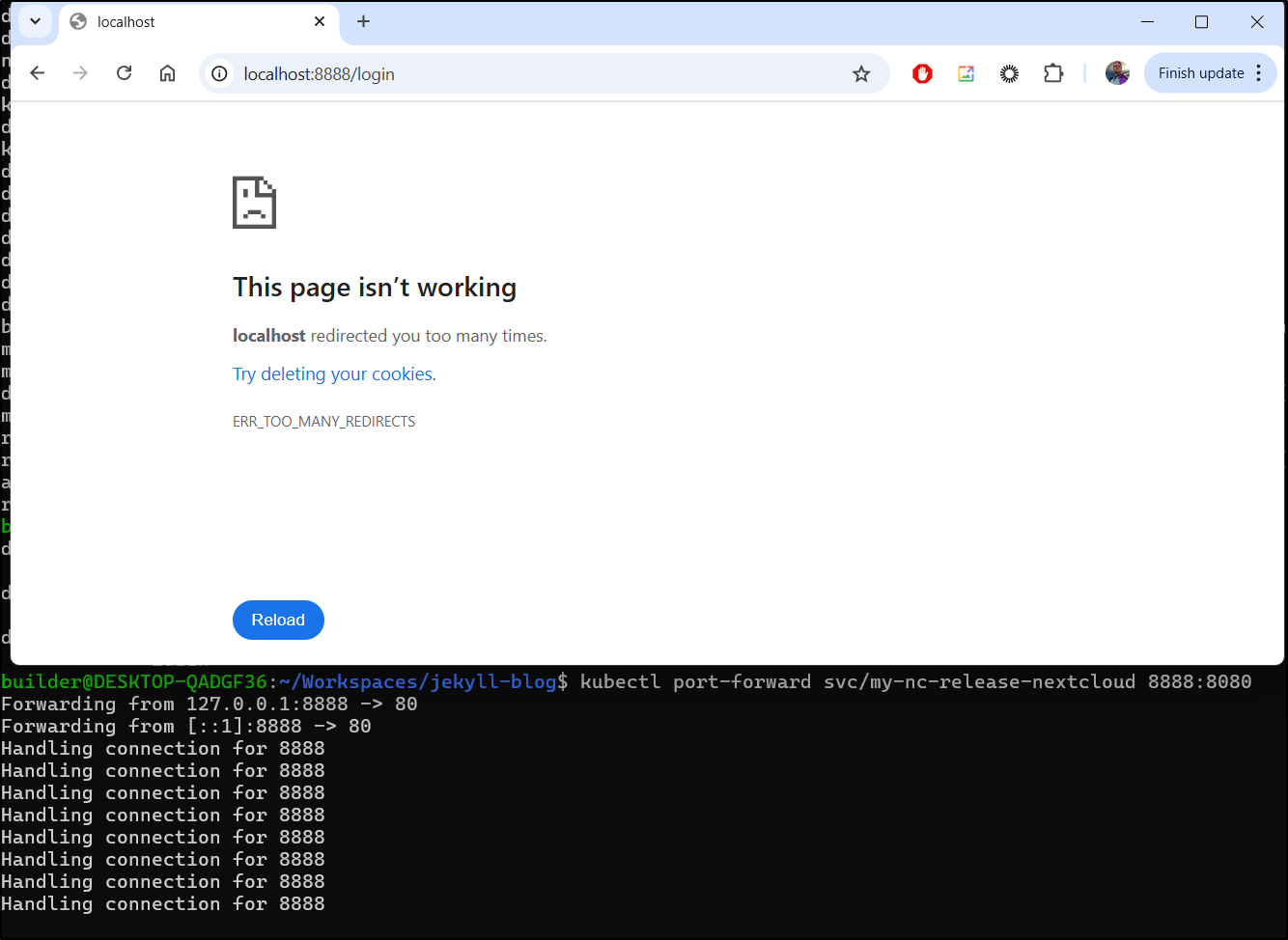

I thought perhaps I could port-forward to the service, but it just wants to redirect to https and gives up.

I suspected this might be caused by some missing Ingress annotations. I added all the ones I thought might help - not very scientific, but when in doubt, add ‘em all.

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: azuredns-tpkpw

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

meta.helm.sh/release-name: my-nc-release

meta.helm.sh/release-namespace: default

ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-body-size: "0"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

ingress.kubernetes.io/ssl-redirect: "true"

nginx.org/client-max-body-size: "0"

nginx.org/proxy-connect-timeout: "3600"

nginx.org/proxy-read-timeout: "3600"

creationTimestamp: "2025-05-20T01:44:34Z"

generation: 1

labels:

app.kubernetes.io/component: app

app.kubernetes.io/instance: my-nc-release

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: nextcloud

app.kubernetes.io/version: 30.0.10

helm.sh/chart: nextcloud-6.6.10

name: my-nc-release-nextcloud

namespace: default

resourceVersion: "72780184"

uid: 465c9817-403c-41d3-985d-6dbe3d057df0

... snip ...

I think the various max-body-size and timeout increases solved it.

Now the uploads went fine
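Editing the live Ingress works, but it leaves the cluster out of step with the values file. Below is a minimal sketch of carrying the same settings in a values override instead. It assumes the ingress-nginx controller (the one that reads `nginx.ingress.kubernetes.io/*` annotations), and `nc.ingress.yaml` is a hypothetical file name. Which annotation actually fixed the uploads was not isolated above, so treat this set as a starting point rather than a confirmed fix.

```shell
# Write an override file holding the upload-related annotations.
# nc.ingress.yaml is a hypothetical file name.
cat > nc.ingress.yaml <<'EOF'
ingress:
  annotations:
    # "0" disables the request body size check in NGINX
    nginx.ingress.kubernetes.io/proxy-body-size: "0"
    # timeouts are in seconds
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
EOF

# Show what was written
cat nc.ingress.yaml
```

The file could then be layered on top of the main values with `helm upgrade --install my-nc-release nextcloud/nextcloud --values values.yaml --values nc.ingress.yaml`. When two files set the same key, the right-most `--values` file wins.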

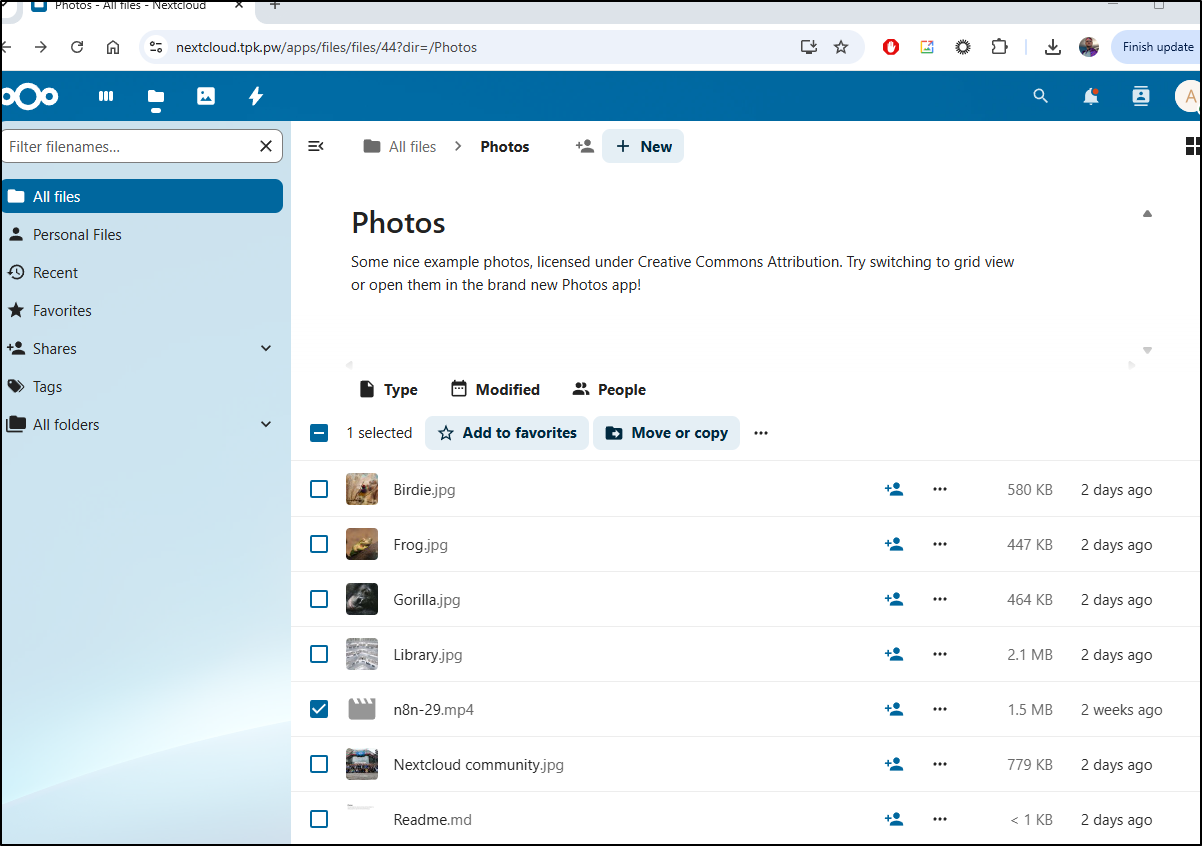

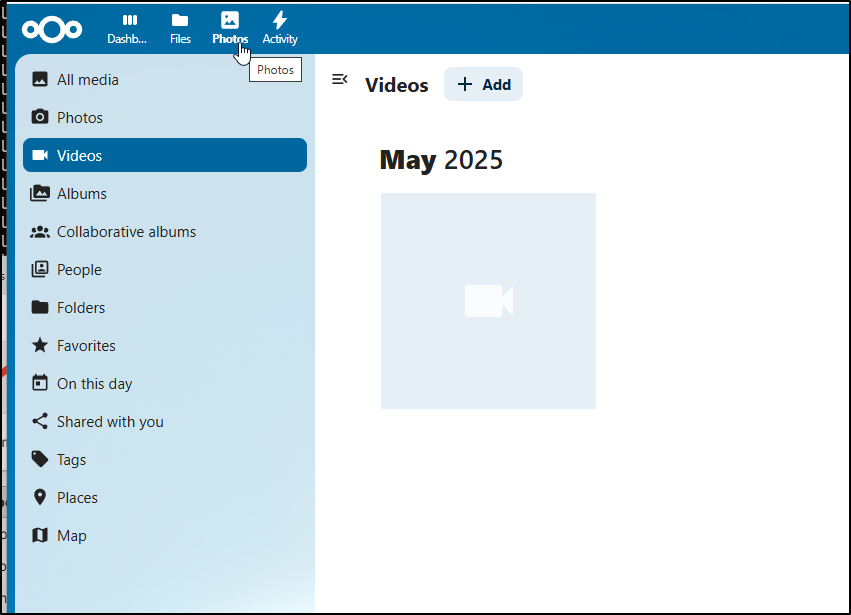

Videos I found a bit tricky. There is no videos folder by default, but if you upload a video file into the “Photos” folder, it will show up in the Videos section of Photos

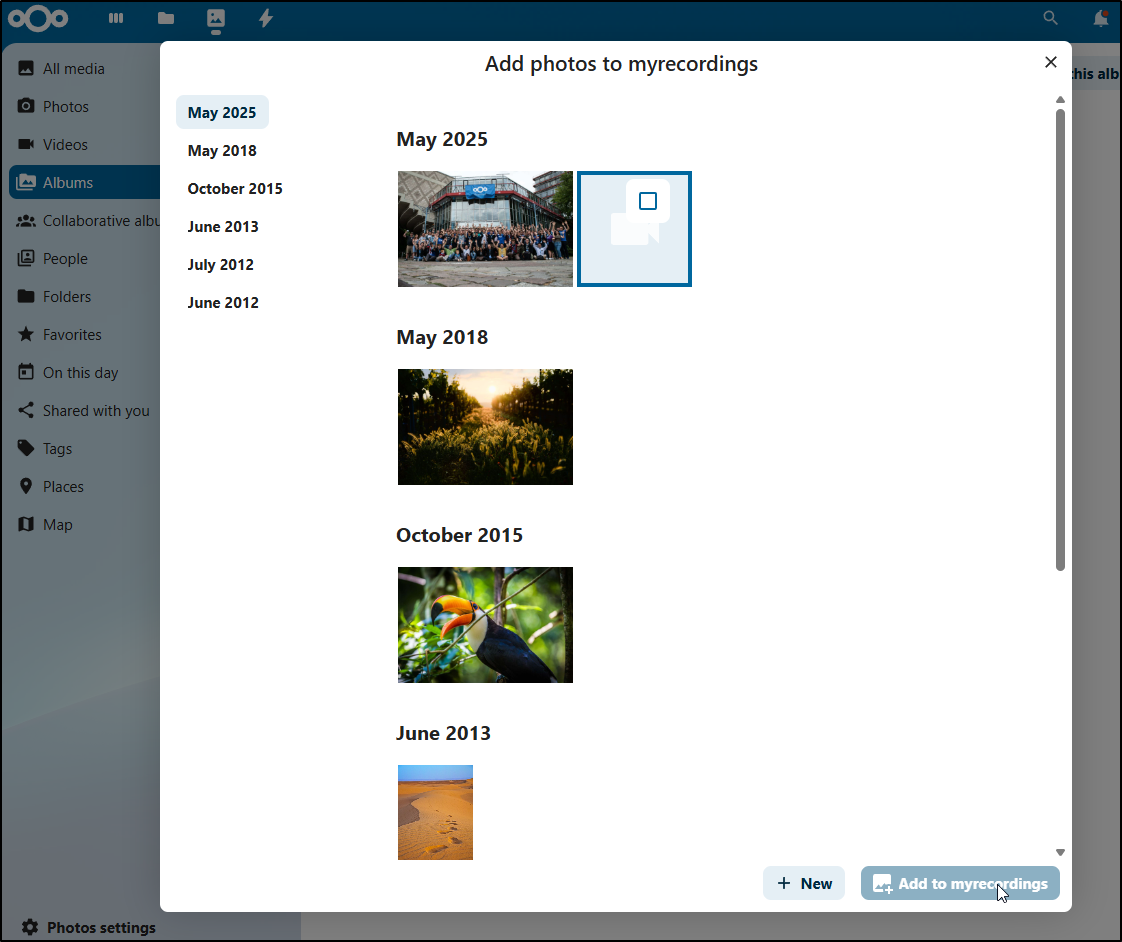

I can then make an album (e.g. “myrecordings”), then add the video to the album

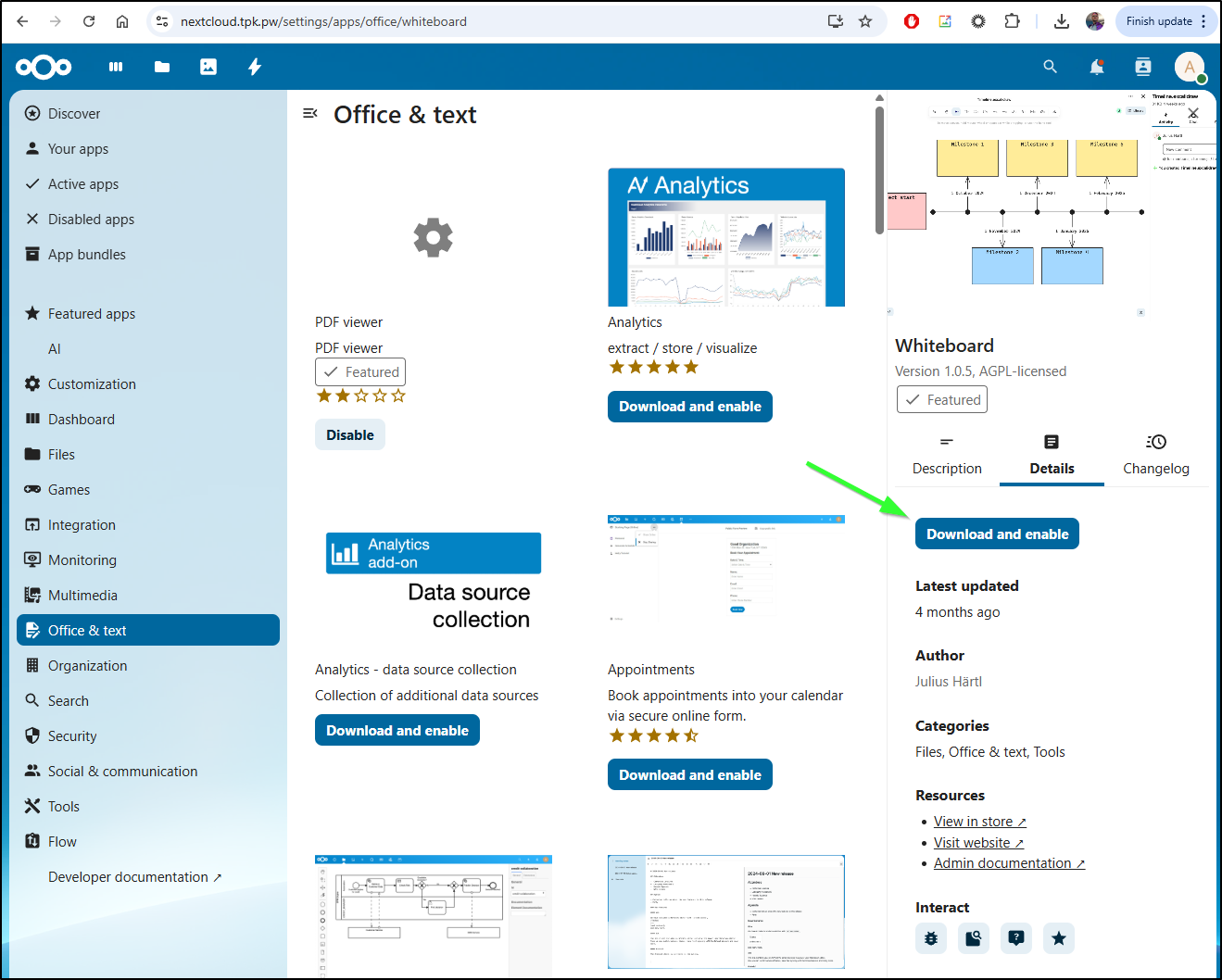

NextCloud is meant to do a whole lot more than just be a glorified Dropbox. It has an “App Store” and a lot of useful apps there.

For instance, we can go to “Office and Text” and see a Whiteboard app we can download and enable

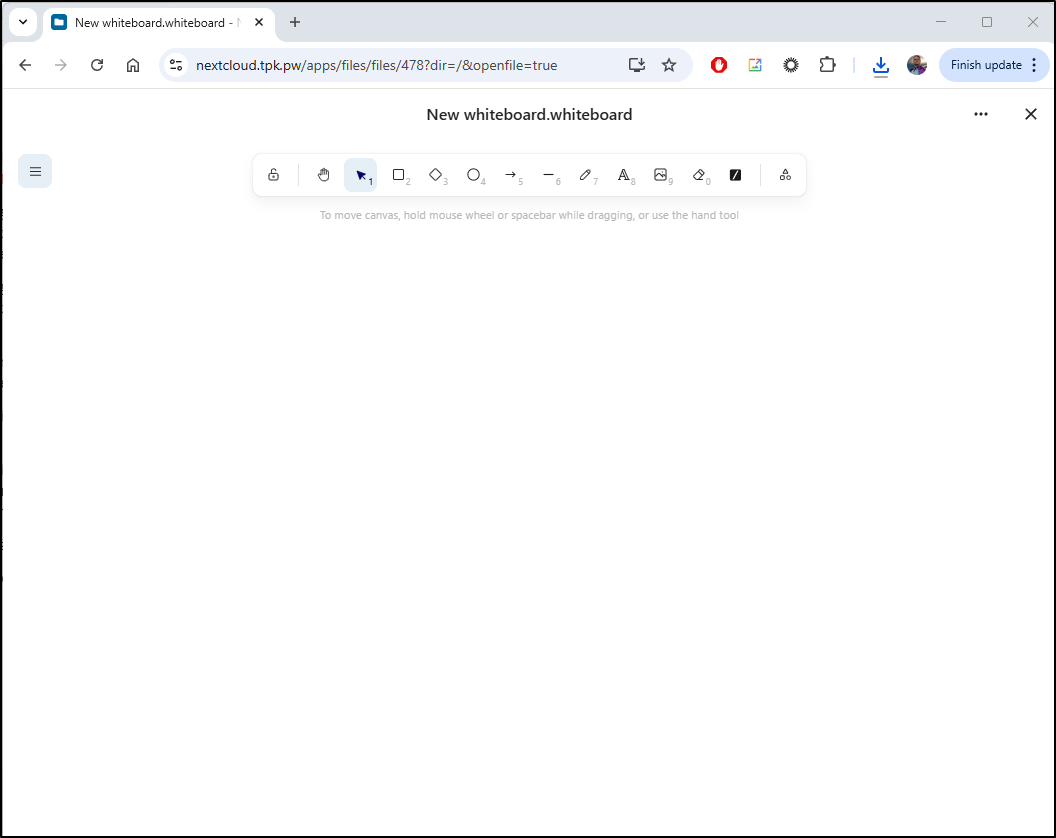

The docs said it requires another server to run, but I found I could create a whiteboard without issue:

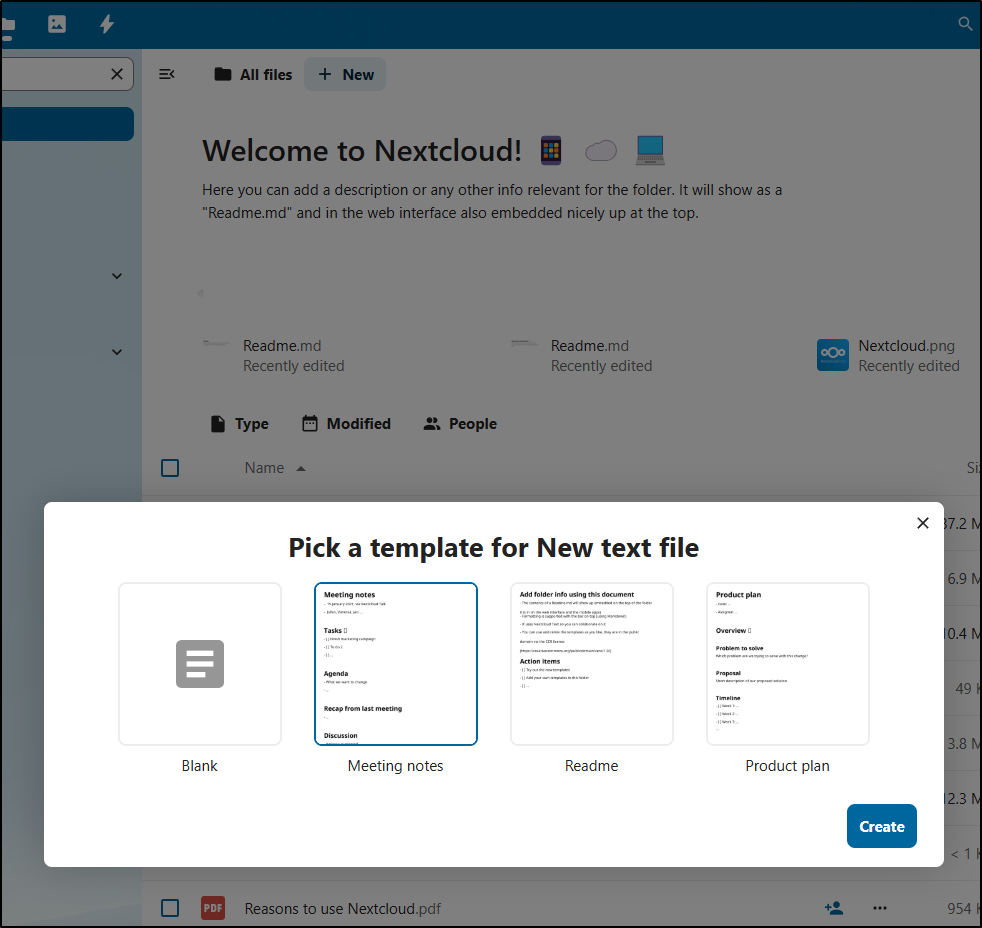

I then tried an “untested” Markdown editor. When making a text file, I could now pick a template

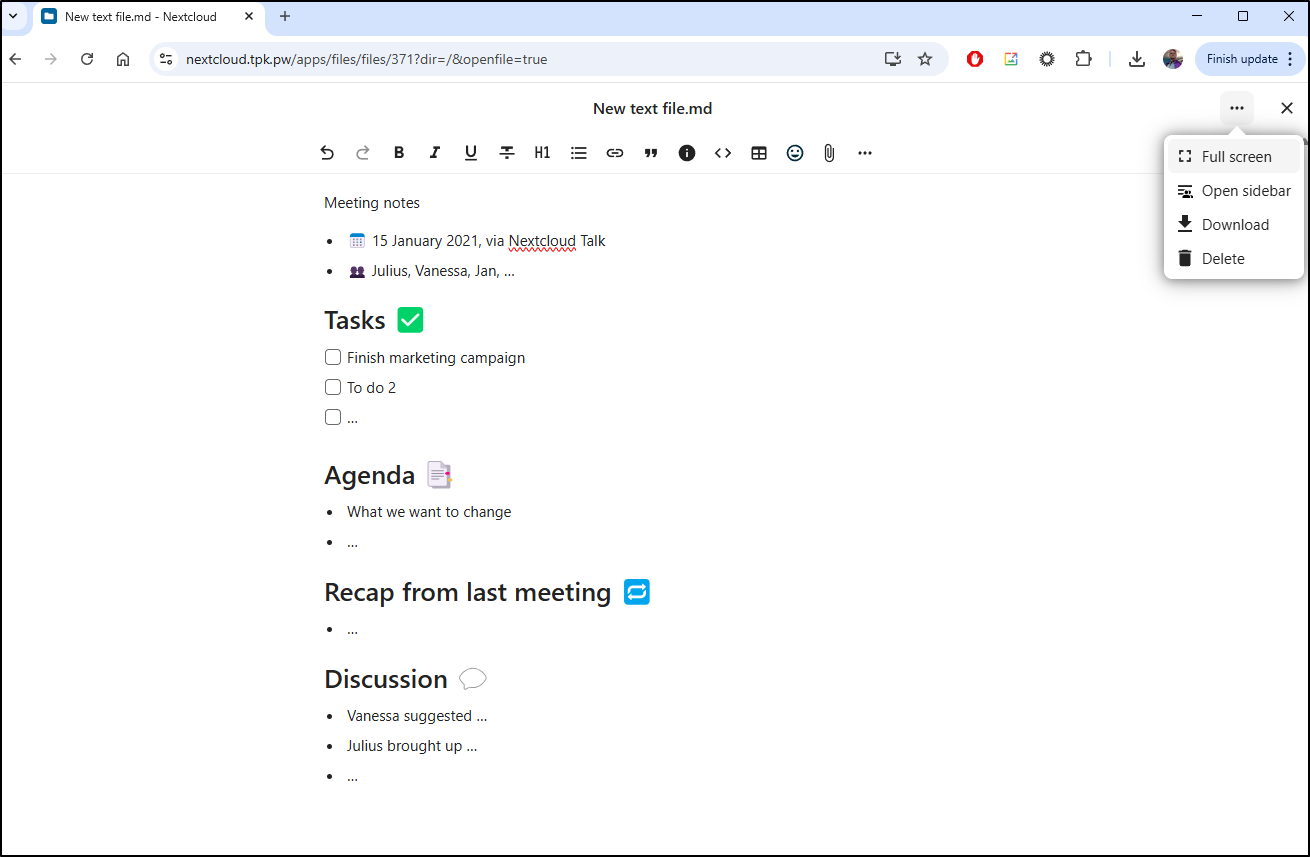

Which gave me a decent WYSIWYG editor

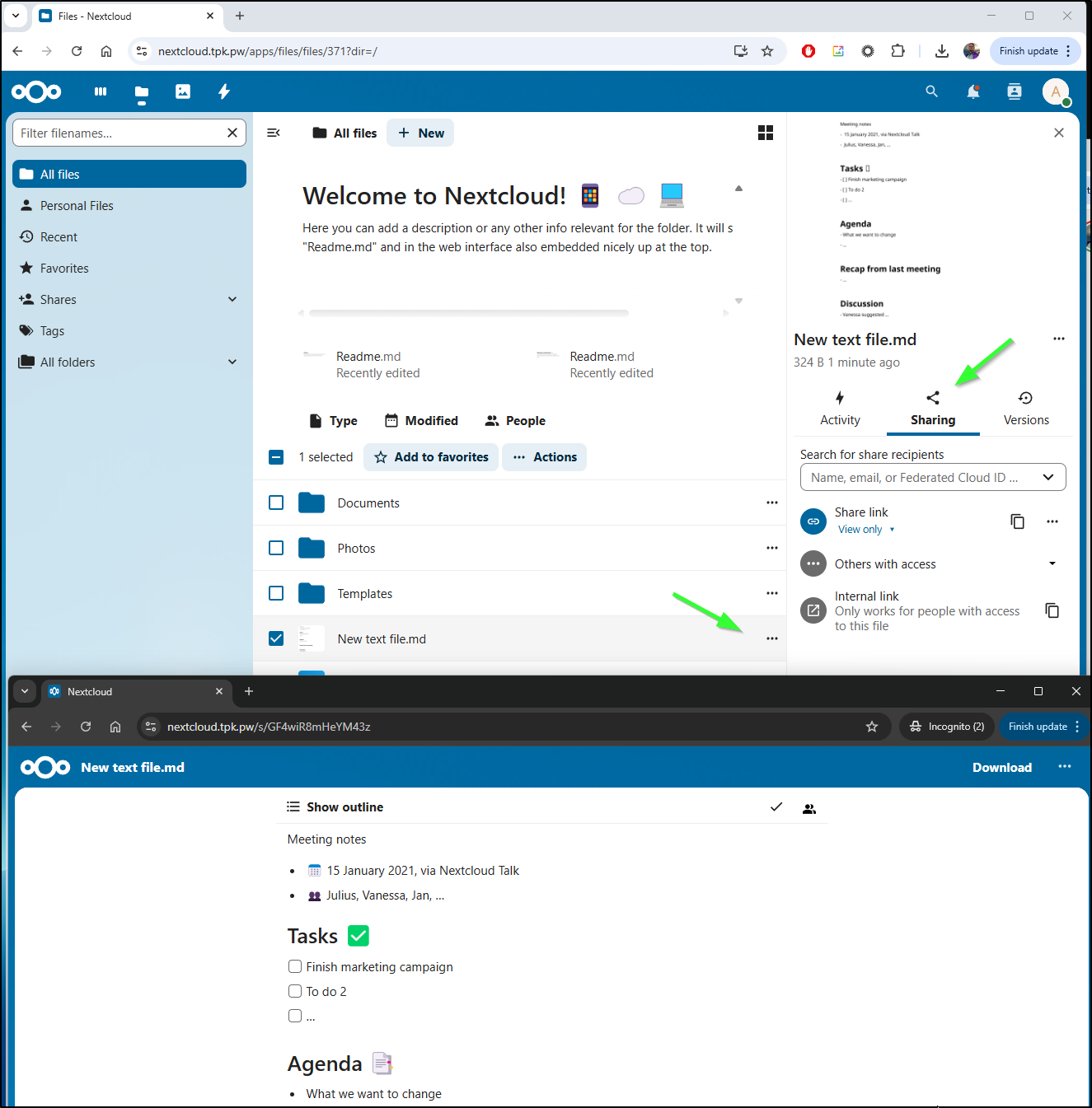

One rather useful feature is that you can create an anonymous link and it will render the Markdown page for people viewing it. Good for notes or a presentation.

I was hoping this could work for PPTX presentations, but out of the box it can display PDFs but not PPTX files (they just download)

Users

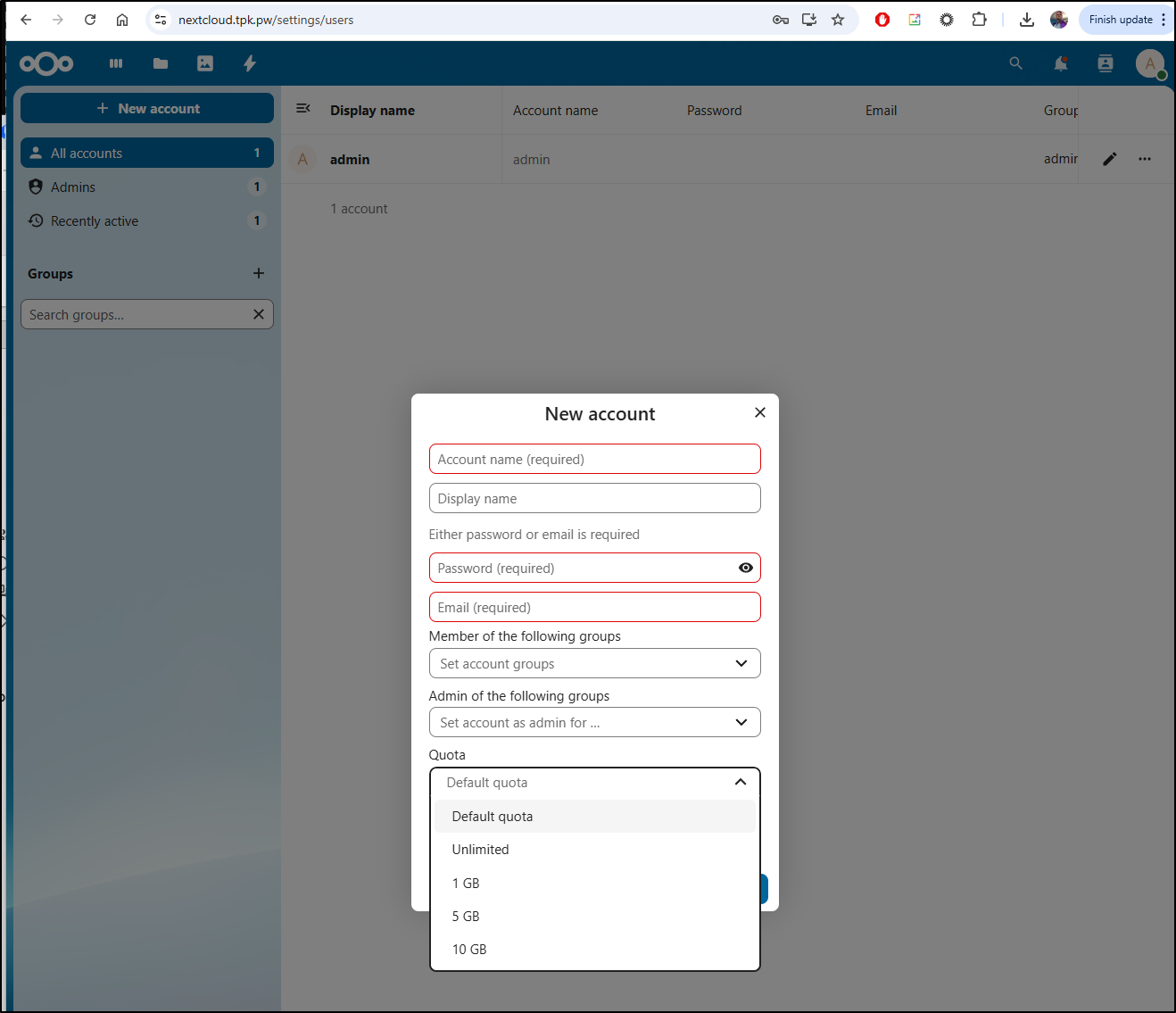

We can add new accounts in Administration as well as set quotas

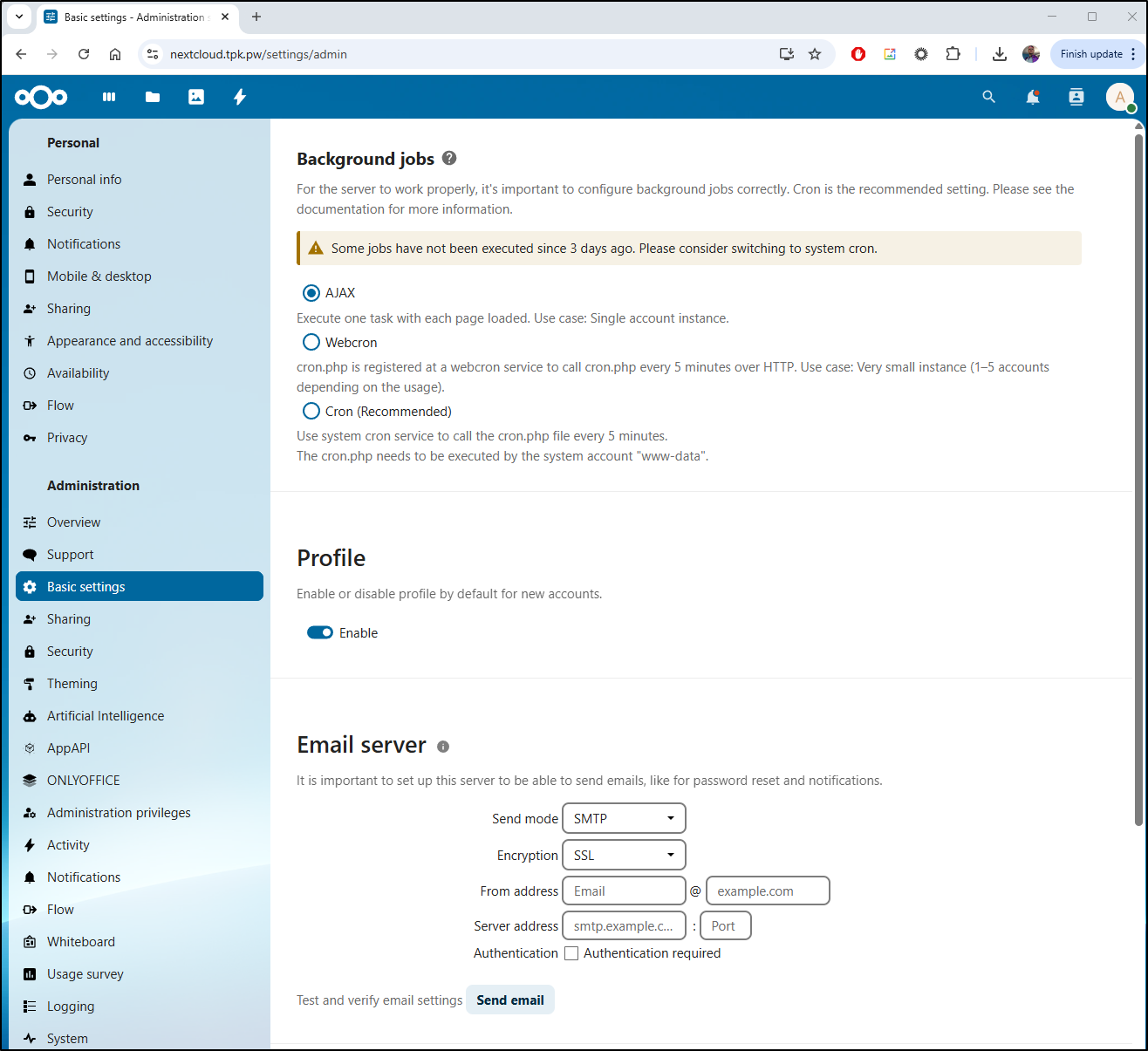

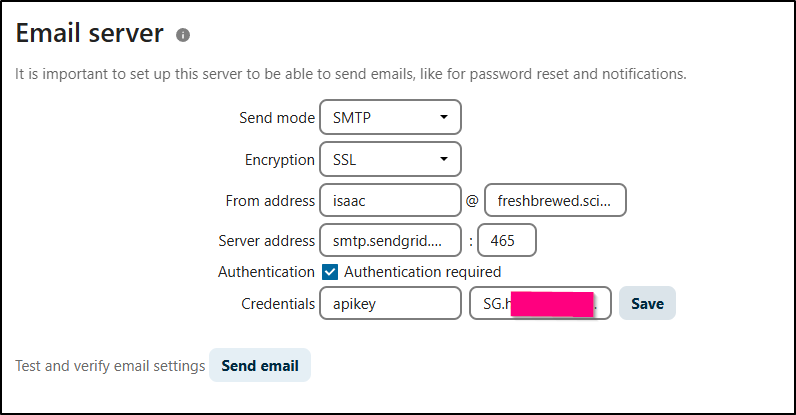

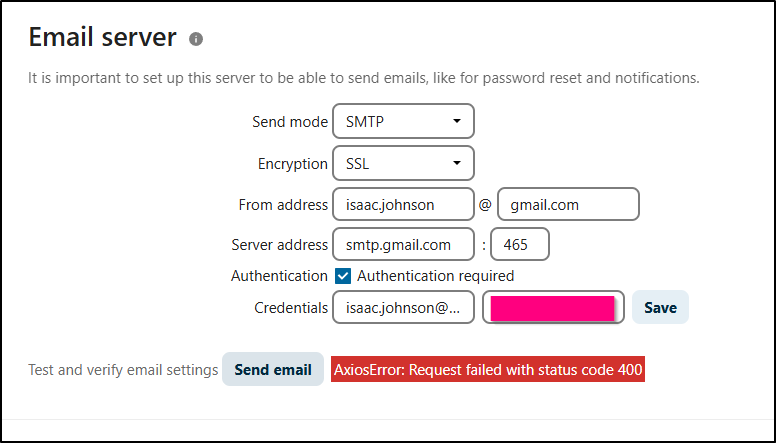

If we want things like password resets to work, we’ll need to set up the email server, either via the helm chart or in the UI under “Basic Settings”

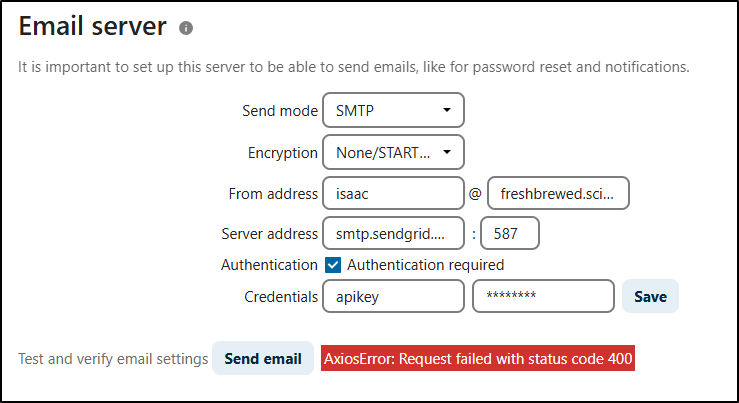

However, I found no working combination of settings for SendGrid.

In testing, they all gave me an AxiosError.

Same with my Gmail credentials, so I know it’s not the “.science” extension mucking things up
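For the helm chart route, the values dumped earlier include a `nextcloud.mail` block. Here is a sketch of filling it in through an override file. Every value is a placeholder, `nc.mail.yaml` is a hypothetical file name, and this has not been tested against SendGrid or Gmail, so it may well hit the same error as the UI.

```shell
# Write a mail override using the keys from the chart's nextcloud.mail block.
# All values are placeholders; nc.mail.yaml is a hypothetical file name.
cat > nc.mail.yaml <<'EOF'
nextcloud:
  mail:
    enabled: true
    # The sender address is built as fromAddress@domain
    fromAddress: nextcloud
    domain: example.com
    smtp:
      host: smtp.example.com
      port: 465
      secure: ssl
      authtype: LOGIN
      name: smtp-user
      password: smtp-password
EOF

# Show what was written
cat nc.mail.yaml
```

The same dump also lists `smtpUsernameKey` and `smtpPasswordKey` under `nextcloud.existingSecret`, which looks like the way to keep the credentials out of a plain values file.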

Themes

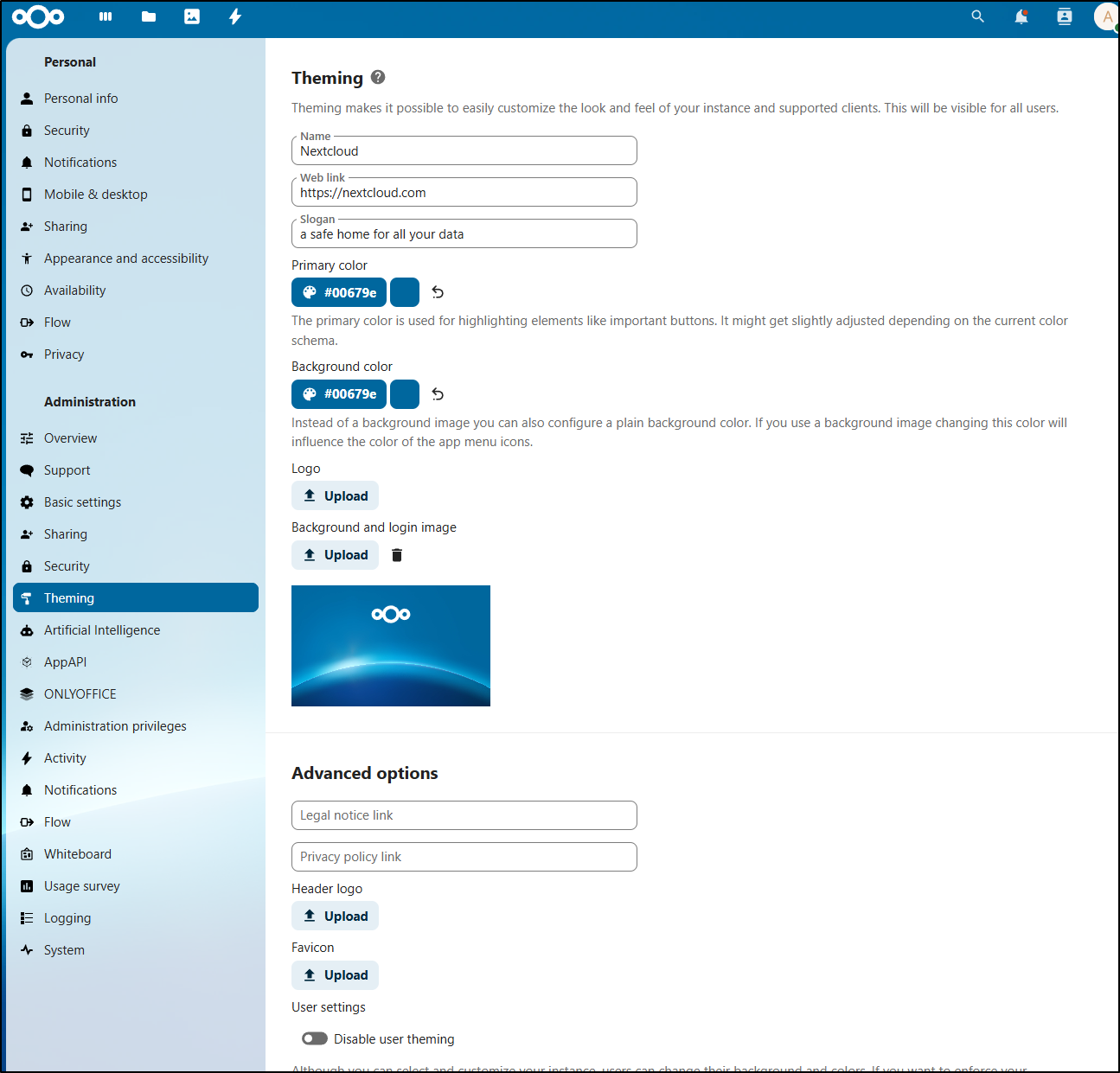

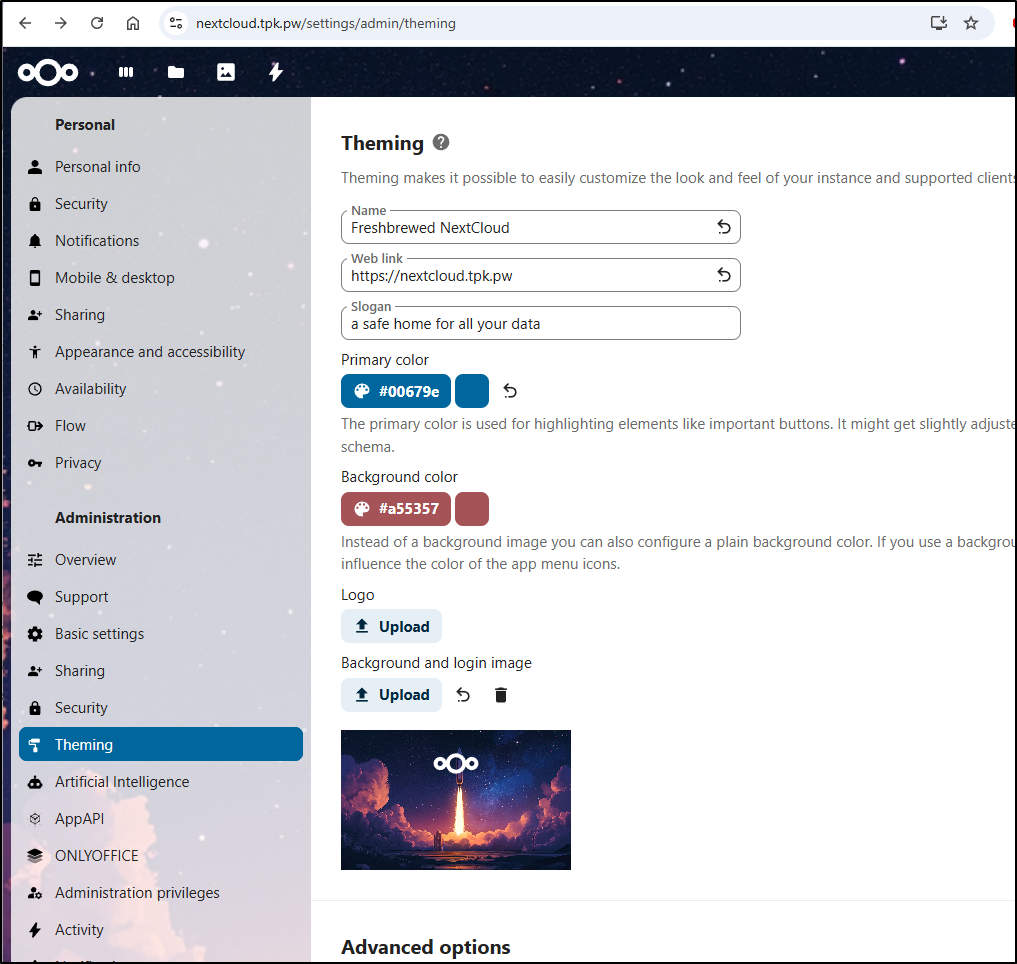

We can tweak a lot of settings related to look and feel under “Theming”

I noticed it picked up a better background colour after I uploaded my own background

I can customize my dashboard to have recent files, the weather and pictures from this day in the past

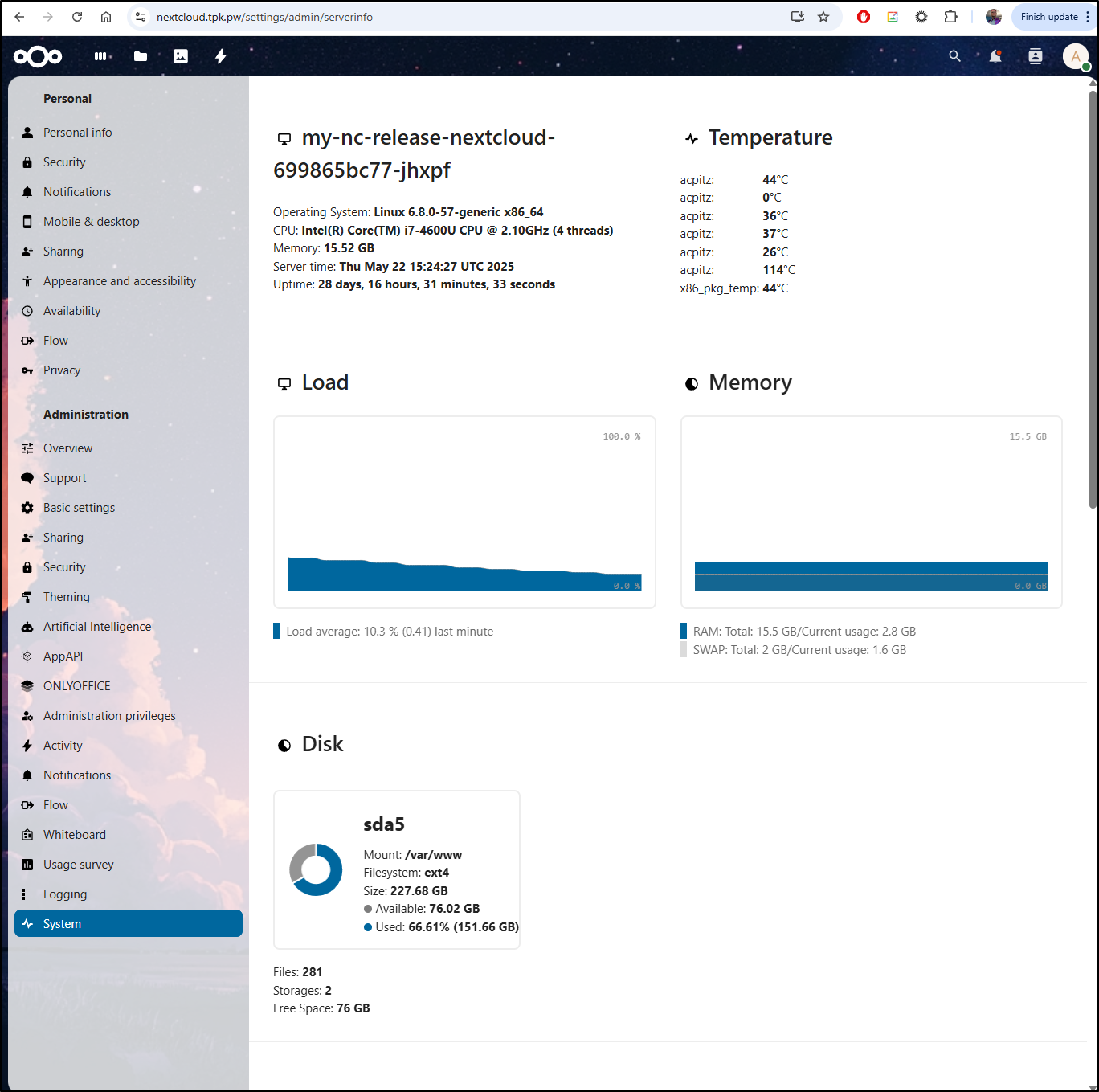

System settings

It’s running as a container, but it still picked up on temps and hardware configurations

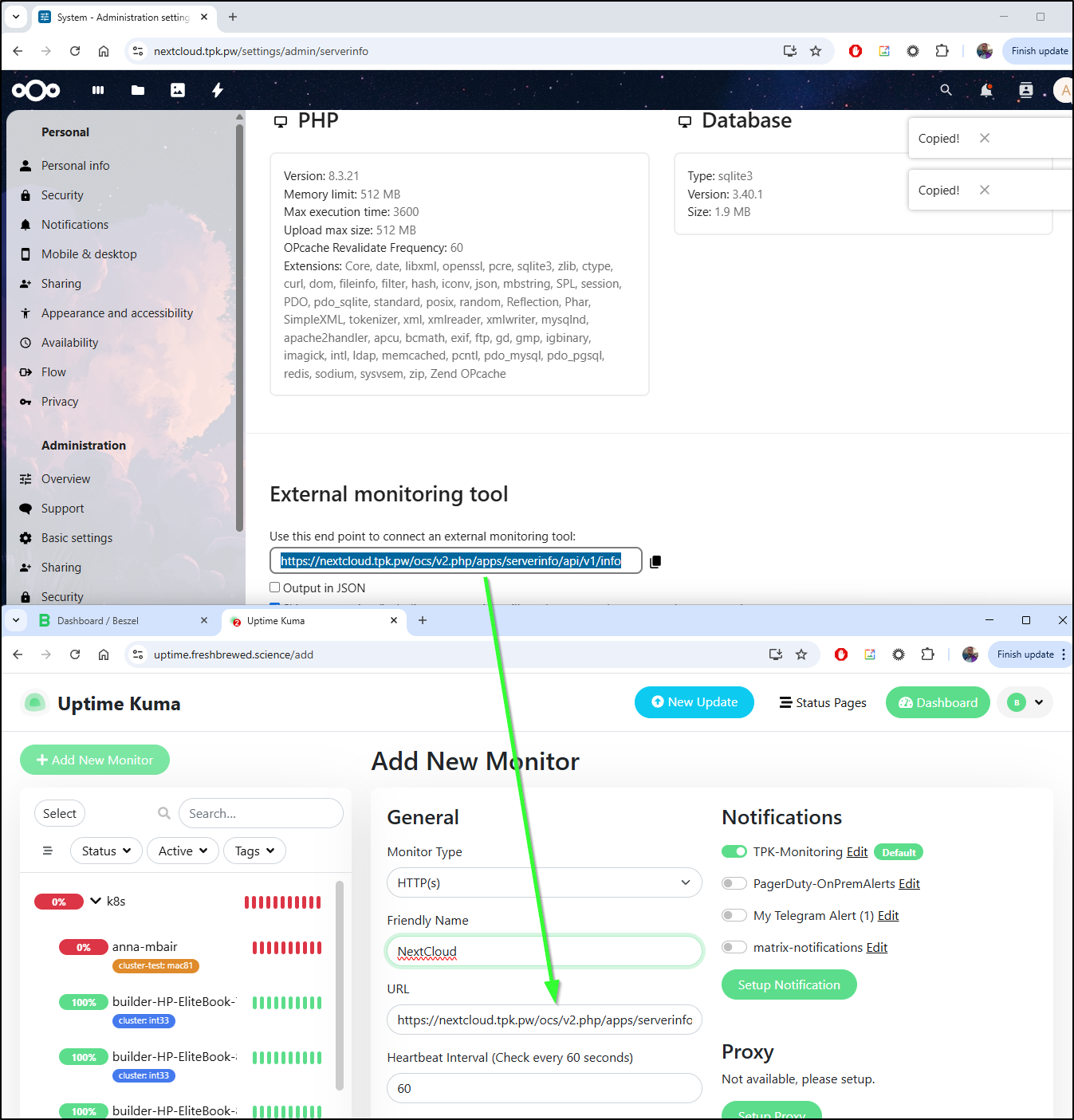

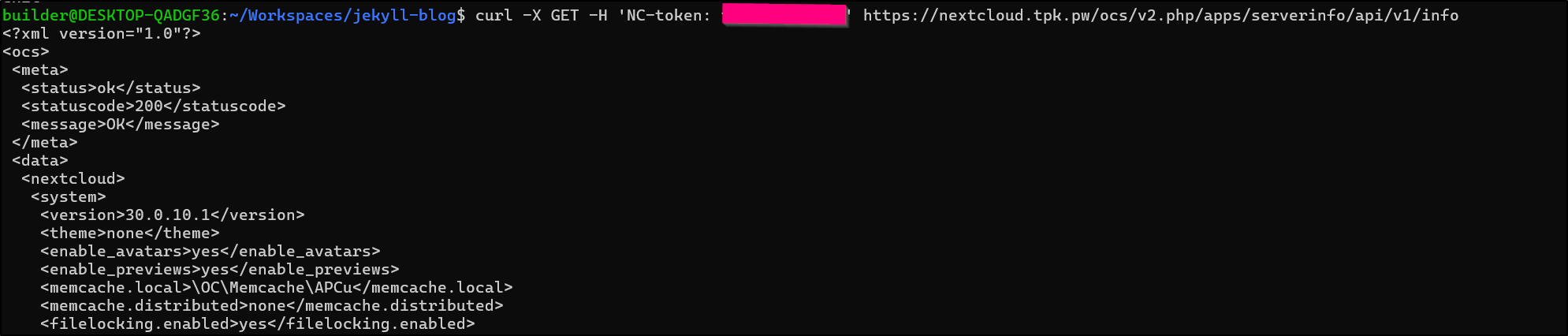

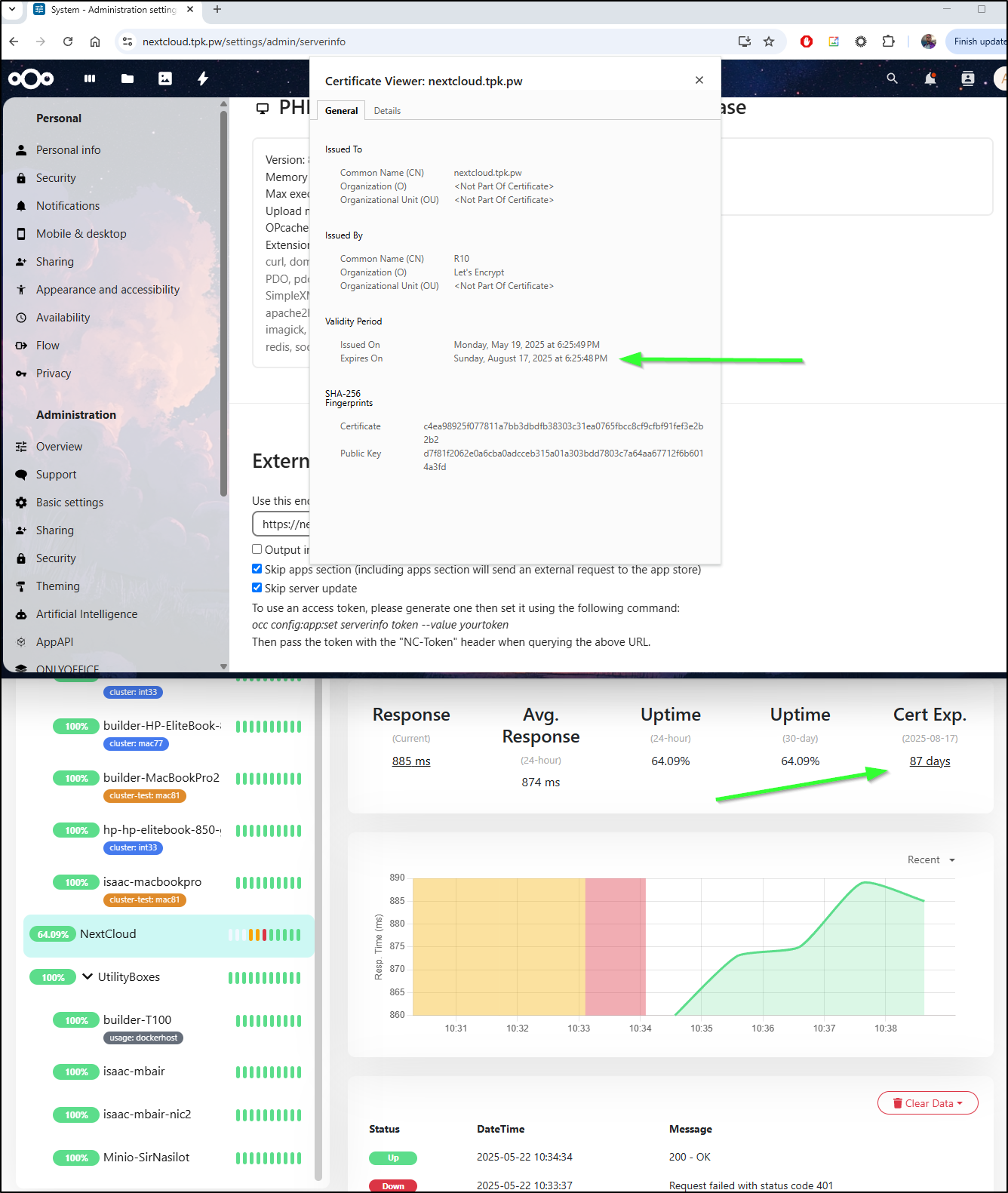

Under “External Monitoring Tool”, we can pull a URL that I could put in something like Uptime Kuma

However, to use this, you have to create a serverinfo token

For a k8s deployment, this means hopping on the pod and setting a value:

$ kubectl exec -it my-nc-release-nextcloud-699865bc77-jhxpf -- /bin/bash

root@my-nc-release-nextcloud-699865bc77-jhxpf:/var/www/html# ./occ config:app:set serverinfo token --value notmyrealtokenbutusewhateverhere

Config value 'token' for app 'serverinfo' is now set to 'notmyrealtokenbutusewhateverhere', stored as mixed in fast cache

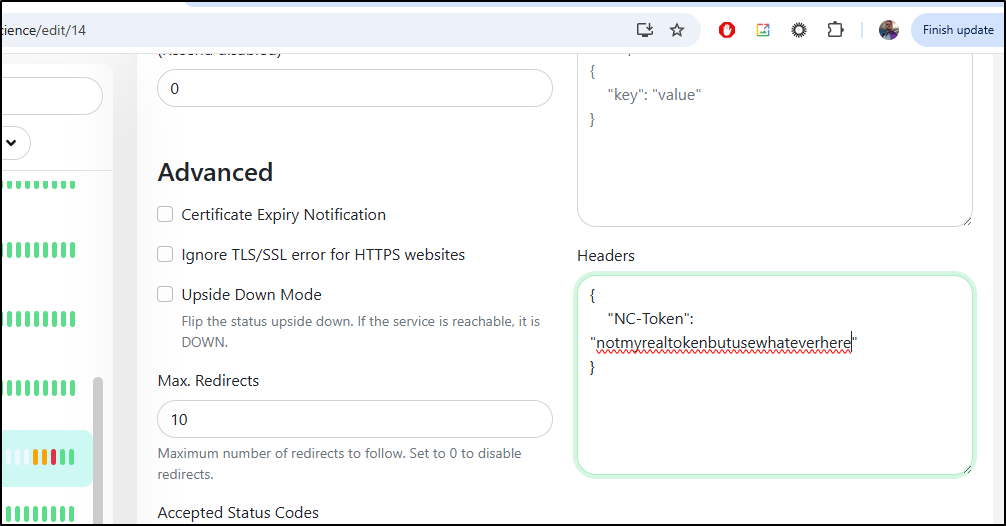

Then save it as a header in Uptime Kuma (or whatever tool you use)

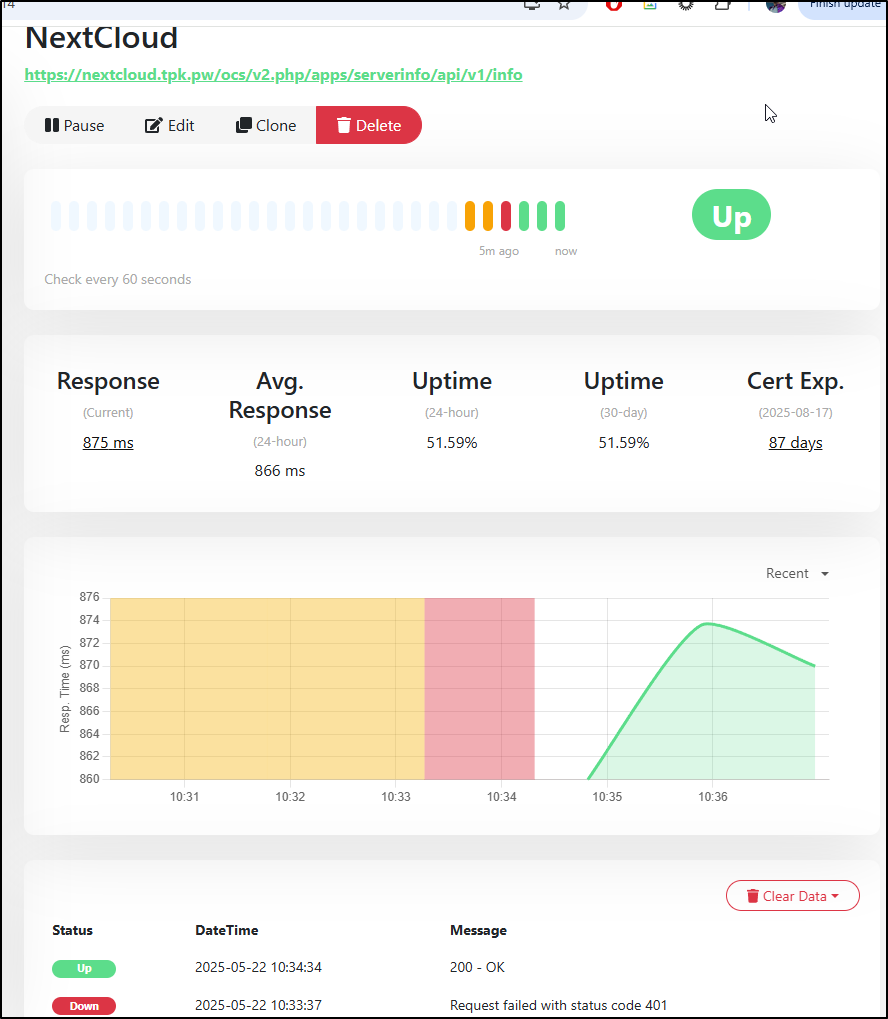

Then it works

You can also just use it with curl if desired
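A rough sketch of the whole flow from a shell. The endpoint path and the `NC-Token` header name come from the serverinfo app's documentation rather than from anything shown above, the helper names are hypothetical, and the Deployment name is assumed from the pod name in the earlier output.

```shell
# Generate a random 32-character hex token instead of typing one by hand
NC_TOKEN="$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')"

# Hypothetical helper: store the token in the serverinfo app.
# Assumes the Deployment is named my-nc-release-nextcloud, matching the pod above.
nc_set_token() {
  kubectl exec deploy/my-nc-release-nextcloud -- \
    ./occ config:app:set serverinfo token --value "$NC_TOKEN"
}

# Hypothetical helper: query the monitoring endpoint, passing the token as a header
nc_serverinfo() {
  curl -fsS -H "NC-Token: ${NC_TOKEN}" \
    "https://nextcloud.tpk.pw/ocs/v2.php/apps/serverinfo/api/v1/info?format=json"
}

echo "token is ${#NC_TOKEN} characters long"
```

Calling `nc_set_token` once and then `nc_serverinfo` should return the same JSON the monitoring tool polls. The same `NC-Token` header is what goes into Uptime Kuma.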

One handy thing I didn’t realize Uptime Kuma caught was SSL cert expiries, but indeed it is correct:

Mobile

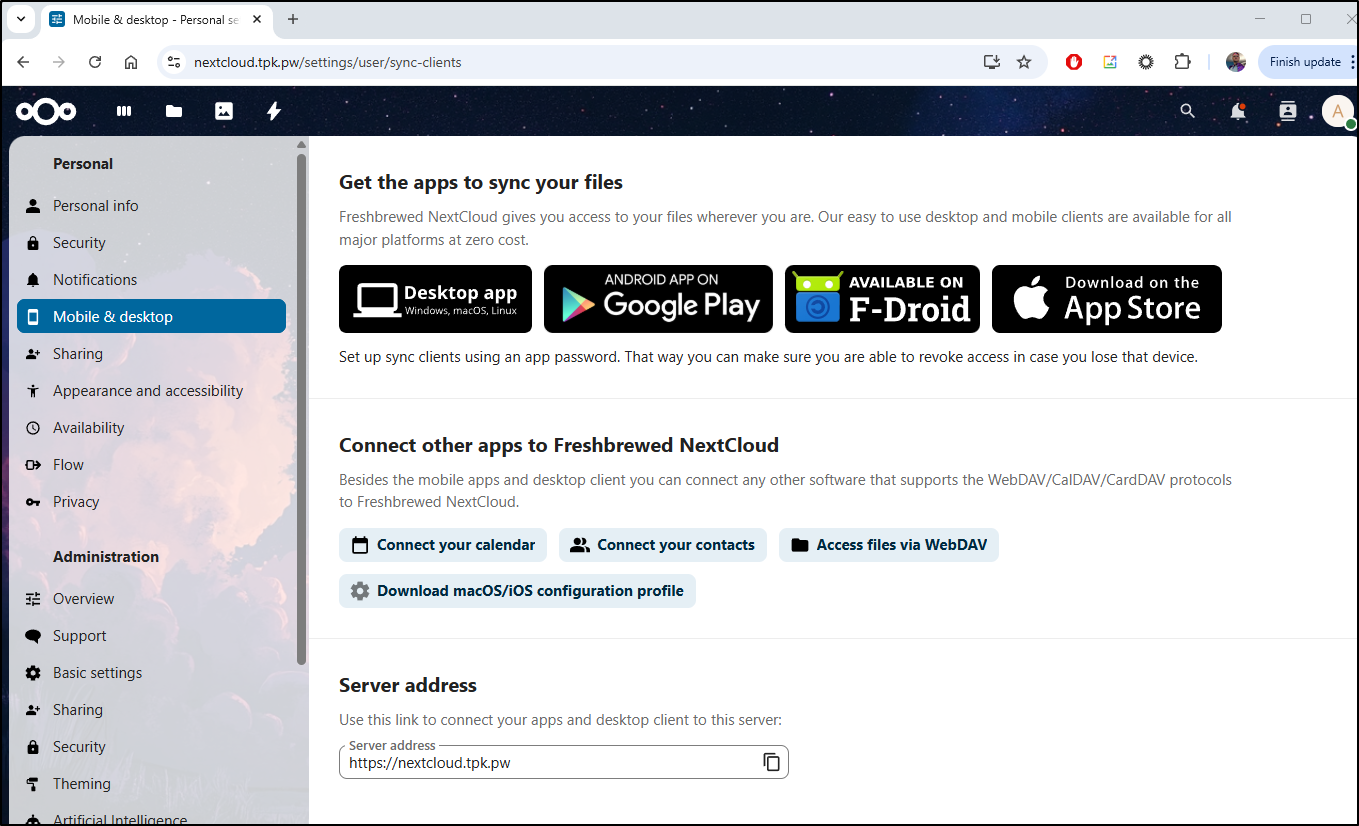

There are full apps for desktop and phones

For instance, I can install it from the Google Play store

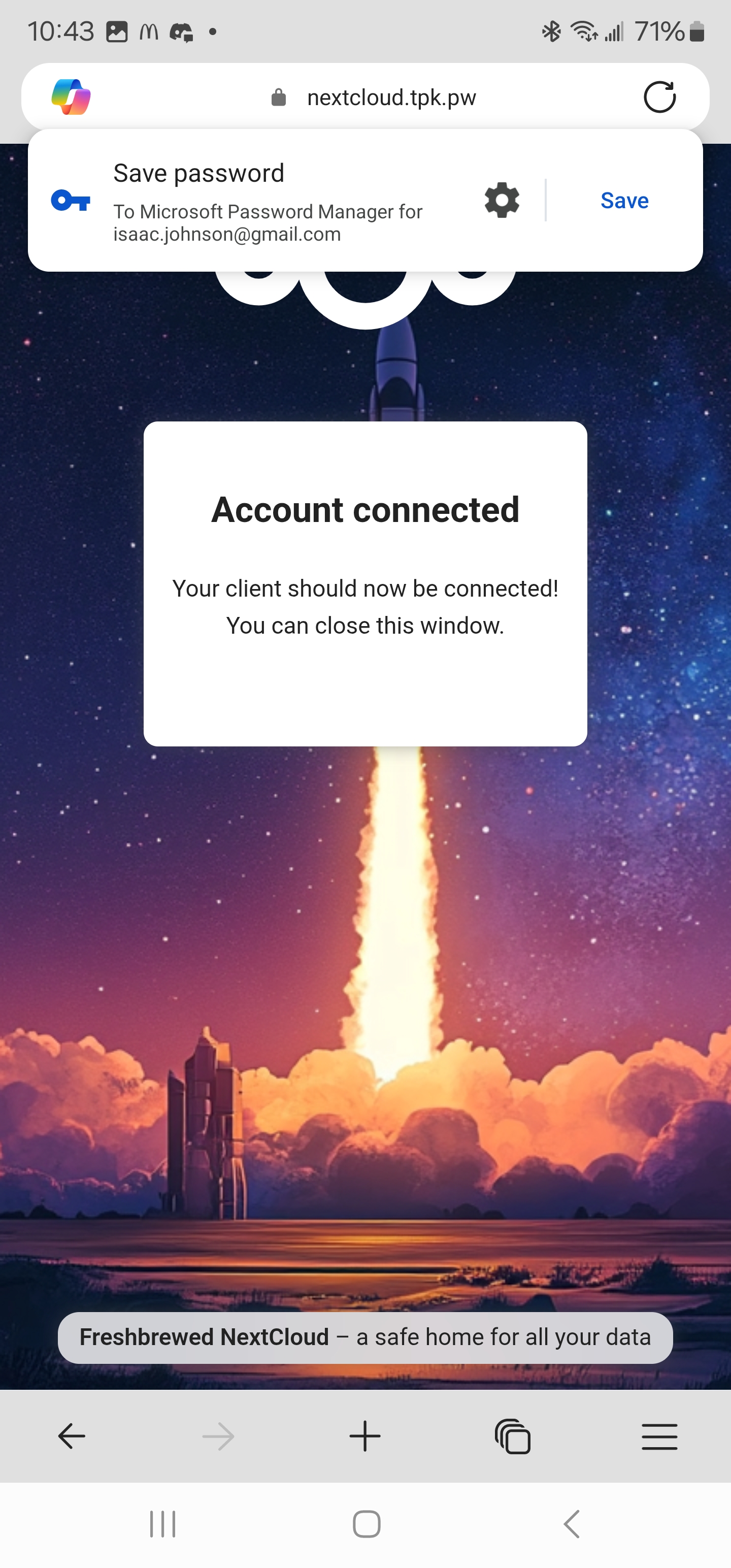

Then when I launch it and log in, it pops up a web browser to handle the login and then redirects back to the app

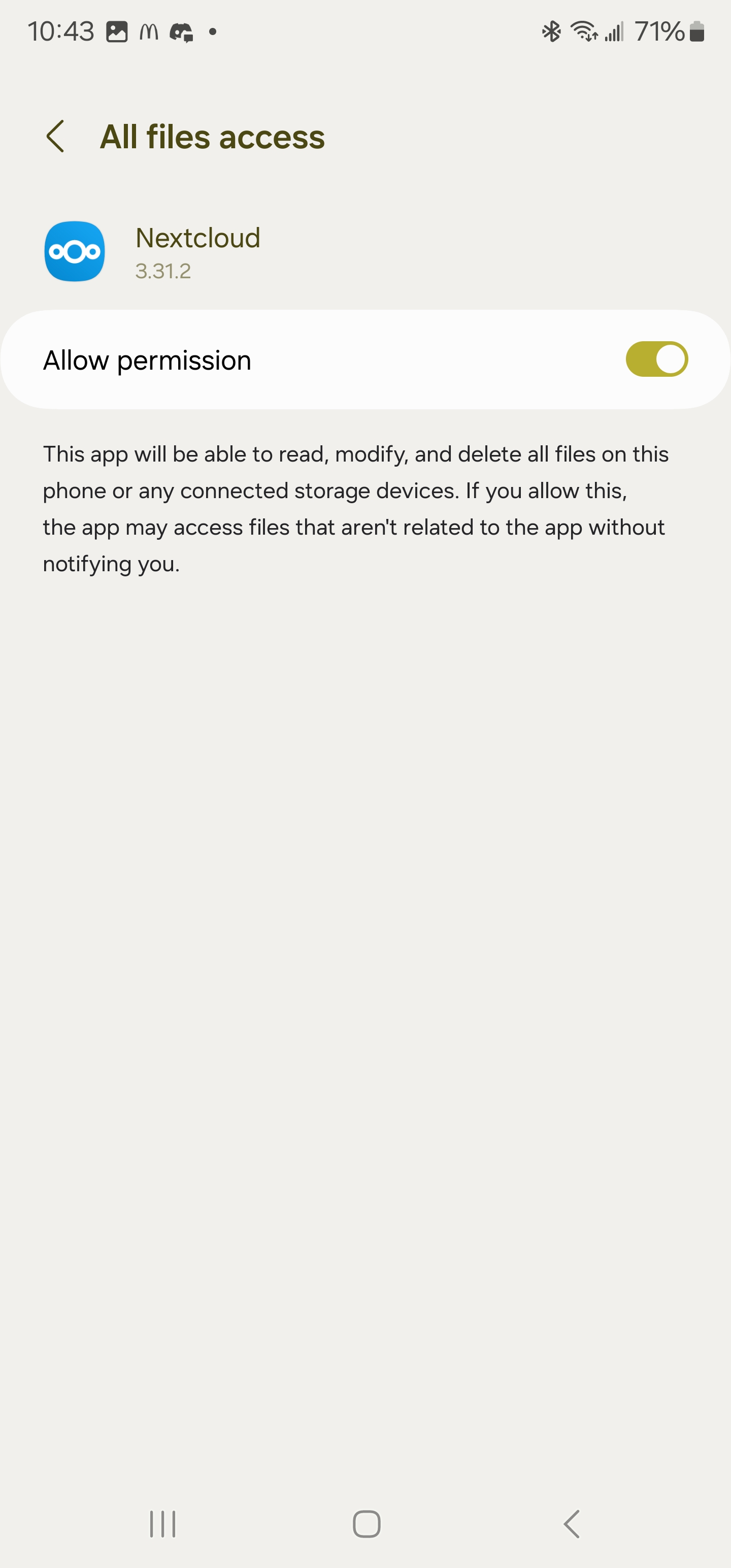

As you can imagine, it needs some permissions like notifications and storage

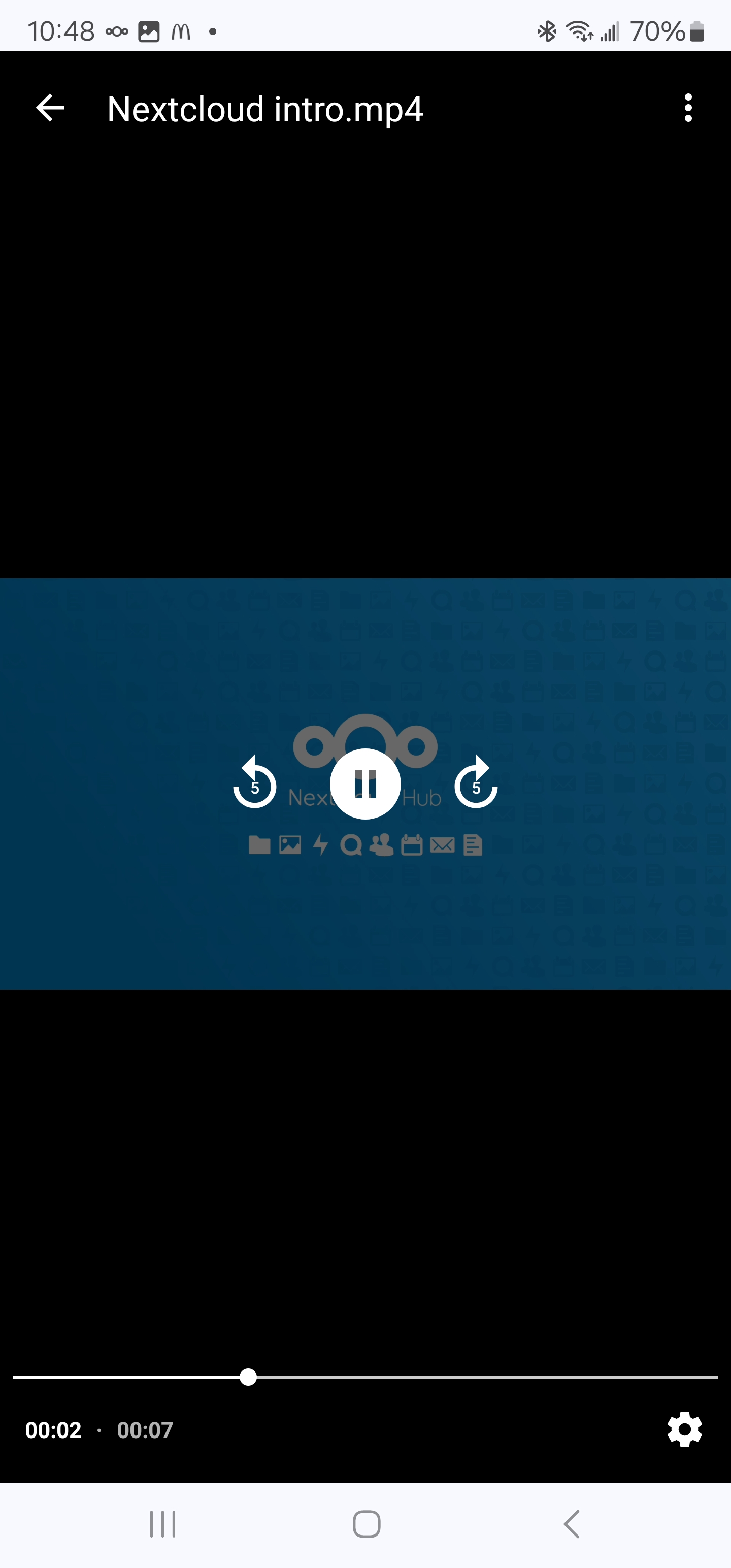

But then I can do interesting things like view and stream videos

I could see that being really handy for viewing content midflight. In my case, I often put together videos with PowerDirector on Android, which is surprisingly full featured, and then upload them. I tend to use Google Drive, but that has storage limits, and this might be a good option for getting large files off the device

One issue I could see is that the deployment is using emptyDir for storage

volumes:

- emptyDir: {}

name: nextcloud-main

While the helm chart does expose some additional volume mount settings

extraVolumeMounts: null

extraVolumes: null

I do worry that we might tip over the pod by uploading a 15GB mp4 file or something of real size.

To that end, I might stick with Immich whose helm chart has a PVC option
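Worth noting: the values dumped earlier do contain a top-level `persistence` block (`enabled: false`, `accessMode: ReadWriteOnce`, `size: 8Gi`), so the emptyDir may simply be what you get when persistence is off. Here is a sketch of switching it on, assuming that block provisions a PVC as its keys suggest (not verified in this post). The file name and the 50Gi size are placeholders.

```shell
# Write a persistence override using the keys from the chart's persistence block.
# nc.persistence.yaml and the 50Gi size are placeholders.
cat > nc.persistence.yaml <<'EOF'
persistence:
  enabled: true
  accessMode: ReadWriteOnce
  size: 50Gi
EOF

# Show what was written
cat nc.persistence.yaml
```

This could be layered in with `helm upgrade --install my-nc-release nextcloud/nextcloud --values values.yaml --values nc.persistence.yaml`. Anything already sitting in the emptyDir is removed along with the pod, so files uploaded so far would need to be copied out first.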

Summary

We took a tour of two excellent apps. The first was Airstation which is a fantastic little containerized Open-Source app for streaming an infinite playlist of music. I was actually caught in a lot of rush hour traffic this week and used my little station to pass the time.

NextCloud is an incredibly rich open-source offering. I barely scratched the surface of all it can do. The suite is big enough that they have their own con in Munich this summer. From Wikipedia, it seems the company (Nextcloud GmbH) was formed in Germany in 2016 by some former ownCloud devs. I’m not going to repeat the wiki page, but read the “History” section, as it seems a bit like a mutiny/coup took place there among the devs.

The fact that it is based in Germany (and not the US), as well as the AGPL license, makes NextCloud a very attractive option for those looking to get away from US-based companies and software. I really didn’t touch on the SaaS side, but they offer Standard/Premium/Ultimate commercial instances for 67.89€/user/year to 195€/user/year. And you can start an instant trial if you provide some contact details.

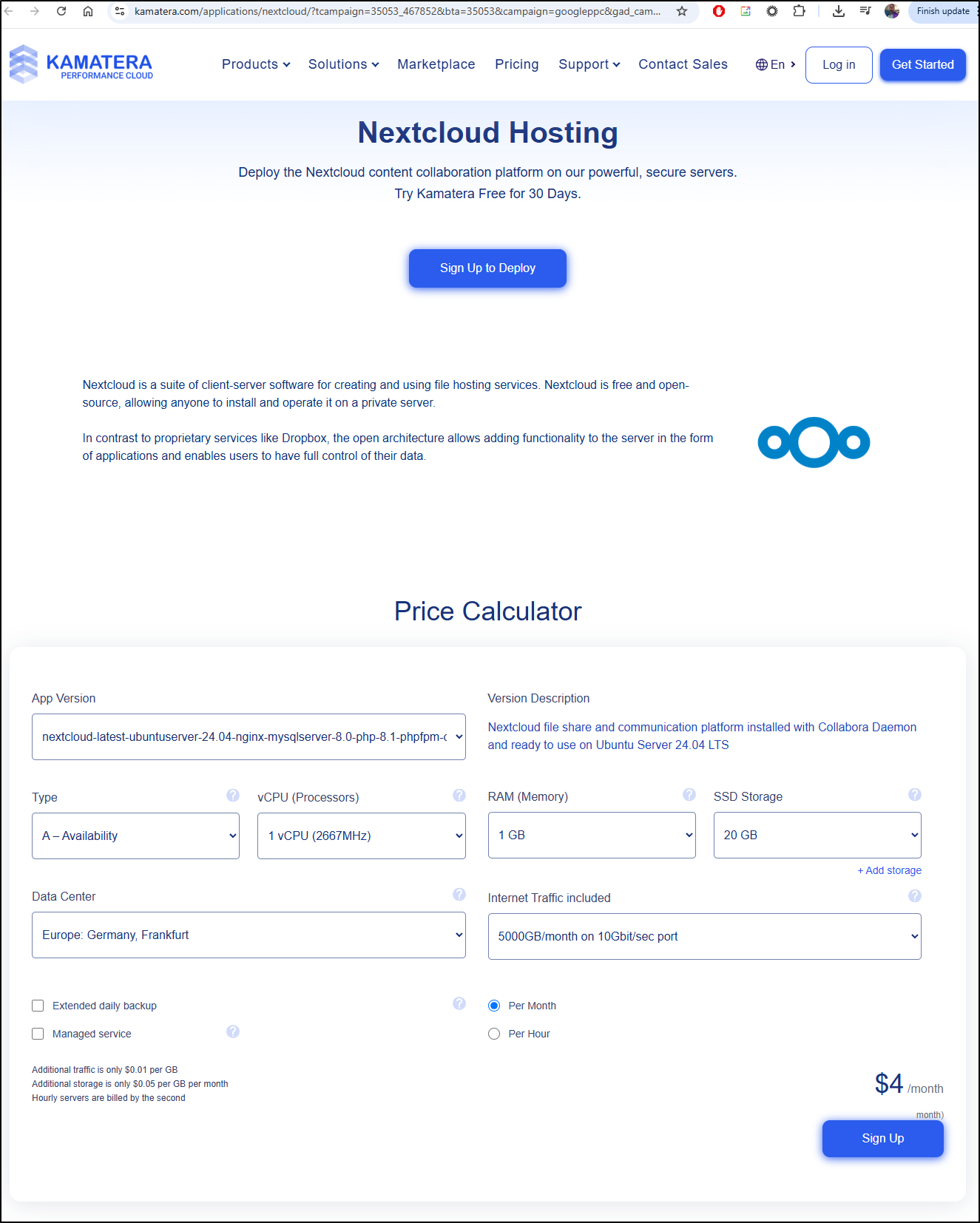

You can also use a partner provider, and there are plenty of turnkey options as well, such as Kamatera.

I bring this up just to say that the Open-Source model with AGPL can work, and I like it when I see both a “do it yourself” and a supportable commercial option available.