Published: Jul 24, 2025 by Isaac Johnson

Last week, we showed how to set up a full Grafana stack with Alloy, Tempo, Loki, Prometheus and Grafana, and then tested it with the telemetry data provided by the Gemini CLI.

Today we want to pivot from a full in-cluster observability stack to one provided by Datadog. I already use Datadog to monitor most of my stacks and alert me on issues through PagerDuty.

Let’s build out a real Gemini CLI monitor using Alloy to Datadog as well as just the Datadog agent with OTLP support alone.

Alloy config

First, we need to be careful in following docs. The Collect Datadog docs on Grafana are about having Datadog send data through to Alloy, not the other way around.

Editor's note:

I will cover what I tried first with Alloy, then how I pivoted. Later in the article I will do an “Alloy circle back” and cover some working options. I do believe the steps below would have worked cleanly had I just added an “insecure” block, e.g.

client {

endpoint = "whatever"

tls {

insecure = true

}

}

I saw nothing in the docs suggesting it ASSUMES https, and most examples show “http”, but in this first round I had lots of trouble getting Alloy, OOTB, to talk plain HTTP… and back to the article…

Continuing….

What we will do instead is send OTLP data to the Datadog Agent running in our cluster. We covered this setup a year ago

otelcol.exporter.otlphttp "datadog" {

client {

endpoint = "http://10.128.0.47:54318"

}

}

I went to my current Datadog (which was updated since then) and found the service lacked OTLP ports

$ kubectl get svc | grep -i datadog

my-datadog-release ClusterIP 10.43.240.68 <none> 8125/UDP,8126/TCP 482d

my-datadog-release-cluster-agent ClusterIP 10.43.80.95 <none> 5005/TCP 482d

my-datadog-release-cluster-agent-admission-controller ClusterIP 10.43.35.135 <none> 443/TCP 482d

Checking my helm, it looks like, by default, those OTLP services are disabled

otlp:
  logs:
    enabled: false
  receiver:
    protocols:
      grpc:
        enabled: false
        endpoint: 0.0.0.0:4317
        useHostPort: true
      http:
        enabled: false
        endpoint: 0.0.0.0:4318
        useHostPort: true

I’ll just enable those (I’m a bit worried what ‘useHostPort’ does, but we’ll find out)
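As a rough sketch of what that edit amounts to, the three `enabled` flags in the `otlp` subtree get flipped to `true`. The Python below models only the subtree shown above as a plain dict; `DEFAULT_OTLP` and `enable_otlp` are hypothetical names for illustration, not part of the Helm chart or any Datadog tooling.

```python
import copy

# The otlp subtree as it appears in the chart values above (all disabled).
DEFAULT_OTLP = {
    "logs": {"enabled": False},
    "receiver": {
        "protocols": {
            "grpc": {"enabled": False, "endpoint": "0.0.0.0:4317", "useHostPort": True},
            "http": {"enabled": False, "endpoint": "0.0.0.0:4318", "useHostPort": True},
        }
    },
}


def enable_otlp(otlp):
    """Return a copy of the otlp subtree with logs, gRPC and HTTP turned on."""
    updated = copy.deepcopy(otlp)
    updated["logs"]["enabled"] = True
    for protocol in ("grpc", "http"):
        updated["receiver"]["protocols"][protocol]["enabled"] = True
    return updated
```

Endpoints and `useHostPort` are left untouched, which matches the three-line diff that follows.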

builder@LuiGi:~/Workspaces/alloy-config$ helm get values my-datadog-release -o yaml > my-dd-values-helm.yaml

builder@LuiGi:~/Workspaces/alloy-config$ vi my-dd-values-helm.yaml

builder@LuiGi:~/Workspaces/alloy-config$ helm get values my-datadog-release -o yaml > my-dd-values-helm.yaml.bak

builder@LuiGi:~/Workspaces/alloy-config$ diff my-dd-values-helm.yaml my-dd-values-helm.yaml.bak

453c453

< enabled: true

---

> enabled: false

457c457

< enabled: true

---

> enabled: false

461c461

< enabled: true

---

> enabled: false

Now I can upgrade the Datadog Cluster Agent to add Open Telemetry support

builder@LuiGi:~/Workspaces/alloy-config$ helm list | grep my-datadog-release

my-datadog-release default 3 2024-03-20 21:30:39.174345015 -0500 CDT deployed datadog-3.58.1 7

builder@LuiGi:~/Workspaces/alloy-config$ helm upgrade my-datadog-release -f ./my-dd-values-helm.yaml datadog/datadog

Release "my-datadog-release" has been upgraded. Happy Helming!

NAME: my-datadog-release

LAST DEPLOYED: Wed Jul 16 08:17:36 2025

NAMESPACE: default

STATUS: deployed

REVISION: 4

TEST SUITE: None

NOTES:

Datadog agents are spinning up on each node in your cluster. After a few

minutes, you should see your agents starting in your event stream:

https://app.datadoghq.com/event/explorer

You disabled creation of Secret containing API key, therefore it is expected

that you create Secret named 'datadog-secret' which includes a key called 'api-key' containing the API key.

###################################################################################

#### WARNING: Cluster-Agent should be deployed in high availability mode ####

###################################################################################

The Cluster-Agent should be in high availability mode because the following features

are enabled:

* Admission Controller

To run in high availability mode, our recommendation is to update the chart

configuration with:

* set `clusterAgent.replicas` value to `2` replicas .

* set `clusterAgent.createPodDisruptionBudget` to `true`.

builder@LuiGi:~/Workspaces/alloy-config$ helm list | grep my-datadog-release

my-datadog-release default 4 2025-07-16 08:17:36.72222324 -0500 CDT deployed datadog-3.116.0 7

Now I see the ports showing up

$ kubectl get svc | grep -i datadog

my-datadog-release ClusterIP 10.43.240.68 <none> 8125/UDP,8126/TCP,4317/TCP,4318/TCP 482d

my-datadog-release-cluster-agent ClusterIP 10.43.80.95 <none> 5005/TCP 482d

my-datadog-release-cluster-agent-admission-controller ClusterIP 10.43.35.135 <none> 443/TCP
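If you would rather check this than eyeball it, a small sketch like the one below can parse the PORT(S) column and report which OTLP ports are still missing. It assumes the conventional OTLP ports (4317 for gRPC, 4318 for HTTP); the function names are made up for this example.

```python
def service_ports(ports_column):
    """Return the set of service port numbers in a kubectl PORT(S) value.

    Handles both '4317/TCP' and NodePort-style '4317:30780/TCP' entries.
    """
    return {
        int(entry.strip().split("/")[0].split(":")[0])
        for entry in ports_column.split(",")
    }


def missing_otlp_ports(ports_column):
    """Return the OTLP ports (4317, 4318) that the service does not expose."""
    return sorted({4317, 4318} - service_ports(ports_column))
```

Fed the PORT(S) value from before the upgrade it returns both ports; fed the value from after the upgrade it returns an empty list.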

Alloy Config

I’ll first attempt to use Cluster DNS (which isn’t always successful for me)

logging {

level = "info"

format = "logfmt"

}

// Send to Datadog

otelcol.exporter.otlphttp "datadog" {

client {

endpoint = "http://my-datadog-release.default.svc.cluster.local:4318"

}

}

// Collect OTLP

otelcol.receiver.otlp "httpreceiver" {

grpc {

endpoint = "0.0.0.0:4317"

}

http {

endpoint = "0.0.0.0:4318"

}

output {

metrics = [otelcol.processor.batch.default.input]

logs = [otelcol.processor.batch.default.input]

traces = [otelcol.processor.batch.default.input]

}

}

// Process and send

otelcol.processor.batch "default" {

output {

metrics = [otelcol.exporter.otlphttp.datadog.input]

logs = [otelcol.exporter.otlphttp.datadog.input]

traces = [otelcol.exporter.otlphttp.datadog.input]

}

}

discovery.kubernetes "podmetrics" {

role = "pod"

}

discovery.kubernetes "nodes" {

role = "node"

}

discovery.kubernetes "services" {

role = "service"

}

discovery.kubernetes "endpoints" {

role = "endpoints"

}

I’ll now apply and rotate pods with

kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete cm alloy-config -n grafana && kubectl create configmap -n grafana alloy-config "--from-file=config.alloy=./alloy.config" && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete po -n grafana -l app.kubernetes.io/instance=alloy && sleep 10 && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy

builder@LuiGi:~/Workspaces/alloy-config$ kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete cm alloy-config -n grafana && kubectl create configmap -n grafana alloy-config "--from-file=config.alloy=./alloy.config" && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete po -n grafana -l app.kubernetes.io/instance=alloy && sleep 10 && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy

NAME READY STATUS RESTARTS AGE

alloy-9nnxm 2/2 Running 0 19h

alloy-jjkfv 2/2 Running 0 19h

alloy-pn7kj 2/2 Running 0 19h

alloy-pwh7h 2/2 Running 0 19h

configmap "alloy-config" deleted

configmap/alloy-config created

NAME READY STATUS RESTARTS AGE

alloy-9nnxm 2/2 Running 0 19h

alloy-jjkfv 2/2 Running 0 19h

alloy-pn7kj 2/2 Running 0 19h

alloy-pwh7h 2/2 Running 0 19h

pod "alloy-9nnxm" deleted

pod "alloy-jjkfv" deleted

pod "alloy-pn7kj" deleted

pod "alloy-pwh7h" deleted

NAME READY STATUS RESTARTS AGE

alloy-9zrrq 1/2 Running 0 12s

alloy-ld7q5 1/2 Running 0 13s

alloy-q6n8v 1/2 Running 0 13s

alloy-qshtr 1/2 Running 0 12s

builder@LuiGi:~/Workspaces/alloy-config$ kubectl get po -n grafana -l app.kubernetes.io/instance=alloy

NAME READY STATUS RESTARTS AGE

alloy-9zrrq 2/2 Running 0 34s

alloy-ld7q5 2/2 Running 0 35s

alloy-q6n8v 2/2 Running 0 35s

alloy-qshtr 2/2 Running 0 34s
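Since that one-liner gets reused a few times, here is a hedged sketch of the same sequence as a small Python helper. It only wraps the kubectl subcommands already shown above; `rotate_commands` and `rotate` are hypothetical names, and the sketch drops the `sleep 10` and the extra `get po` calls for brevity.

```python
import subprocess


def rotate_commands(namespace="grafana", configmap="alloy-config",
                    config_path="./alloy.config",
                    selector="app.kubernetes.io/instance=alloy"):
    """Return the kubectl invocations, in order, as argv lists."""
    return [
        ["kubectl", "delete", "cm", configmap, "-n", namespace],
        ["kubectl", "create", "configmap", "-n", namespace, configmap,
         f"--from-file=config.alloy={config_path}"],
        ["kubectl", "delete", "po", "-n", namespace, "-l", selector],
        ["kubectl", "get", "po", "-n", namespace, "-l", selector],
    ]


def rotate(dry_run=True, **kwargs):
    """Print each command; execute it only when dry_run is False."""
    for argv in rotate_commands(**kwargs):
        print(" ".join(argv))
        if not dry_run:
            # check=True stops the sequence on the first failing command,
            # mirroring the && chaining in the shell version.
            subprocess.run(argv, check=True)
```

Calling `rotate()` with the default `dry_run=True` just prints the commands, which is a cheap way to double check the namespace and selector before deleting anything.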

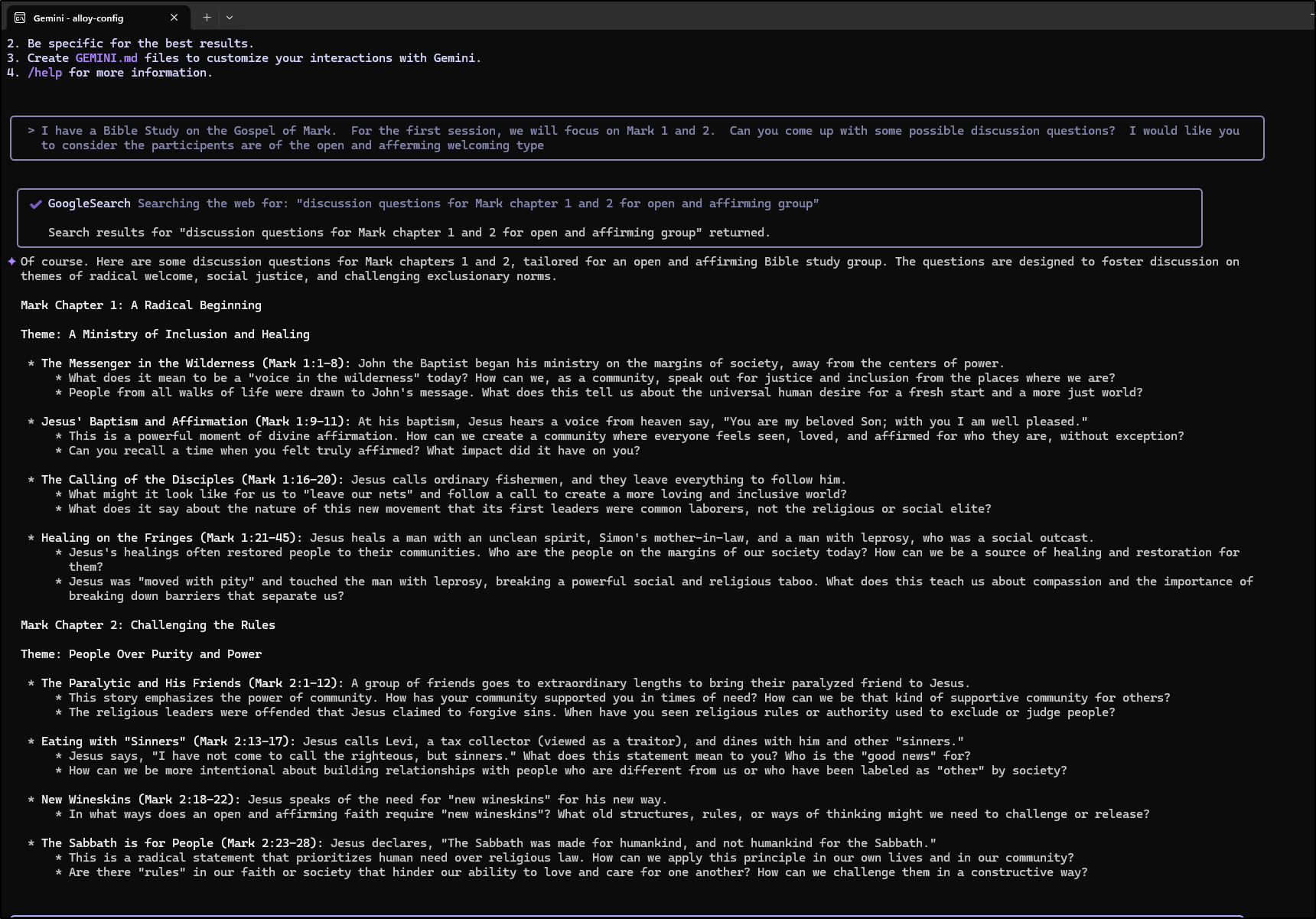

To test with Gemini, I went a different way and asked for help building out a two-month course that takes a deep dive into the Gospel of Mark.

It has a pretty good agenda, IMHO:

Here is a proposed agenda and a breakdown of weekly topics designed to be covered in about 60-90 minutes per session.

Proposed 8-Week Study: The Gospel of Mark

Theme: Jesus, the Servant King in Action

---

Weekly Topic Breakdown

* Week 1: The King Arrives

* Scripture: Mark 1-2

* Focus: The authority of Jesus is immediately established. We see His baptism, the calling of the first disciples, powerful healings, and the beginning of conflict with

religious leaders.

* Week 2: Kingdom Authority & Parables

* Scripture: Mark 3-4

* Focus: Jesus defines His true family, faces growing opposition, and begins teaching in parables to reveal the secrets of the Kingdom of God to those who will listen.

* Week 3: Power Over All

* Scripture: Mark 5-6

* Focus: A demonstration of Jesus's absolute authority over demons, disease, nature, and even death itself. We also see His rejection in His hometown of Nazareth.

* Week 4: Who Do You Say I Am?

* Scripture: Mark 7-8

* Focus: Jesus challenges man-made traditions and then asks the most important question of the Gospel: "Who do you say I am?" Peter's confession is the book's turning point.

* Week 5: The Cost of Discipleship

* Scripture: Mark 9-10

* Focus: After the Transfiguration, Jesus teaches that the path to glory is through service and sacrifice. The "first will be last," and true discipleship means following the

way of the cross.

* Week 6: Confrontation in Jerusalem

* Scripture: Mark 11-12

* Focus: Jesus enters Jerusalem as a king, cleanses the temple, and engages in a series of intense debates with the religious authorities, exposing their hypocrisy.

* Week 7: The Final Hours

* Scripture: Mark 13-14

* Focus: Jesus predicts the destruction of the temple, shares the Last Supper with His disciples, prays in Gethsemane, and is ultimately betrayed and arrested.

* Week 8: Crucifixion and Resurrection

* Scripture: Mark 15-16

* Focus: The trial, crucifixion, and burial of Jesus, culminating in the shocking and glorious news of the empty tomb. We'll discuss the victory of the resurrection and the

commission to spread the good news.

---

Proposed Weekly Session Agenda (60-90 min)

1. Welcome & Icebreaker (5-10 minutes)

* Start with a simple, welcoming question to get everyone comfortable and talking. (e.g., "What was a high point or a low point from your week?")

2. Opening Prayer (2-3 minutes)

* A brief prayer to focus the group and invite understanding.

3. Review (5 minutes)

* Briefly ask: "What was one thing that stood out to you from last week's session or reading?" This helps connect the weeks together.

4. Read the Scripture (10-15 minutes)

* Read the assigned two chapters for the week aloud. You can take turns, with each person reading a paragraph or a section.

5. Group Discussion (25-30 minutes)

* This is the core of the study. Use open-ended questions to guide the conversation. Aim for three types of questions:

* Observation: What does the text say? What's happening? Who are the main characters?

* Interpretation: What does this passage mean? Why did the people react that way? What is Jesus teaching here?

* Application: What does this mean for us today? How can we apply this truth to our own lives, work, or relationships?

6. Key Takeaway (5-10 minutes)

* Go around the group and ask everyone to share one key insight or action point they are taking away from the session.

7. Closing Prayer & Prayer Requests (10 minutes)

* Share prayer requests and close the time in prayer for one another.

This structure provides a good balance of fellowship, study, and personal application for your group. Enjoy the study

I did a follow-up to ask about creating an enticing summary (again, it nailed it)

Who Was Jesus of Nazareth? An 8-Week Journey Through the Gospel of Mark

Forget everything you think you know. Before the stained-glass windows and the theological debates, there was a raw, breathless, eyewitness account of a man who turned the world

upside down.

The Gospel of Mark is the earliest, shortest, and most action-packed story of Jesus' life. It reads less like a history book and more like a spiritual thriller, moving with

cinematic pace from one powerful encounter to the next. In this 8-week study, we will dive headfirst into this unfiltered narrative to ask the same questions the first disciples

did:

* Who is this man who commands demons, stills storms, and speaks with such authority?

* Why does He perform miracles, yet so often command people to keep them secret?

* What does it truly mean to follow Him when the cost is everything?

Join us as we strip away centuries of tradition to encounter the person of Jesus in His most immediate and challenging form. This is not just a study of an ancient text; it is an

invitation to an adventure that promises to be as relevant and transformative today as it was two thousand years ago.

Be prepared to be challenged, moved, and amazed. Your perspective will be changed.

I found it just wasn’t routing to either the cluster DNS name or the ClusterIP. Let me whip up a NodePort option for Datadog.

$ cat ./datadog.svc.nodeport.yaml

apiVersion: v1
kind: Service
metadata:
  name: my-datadog-release-ext
spec:
  ports:
  - name: dogstatsdport
    port: 8125
    protocol: UDP
    targetPort: 8125
  - name: traceport
    port: 8126
    protocol: TCP
    targetPort: 8126
  - name: otlpgrpcport
    port: 4317
    protocol: TCP
    targetPort: 4317
  - name: otlphttpport
    port: 4318
    protocol: TCP
    targetPort: 4318
  selector:
    app: my-datadog-release
  type: NodePort

and apply

builder@LuiGi:~/Workspaces/alloy-config$ kubectl apply -f ./datadog.svc.nodeport.yaml

service/my-datadog-release-ext created

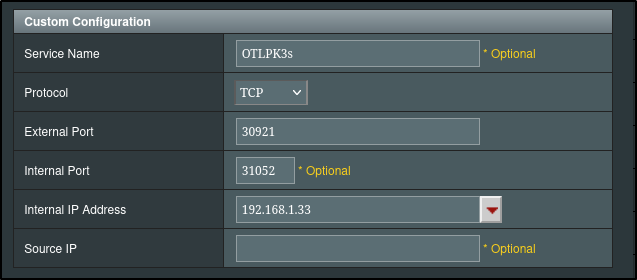

builder@LuiGi:~/Workspaces/alloy-config$ kubectl get svc my-datadog-release-ext

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-datadog-release-ext NodePort 10.43.202.236 <none> 8125:30581/UDP,8126:31848/TCP,4317:30780/TCP,4318:31052/TCP 10s
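It is easy to mix up which NodePort belongs to which OTLP protocol, so here is a minimal sketch that pulls the mapping out of that PORT(S) column. It assumes the conventional pairing of 4317 with gRPC and 4318 with HTTP (which matches the port names in the Service above); `otlp_node_ports` is a hypothetical helper.

```python
# Conventional OTLP ports; these match otlpgrpcport/otlphttpport in the Service.
OTLP_PROTOCOLS = {4317: "grpc", 4318: "http"}


def otlp_node_ports(ports_column):
    """Map OTLP protocol name -> NodePort from a value like '4317:30780/TCP'."""
    mapping = {}
    for entry in ports_column.split(","):
        ports, _protocol = entry.strip().split("/")
        port, _, node_port = ports.partition(":")
        if int(port) in OTLP_PROTOCOLS and node_port:
            mapping[OTLP_PROTOCOLS[int(port)]] = int(node_port)
    return mapping
```

For the listing above this gives 30780 for gRPC and 31052 for HTTP, which matters when choosing between a gRPC exporter and an HTTP exporter.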

Again, let’s keep working on the Bible Study

But even with NodePort it was not sending

otelcol.exporter.otlphttp "datadog" {

client {

endpoint = "http://192.168.1.33:31052"

}

}

I’m going to take Alloy out of the mix and switch my externalized OTLP endpoint from the 30921 Alloy NodePort to the Datadog 31052 one I created. However, I’ll leave the external port the same so I need not modify my Gemini CLI settings.json everywhere.

However it seems this is not working

I tried quite a few options but kept getting errors around TLS:

✖ {"stack":"Error: PeriodicExportingMetricReader: metrics export failed (error Error: 14 UNAVAILABLE: No connection established. Last error: Error: │

│ 800CE990E2720000:error:0A00010B:SSL routines:ssl3_get_record:wrong version number:../deps/openssl/openssl/ssl/record/ssl3_record.c:354:\n)\n at doExport │

│ (/home/builder/.nvm/versions/node/v21.7.3/lib/node_modules/@google/gemini-cli/node_modules/@opentelemetry/sdk-metrics/build/src/export/PeriodicExportingMetricRead │

│ er.js:76:23)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async PeriodicExportingMetricReader._doRun │

│ (/home/builder/.nvm/versions/node/v21.7.3/lib/node_modules/@google/gemini-cli/node_modules/@opentelemetry/sdk-metrics/build/src/export/PeriodicExportingMetricRead │

│ er.js:84:13)\n at async PeriodicExportingMetricReader._runOnce │

│ (/home/builder/.nvm/versions/node/v21.7.3/lib/node_modules/@google/gemini-cli/node_modules/@opentelemetry/sdk-metrics/build/src/export/PeriodicExportingMetricRead │

│ er.js:55:13)","message":"PeriodicExportingMetricReader: metrics export failed (error Error: 14 UNAVAILABLE: No connection established. Last error: Error: │

│ 800CE990E2720000:error:0A00010B:SSL routines:ssl3_get_record:wrong version number:../deps/openssl/openssl/ssl/record/ssl3_record.c:354:\n)","name":"Error"} (x9)

Perhaps I need to just stop for a moment and test the endpoints directly.

Testing with Manual Spans

I created a test JSON file for a trace

$ cat test_span.json

{

"resourceSpans": [

{

"resource": {

"attributes": [

{

"key": "service.name",

"value": {

"stringValue": "test-with-curl"

}

}

]

},

"scopeSpans": [

{

"scope": {

"name": "manual-test"

},

"spans": [

{

"traceId": "71699b6fe85982c7c8995ea3d9c95df2",

"spanId": "3c191d03fa8be065",

"name": "spanitron",

"kind": 2,

"droppedAttributesCount": 0,

"events": [],

"droppedEventsCount": 0,

"status": {

"code": 1

}

}

]

}

]

}

]

}
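If you want a fresh trace each time rather than reusing the same IDs, the payload can be generated. This is a sketch that mirrors the JSON above field for field; only the random IDs are new, and `make_test_span` is a hypothetical helper name.

```python
import json
import secrets


def make_test_span(service_name="test-with-curl", span_name="spanitron"):
    """Build a one-span OTLP/JSON trace payload with fresh random IDs."""
    return {
        "resourceSpans": [{
            "resource": {"attributes": [
                {"key": "service.name", "value": {"stringValue": service_name}}
            ]},
            "scopeSpans": [{
                "scope": {"name": "manual-test"},
                "spans": [{
                    "traceId": secrets.token_hex(16),  # 16 bytes -> 32 hex chars
                    "spanId": secrets.token_hex(8),    # 8 bytes -> 16 hex chars
                    "name": span_name,
                    "kind": 2,                         # same value as the file above
                    "droppedAttributesCount": 0,
                    "events": [],
                    "droppedEventsCount": 0,
                    "status": {"code": 1},             # same value as the file above
                }],
            }],
        }]
    }


if __name__ == "__main__":
    # Write the payload where the curl command expects it.
    with open("test_span.json", "w") as handle:
        json.dump(make_test_span(), handle, indent=2)
```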

Then tested a post

builder@LuiGi:~/Workspaces/alloy-config$ curl -i http://75.73.224.240:31052/v1/traces -X POST -H "Content-Type: application/json" -d @test_span.json

HTTP/1.1 200 OK

Content-Type: application/json

Date: Wed, 16 Jul 2025 14:37:15 GMT

Content-Length: 21

{"partialSuccess":{}}

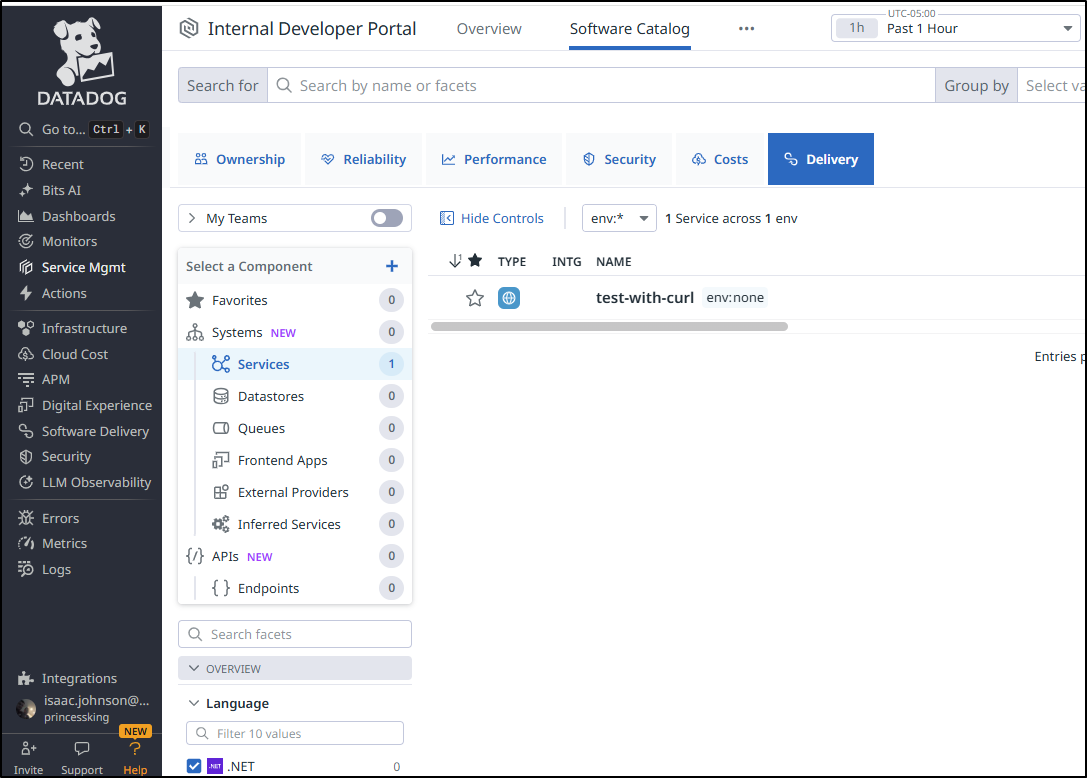

I can definitely see it in my Datadog dashboard - so the Agent in the Cluster is working
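The same POST can be done without curl. Below is a standard-library sketch that builds the request to the `/v1/traces` path used above and keeps the sending step separate, so the request can be inspected first. The function names are hypothetical, and the base URL you pass in is whatever HTTP NodePort address applies to your cluster.

```python
import json
import urllib.request


def build_trace_request(base_url, payload):
    """Build (but do not send) a JSON POST to the OTLP/HTTP traces path."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/traces",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=5):
    """Send the request and return (status, body). Needs network access."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")
```

Usage would look like `send(build_trace_request("http://<node>:31052", payload))`, where `payload` is the parsed contents of `test_span.json`.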

Logs

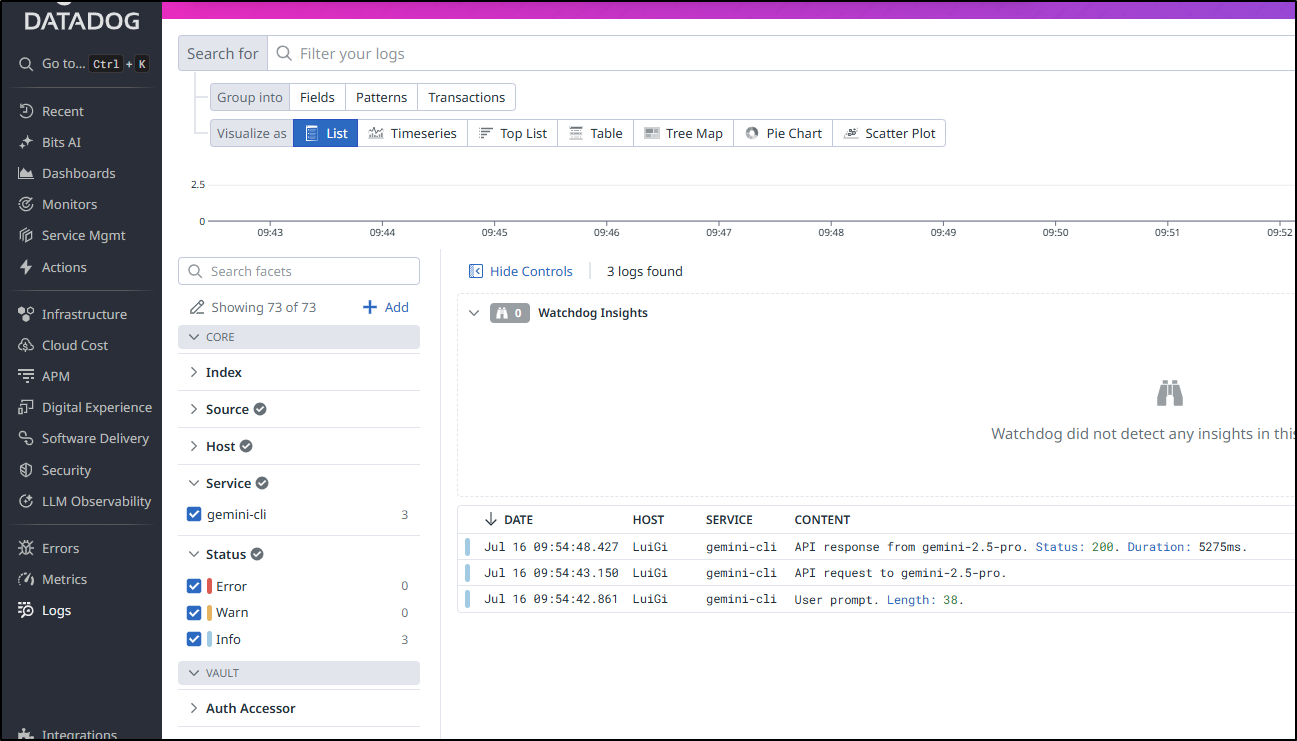

While trying to debug services, I did find that logs were now forwarding.

This was using the NodePort of the Datadog agent (not alloy)

$ cat ~/.gemini/settings.json

{

"theme": "Shades Of Purple",

"selectedAuthType": "oauth-personal",

"telemetry": {

"enabled": true,

"target": "local",

"otlpEndpoint": "http://75.73.224.240:30780",

"logPrompts": true

}

}
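For anyone juggling several machines, a small sketch like this can rewrite just the telemetry block of a settings file and leave everything else alone. It only uses the keys visible in the file above; `set_otlp_endpoint` is a hypothetical helper, not a Gemini CLI feature.

```python
import json


def set_otlp_endpoint(settings, endpoint):
    """Return a copy of the settings with telemetry pointed at endpoint."""
    updated = dict(settings)
    telemetry = dict(updated.get("telemetry", {}))
    telemetry.update({"enabled": True, "target": "local", "otlpEndpoint": endpoint})
    updated["telemetry"] = telemetry
    return updated


def rewrite_settings_file(path, endpoint):
    """Load a settings.json, repoint telemetry, and write it back."""
    with open(path) as handle:
        settings = json.load(handle)
    with open(path, "w") as handle:
        json.dump(set_otlp_endpoint(settings, endpoint), handle, indent=2)
```

Note that the endpoint in the file above uses port 30780, which the earlier NodePort listing maps to 4317.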

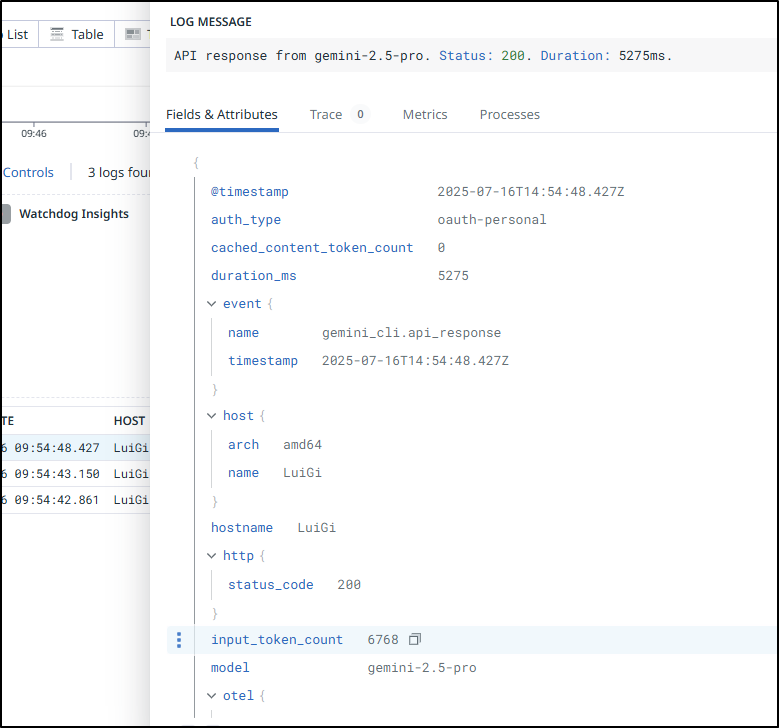

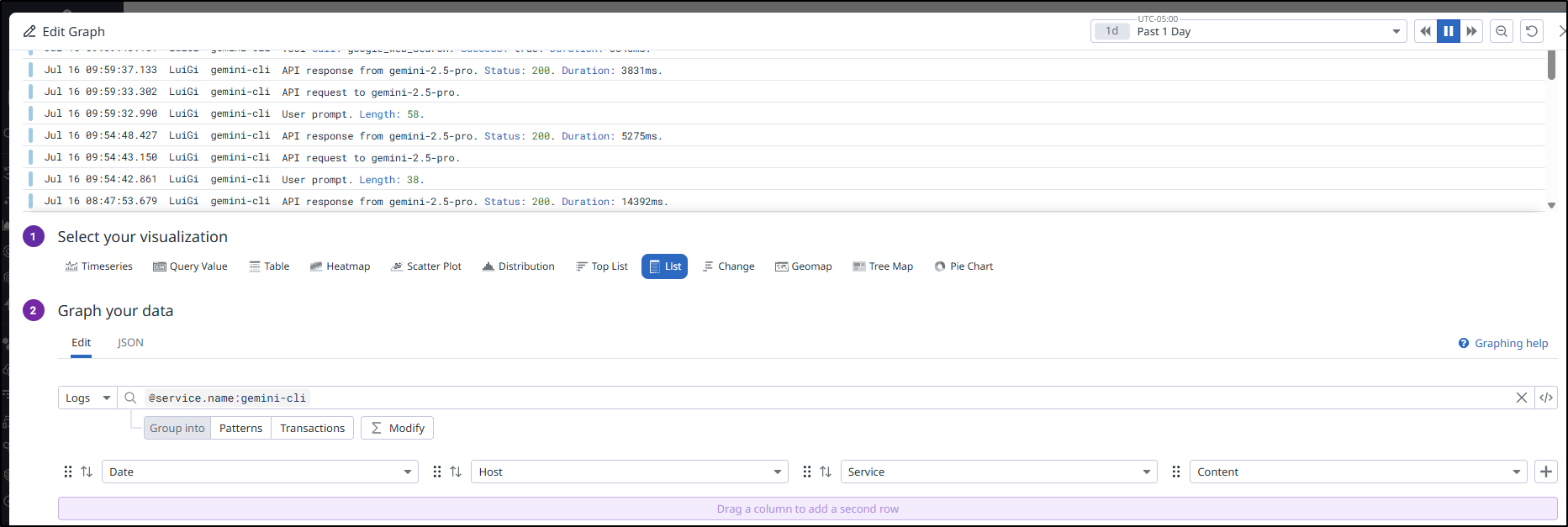

The logs are actually fairly enriched.

For instance, I can see model and token usage for the query

Metrics

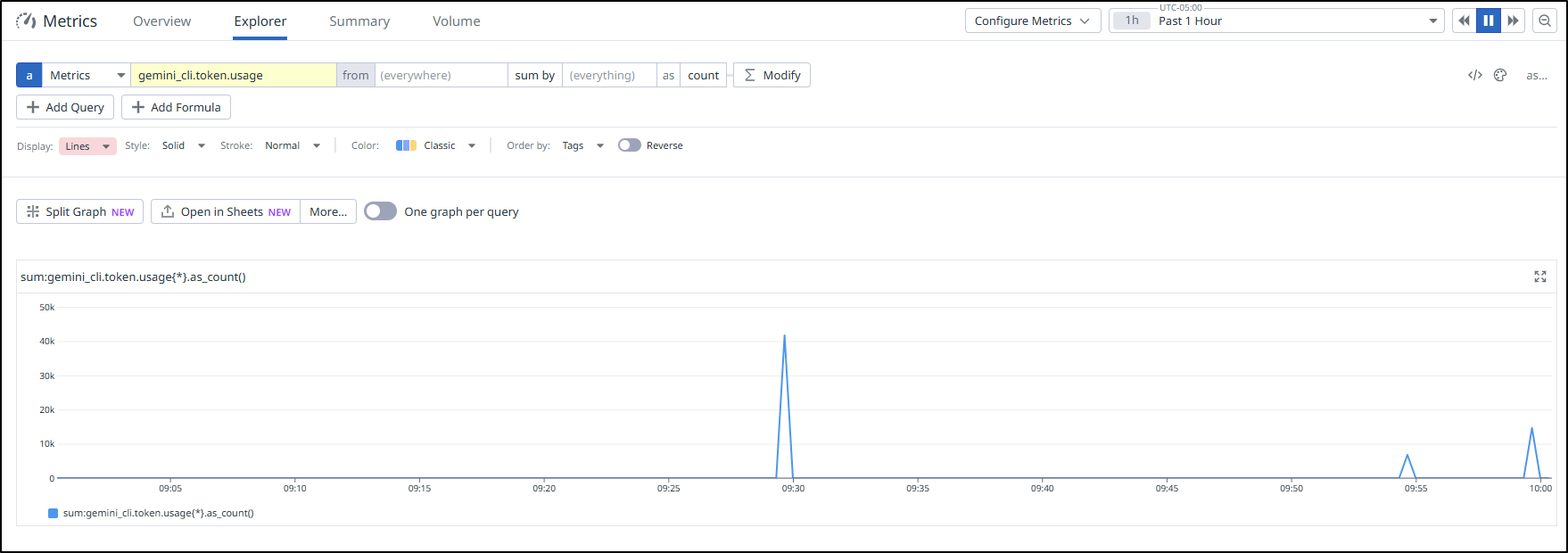

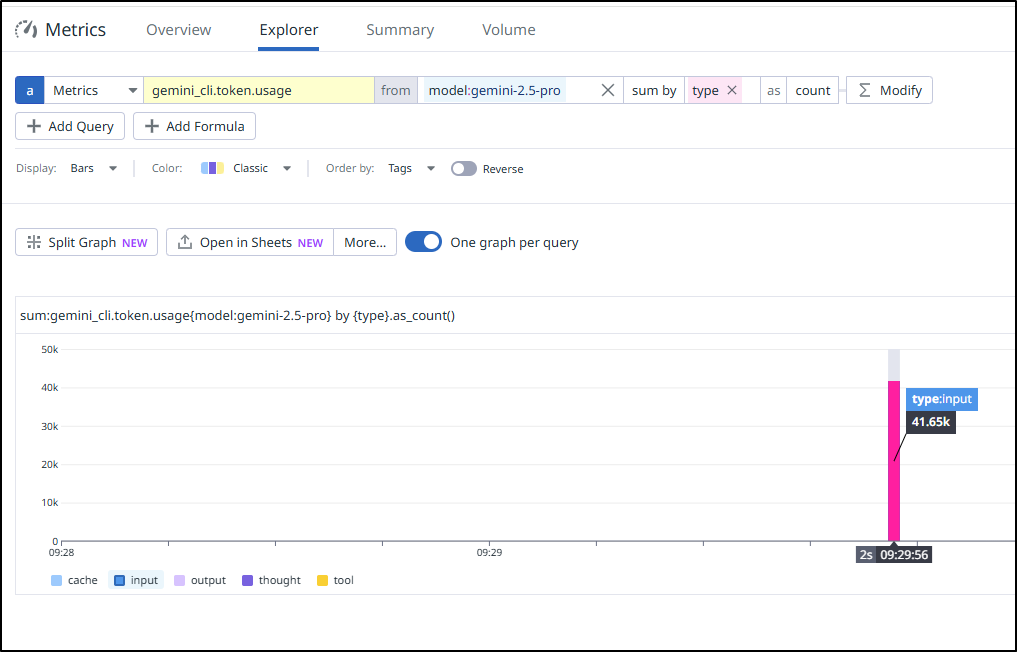

While I was so hung up on “Services” showing up, I neglected to check “Metrics”.

Indeed, the OTLP forwarder and the DD Agent itself were sending the Gemini stats while I was testing

We can easily zoom in and sum by type

Dashboards

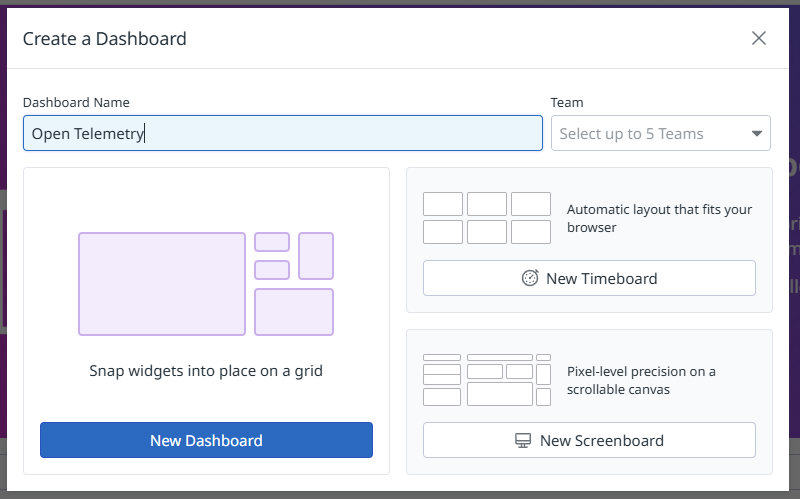

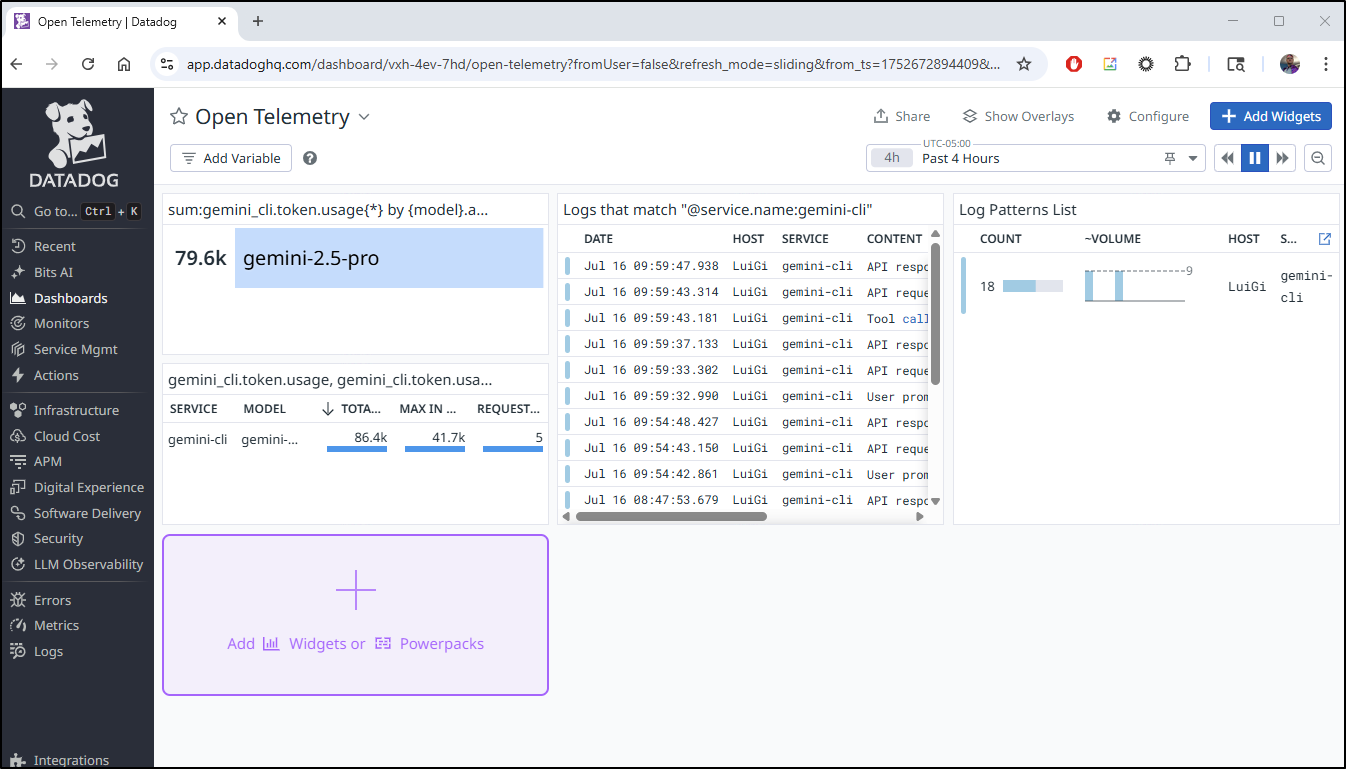

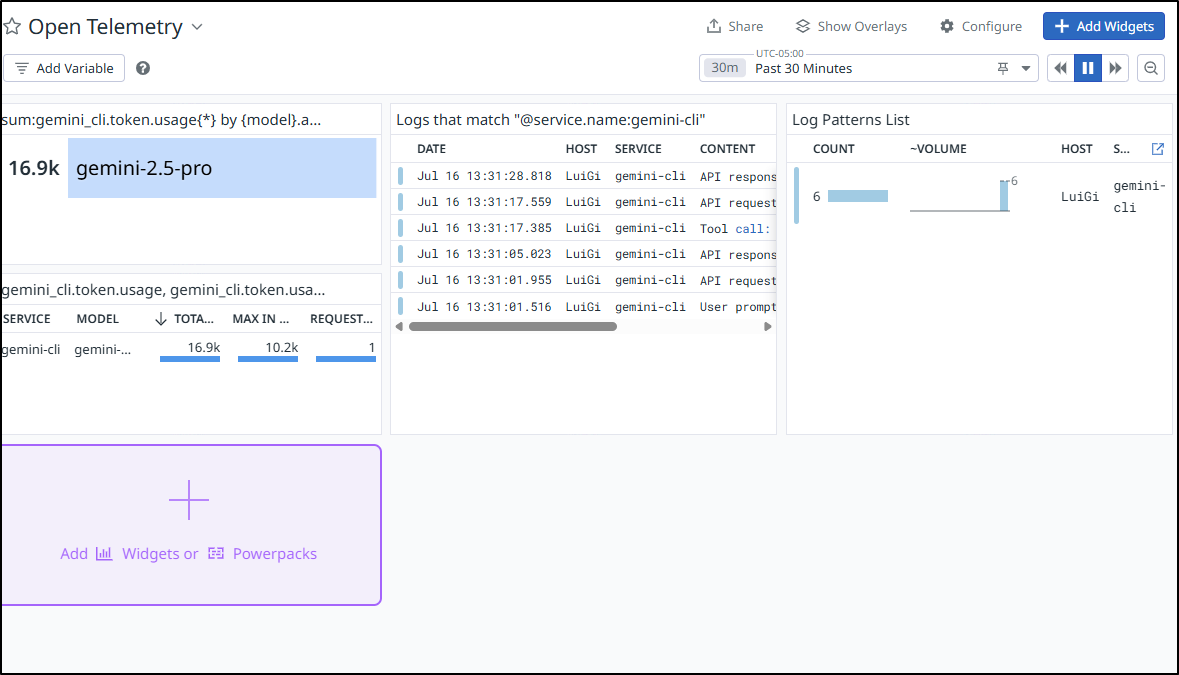

Let’s create a new Open Telemetry dashboard

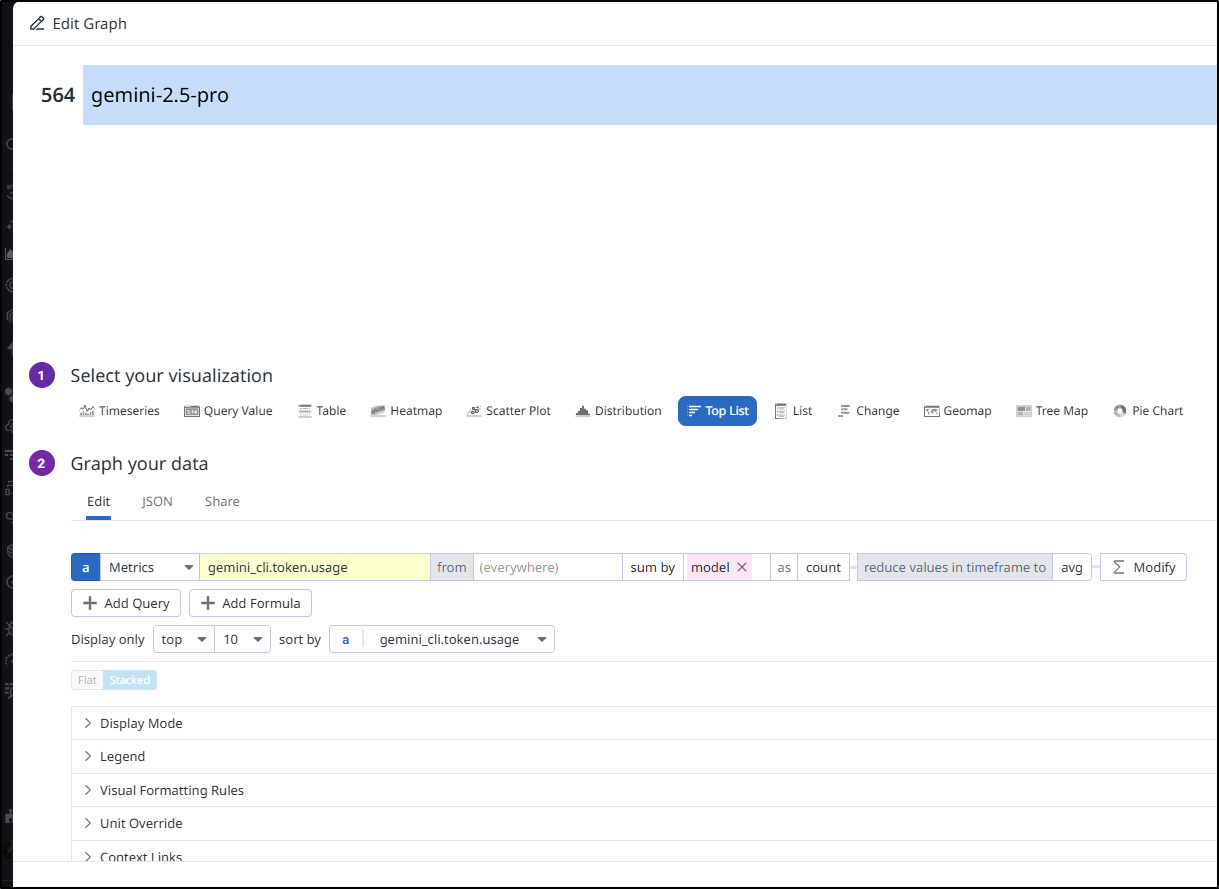

I’m going to create a new Top values graph and use a query to sum by model:

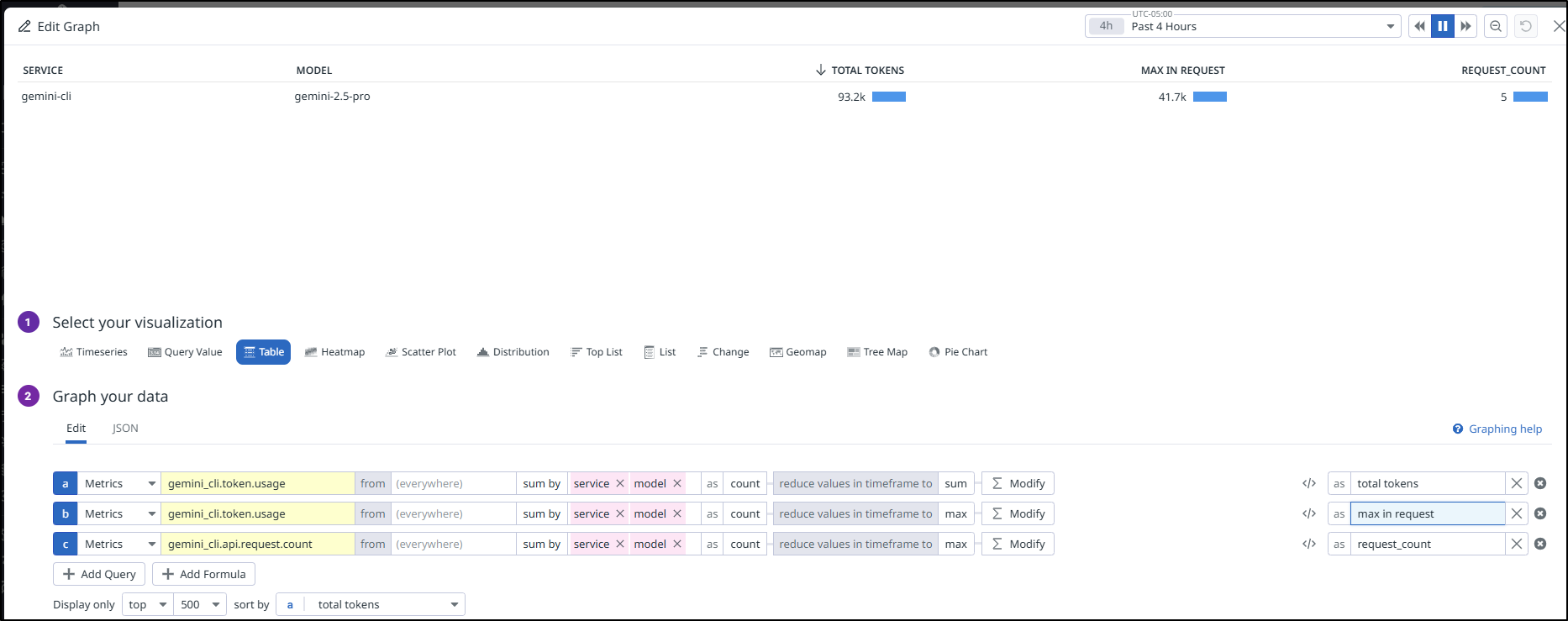

I can have a graph which is more of a table that really bubbles up the information I care most about - the totals, the max and the requests in the time period

I can also show the logs from Gemini in this period

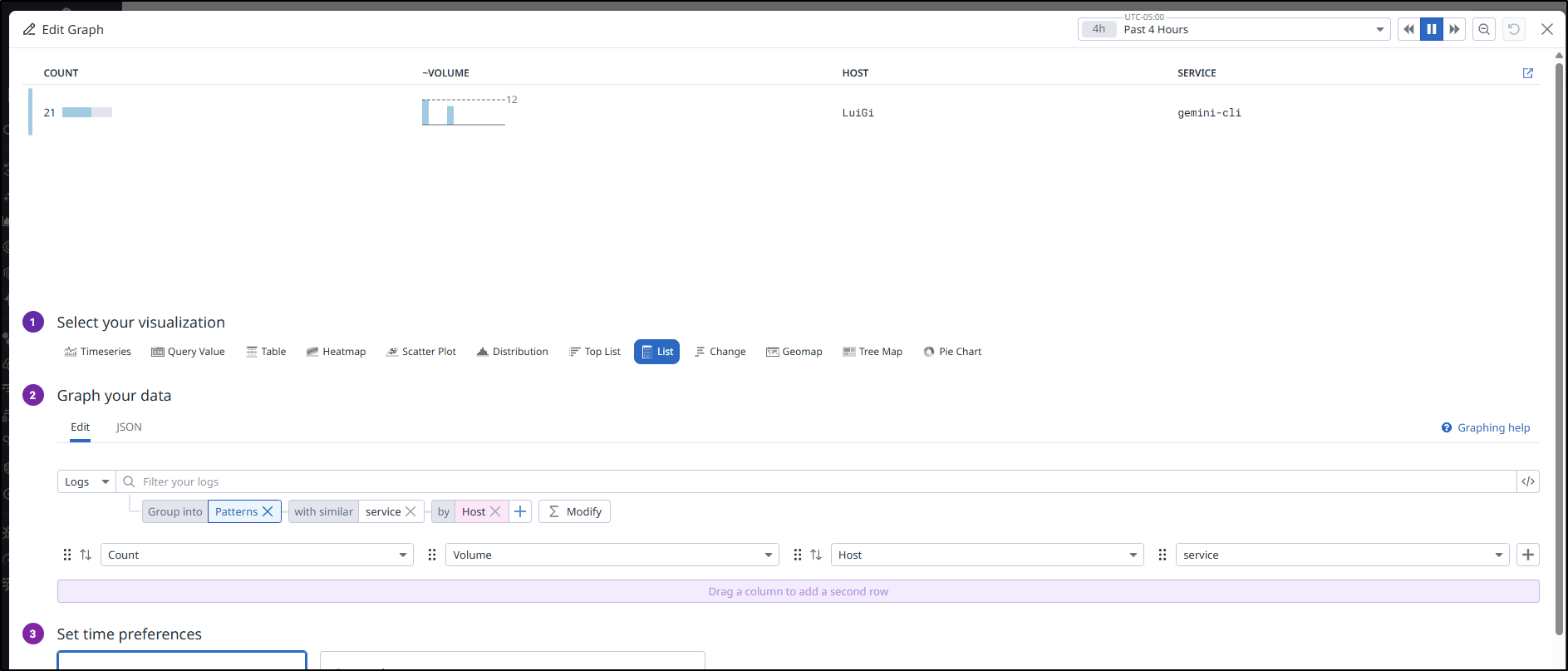

Another interesting list is to group queries by service and host - which computer is using Gemini in my account?

I now have a pretty actionable dashboard

Alloy circle back

Let’s come back to Alloy now that I definitely know the collector is working on port 30780 as a NodePort

I’ll set the alloy config

$ cat ./alloy.config

logging {

level = "info"

format = "logfmt"

}

// Send to Datadog

otelcol.exporter.otlphttp "datadog" {

client {

endpoint = "http://192.168.1.33:30780"

}

}

// Collect OTLP

otelcol.receiver.otlp "httpreceiver" {

grpc {

endpoint = "0.0.0.0:4317"

}

http {

endpoint = "0.0.0.0:4318"

}

output {

metrics = [otelcol.processor.batch.default.input]

logs = [otelcol.processor.batch.default.input]

traces = [otelcol.processor.batch.default.input]

}

}

// Process and send

otelcol.processor.batch "default" {

output {

metrics = [otelcol.exporter.otlphttp.datadog.input]

logs = [otelcol.exporter.otlphttp.datadog.input]

traces = [otelcol.exporter.otlphttp.datadog.input]

}

}

discovery.kubernetes "podmetrics" {

role = "pod"

}

discovery.kubernetes "nodes" {

role = "node"

}

discovery.kubernetes "services" {

role = "service"

}

discovery.kubernetes "endpoints" {

role = "endpoints"

}

then apply it

builder@LuiGi:~/Workspaces/alloy-config$ !2111

kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete cm alloy-config -n grafana && kubectl create configmap -n grafana alloy-config "--from-file=config.alloy=./alloy.config" && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete po -n grafana -l app.kubernetes.io/instance=alloy && sleep 10 && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy

NAME READY STATUS RESTARTS AGE

alloy-pb8dw 2/2 Running 0 3h55m

alloy-pfs5q 2/2 Running 0 3h55m

alloy-tg66c 2/2 Running 0 3h55m

alloy-xdkrv 2/2 Running 0 3h55m

configmap "alloy-config" deleted

configmap/alloy-config created

NAME READY STATUS RESTARTS AGE

alloy-pb8dw 2/2 Running 0 3h55m

alloy-pfs5q 2/2 Running 0 3h55m

alloy-tg66c 2/2 Running 0 3h55m

alloy-xdkrv 2/2 Running 0 3h55m

pod "alloy-pb8dw" deleted

pod "alloy-pfs5q" deleted

pod "alloy-tg66c" deleted

pod "alloy-xdkrv" deleted

NAME READY STATUS RESTARTS AGE

alloy-4xhq7 1/2 Running 0 12s

alloy-k7kzn 2/2 Running 0 12s

alloy-m7lsb 1/2 Running 0 11s

alloy-x225b 2/2 Running 0 13s

However, Alloy just complained about malformed HTTP responses from the Datadog agent, regardless of which IP I used

ts=2025-07-16T17:55:26.124327875Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlphttp.datadog error="failed to make an HTTP request: Post \"http://192.168.1.34:30780/v1/metrics\": net/http: HTTP/1.x transport connection broken: malformed HTTP response \"\\x00\\x00\\x06\\x04\\x00\\x00\\x00\\x00\\x00\\x00\\x05\\x00\\x00@\\x00\"" interval=35.892464973s

ts=2025-07-16T17:55:27.655630317Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlphttp.datadog error="failed to make an HTTP request: Post \"http://192.168.1.34:30780/v1/metrics\": net/http: HTTP/1.x transport connection broken: malformed HTTP response \"\\x00\\x00\\x06\\x04\\x00\\x00\\x00\\x00\\x00\\x00\\x05\\x00\\x00@\\x00\"" interval=23.77060577s

ts=2025-07-16T17:55:31.435903445Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlphttp.datadog error="failed to make an HTTP request: Post \"http://192.168.1.34:30780/v1/metrics\": net/http: HTTP/1.x transport connection broken: malformed HTTP response \"\\x00\\x00\\x06\\x04\\x00\\x00\\x00\\x00\\x00\\x00\\x05\\x00\\x00@\\x00\"" interval=41.336835712s

ts=2025-07-16T17:55:35.026326673Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlphttp.datadog error="failed to make an HTTP request: Post \"http://192.168.1.34:30780/v1/logs\": net/http: HTTP/1.x transport connection broken: malformed HTTP response \"\\x00\\x00\\x06\\x04\\x00\\x00\\x00\\x00\\x00\\x00\\x05\\x00\\x00@\\x00\"" interval=27.828222935s

ts=2025-07-16T17:55:42.448470171Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlphttp.datadog error="failed to make an HTTP request: Post \"http://192.168.1.34:30780/v1/logs\": net/http: HTTP/1.x transport connection broken: malformed HTTP response \"\\x00\\x00\\x06\\x04\\x00\\x00\\x00\\x00\\x00\\x00\\x05\\x00\\x00@\\x00\"" interval=38.358281107s

ts=2025-07-16T17:55:44.085226055Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlphttp.datadog error="failed to make an HTTP request: Post \"http://192.168.1.34:30780/v1/metrics\": net/http: HTTP/1.x transport connection broken: malformed HTTP response \"\\x00\\x00\\x06\\x04\\x00\\x00\\x00\\x00\\x00\\x00\\x05\\x00\\x00@\\x00\"" interval=31.576356261s
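One possible reading of those escaped bytes: they look like the first frame an HTTP/2 server sends, which is what a gRPC listener would answer with. The sketch below decodes them using the 9-byte HTTP/2 frame header layout (3-byte length, 1-byte type, 1-byte flags, 4-byte stream ID). Treat the interpretation as a hypothesis rather than something confirmed in the logs; the earlier NodePort listing does show 4317:30780 and 4318:31052, so port 30780 would be the gRPC side.

```python
import struct

# Frame type codes from the HTTP/2 specification.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x3: "RST_STREAM", 0x4: "SETTINGS",
    0x6: "PING", 0x7: "GOAWAY", 0x8: "WINDOW_UPDATE",
}

# The bytes quoted in the Alloy error message above.
RESPONSE_PREFIX = b"\x00\x00\x06\x04\x00\x00\x00\x00\x00\x00\x05\x00\x00@\x00"


def decode_http2_frame_header(data):
    """Decode the 9-byte HTTP/2 frame header at the start of data."""
    if len(data) < 9:
        raise ValueError("need at least 9 bytes for a frame header")
    frame_type = data[3]
    return {
        "length": int.from_bytes(data[0:3], "big"),
        "type": FRAME_TYPES.get(frame_type, hex(frame_type)),
        "flags": data[4],
        # The top bit of the stream ID field is reserved, so mask it off.
        "stream_id": int.from_bytes(data[5:9], "big") & 0x7FFFFFFF,
    }


def decode_setting(data):
    """Decode one 6-byte SETTINGS entry: (identifier, value)."""
    return struct.unpack(">HI", data[:6])
```

Decoding `RESPONSE_PREFIX` yields a SETTINGS frame of length 6 on stream 0, and the payload decodes to setting 5 (maximum frame size) with value 16384. An HTTP/1.x client has no way to parse that, hence the "malformed HTTP response".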

Using gRPC showed

ts=2025-07-16T18:24:53.349133681Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlp.datadog error="rpc error: code = Unavailable desc = connection error: desc = \"transport: authentication handshake failed: tls: first record does not look like a TLS handshake\"" interval=34.559877936s

ts=2025-07-16T18:24:57.087850048Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlp.datadog error="rpc error: code = Unavailable desc = connection error: desc = \"transport: authentication handshake failed: tls: first record does not look like a TLS handshake\"" interval=20.112961453s

ts=2025-07-16T18:24:58.347788832Z level=info msg="Exporting failed. Will retry the request after interval." component_path=/ component_id=otelcol.exporter.otlp.datadog error="rpc error: code = Unavailable desc = connection error: desc = \"transport: authentication handshake failed: tls: first record does not look like a TLS handshake\"" interval=37.920345575s
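By this point there are three different error strings in play, and they point at different mismatches. The sketch below is a simple lookup from message fragment to a likely cause. The hints are general protocol knowledge, not anything emitted by Alloy, the Datadog Agent or the Gemini CLI, and `diagnose` is a hypothetical helper.

```python
# (fragment, likely cause) pairs; the first matching fragment wins.
HINTS = [
    ("wrong version number",
     "client attempted TLS but the server answered in plaintext"),
    ("first record does not look like a TLS handshake",
     "client attempted TLS but the server answered in plaintext"),
    ("malformed HTTP response",
     "HTTP/1.x client received non-HTTP/1.x bytes, possibly from an HTTP/2 (gRPC) port"),
]


def diagnose(message):
    """Return a likely cause for a known error fragment, else 'unrecognized'."""
    for fragment, hint in HINTS:
        if fragment in message:
            return hint
    return "unrecognized"
```

The first two hints suggest turning TLS off on the client (or on for the server); the third suggests checking that the exporter type matches the port it is pointed at.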

However this triggered an idea - could Alloy be forcing TLS?

I disabled TLS for gRPC and pushed the config

$ cat alloy.config

logging {

level = "info"

format = "logfmt"

}

// Send to Datadog

otelcol.exporter.otlp "datadog" {

client {

endpoint = "192.168.1.33:30780"

tls {

insecure = true

}

}

}

// Collect OTLP

otelcol.receiver.otlp "httpreceiver" {

grpc {

endpoint = "0.0.0.0:4317"

}

http {

endpoint = "0.0.0.0:4318"

}

output {

metrics = [otelcol.processor.batch.default.input]

logs = [otelcol.processor.batch.default.input]

traces = [otelcol.processor.batch.default.input]

}

}

// Process and send

otelcol.processor.batch "default" {

output {

metrics = [otelcol.exporter.otlp.datadog.input]

logs = [otelcol.exporter.otlp.datadog.input]

traces = [otelcol.exporter.otlp.datadog.input]

}

}

discovery.kubernetes "podmetrics" {

role = "pod"

}

discovery.kubernetes "nodes" {

role = "node"

}

discovery.kubernetes "services" {

role = "service"

}

discovery.kubernetes "endpoints" {

role = "endpoints"

}

$ kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete cm alloy-config -n grafana && kubectl create configmap -n grafana alloy-config "--from-file=config.alloy=./alloy.config" && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy && kubectl delete po -n grafana -l app.kubernetes.io/instance=alloy && sleep 10 && kubectl get po -n grafana -l app.kubernetes.io/instance=alloy

NAME READY STATUS RESTARTS AGE

alloy-66p6m 2/2 Running 0 12m

alloy-9pnt2 2/2 Running 0 12m

alloy-m278t 2/2 Running 0 11m

alloy-smlcp 2/2 Running 0 12m

configmap "alloy-config" deleted

configmap/alloy-config created

NAME READY STATUS RESTARTS AGE

alloy-66p6m 2/2 Running 0 12m

alloy-9pnt2 2/2 Running 0 12m

alloy-m278t 2/2 Running 0 12m

alloy-smlcp 2/2 Running 0 12m

pod "alloy-66p6m" deleted

pod "alloy-9pnt2" deleted

pod "alloy-m278t" deleted

pod "alloy-smlcp" deleted

NAME READY STATUS RESTARTS AGE

alloy-27888 2/2 Running 0 12s

alloy-jr9ck 1/2 Running 0 11s

alloy-kd5lh 1/2 Running 0 10s

alloy-q8s4j 1/2 Running 0 11s

This time some Gemini usage succeeded in pushing OTLP data through Alloy and on to the Datadog collector!
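To make the difference between the two exporter blocks in this post explicit, here is a sketch that renders either one. The gRPC branch reproduces the block that worked above (bare `host:port` plus `tls { insecure = true }`); the HTTP branch mirrors the `otlphttp` block tried earlier and is not confirmed working in this setup. `render_exporter` and `input_ref` are hypothetical helpers, not part of Alloy.

```python
def render_exporter(name, host, port, protocol="grpc"):
    """Render an Alloy OTLP exporter block for 'grpc' or 'http'."""
    if protocol == "grpc":
        lines = [
            f'otelcol.exporter.otlp "{name}" {{',
            "  client {",
            f'    endpoint = "{host}:{port}"',  # no scheme for gRPC
            "    tls {",
            "      insecure = true",            # plaintext, as in the working config
            "    }",
            "  }",
            "}",
        ]
    elif protocol == "http":
        lines = [
            f'otelcol.exporter.otlphttp "{name}" {{',
            "  client {",
            f'    endpoint = "http://{host}:{port}"',
            "  }",
            "}",
        ]
    else:
        raise ValueError(f"unknown protocol: {protocol}")
    return "\n".join(lines)


def input_ref(name, protocol="grpc"):
    """Return the reference the batch processor's output lists must use."""
    component = "otelcol.exporter.otlp" if protocol == "grpc" else "otelcol.exporter.otlphttp"
    return f"{component}.{name}.input"
```

The second helper is there because switching exporter types also means updating the three `output` references in the batch processor, as the config above does.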

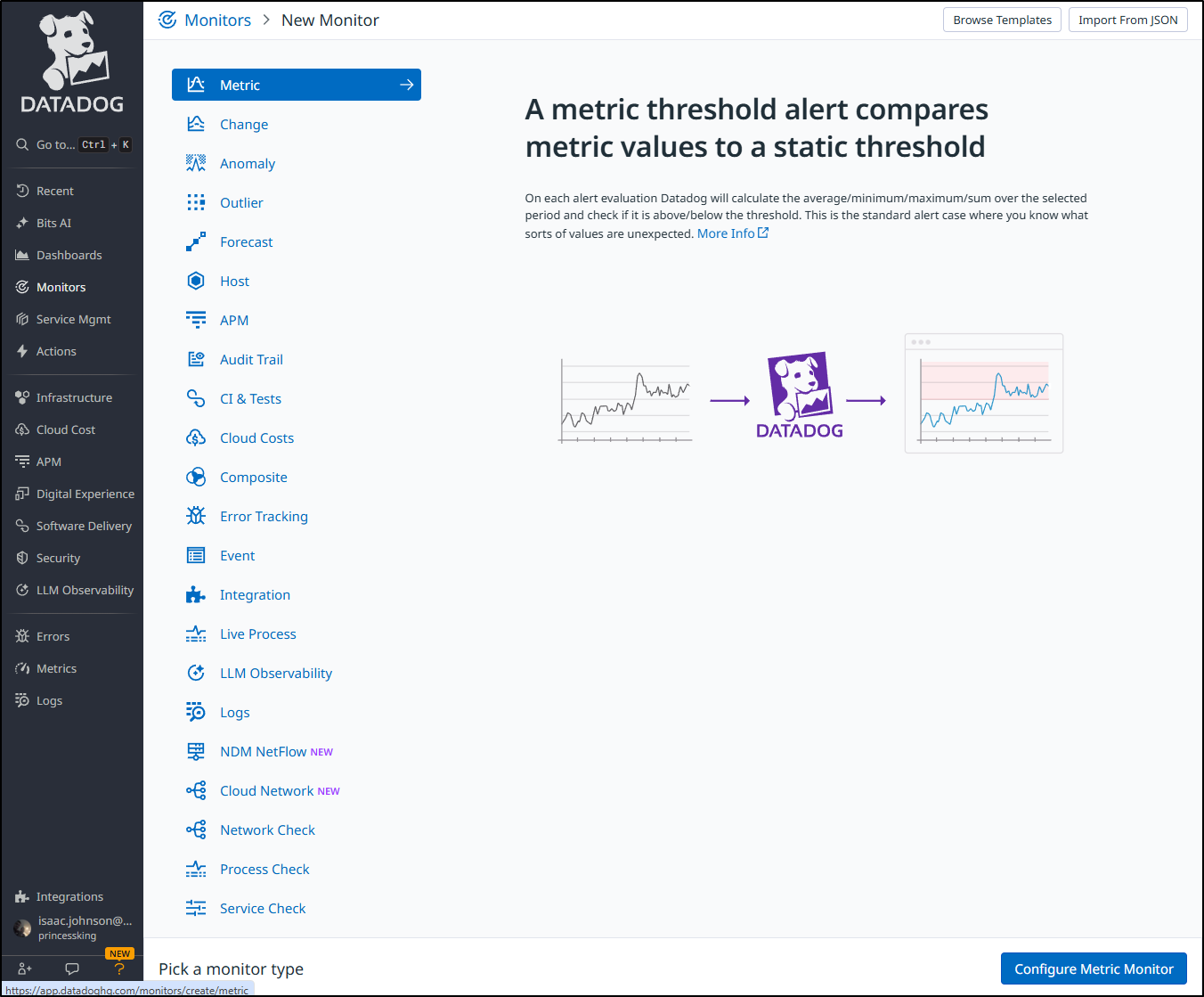

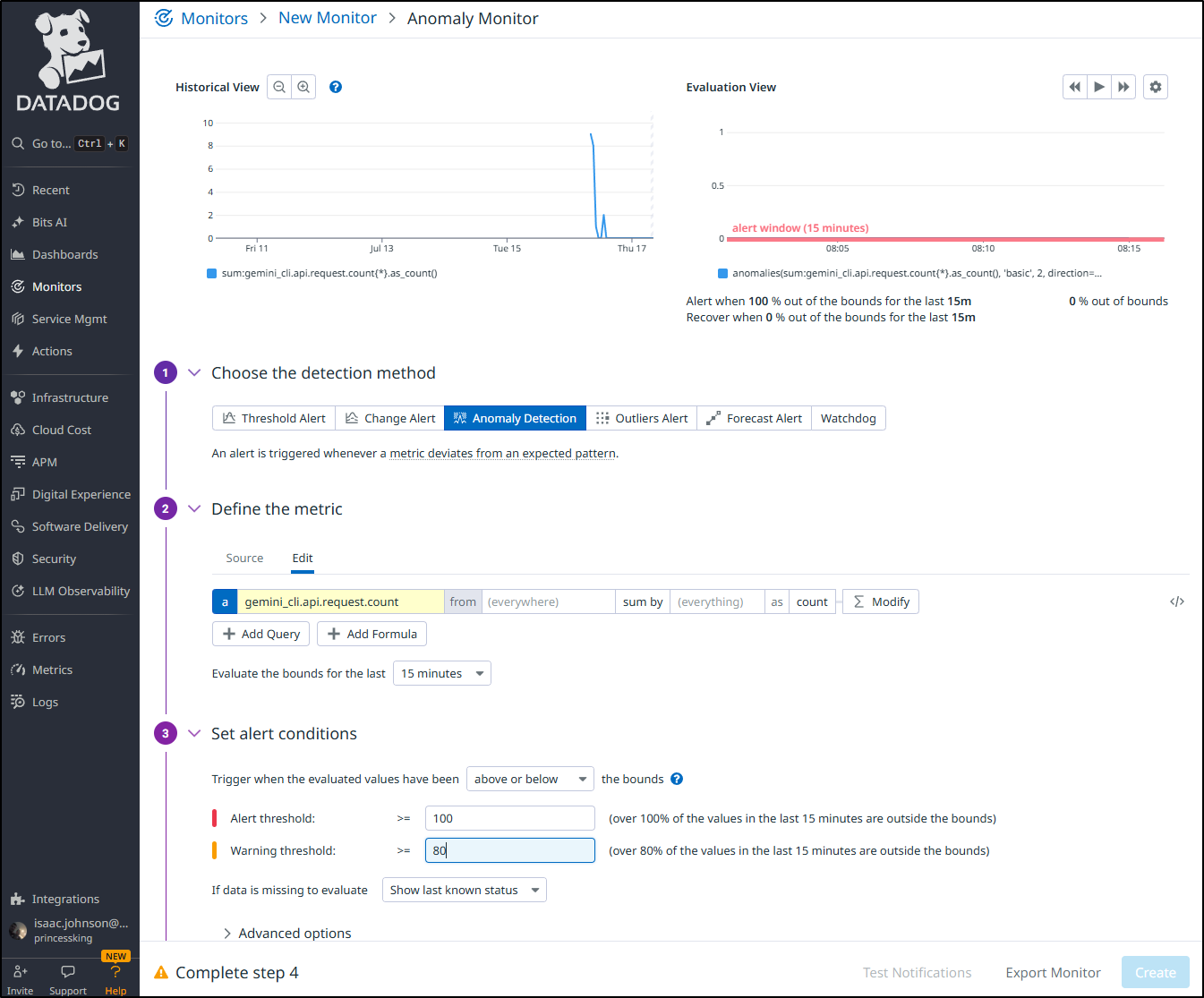

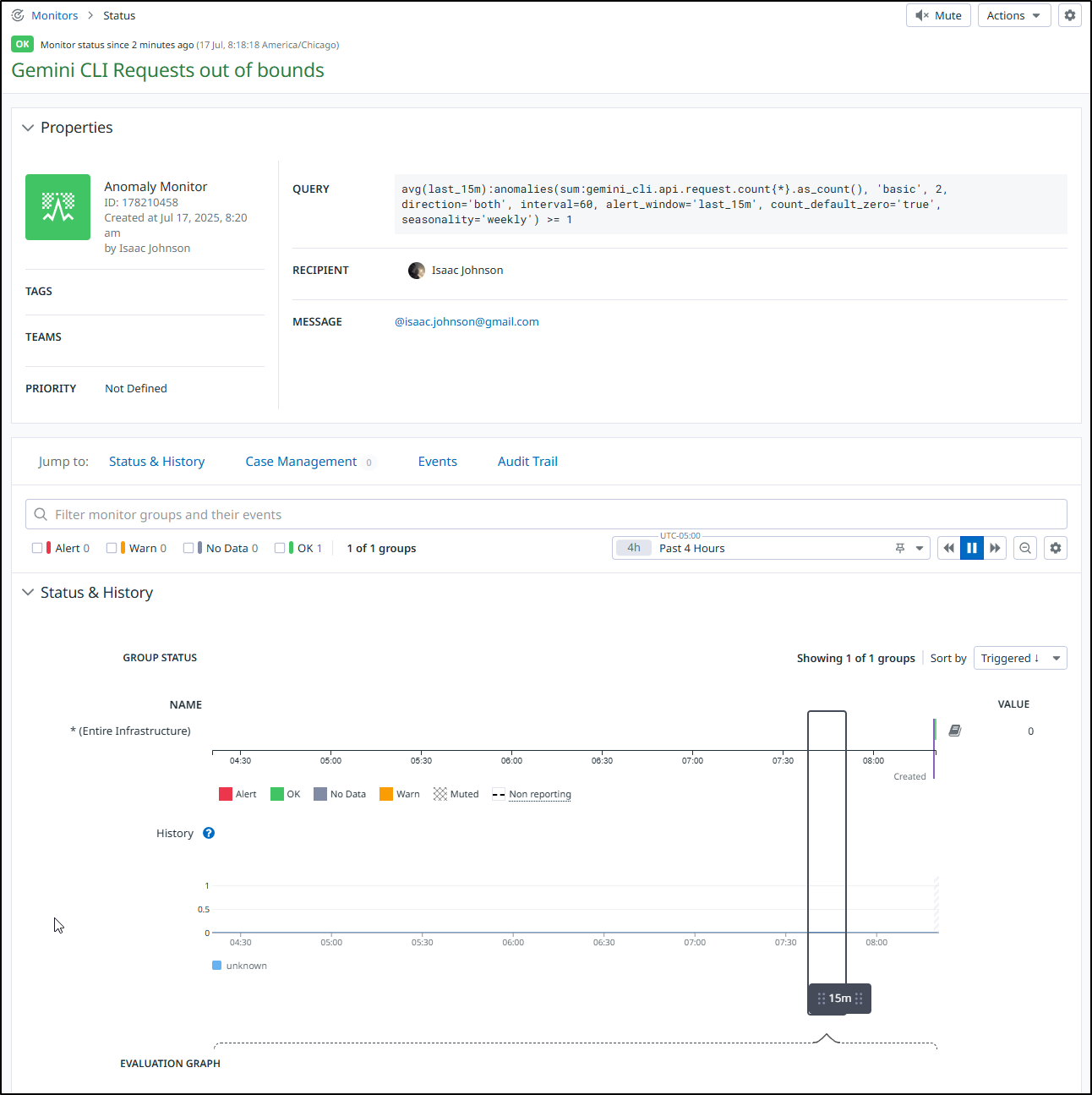

Alerts

Because these are metrics, we can create a new monitor based on a metric

Perhaps the first metric-based one I might make is an anomaly detection monitor. This kind of monitor just looks for “out of norm” values and alerts on them. That way, if my trend of requests grows, I won’t have to keep fiddling with the monitor thresholds
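To give a feel for the idea (and only the idea), here is a toy sketch that flags a point when it sits more than a few standard deviations away from the mean of the preceding window. This is not Datadog's algorithm; it is a plain rolling z-score, and the function name and sample values are hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indices of points far outside the preceding window's norm."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all is out of norm.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies
```

Because the baseline moves with the window, a slow upward trend in requests shifts the "norm" along with it, which is the property that makes this style of monitor lower maintenance than a fixed threshold.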

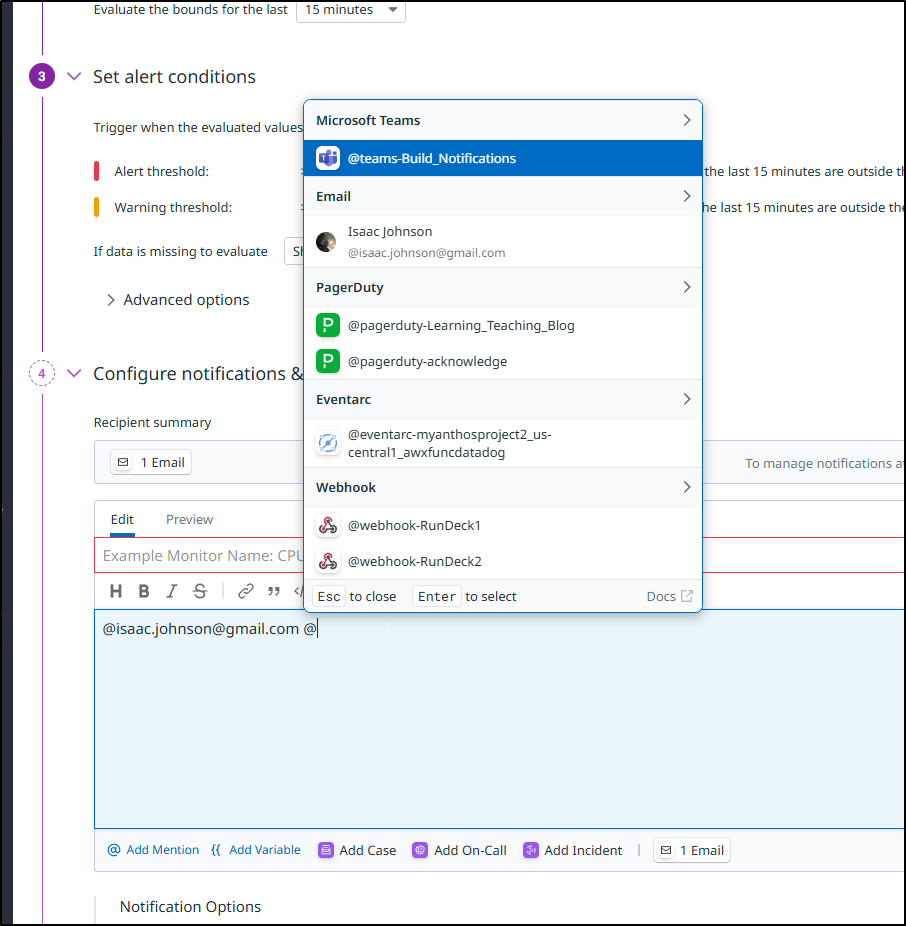

I can get an email or even trigger things like GCP Eventarc (which triggers a Cloud Run function) or PagerDuty

I’ll save it

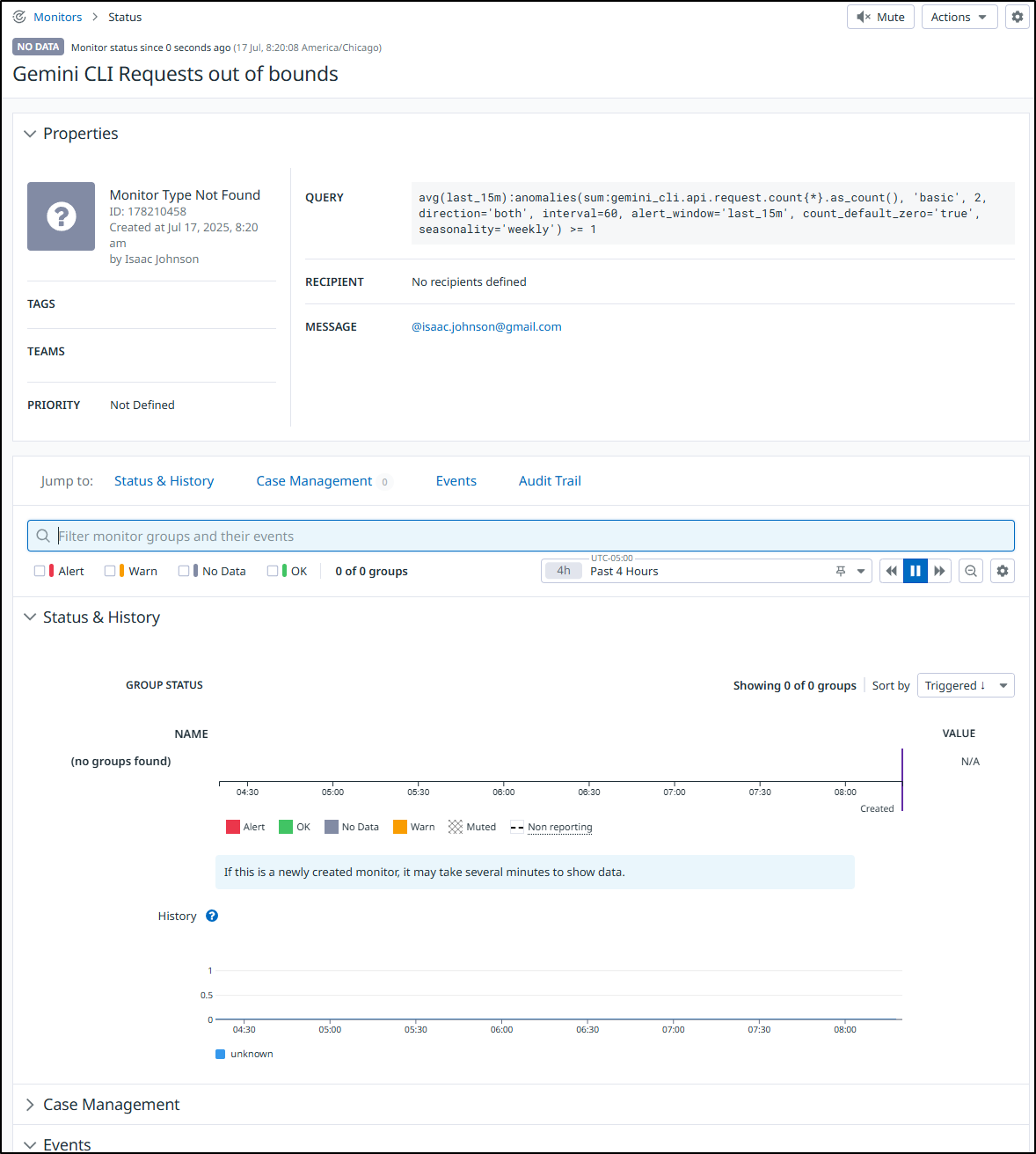

Then when I look back at the monitor I can see values, triggers, etc

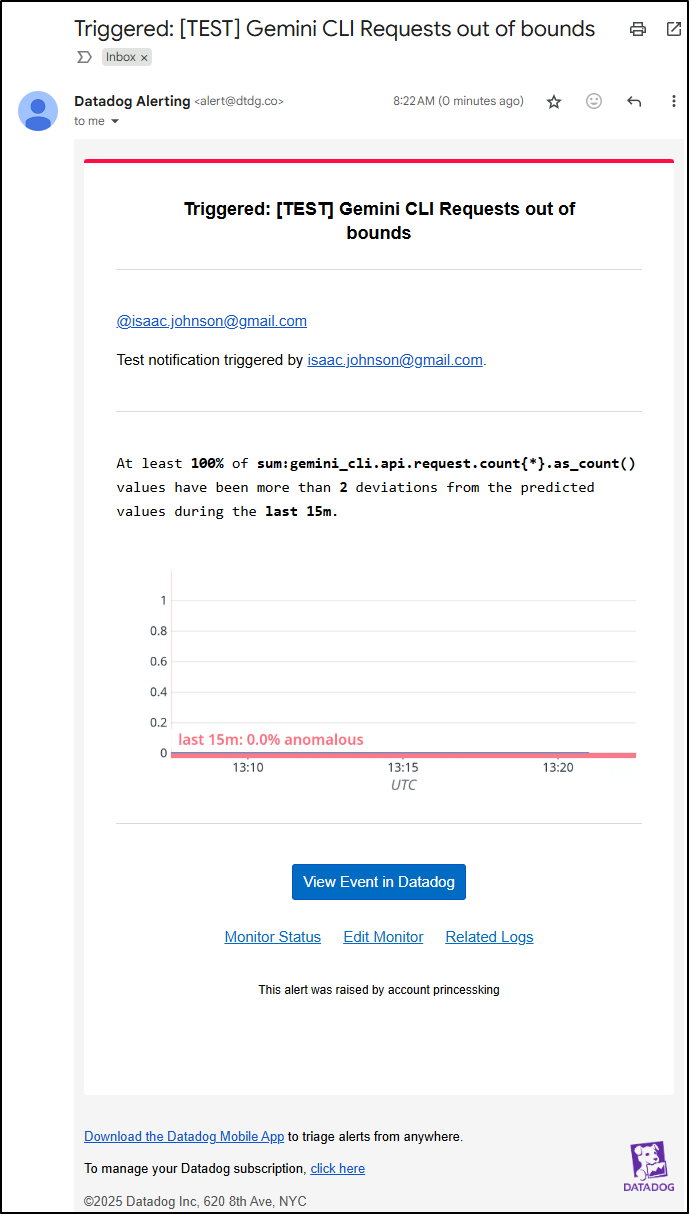

I ran a quick test and this is what the alert would look like when it comes in

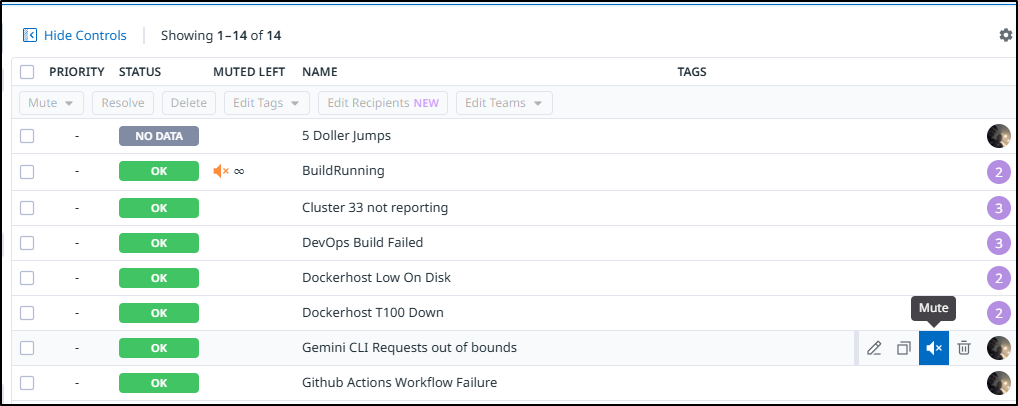

The other thing to point out is that if the alert gets a bit noisy, we can mute it

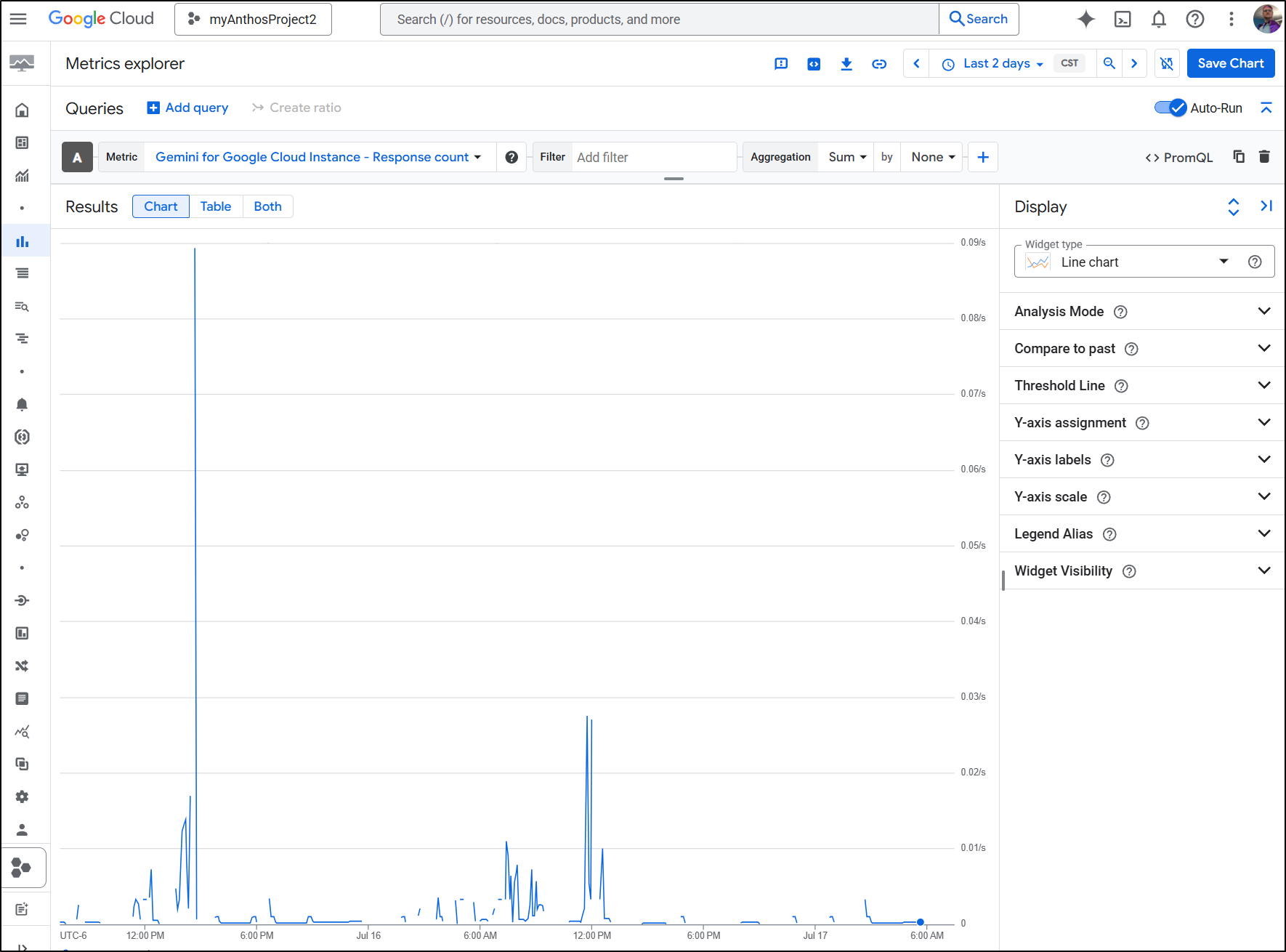

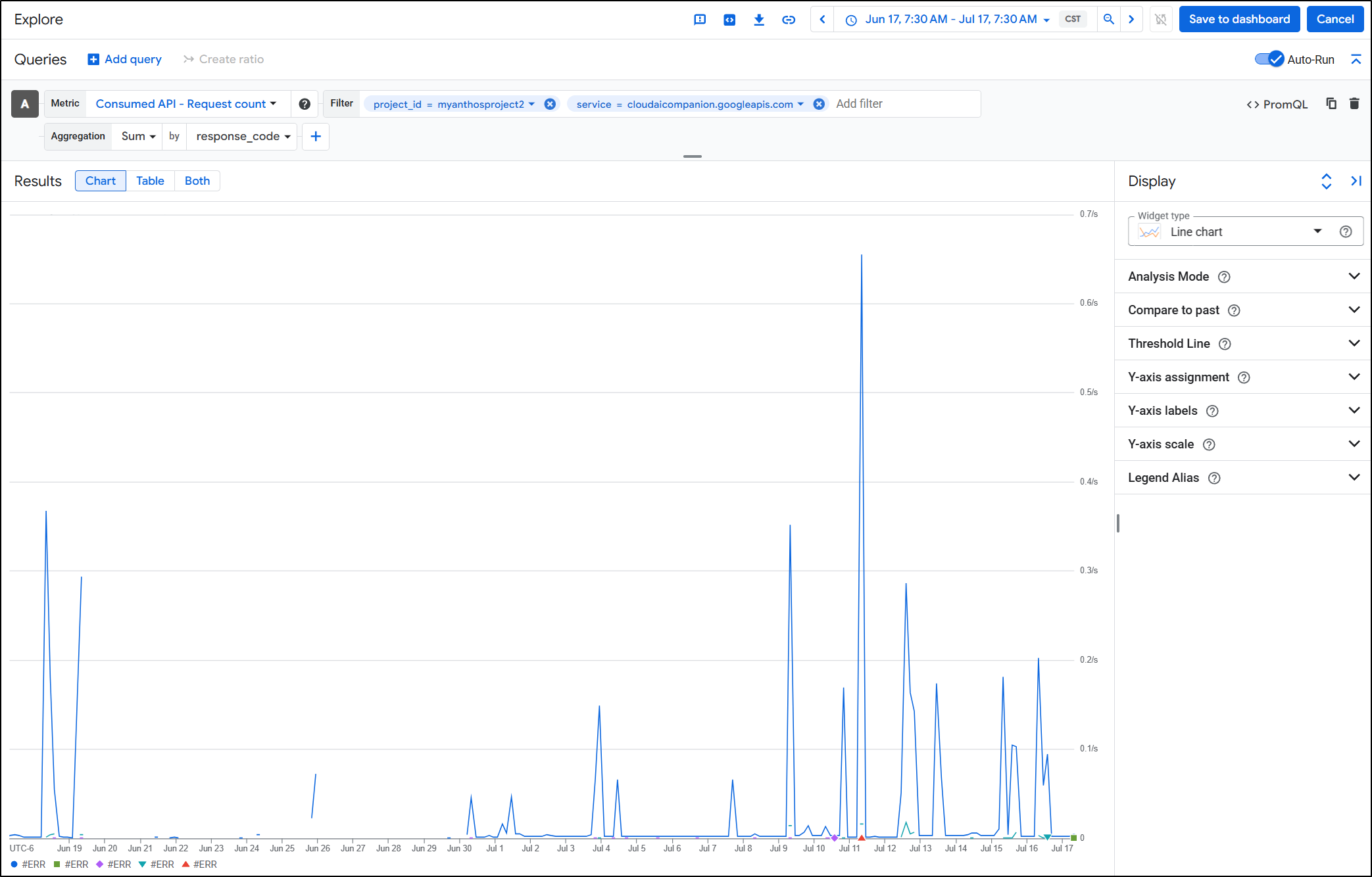

Google Cloud Monitoring

In the GCP Console, we can, of course, fetch the same results natively in Stackdriver for the “Response count”

You can also view Gemini API usage by way of “cloudaicompanion.googleapis.com”, but I would not be surprised to see that change again (it used to be Duet, Bard, etc.).

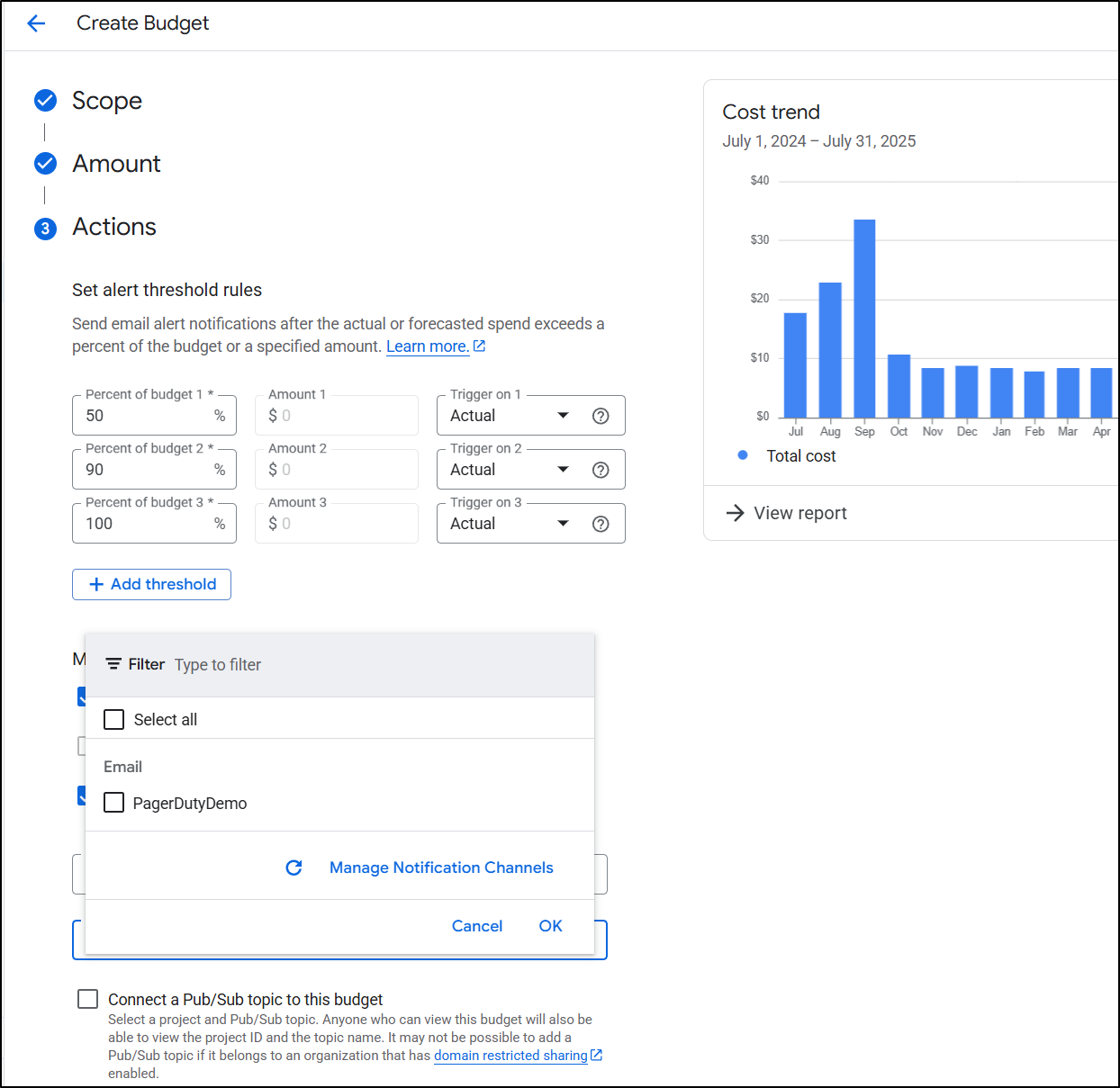

One can also use budgets with alerting rules that send to monitoring notification channels:

But be aware, there are costs involved.

If you are interested in how to set that up, look at Cloud Budgets and Alerts, which covers AWS, Azure and GCP, and Cloud Spend: Find and Fix, which covers a time in 2024 when my costs spiked (in GCP) and I needed to find and fix them.

Summary

Using the Datadog agent in Kubernetes was quite easy and setting it up to forward OTLP data was just a mere modification of the agent’s settings. What was a bit more difficult was working through the nuances of taking one OTLP collector, Alloy, and getting it to accurately point to another (Datadog).

I do believe most of my issues were because, unlike the standard OpenTelemetry collector, the Grafana Alloy one assumes TLS/HTTPS, which really isn’t the norm for OTLP (the standard defines HTTP over TCP). When we did this with New Relic, it was skipped over as they use a public HTTPS endpoint with the license key baked into the headers. With Datadog, the auth parts are in the collector, so it wasn’t really necessary to do TLS. I believe that had I exposed the OTLP endpoint with a new TLS front door (e.g. creating something like fbotlp.tpk.pw and letting NGINX sort out HTTPS to HTTP), we would have been fine.

I found Datadog a bit easier to explore, metrics-wise, because of the way they organize fields and have live results. In my New Relic, I lacked AI (not included in free tier) so I had a lot of guessing to do. Datadog includes “Bits” even at the lowest tiers meaning we could have leveraged Datadog AI if needed.

I think both suites did equally well on the dashboards. And both Datadog and New Relic were easier to build out than the OOTB Grafana ones.

Lastly, I, again, tend to find a bit of a Matthew 6:24 issue here. I really honestly like both Datadog and New Relic and have friends at both companies. I think a person debating the two would just need to really look at the full offering to compare suites. Even today I dance between the two for my personal needs.

Hopefully you found something useful here and that you can take forward into your own environments.