Published: Oct 28, 2025 by Isaac Johnson

Vert is an excellent media converter that I found via Marius. It does one thing: convert files from one format to another. I had a lot of old 3GPP files from “the olden days” of cell phones to try it out on.

Watchtower is a great container-updating tool (itself a container), but for those who want more of a UI there is a related project, Tugtainer, which I found offers a somewhat better user experience.

Let’s try all these tools both locally in Docker and in some cases Kubernetes.

Vert.sh

I saw this Marius post a few weeks ago about Vert, which is an open-source file converter.

Let’s start with Docker:

$ docker run -d \

--restart unless-stopped \

-p 3000:80 \

--name "vert" \

ghcr.io/vert-sh/vert:latest

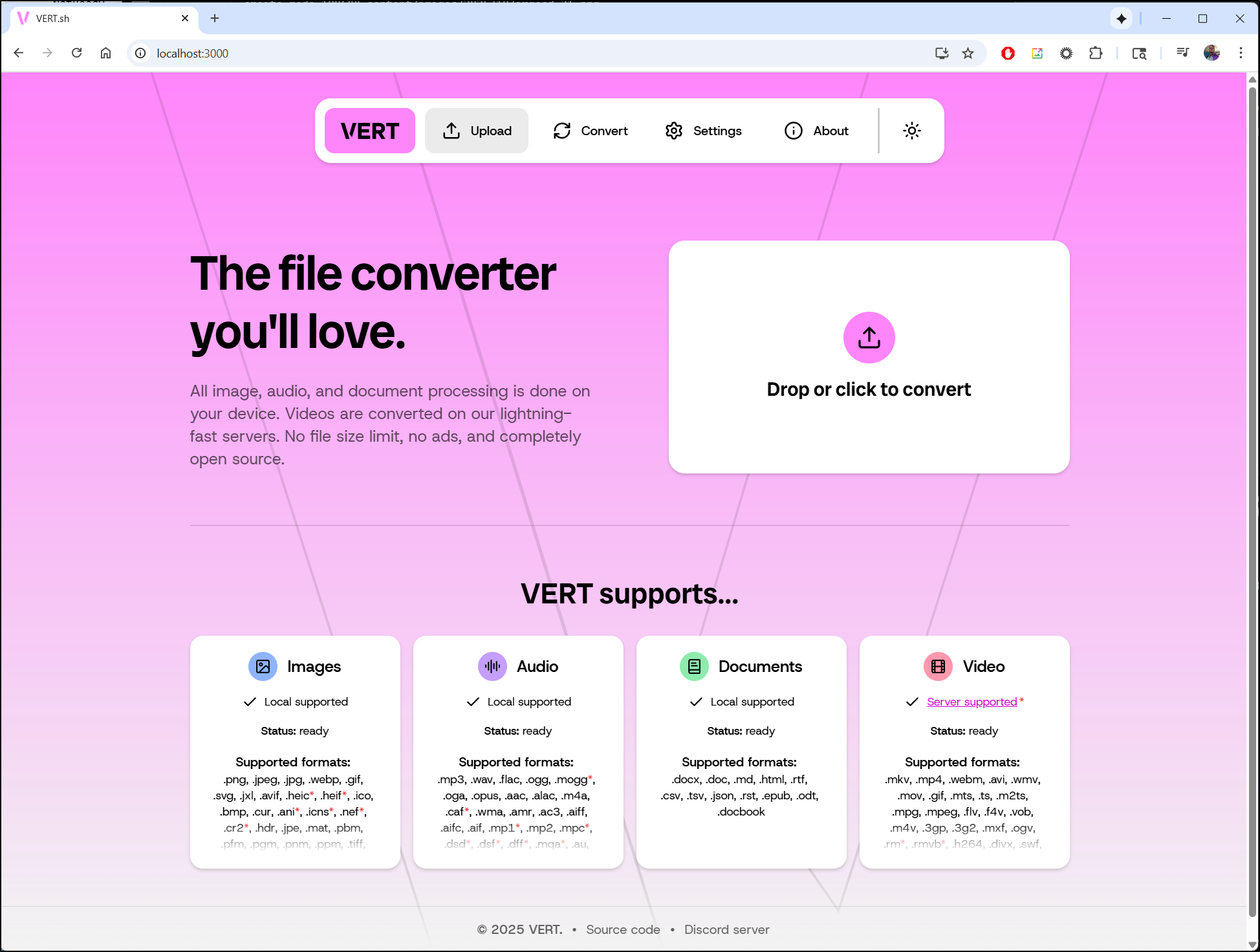

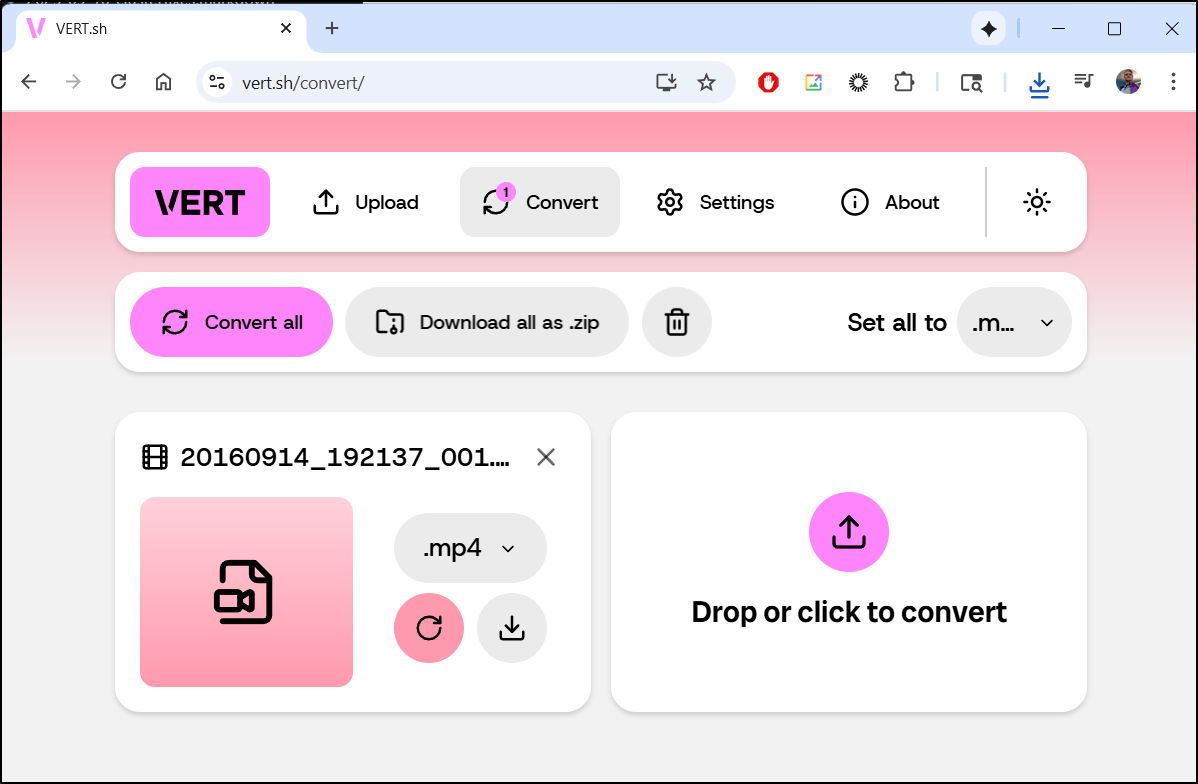

Which loads just fine

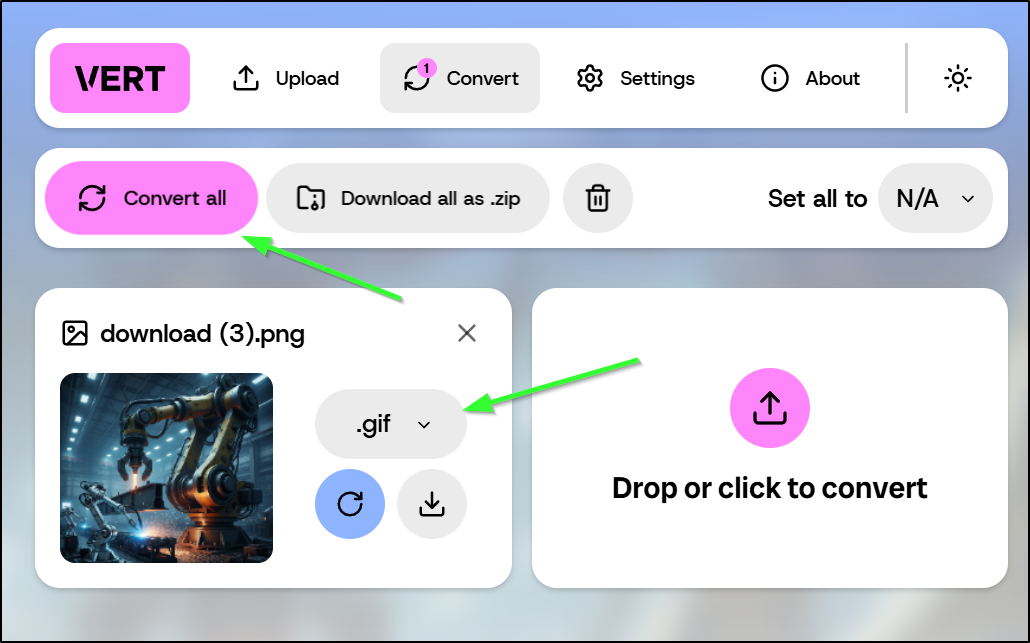

I can now convert files using this local Docker instance. For example, I can upload a PNG, select GIF, and use convert all to turn it into a GIF.

I tried a few video files like AVI and old phone 3GP files

That said, there is no requirement to self-host: we can just use vert.sh directly.

Watchtower

Watchtower is all about automating the update of a containerized app whenever a new version is pushed to a registry.

Let’s take a look at its usage docs to see how we might deploy this on a Docker host.

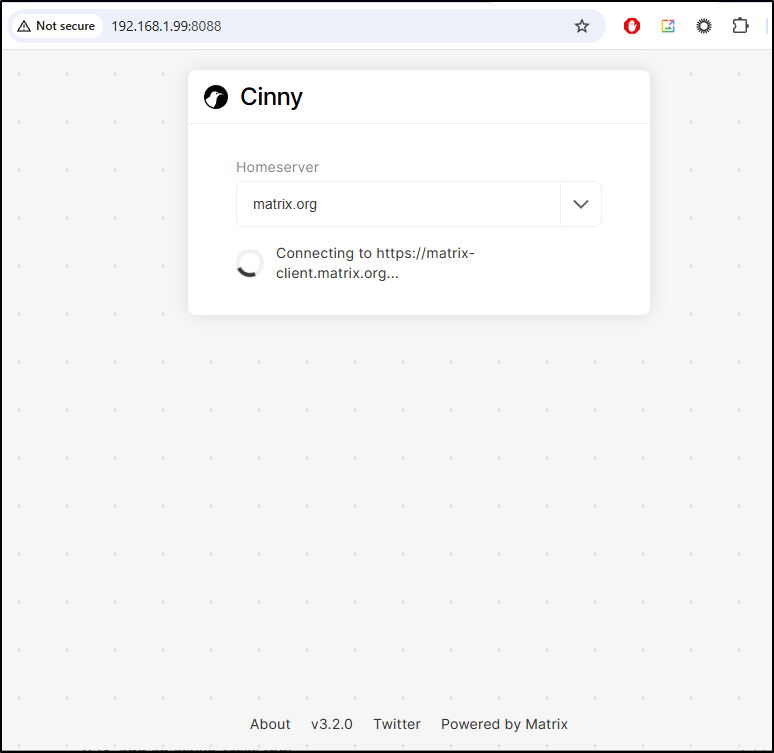

Let’s start with a container that is way out of date, Cinny

$ docker ps | grep cinny

9fe031d65142 ghcr.io/cinnyapp/cinny:latest "/docker-entrypoint.…" 21 months ago Up 4 weeks 0.0.0.0:8088->80/tcp, :::8088->80/tcp cinny

I should be able to run

docker run -d \

--name watchtower \

-v /var/run/docker.sock:/var/run/docker.sock \

containrrr/watchtower cinny --debug

It looks like it scheduled the first check for 24 hours out:

builder@builder-T100:~$ docker run -d \

--name watchtower \

-v /var/run/docker.sock:/var/run/docker.sock \

containrrr/watchtower cinny --debug

Unable to find image 'containrrr/watchtower:latest' locally

latest: Pulling from containrrr/watchtower

57241801ebfd: Pull complete

3d4f475b92a2: Pull complete

1f05004da6d7: Pull complete

Digest: sha256:6dd50763bbd632a83cb154d5451700530d1e44200b268a4e9488fefdfcf2b038

Status: Downloaded newer image for containrrr/watchtower:latest

9e91a2848c4948e52c6c76bf3afd0ff0462728a7e31174949f1426c70c3a9c8b

builder@builder-T100:~$ docker ps | grep watch

9e91a2848c49 containrrr/watchtower "/watchtower cinny -…" 7 seconds ago Up 7 seconds (health: starting) 8080/tcp watchtower

builder@builder-T100:~$ docker logs watchtower

time="2025-10-15T12:02:41Z" level=debug msg="Sleeping for a second to ensure the docker api client has been properly initialized."

time="2025-10-15T12:02:42Z" level=debug msg="Making sure everything is sane before starting"

time="2025-10-15T12:02:42Z" level=debug msg="Retrieving running containers"

time="2025-10-15T12:02:42Z" level=debug msg="There are no additional watchtower containers"

time="2025-10-15T12:02:42Z" level=debug msg="Watchtower HTTP API skipped."

time="2025-10-15T12:02:42Z" level=info msg="Watchtower 1.7.1"

time="2025-10-15T12:02:42Z" level=info msg="Using no notifications"

time="2025-10-15T12:02:42Z" level=info msg="Only checking containers which name matches \"cinny\""

time="2025-10-15T12:02:42Z" level=info msg="Scheduling first run: 2025-10-16 12:02:42 +0000 UTC"

time="2025-10-15T12:02:42Z" level=info msg="Note that the first check will be performed in 23 hours, 59 minutes, 59 seconds"
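For anyone who would rather not wait a day, Watchtower also has a --run-once flag that performs a single check and exits. The following is only a sketch: the function name is made up for illustration, the DOCKER override exists purely so the command can be previewed (DOCKER=echo), and it assumes no other Watchtower container is already running on the host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a one-off Watchtower check for the named containers.
# Set DOCKER=echo to print the command instead of executing it.
watchtower_run_once() {
  "${DOCKER:-docker}" run --rm \
    -v /var/run/docker.sock:/var/run/docker.sock \
    containrrr/watchtower --run-once --debug "$@"
}
```

Calling watchtower_run_once cinny would check only the cinny container, then remove the temporary Watchtower container when it finishes.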

I’m patient so I’ll wait a day and see (after 8am Thur…)

I checked on Friday at 6:15a and saw it indeed updating the image.

time="2025-10-15T12:02:42Z" level=info msg="Note that the first check will be performed in 23 hours, 59 minutes, 59 seconds"

time="2025-10-16T12:02:42Z" level=debug msg="Checking containers for updated images"

time="2025-10-16T12:02:42Z" level=debug msg="Retrieving running containers"

time="2025-10-16T12:02:42Z" level=debug msg="Trying to load authentication credentials." container=/cinny image="ghcr.io/cinnyapp/cinny:latest"

time="2025-10-16T12:02:42Z" level=debug msg="No credentials for ghcr.io found" config_file=/config.json

time="2025-10-16T12:02:42Z" level=debug msg="Got image name: ghcr.io/cinnyapp/cinny:latest"

time="2025-10-16T12:02:42Z" level=debug msg="Checking if pull is needed" container=/cinny image="ghcr.io/cinnyapp/cinny:latest"

time="2025-10-16T12:02:42Z" level=debug msg="Built challenge URL" URL="https://ghcr.io/v2/"

time="2025-10-16T12:02:42Z" level=debug msg="Got response to challenge request" header="Bearer realm=\"https://ghcr.io/token\",service=\"ghcr.io\",scope=\"repository:user/image:pull\"" status="401 Unauthorized"

time="2025-10-16T12:02:42Z" level=debug msg="Checking challenge header content" realm="https://ghcr.io/token" service=ghcr.io

time="2025-10-16T12:02:42Z" level=debug msg="Setting scope for auth token" image=ghcr.io/cinnyapp/cinny scope="repository:cinnyapp/cinny:pull"

time="2025-10-16T12:02:42Z" level=debug msg="No credentials found."

time="2025-10-16T12:02:42Z" level=debug msg="Parsing image ref" host=ghcr.io image=cinnyapp/cinny normalized=ghcr.io/cinnyapp/cinny tag=latest

time="2025-10-16T12:02:42Z" level=debug msg="Doing a HEAD request to fetch a digest" url="https://ghcr.io/v2/cinnyapp/cinny/manifests/latest"

time="2025-10-16T12:02:42Z" level=debug msg="Found a remote digest to compare with" remote="sha256:8a7f4eee8164afc3e7ccf074ba5ac7d139fe415ae7612526e9bc9812789e622f"

time="2025-10-16T12:02:42Z" level=debug msg=Comparing local="sha256:419e4a03cb9e3b1547eeb0e7e14bf6b9ebc42bd0b499bbebcc5b489f2942f489" remote="sha256:8a7f4eee8164afc3e7ccf074ba5ac7d139fe415ae7612526e9bc9812789e622f"

time="2025-10-16T12:02:42Z" level=debug msg="Digests did not match, doing a pull."

time="2025-10-16T12:02:42Z" level=debug msg="Pulling image" container=/cinny image="ghcr.io/cinnyapp/cinny:latest"

time="2025-10-16T12:02:45Z" level=info msg="Found new ghcr.io/cinnyapp/cinny:latest image (ffe77a51da41)"

time="2025-10-16T12:02:45Z" level=info msg="Stopping /cinny (9fe031d65142) with SIGTERM"

time="2025-10-16T12:02:55Z" level=debug msg="Removing container 9fe031d65142"

time="2025-10-16T12:02:56Z" level=info msg="Creating /cinny"

time="2025-10-16T12:02:56Z" level=debug msg="Starting container /cinny (a1433993acb8)"

time="2025-10-16T12:02:56Z" level=info msg="Session done" Failed=0 Scanned=1 Updated=1 notify=no

time="2025-10-16T12:02:56Z" level=debug msg="Scheduled next run: 2025-10-17 12:02:42 +0000 UTC"
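The decision point in that log is the "Comparing" line: Watchtower pulls only when the local and remote digests differ. As a rough sketch of reading that out of the debug logs (the helper name is hypothetical, and it assumes the log line format shown above):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read Watchtower debug logs on stdin and, for each
# "Comparing" line, report whether the local and remote digests differ.
digest_verdict() {
  grep 'msg=Comparing' | while IFS= read -r line; do
    local_digest="$(printf '%s\n' "$line" | sed -n 's/.* local="\([^"]*\)".*/\1/p')"
    remote_digest="$(printf '%s\n' "$line" | sed -n 's/.* remote="\([^"]*\)".*/\1/p')"
    if [ "$local_digest" = "$remote_digest" ]; then
      echo "up to date"
    else
      echo "pull needed"
    fi
  done
}
```

It could be fed with something like docker logs watchtower 2>&1 | digest_verdict, printing one verdict per check that got as far as the digest comparison.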

Evidence would suggest it did bounce the container at that time:

$ docker ps | grep cinny

a1433993acb8 ghcr.io/cinnyapp/cinny:latest "/docker-entrypoint.…" 23 hours ago Up 23 hours 0.0.0.0:8088->80/tcp, :::8088->80/tcp cinny

9e91a2848c49 containrrr/watchtower "/watchtower cinny -…" 47 hours ago Up 47 hours (healthy) 8080/tcp

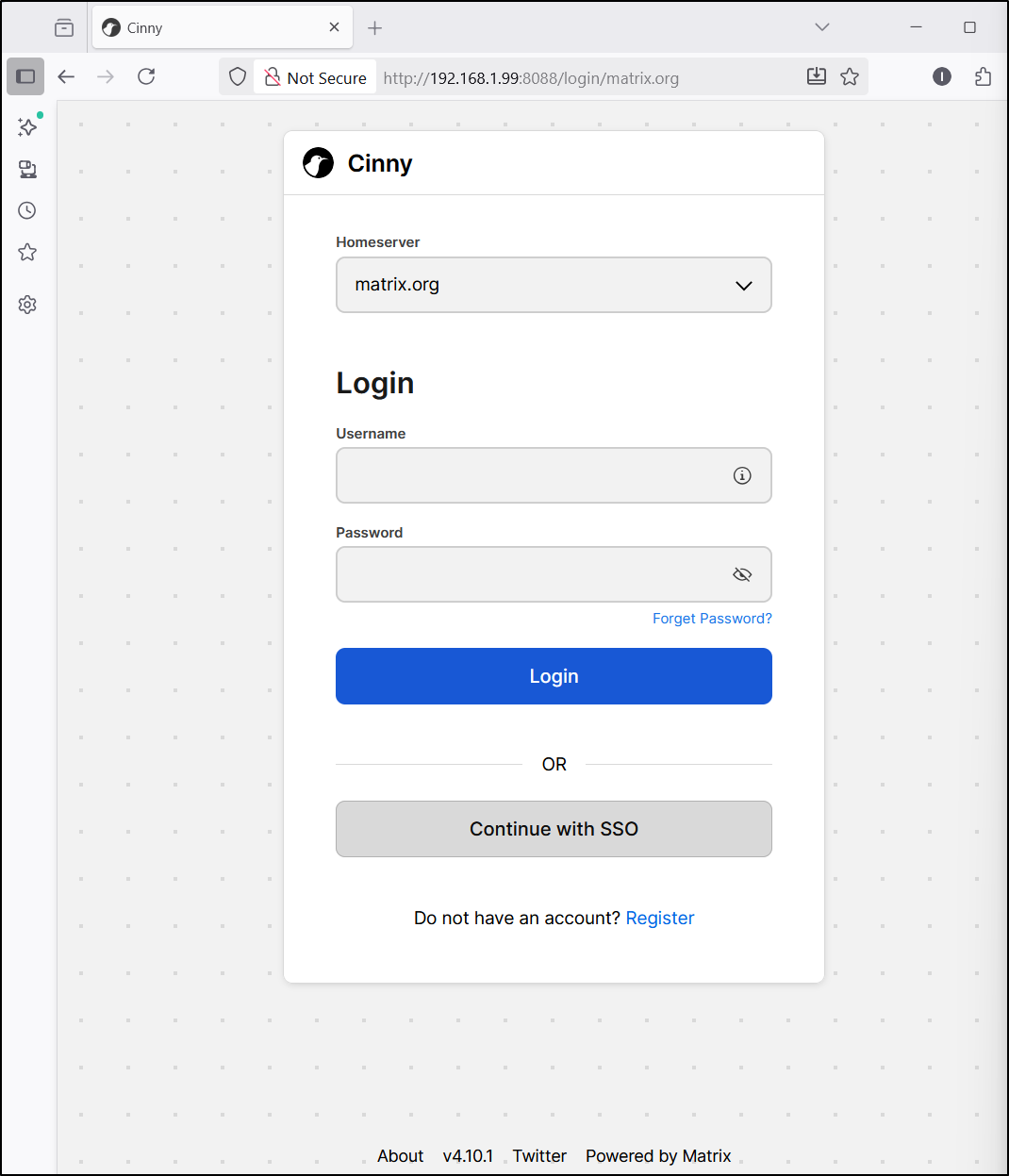

I can now see Cinny is up to version 4.10.1

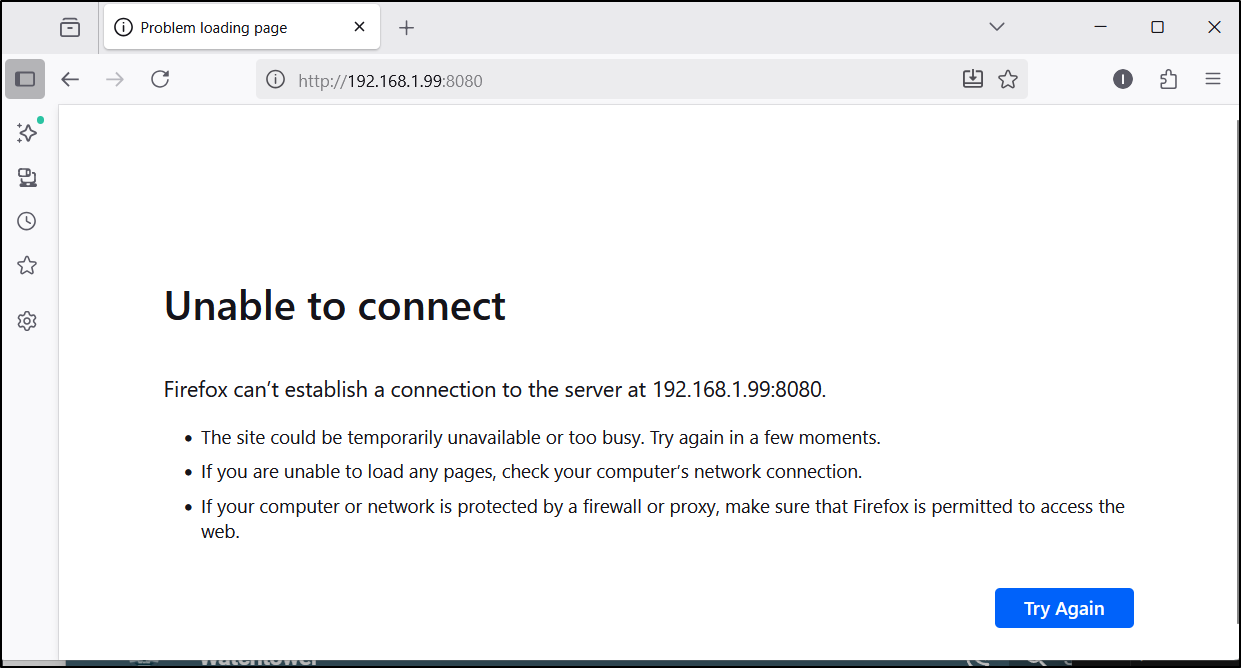

What is interesting to me is there seems to be a TCP port slated for watchtower, 8080:

$ docker ps | grep 8080

9e91a2848c49 containrrr/watchtower "/watchtower cinny -…" 47 hours ago Up 47 hours (healthy) 8080/tcp watchtower

But it does not serve any web traffic.

I saw some docs about an HTTP API mode, but that too didn’t do anything

I even double checked the container logs just in case it did trigger but didn’t respond on the web ui

builder@builder-T100:~$ docker logs watchtower 2>&1 | tail -n 5

time="2025-10-16T12:02:55Z" level=debug msg="Removing container 9fe031d65142"

time="2025-10-16T12:02:56Z" level=info msg="Creating /cinny"

time="2025-10-16T12:02:56Z" level=debug msg="Starting container /cinny (a1433993acb8)"

time="2025-10-16T12:02:56Z" level=info msg="Session done" Failed=0 Scanned=1 Updated=1 notify=no

time="2025-10-16T12:02:56Z" level=debug msg="Scheduled next run: 2025-10-17 12:02:42 +0000 UTC"

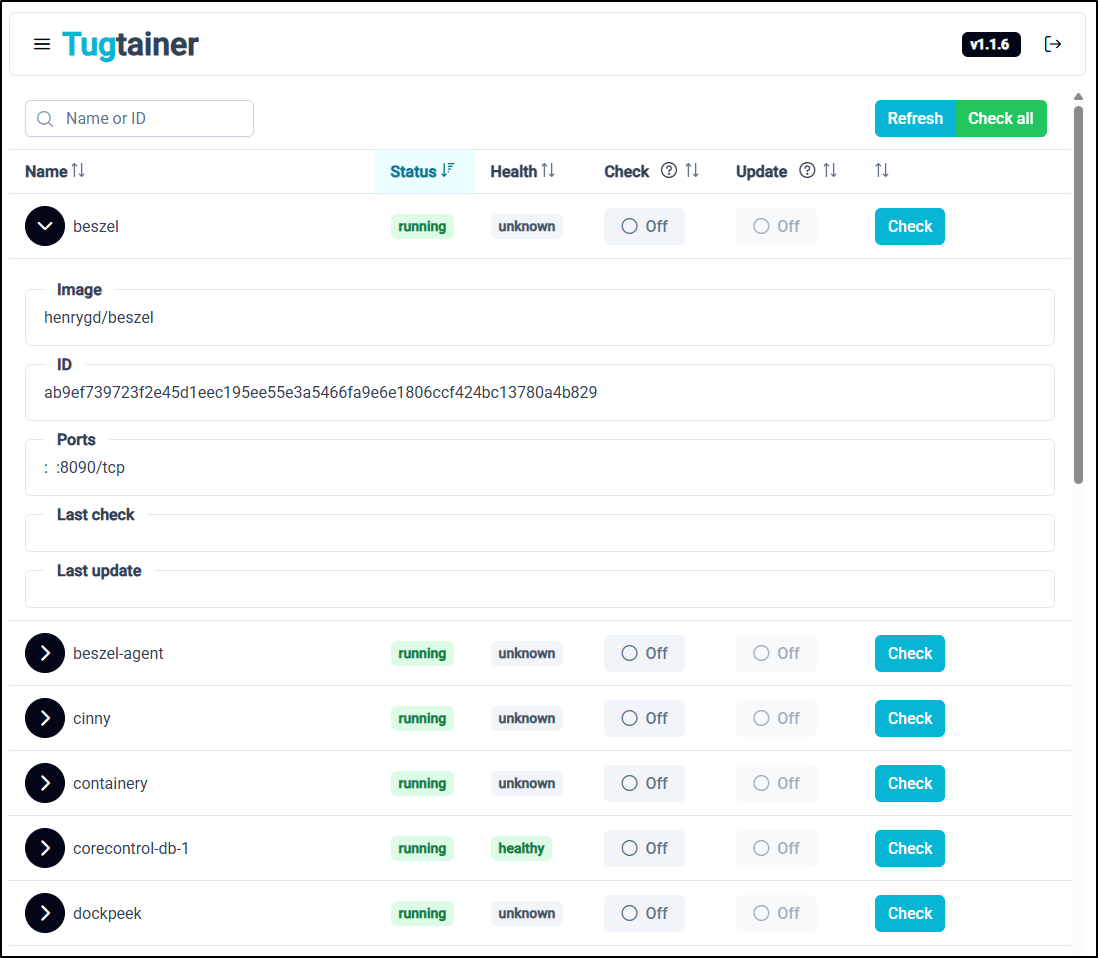
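For reference, the "Watchtower HTTP API skipped." line in the startup log suggests the API is simply off by default. As I read the Watchtower docs, it is enabled with --http-api-update plus a token, and an update is then triggered with an authenticated request to /v1/update. I did not get this working myself, so treat the following as an untested sketch: the function names and token value are placeholders, the port has to be published explicitly, and the existing watchtower container would need to be removed first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; DOCKER and CURL can be set to "echo" to preview commands.

# Start Watchtower in HTTP API mode with the given token, watching the named containers.
watchtower_http_api() {
  token="$1"; shift
  "${DOCKER:-docker}" run -d --name watchtower -p 8080:8080 \
    -v /var/run/docker.sock:/var/run/docker.sock \
    containrrr/watchtower --http-api-update --http-api-token "$token" "$@"
}

# Ask a running Watchtower (default host: localhost) to check for updates now.
trigger_update() {
  "${CURL:-curl}" -s -H "Authorization: Bearer $1" "http://${2:-localhost}:8080/v1/update"
}
```

Usage would be along the lines of watchtower_http_api mytoken cinny followed by trigger_update mytoken. Note that, per the docs, enabling the HTTP API turns off the periodic schedule unless --http-api-periodic-polls is also passed.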

Tugtainer

Another interesting app that came onto my radar is Tugtainer. It can both list your Docker containers and update them, similar to Watchtower.

It’s easy to install. Let’s add it to our docker host with a docker run command

builder@builder-T100:~/tugtainer$ mkdir data

builder@builder-T100:~/tugtainer$ docker run -d -p 9412:80 \

--name=tugtainer \

--restart=unless-stopped \

-v ./data:/tugtainer \

-v /var/run/docker.sock:/var/run/docker.sock \

quenary/tugtainer:latest

Unable to find image 'quenary/tugtainer:latest' locally

latest: Pulling from quenary/tugtainer

8c7716127147: Already exists

eb47ef3fe06b: Already exists

326ad4d2abf0: Already exists

97385090cab0: Already exists

1157ce8d3c4a: Pull complete

d248418bcf38: Pull complete

e147d769b83c: Pull complete

271d55492ae9: Pull complete

5a37a23d9d0d: Pull complete

ef016fbb39d2: Pull complete

e15da9aca888: Pull complete

47a1f3722687: Pull complete

38c1499509de: Pull complete

Digest: sha256:3bb2dc4e3b428719b015252253ae88fd4efdc224b93bfb089946c198bd779586

Status: Downloaded newer image for quenary/tugtainer:latest

8cf5956d4904246de02449fcc095c1f96153433e0da3f76159853db7976f5047

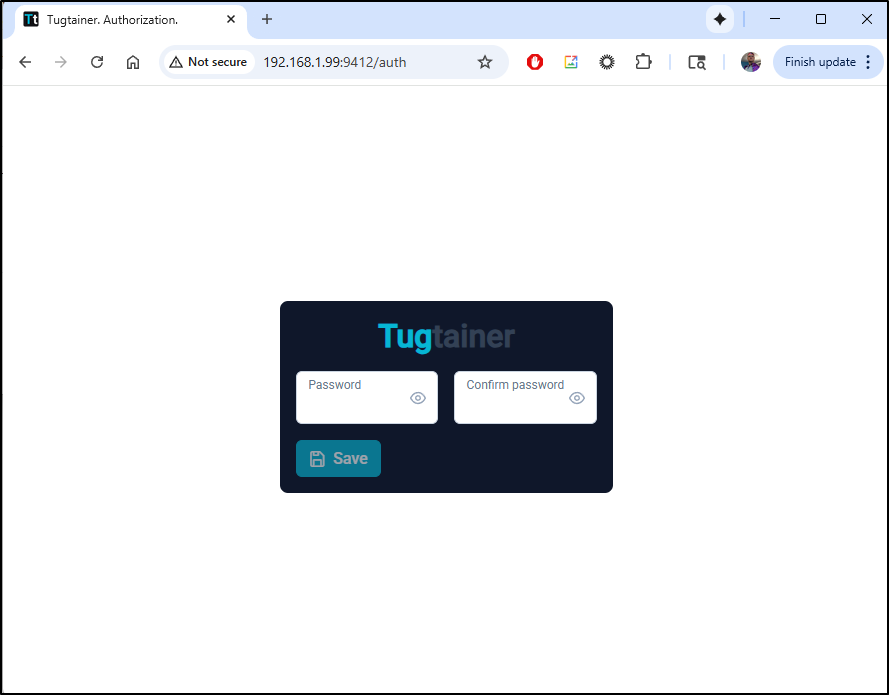

I’m now presented with the login page where I can create an admin password

Then I use it to sign back in

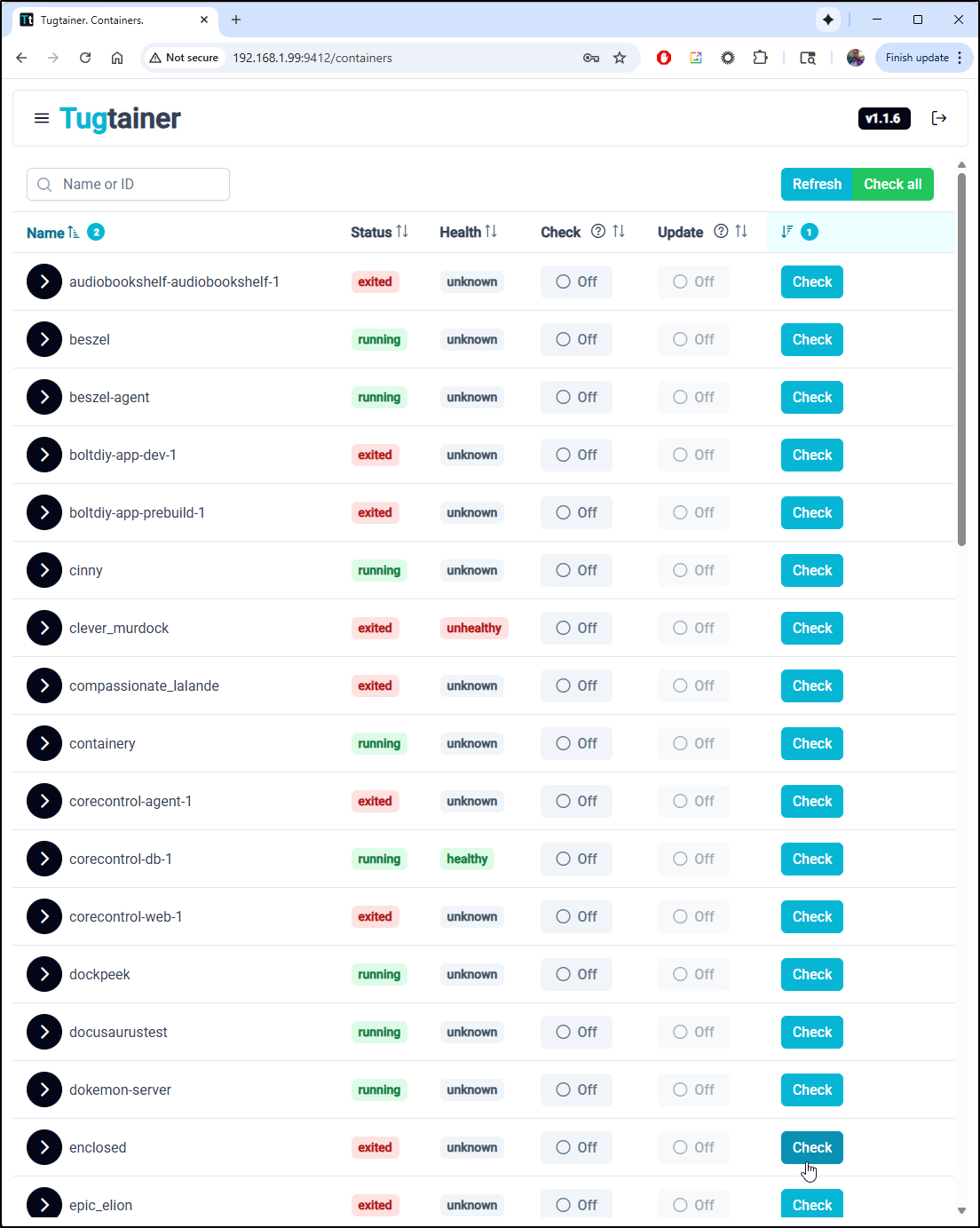

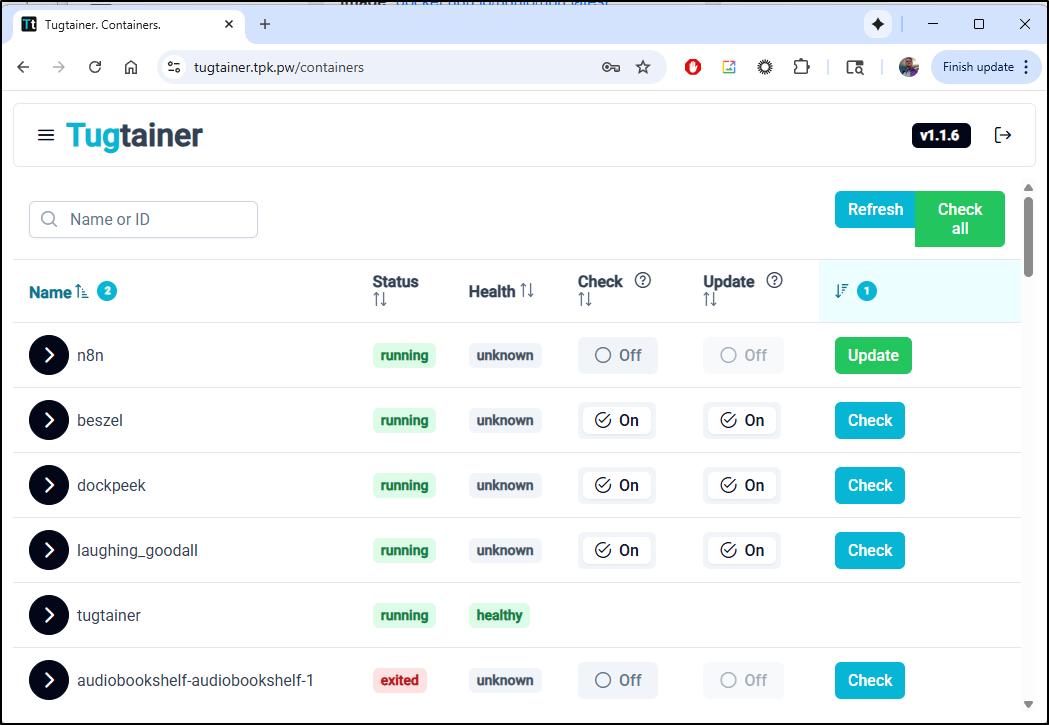

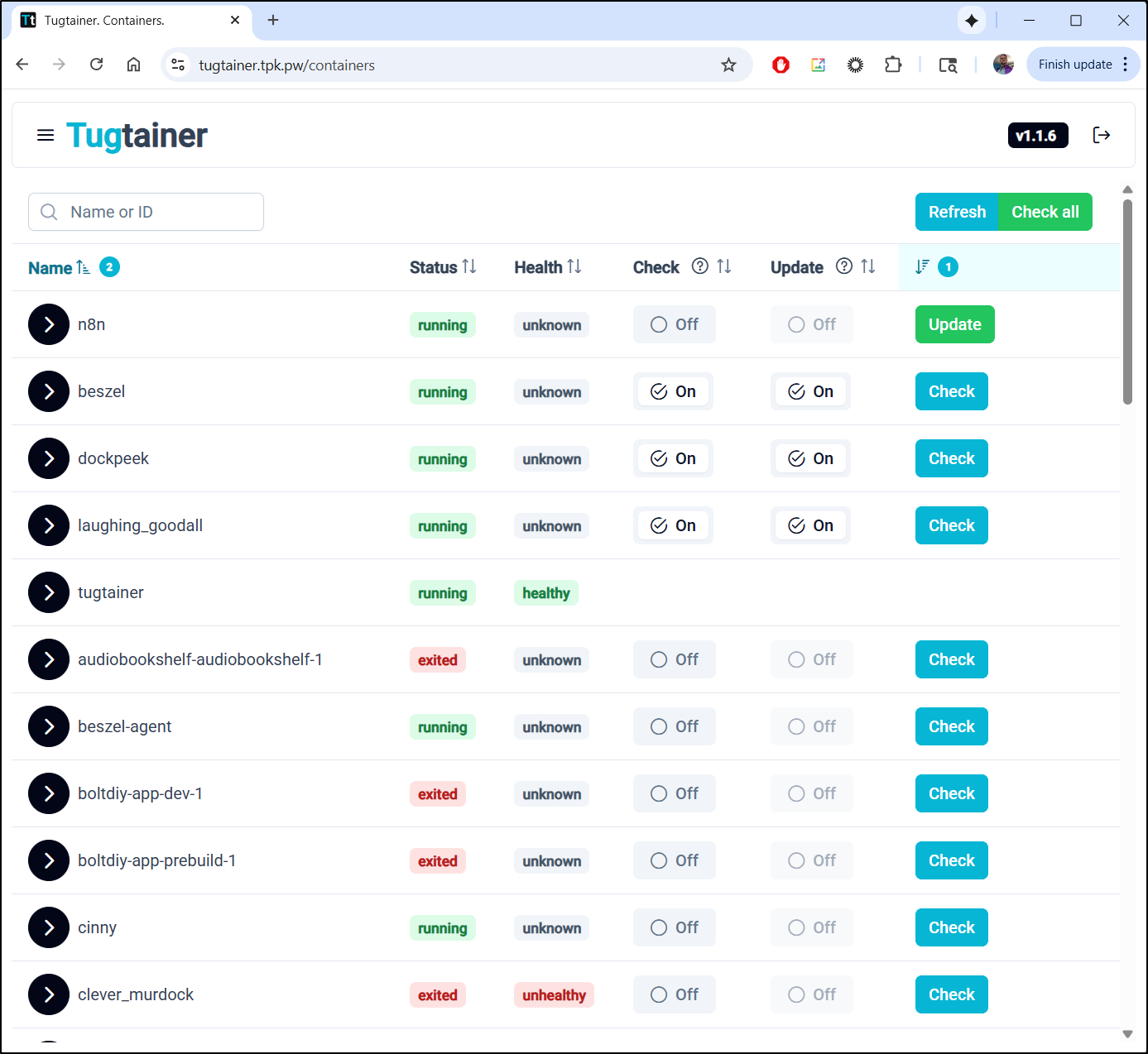

I’m now presented with a large list of all my running containers

For any service I can expand to see what ports it serves (if any)

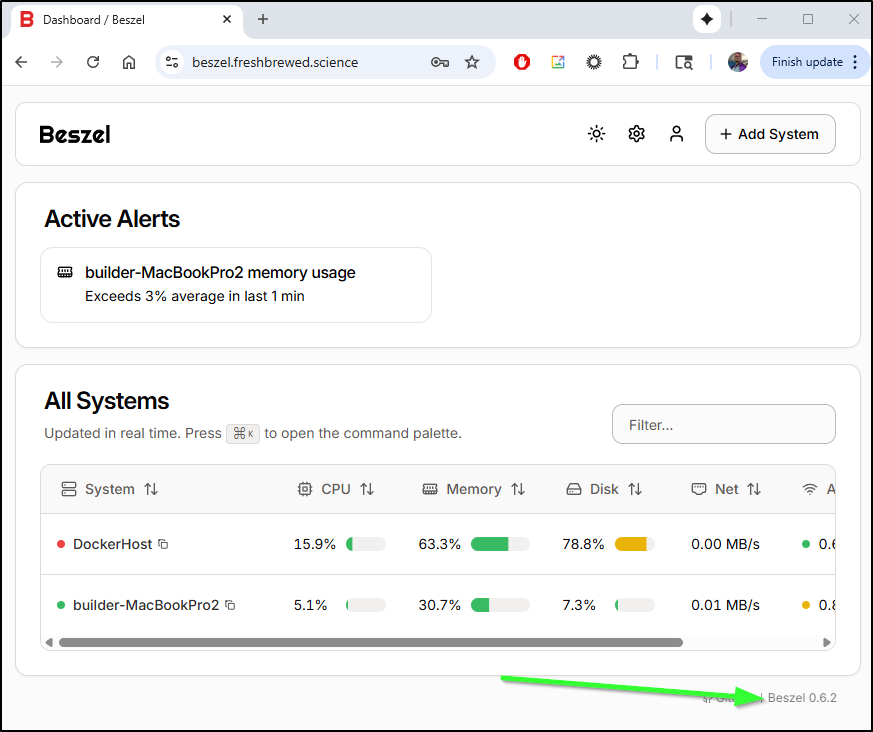

Let’s test with Beszel. I can see I do not have a tag pinned in the docker compose and it’s been 6 months since I fired it up.

builder@builder-T100:~/beszel$ cat docker-compose.yaml

services:

beszel:

image: 'henrygd/beszel'

container_name: 'beszel'

restart: unless-stopped

ports:

- '8095:8090'

volumes:

- ./beszel_data:/beszel_data

builder@builder-T100:~/beszel$ docker ps | grep besz

ab9ef739723f henrygd/beszel "/beszel serve --htt…" 6 months ago Up 4 weeks 0.0.0.0:8095->8090/tcp, :::8095->8090/tcp beszel

c3f76f918df1 henrygd/beszel-agent "/agent" 11 months ago Up 4 weeks beszel-agent

It looks like presently I’m running 0.6.2

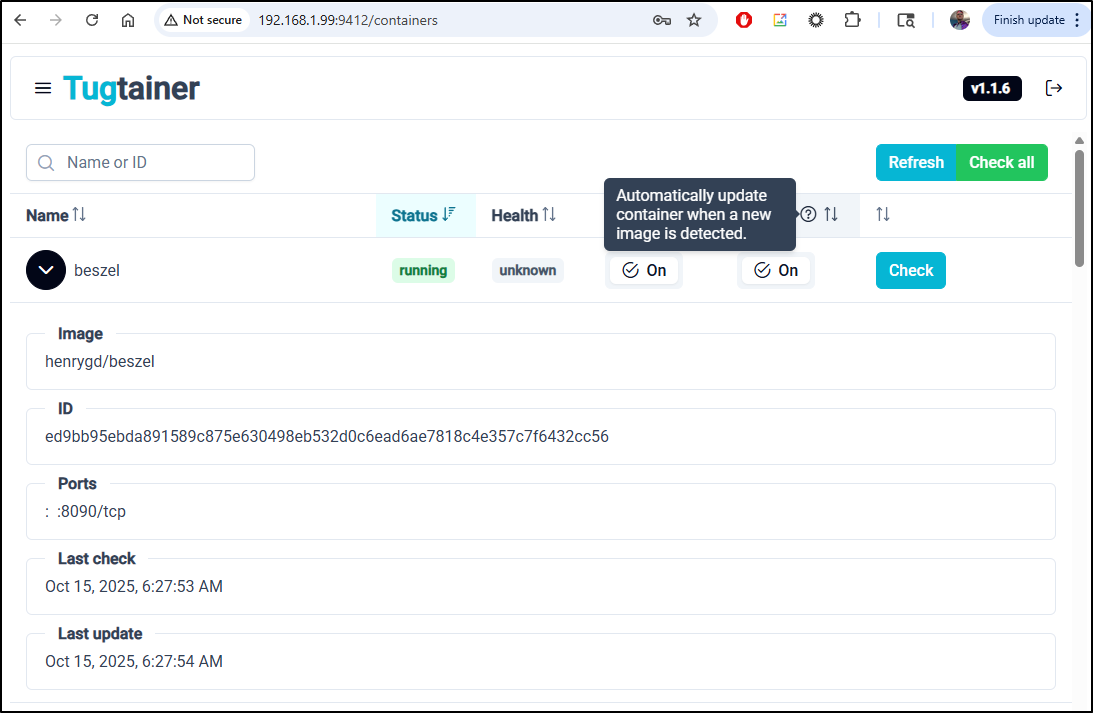

As we can see, that was incredibly easy to use to update a containerized app:

I can now tick the “Check” and “Update” boxes to have Tugtainer automatically check for updates and update

Another app I have tied to “latest” is dockpeek

builder@builder-T100:~/dockpeek$ cat docker-compose.yml

services:

dockpeek:

image: ghcr.io/dockpeek/dockpeek:latest

container_name: dockpeek

environment:

- SECRET_KEY=my_secret_key # Set secret key

- USERNAME=builder # Change default username

- PASSWORD=Redliub$1Redliub$1 # Change default password

ports:

- "3420:8000"

volumes:

- /var/run/docker.sock:/var/run/docker.sock

restart: unless-stopped

Here I’ll just set the auto-update and watch it work

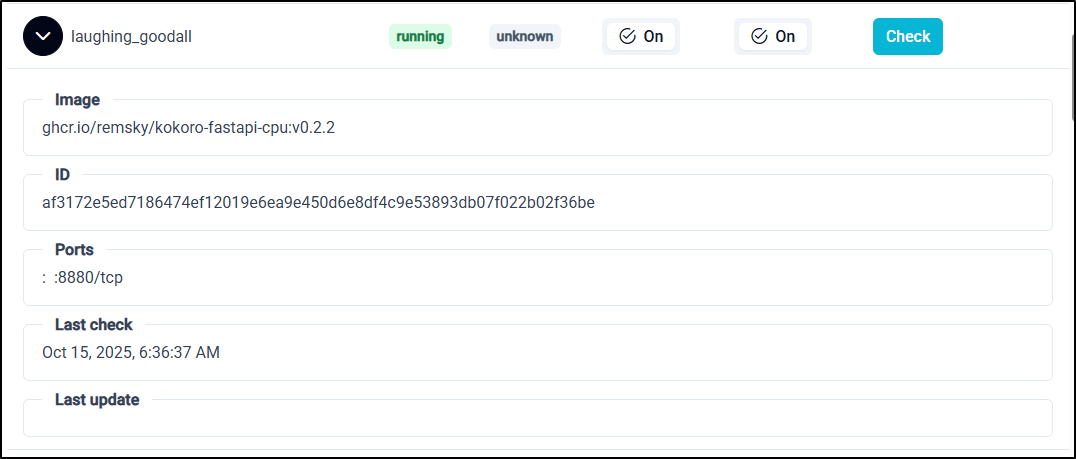

For containers that are pinned to a service, this won’t do much, like my kokoro instance

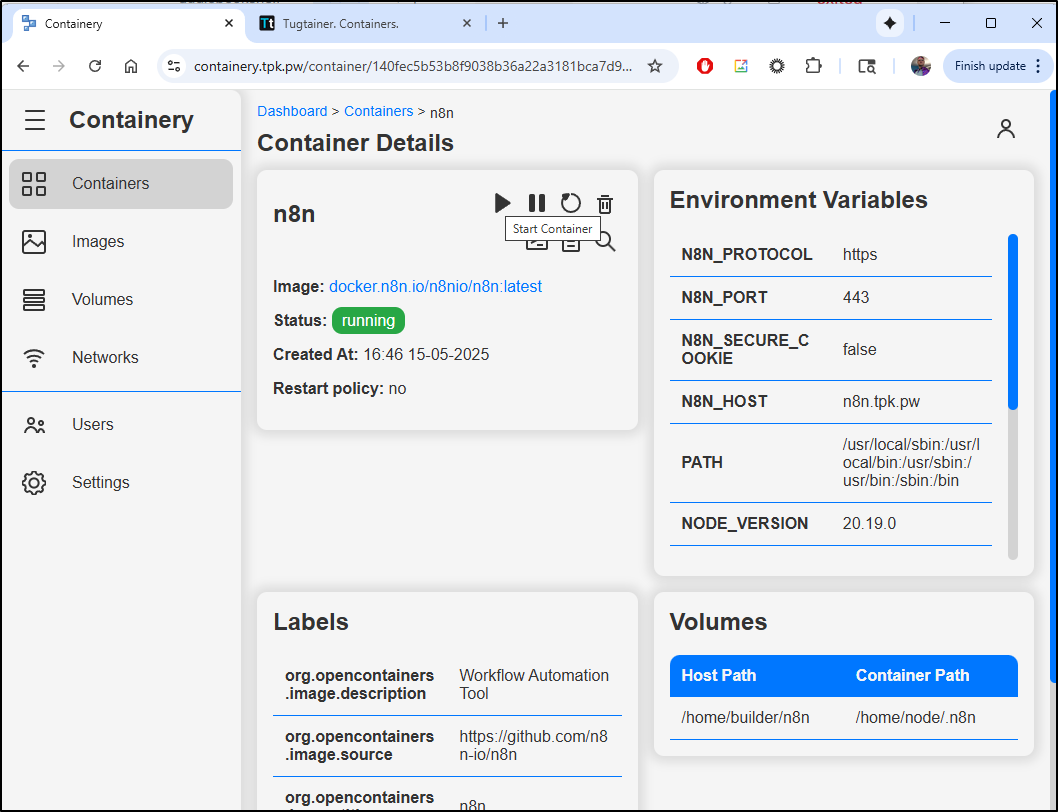

While Tugtainer can update images, it cannot stop or start containers that are down. I find it’s best to pair it with Containery for that.

Exposing with TLS

Let’s fire up an A record in Azure DNS

az account set --subscription "Pay-As-You-Go" && az network dns record-set a add-record -g idjdnsrg -z tpk.pw -a 75.72.233.202 -n tugtainer

{

"ARecords": [

{

"ipv4Address": "75.72.233.202"

}

],

"TTL": 3600,

"etag": "5657cb50-4a98-4329-a957-849990a8881a",

"fqdn": "tugtainer.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/tugtainer",

"name": "tugtainer",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"trafficManagementProfile": {},

"type": "Microsoft.Network/dnszones/A"

}

I can now create the Ingress, Service and Endpoints to hand off traffic

$ cat ./tugtainer-ingress.yaml

apiVersion: v1

kind: Endpoints

metadata:

name: tugtainer-external-ip

subsets:

- addresses:

- ip: 192.168.1.99

ports:

- name: tugtainerint

port: 9412

protocol: TCP

---

apiVersion: v1

kind: Service

metadata:

name: tugtainer-external-ip

spec:

clusterIP: None

clusterIPs:

- None

internalTrafficPolicy: Cluster

ipFamilies:

- IPv4

- IPv6

ipFamilyPolicy: RequireDualStack

ports:

- name: tugtainer

port: 80

protocol: TCP

targetPort: 9412

sessionAffinity: None

type: ClusterIP

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: azuredns-tpkpw

ingress.kubernetes.io/ssl-redirect: "true"

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"

nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"

nginx.org/websocket-services: tugtainer-external-ip

generation: 1

name: tugtaineringress

spec:

rules:

- host: tugtainer.tpk.pw

http:

paths:

- backend:

service:

name: tugtainer-external-ip

port:

number: 80

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- tugtainer.tpk.pw

secretName: tugtainer-tls

$ kubectl apply -f ./tugtainer-ingress.yaml

endpoints/tugtainer-external-ip created

service/tugtainer-external-ip created

Warning: annotation "kubernetes.io/ingress.class" is deprecated, please use 'spec.ingressClassName' instead

ingress.networking.k8s.io/tugtaineringress created

Once the cert is live

$ kubectl get cert tugtainer-tls

NAME READY SECRET AGE

tugtainer-tls True tugtainer-tls 10m

I can just log in with the URL to check on containers

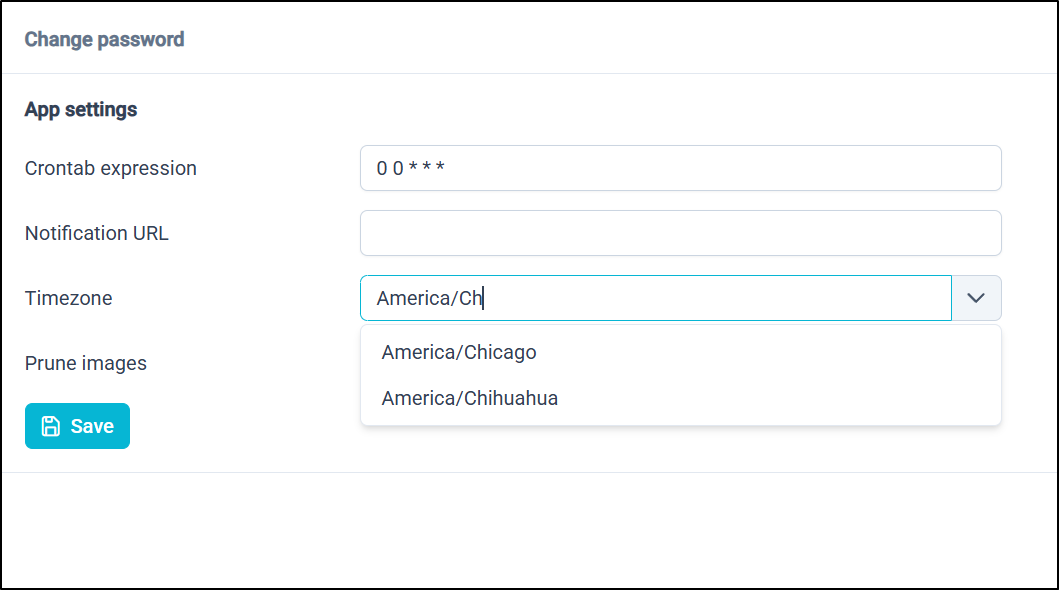

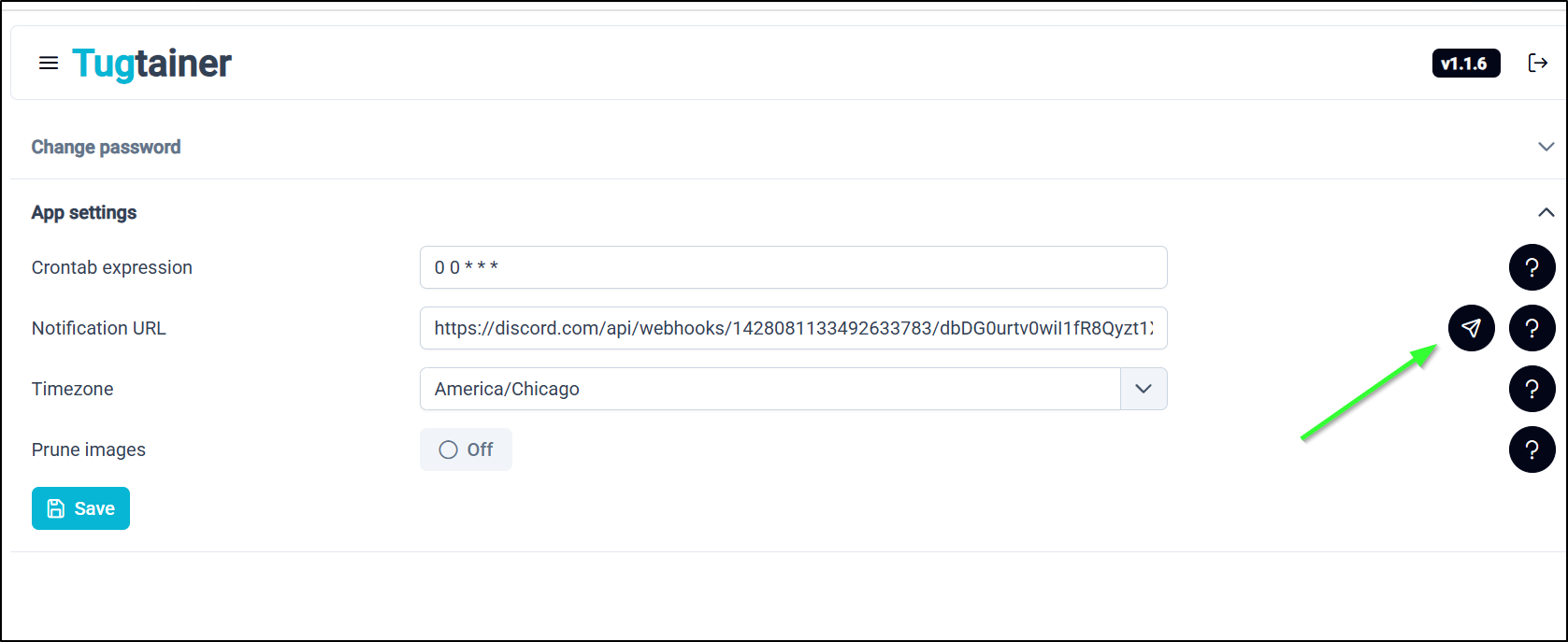

As far as settings go, we can change from dark to light mode

We can set our timezone

As well as crontab or whether to prune images

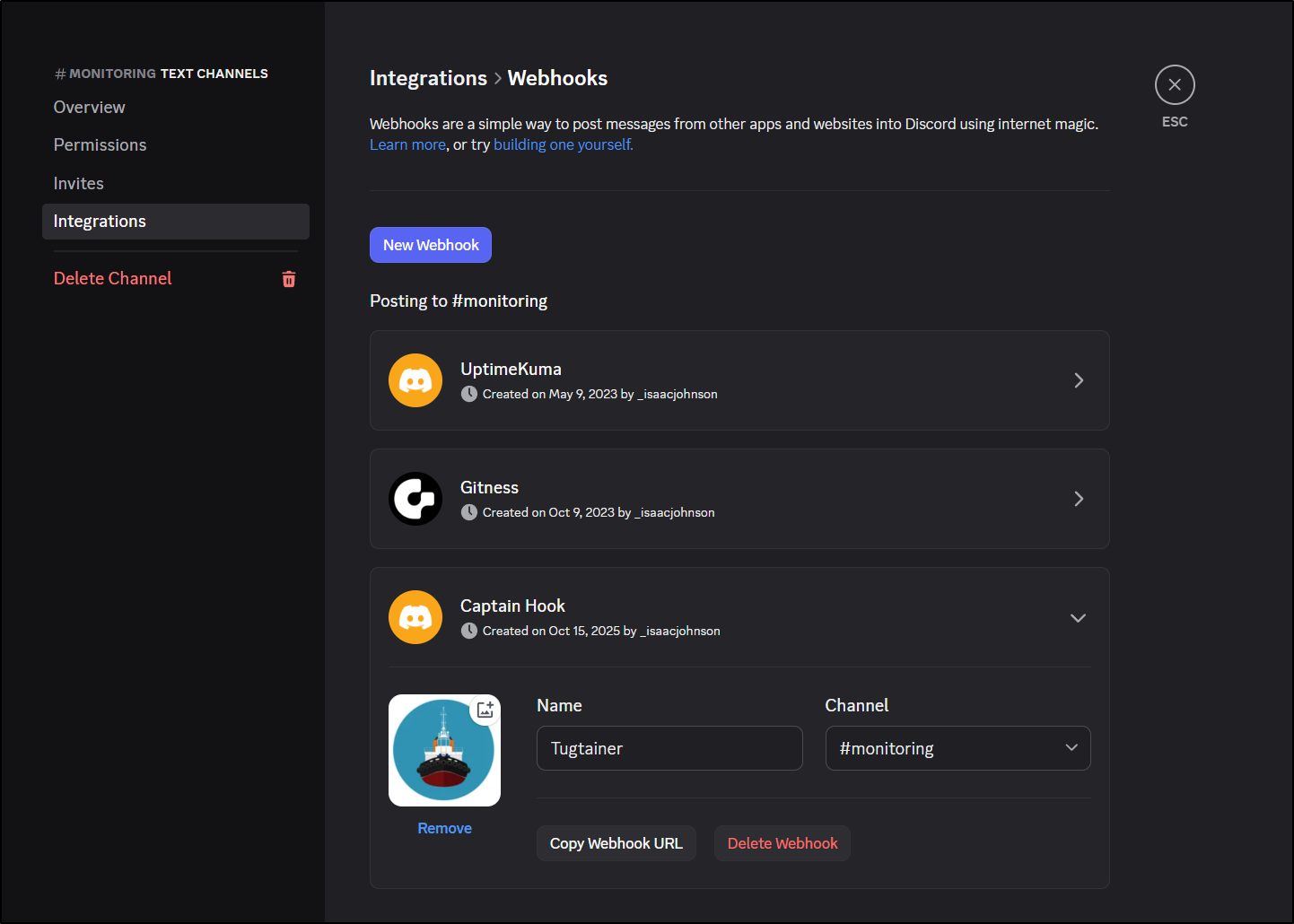

Because the notifications link uses Apprise we can do something like create a Discord Webhook integration for Tugtainer

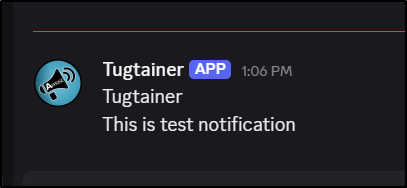

Then send a test

and see it show up in our Discord channel

Summary

Today we looked into 3 containerized apps - Vert, Watchtower and Tugtainer. Vert is a great tool for converting media such as old camera phone videos or unique image formats.

Watchtower and Tugtainer serve much the same purpose: finding and updating containerized apps to the newest version. We looked at the basic Watchtower usage of updating a named container, but it can also work in a docker compose setup to update containers automatically.

This would work to host my MCP server and check for newer images (and refresh the container) every hour. Watchtower's --interval is in seconds, so 3600 is one hour; 300 would be five minutes.

version: "3"

services:

cavo:

image: harbor.freshbrewed.science/library/vikunjamcp:latest

ports:

- "8000:8000"

watchtower:

image: containrrr/watchtower

volumes:

- /var/run/docker.sock:/var/run/docker.sock

command: --interval 3600
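Since Watchtower's --interval flag takes seconds, it is easy to slip between minutes and seconds when picking a value. A trivial sketch for doing the conversion (the function name is just for illustration):

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a polling period in minutes to the
# number of seconds expected by Watchtower's --interval flag.
interval_seconds() {
  echo $(( $1 * 60 ))
}
```

For a five-minute poll, interval_seconds 5 gives the value to put after --interval in the compose command line.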

Tugtainer has a very nice web interface and can easily check and update containers.

I did find it handy to have Containery in my stack so I could look at logs and manually stop/start containers.