In our last post we covered the creation and testing of a PowerShell-based Azure Function. It was able to list the contents of a single storage account. But how might we extend that to manipulate files, such as copying blobs elsewhere? And once created, what are our options for invocation and automation? Lastly, once we have a RESTful endpoint, what are some of the ways we can secure it to make it usable outside of development sandbox environments?

Updating our Function

We showed a listing of files last time; now let's update the function to show the contents of each file.

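We had a step to simply list files:

foreach ($blob in $blobs)
{
    $body += "`n`tblob: " + $blob.Name
}

We'll change the PowerShell section in run.ps1 to:

foreach ($blob in $blobs)
{
    $body += "`n`tblob: " + $blob.Name
    # Local path the blob will be downloaded to
    $myPath = $env:TEMP + "/" + $blob.Name
    # Download the blob into the temp directory, overwriting any earlier copy
    Get-AzStorageBlobContent -Force -Context $ctx -CloudBlob $blob.ICloudBlob -Destination $env:TEMP
    Get-ChildItem $env:TEMP
    $body += "`n`n"
    # Append the file contents to the response body (-Raw keeps the line breaks)
    $body += Get-Content -Raw $myPath
}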

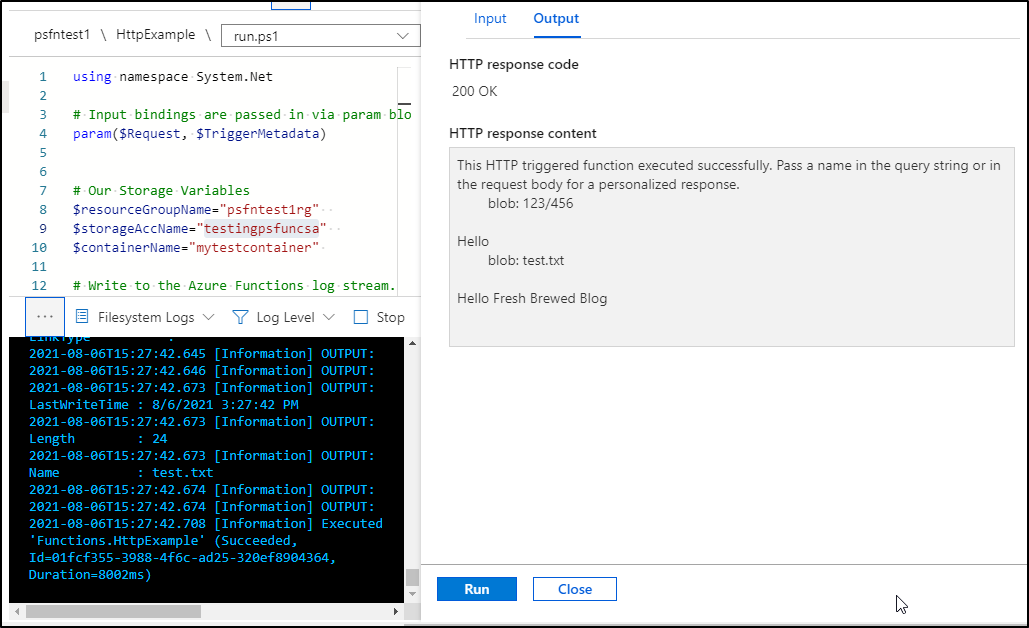

We can see this showed the contents of the text file:

We can list the files using the following. Note that if you want the Azure CLI to stop warning you about missing storage credentials, you can look up the account key inline like so:

$ az storage blob list -c mytestcontainer --account-name testingpsfuncsa -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Name Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ----------- ----------- -------- -------------- ------------------------- ----------

test.txt BlockBlob Hot 24 text/plain 2021-08-05T16:47:19+00:00
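Since that inline key lookup is repeated in nearly every command in this post, it could be wrapped in a small shell function. This is only a sketch: the function name `sa_key` is made up for illustration, and it uses the CLI's built-in `--query` and `-o tsv` options in place of the `jq` and `tr` pipeline.

```shell
# Hypothetical helper (not part of the original post): print the first
# access key of a storage account.
# Usage: sa_key <resource-group> <account-name>
sa_key() {
  az storage account keys list \
    --resource-group "$1" \
    --account-name "$2" \
    --query '[0].value' \
    --output tsv
}

# Example (requires a logged-in Azure CLI):
#   az storage blob list -c mytestcontainer --account-name testingpsfuncsa -o table \
#     --account-key "$(sa_key psfntest1rg testingpsfuncsa)"
```

Using `$(...)` instead of backticks also makes the command easier to nest and quote.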

Validation

Let's now upload a file into a folder. Blobs don't really have folders, but rather treat separator characters as logical folders:

builder@US-5CG92715D0:~$ az storage blob upload -c mytestcontainer --file ./test.txt -n 123/456 --account-name testingpsfuncsa --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Finished[#############################################################] 100.0000%

{

"etag": "\"0x8D958EE6208A566\"",

"lastModified": "2021-08-06T15:25:11+00:00"

}

builder@US-5CG92715D0:~$ az storage blob list -c mytestcontainer --account-name testingpsfuncsa -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Name Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 6 text/plain 2021-08-06T15:25:11+00:00

test.txt BlockBlob Hot 24 text/plain 2021-08-05T16:47:19+00:00
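Because the "folder" is just part of the blob name, it can be recovered with plain string handling. The sketch below uses two helper names (`blob_folder`, `blob_leaf`) invented for this example. If you only want one virtual folder listed, `az storage blob list` also accepts a `--prefix` argument (for example `--prefix 123/`).

```shell
# Split a blob name into its virtual "folder" prefix and leaf name.
# Helper names are illustrative only.
blob_folder() {
  case "$1" in
    */*) printf '%s\n' "${1%/*}" ;;   # everything before the last "/"
    *)   printf '\n' ;;               # no separator: blob sits at the container root
  esac
}

blob_leaf() {
  printf '%s\n' "${1##*/}"            # everything after the last "/"
}

blob_folder "123/456"    # prints: 123
blob_leaf   "123/456"    # prints: 456
blob_leaf   "test.txt"   # prints: test.txt
```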

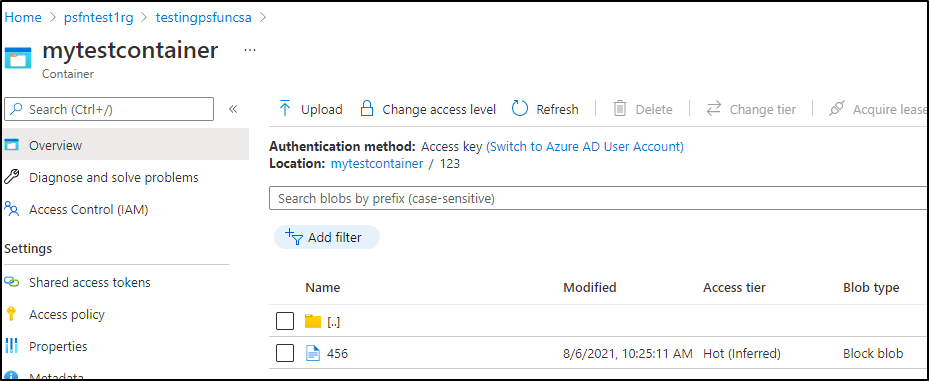

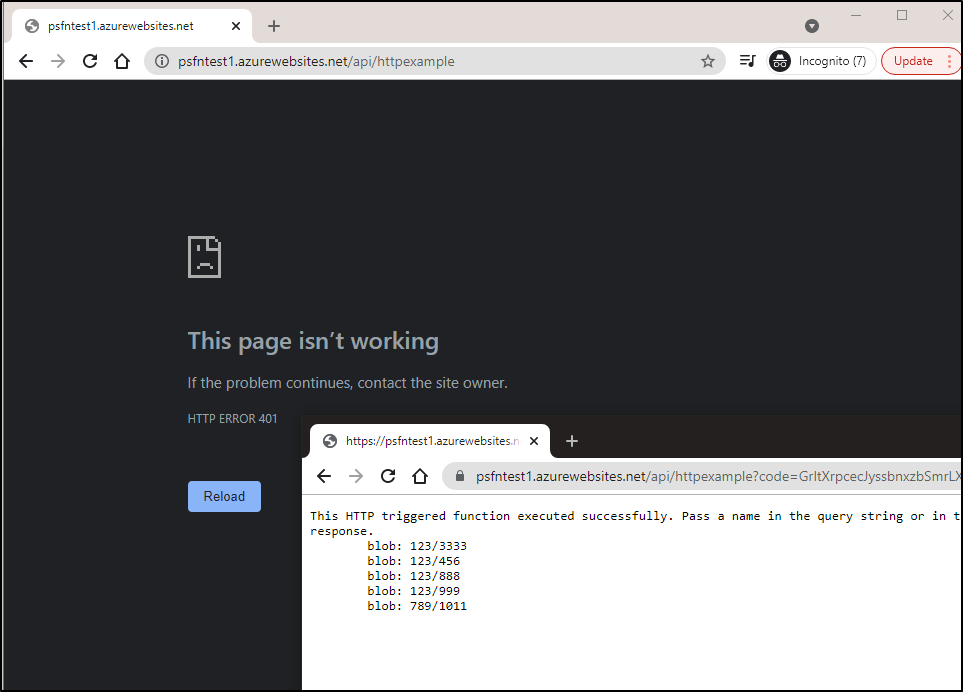

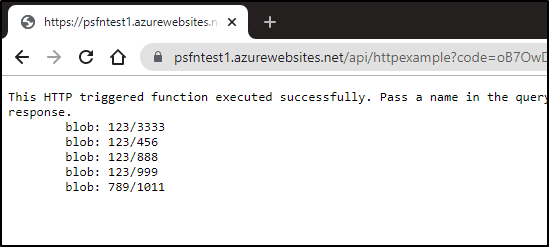

which we see reflected:

Testing our function we see that our path works just fine:

Copying Files

Now let's make it more complicated: we want to copy the file to a different storage account.

Let's create one in a different location:

builder@US-5CG92715D0:~$ az storage account create -n testingpsfuncsa2 -g psfntest1rg --sku Standard_LRS --location westus

{

"accessTier": "Hot",

"allowBlobPublicAccess": null,

…

$ az storage container create -n mynewcontainer --account-name testingpsfuncsa2 --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa2 -o json | jq -r '.[0].value' | tr -d '\n'`

{

"created": true

}

Because this storage account is in the same Resource Group and we've granted access to the managed identity to the RG already, we need not modify the IAM on this new SA to grant access.

I tried using Copy-AzStorageBlob but encountered header errors repeatedly. Since I did not care to retrieve the destination object, I changed to Start-AzStorageBlobCopy and that worked far better:

$storageAcc = Get-AzStorageAccount -ResourceGroupName $resourceGroupName -Name $storageAccName
$ctx = $storageAcc.Context

$bkupStorageAcc = Get-AzStorageAccount -ResourceGroupName $resourceGroupName -Name $backupAccName
$bkupctx = $bkupStorageAcc.Context

$blobs = Get-AzStorageBlob -Container $containerName -Context $ctx
foreach ($blob in $blobs)
{
    $body += "`n`tblob: " + $blob.Name
    $myPath = $env:TEMP + "/" + $blob.Name
    Get-AzStorageBlobContent -Force -Context $ctx -CloudBlob $blob.ICloudBlob -Destination $env:TEMP
    Get-ChildItem $env:TEMP
    $body += "`n`n"
    $body += Get-Content -Raw $myPath

    # Earlier attempts with Copy-AzStorageBlob, which failed with header errors:
    #$destBlob = $blob | Copy-AzStorageBlob -DestContainer $backupContainerName -DestBlob $blob.Name -DestContext $bkupctx
    #$destBlob = Copy-AzStorageBlob -SrcContainer $containerName -SrcBlob $blob.Name -Context $ctx -DestContainer $backupContainerName -DestBlob $blob.Name -DestContext $bkupctx

    # Start an asynchronous server-side copy into the backup container
    Start-AzStorageBlobCopy -Force -SrcBlob $blob.Name -SrcContainer $containerName -DestContainer $backupContainerName -Context $ctx -DestContext $bkupctx -DestBlob $blob.Name
}

Verification

We can see the results below: a listing before the run (empty) and one after it:

builder@US-5CG92715D0:~$ az storage blob list -c mynewcontainer --account-name testingpsfuncsa2 -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa2 -o json | jq -r '.[0].value' | tr -d '\n'`

builder@US-5CG92715D0:~$ az storage blob list -c mynewcontainer --account-name testingpsfuncsa2 -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa2 -o json | jq -r '.[0].value' | tr -d '\n'`

Name Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 6 text/plain 2021-08-06T16:09:17+00:00

test.txt BlockBlob Hot 24 text/plain 2021-08-06T16:09:17+00:00

Cleaning up

Now that our goal has changed to copying files, downloading and displaying them adds no value, so we can remove that code.

This reduces our code to simply:

$storageAcc = Get-AzStorageAccount -ResourceGroupName $resourceGroupName -Name $storageAccName
$ctx = $storageAcc.Context

$bkupStorageAcc = Get-AzStorageAccount -ResourceGroupName $resourceGroupName -Name $backupAccName
$bkupctx = $bkupStorageAcc.Context

$blobs = Get-AzStorageBlob -Container $containerName -Context $ctx
foreach ($blob in $blobs)
{
    $body += "`n`tblob: " + $blob.Name
    Start-AzStorageBlobCopy -Force -SrcBlob $blob.Name -SrcContainer $containerName -DestContainer $backupContainerName -Context $ctx -DestContext $bkupctx -DestBlob $blob.Name
}

Testing CRUD

As you would expect, this backup is additive. Let's delete a file, update a file, then add a file to see how that is reflected.

We start with matching blob stores:

builder@US-5CG92715D0:~$ az storage blob list -c mynewcontainer --account-name testingpsfuncsa2 -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa2 -o json | jq -r '.[0].value' | tr -d '\n'`

Name Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 6 text/plain 2021-08-06T16:09:17+00:00

test.txt BlockBlob Hot 24 text/plain 2021-08-06T16:09:17+00:00

builder@US-5CG92715D0:~$ az storage blob list -c mytestcontainer --account-name testingpsfuncsa -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Name Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 6 text/plain 2021-08-06T15:25:11+00:00

test.txt BlockBlob Hot 24 text/plain 2021-08-05T16:47:19+00:00

Now we create, update and delete a blob:

builder@US-5CG92715D0:~$ echo "updated this file" > test.txt

builder@US-5CG92715D0:~$ az storage blob upload -c mytestcontainer --file ./test.txt -n 123/456 --account-name testingpsfuncsa --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Finished[#############################################################] 100.0000%

{

"etag": "\"0x8D958F53E2A0C9A\"",

"lastModified": "2021-08-06T16:14:18+00:00"

}

builder@US-5CG92715D0:~$ az storage blob delete -c mytestcontainer -n test.txt --account-name testingpsfuncsa --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

builder@US-5CG92715D0:~$ az storage blob upload -c mytestcontainer --file ./test.txt -n 789/1011 --account-name testingpsfuncsa --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Finished[#############################################################] 100.0000%

{

"etag": "\"0x8D958F55E01F172\"",

"lastModified": "2021-08-06T16:15:11+00:00"

}

builder@US-5CG92715D0:~$ az storage blob list -c mytestcontainer --account-name testingpsfuncsa -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Name Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 18 text/plain 2021-08-06T16:14:18+00:00

789/1011 BlockBlob Hot 18 text/plain 2021-08-06T16:15:11+00:00

We will use curl to hit the function:

builder@US-5CG92715D0:~$ curl -X GET https://psfntest1.azurewebsites.net/api/HttpExample?

This HTTP triggered function executed successfully. Pass a name in the query string or in the request body for a personalized response.

blob: 123/456

blob: 789/1011

and see that the backup has indeed been updated (note that test.txt, which we deleted from the source, remains in the backup):

$ az storage blob list -c mynewcontainer --account-name testingpsfuncsa2 -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa2 -o json | jq -r '.[0].value' | tr -d '\n'`

Name Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 18 text/plain 2021-08-06T16:16:26+00:00

789/1011 BlockBlob Hot 18 text/plain 2021-08-06T16:16:27+00:00

test.txt BlockBlob Hot 24 text/plain 2021-08-06T16:09:17+00:00
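The listing above shows test.txt lingering in the backup after it was deleted from the source. If you wanted to spot such leftovers, one rough approach is to compare the two lists of blob names. The sketch below assumes the names have already been collected as newline-separated text (for instance with `az storage blob list ... --query '[].name' -o tsv`). The function name `orphaned_blobs` is made up for this example.

```shell
# Print blob names that exist in the backup list but not in the source list.
# $1 = newline-separated source names, $2 = newline-separated backup names.
orphaned_blobs() {
  # grep treats each line of "$1" as a fixed-string, whole-line pattern;
  # -v keeps only the backup names that match none of them.
  printf '%s\n' "$2" | grep -F -x -v -e "$1" || true
}

source_names='123/456
789/1011'
backup_names='123/456
789/1011
test.txt'

orphaned_blobs "$source_names" "$backup_names"   # prints: test.txt
```

Whether those leftovers should be deleted is a policy decision; for a backup, keeping them may be exactly what you want.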

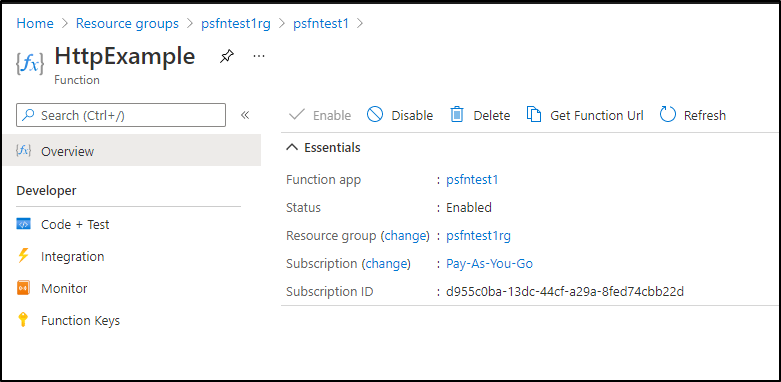

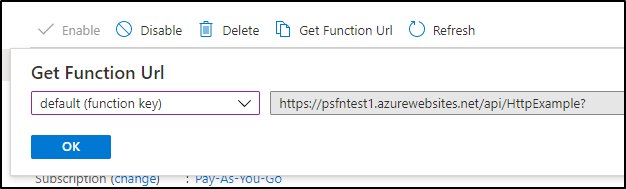

On curl / Command Line Invocation

Web browser invocation is great, but if we wanted to script it, we could retrieve the function URL and use a simple curl command to trigger it.

builder@DESKTOP-QADGF36:~/Workspaces/psfntest1$ curl -H 'Accept: application/json' https://psfntest1.azurewebsites.net/api/httpexample

This HTTP triggered function executed successfully. Pass a name in the query string or in the request body for a personalized response.

blob: 123/456

blob: 789/1011
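One thing to keep in mind when scripting this: by default curl exits with status 0 even if the server answers with an HTTP error such as 401 or 500. A minimal sketch of a wrapper that surfaces such failures follows. The name `trigger_sync` is hypothetical; the flags are standard curl options.

```shell
# Hypothetical wrapper: call the function and fail on any HTTP error status.
# Usage: trigger_sync <function-url>
trigger_sync() {
  # --fail         exit non-zero when the HTTP status is 400 or above
  # --silent       hide the progress meter
  # --show-error   still print an error message on failure
  curl --fail --silent --show-error -H 'Accept: application/json' "$1"
}

# Example:
#   trigger_sync https://psfntest1.azurewebsites.net/api/httpexample || echo "sync failed" >&2
```

This matters most once the call is scheduled or chained, since a caller can only react to a failure it can see in the exit code.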

Automation

We will cover two ways to automate: one using a Kubernetes cluster and the other using Azure Event Grid.

K8s CronJob

Say we wished to schedule an update. If we have a k8s cluster, this is easy to do. Just add a cronjob:

$ cat k8s_batch.yaml

apiVersion: batch/v1
kind: CronJob
metadata:
  name: sync-az-blob-stores
  namespace: default
spec:
  schedule: '*/15 * * * *'
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: curlcontainer
            image: curlimages/curl:7.72.0
            imagePullPolicy: IfNotPresent
            command:
            - /bin/sh
            - -ec
            - 'curl -H "Accept: application/json" https://psfntest1.azurewebsites.net/api/httpexample'
          restartPolicy: OnFailure

To test, let's add a file:

$ az storage blob upload -c mytestcontainer --file ./test.txt -n 123/888 --account-name testingpsfuncsa --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

Finished[#############################################################] 100.0000%

{

"etag": "\"0x8D95DC29EF2F129\"",

"lastModified": "2021-08-12T18:54:32+00:00"

}

Now we can see it's in the source but not the destination (backup storage account):

$ az storage blob list -c mytestcontainer --account-name testingpsfuncsa -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa -o json | jq -r '.[0].value' | tr -d '\n'`

This command has been deprecated and will be removed in future release. Use 'az storage fs file list' instead. For more information go to https://github.com/Azure/azure-cli/blob/dev/src/azure-cli/azure/cli/command_modules/storage/docs/ADLS%20Gen2.md

The behavior of this command has been altered by the following extension: storage-preview

Name IsDirectory Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ------------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 24 text/plain 2021-08-12T18:54:22+00:00

123/888 BlockBlob Hot 24 text/plain 2021-08-12T18:54:32+00:00

789/1011 BlockBlob Hot 18 text/plain 2021-08-06T16:15:11+00:00

and the backup SA:

$ az storage blob list -c mynewcontainer --account-name testingpsfuncsa2 -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa2 -o json | jq -r '.[0].value' | tr -d '\n'`

This command has been deprecated and will be removed in future release. Use 'az storage fs file list' instead. For more information go to https://github.com/Azure/azure-cli/blob/dev/src/azure-cli/azure/cli/command_modules/storage/docs/ADLS%20Gen2.md

The behavior of this command has been altered by the following extension: storage-preview

Name IsDirectory Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ------------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 18 text/plain 2021-08-12T18:46:14+00:00

789/1011 BlockBlob Hot 18 text/plain 2021-08-12T18:46:14+00:00

test.txt BlockBlob Hot 24 text/plain 2021-08-06T16:09:17+00:00

We can now apply the k8s cronjob:

$ kubectl apply -f k8s_batch.yaml

error: unable to recognize "k8s_batch.yaml": no matches for kind "CronJob" in version "batch/v1"

This just means my cluster is not new enough (CronJob only reached batch/v1 in Kubernetes 1.21). Changing to v1beta1 will work:

apiVersion: batch/v1beta1

kind: CronJob

Now apply:

$ kubectl apply -f k8s_batch.yaml

cronjob.batch/sync-az-blob-stores created
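To avoid the trial and error, one could ask the cluster which batch API version actually serves CronJob before writing the manifest. The following is a sketch only: `cronjob_api_version` is a made-up helper name, and it relies on `kubectl get --raw` returning the API resource list as JSON.

```shell
# Hypothetical helper: print the newest batch API version that serves CronJob.
cronjob_api_version() {
  for v in batch/v1 batch/v1beta1; do
    # Each /apis/<group>/<version> endpoint lists the kinds it serves.
    if kubectl get --raw "/apis/$v" 2>/dev/null | grep -Eq '"kind": *"CronJob"'; then
      echo "$v"
      return 0
    fi
  done
  echo 'CronJob is not served by this cluster' >&2
  return 1
}

# Example:
#   cronjob_api_version
```

Checking for the kind rather than just the group version matters here, because batch/v1 existed for Job long before CronJob was promoted to it.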

This should run pretty quickly:

builder@DESKTOP-QADGF36:~/Workspaces/psfntest1$ kubectl get pods | grep sync

sync-az-blob-stores-1628794800-nhrs8 0/1 ContainerCreating 0 15s

builder@DESKTOP-QADGF36:~/Workspaces/psfntest1$ kubectl get pods | grep sync

sync-az-blob-stores-1628794800-nhrs8 1/1 Running 0 24s

builder@DESKTOP-QADGF36:~/Workspaces/psfntest1$ kubectl get pods | grep sync

sync-az-blob-stores-1628794800-nhrs8 0/1 Completed 0 31s

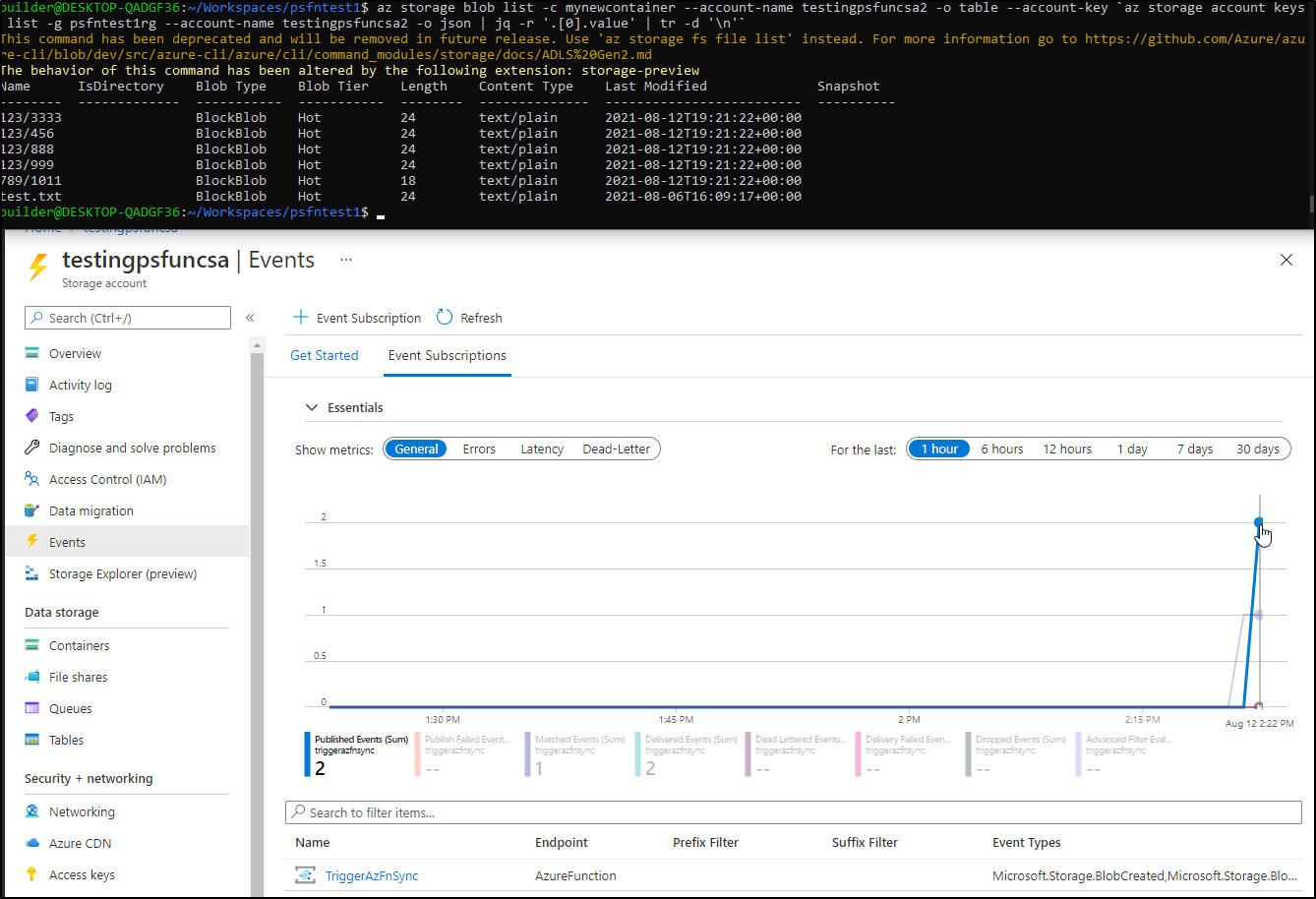

And now when I test, I see the backup happened:

$ az storage blob list -c mynewcontainer --account-name testingpsfuncsa2 -o table --account-key `az storage account keys list -g psfntest1rg --account-name testingpsfuncsa2 -o json | jq -r '.[0].value' | tr -d '\n'`

This command has been deprecated and will be removed in future release. Use 'az storage fs file list' instead. For more information go to https://github.com/Azure/azure-cli/blob/dev/src/azure-cli/azure/cli/command_modules/storage/docs/ADLS%20Gen2.md

The behavior of this command has been altered by the following extension: storage-preview

Name IsDirectory Blob Type Blob Tier Length Content Type Last Modified Snapshot

-------- ------------- ----------- ----------- -------- -------------- ------------------------- ----------

123/456 BlockBlob Hot 24 text/plain 2021-08-12T19:00:31+00:00

123/888 BlockBlob Hot 24 text/plain 2021-08-12T19:00:31+00:00

789/1011 BlockBlob Hot 18 text/plain 2021-08-12T19:00:31+00:00

test.txt BlockBlob Hot 24 text/plain 2021-08-06T16:09:17+00:00

Azure Event Grid

This is great, but what if we rarely update this storage account, or if our updates come in spurts? Could we improve the efficiency? What if we were to only trigger on updates? Here is where events come into play.

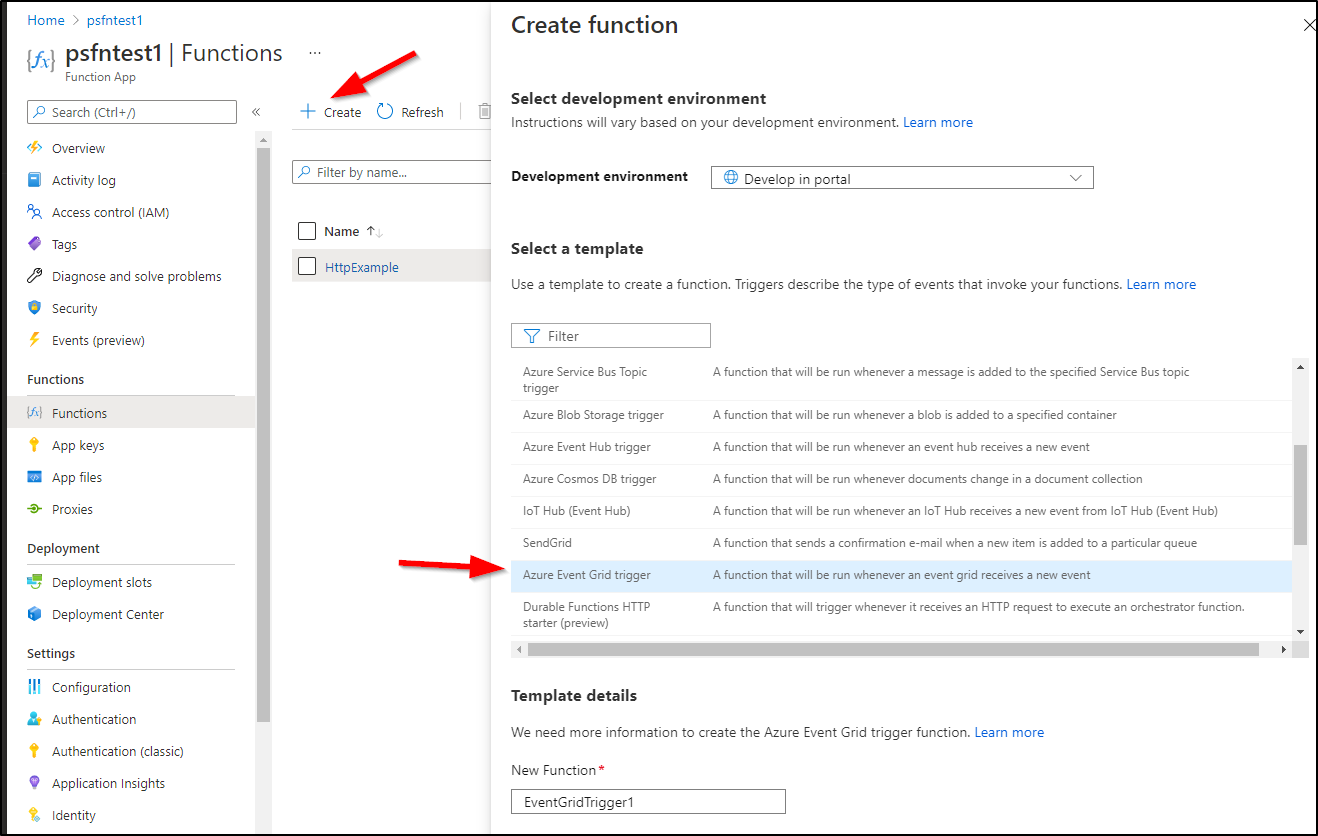

First we need to create a function that can trigger on event grid events. There are a few ways to do this, however, since we did the CLI last time, let's use the portal here:

Choose "Create" a new function and the template will be "Azure Event Grid trigger":

When I go to the Event Grid run.ps1, I'll add the section from my HTTP trigger code (between the param and $eventGridEvent lines):

param($eventGridEvent, $TriggerMetadata)

# Our Storage Variables

$resourceGroupName="psfntest1rg"

$storageAccName="testingpsfuncsa"

$containerName="mytestcontainer"

$backupAccName="testingpsfuncsa2"

$backupContainerName="mynewcontainer"

$storageAcc = Get-AzStorageAccount -ResourceGroupName $resourceGroupName -Name $storageAccName

$ctx = $storageAcc.Context

$bkupStorageAcc = Get-AzStorageAccount -ResourceGroupName $resourceGroupName -Name $backupAccName

$bkupctx = $bkupStorageAcc.Context

$blobs = Get-AzStorageBlob -Container $containerName -Context $ctx

foreach($blob in $blobs)

{

Start-AzStorageBlobCopy -Force -SrcBlob $blob.Name -SrcContainer $containerName -DestContainer $backupContainerName -Context $ctx -DestContext $bkupctx -DestBlob $blob.Name

}

# Make sure to pass hashtables to Out-String so they're logged correctly

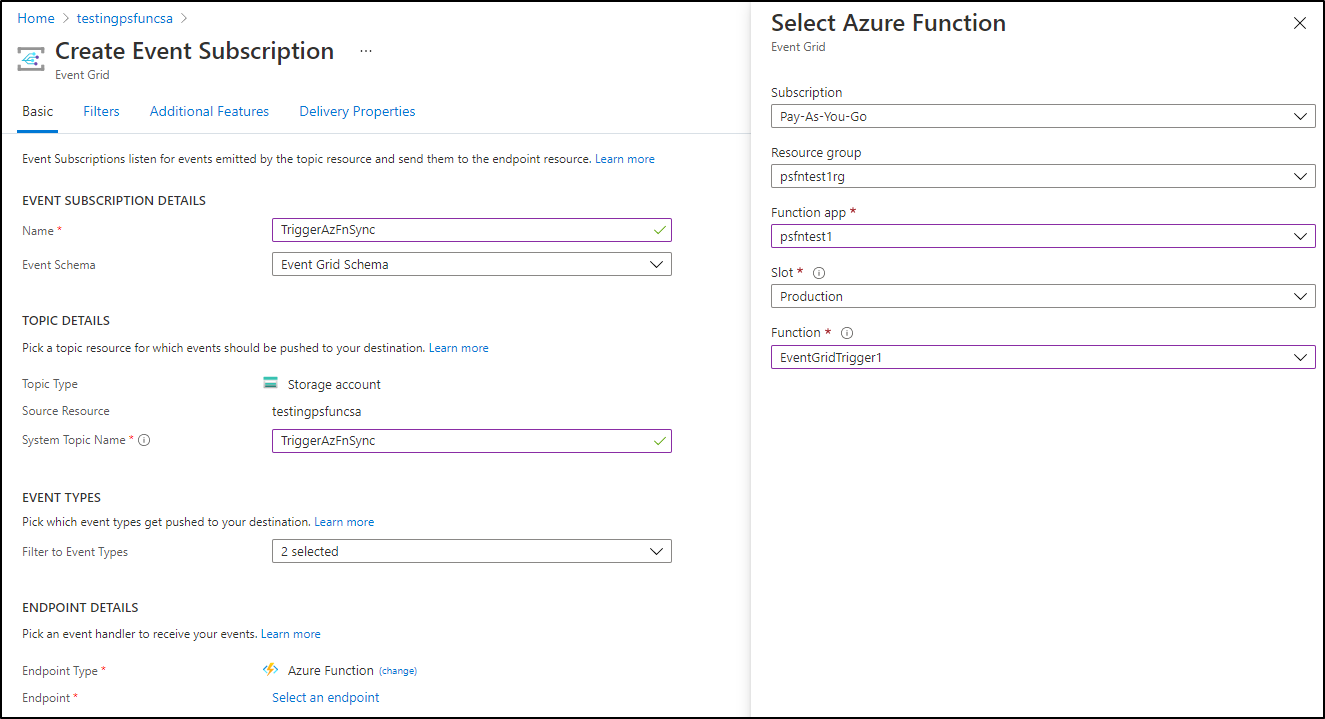

$eventGridEvent | Out-String | Write-HostNow i can create an Event Grid subscription from the "Events" section of the storage account:

Validation

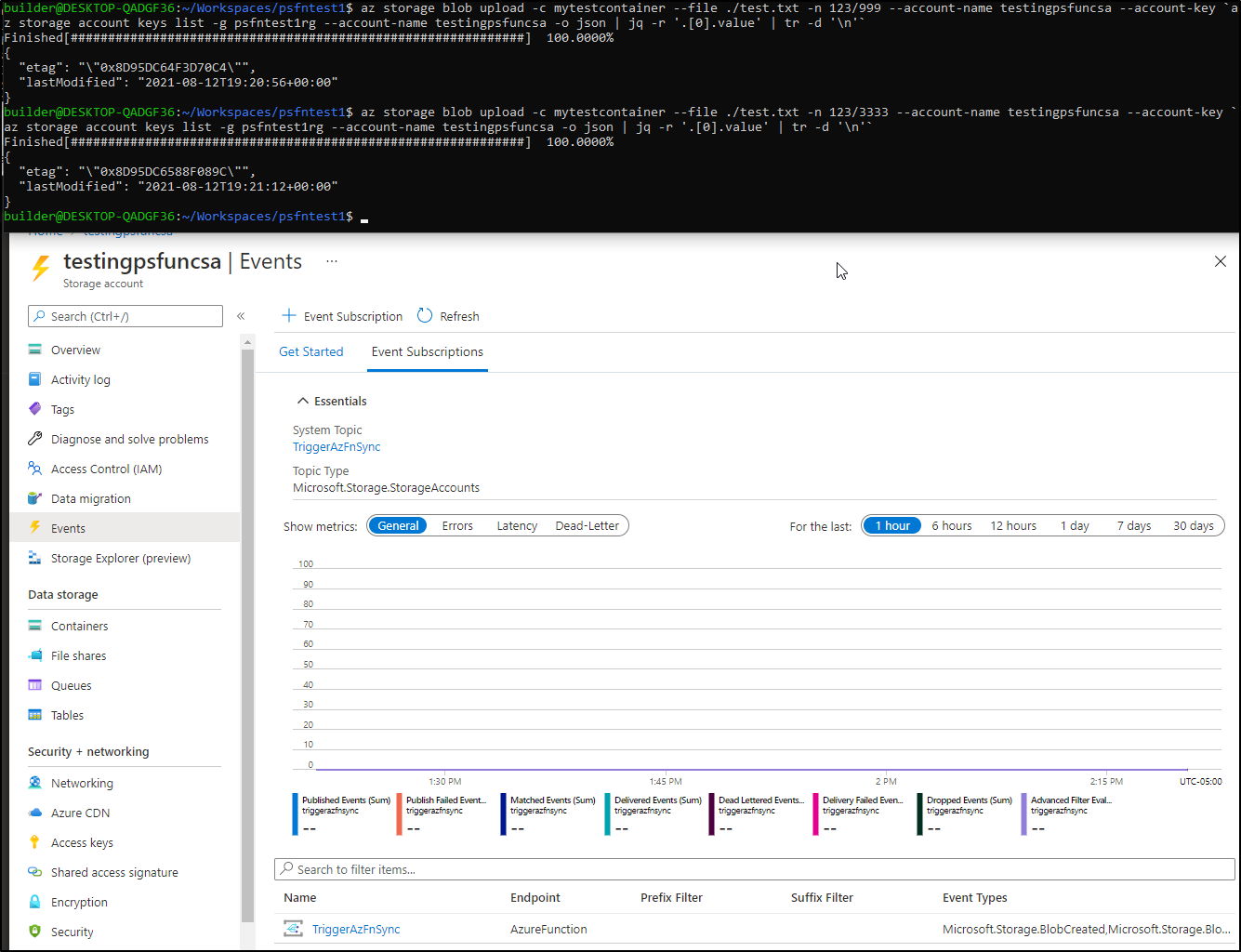

Next we can upload some files to trigger the event grid:

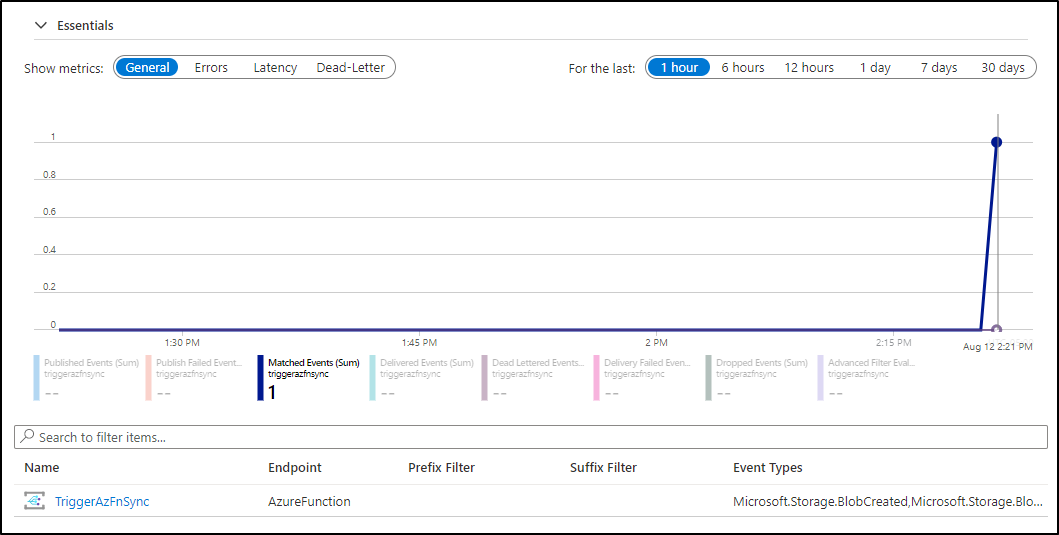

and after a moment, i see the function was invoked:

and checking back in a few seconds showed the sync occurred and the files are now there:

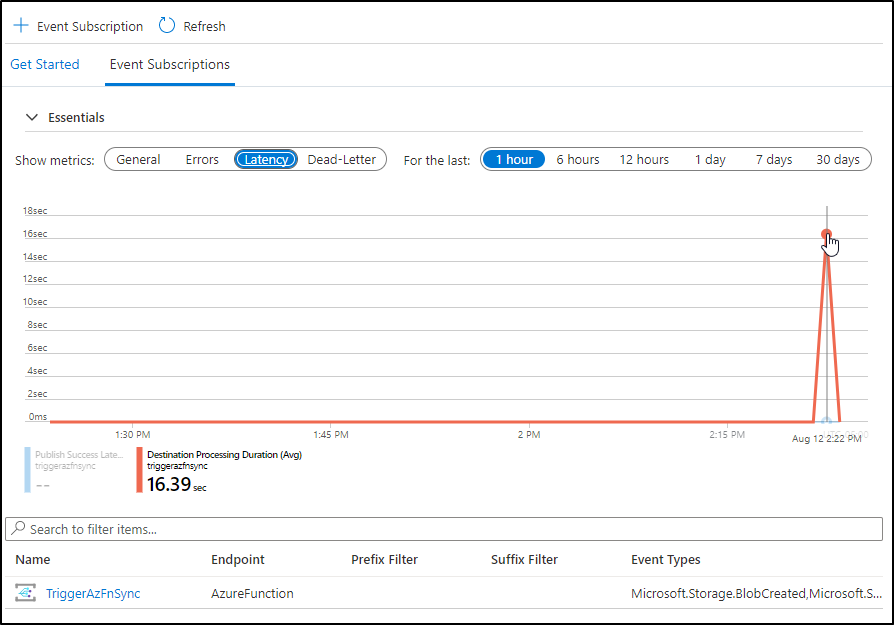

we can even check the latency to verify indeed it took just a few seconds to run
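The Event Grid function as written re-copies every blob on each event. Storage blob events carry a subject of the form /blobServices/default/containers/<container>/blobs/<blob name>, so a narrower version could copy only the blob that changed. The sketch below shows the string handling in shell, to match the CLI examples in this post. The helper name `blob_from_subject` is hypothetical, and the same logic would need to be ported to PowerShell to be used inside run.ps1.

```shell
# Extract the blob name from a Storage event subject of the form
#   /blobServices/default/containers/<container>/blobs/<blob name>
# Returns non-zero if the subject does not look like a blob event.
blob_from_subject() {
  prefix='/blobServices/default/containers/'
  rest="${1#"$prefix"}"
  [ "$rest" != "$1" ] || return 1          # prefix missing: not a blob subject
  name="${rest#*/blobs/}"                  # drop "<container>/blobs/"
  [ "$name" != "$rest" ] || return 1       # no blob segment present
  printf '%s\n' "$name"
}

blob_from_subject '/blobServices/default/containers/mytestcontainer/blobs/123/888'   # prints: 123/888
```

Stripping the fixed prefix first means a container that happens to be named "blobs" is still handled correctly.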

Now that we have an automated system in Azure, we can easily remove our CronJob in Kubernetes:

builder@DESKTOP-QADGF36:~/Workspaces/psfntest1$ kubectl get cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

sync-az-blob-stores */15 * * * * False 0 10m 25m

builder@DESKTOP-QADGF36:~/Workspaces/psfntest1$ kubectl delete cronjob sync-az-blob-stores

cronjob.batch "sync-az-blob-stores" deleted

Securing Endpoints

What if we wish to secure our function app? Sure, it only syncs files today, but if it did more we would not want to rely on security through obscurity.

API Keys

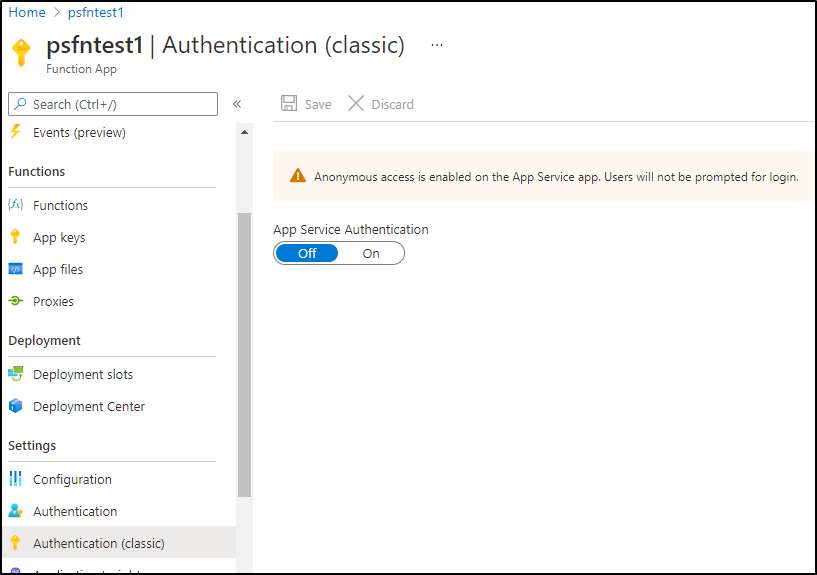

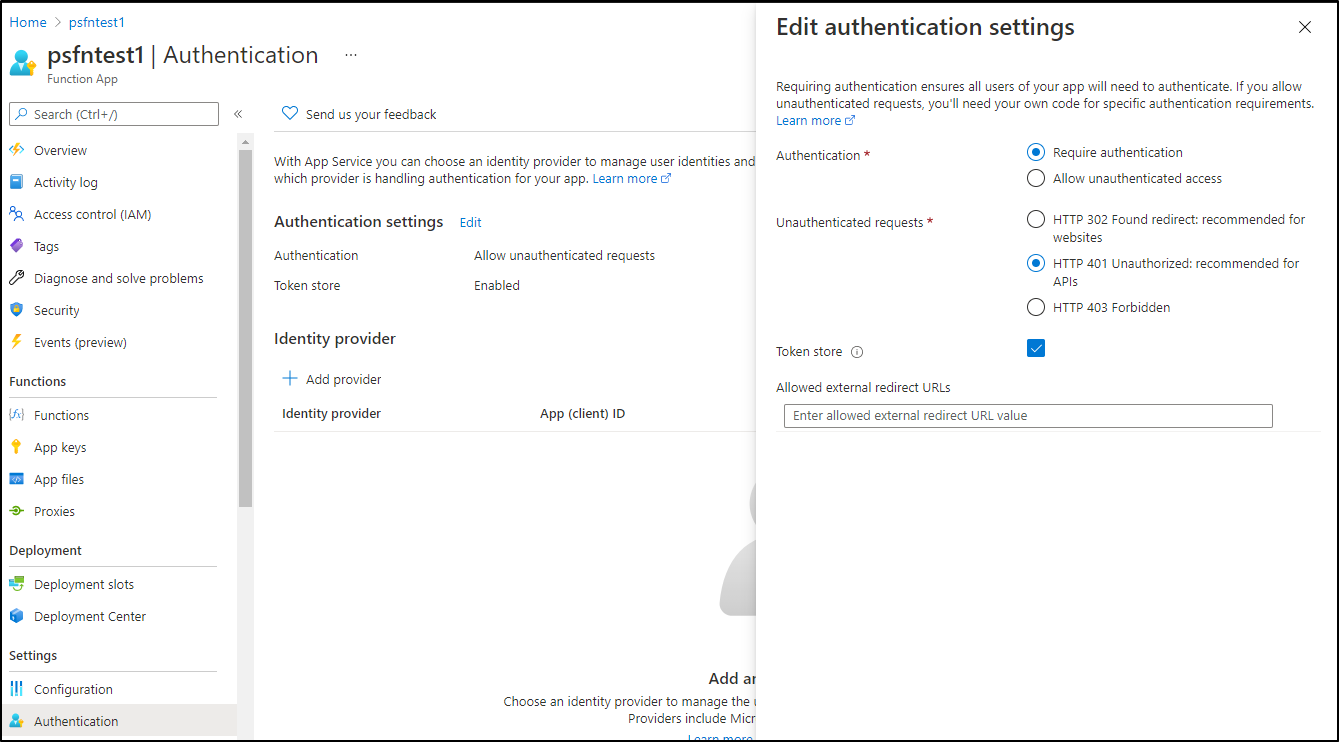

Here we can use the Authentication (basic) setting to disable anonymous invocation:

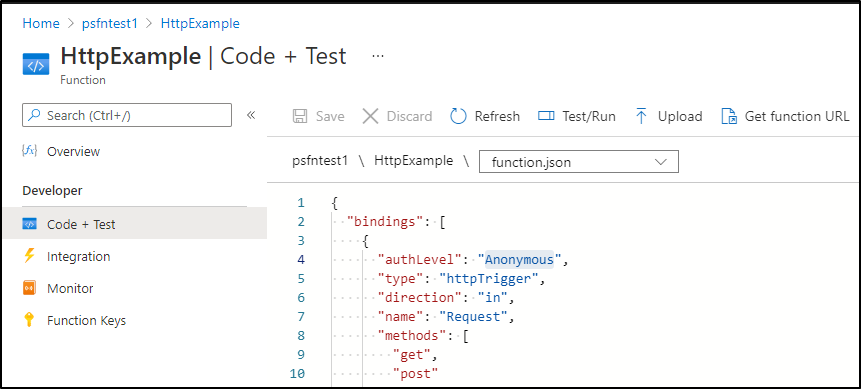

Change the function.json to require a function API key by changing the HTTP trigger's authLevel from "anonymous" to "function":

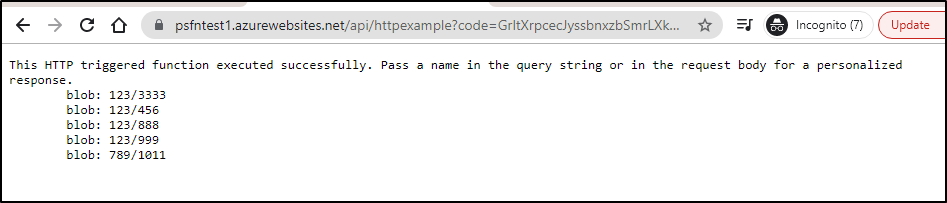

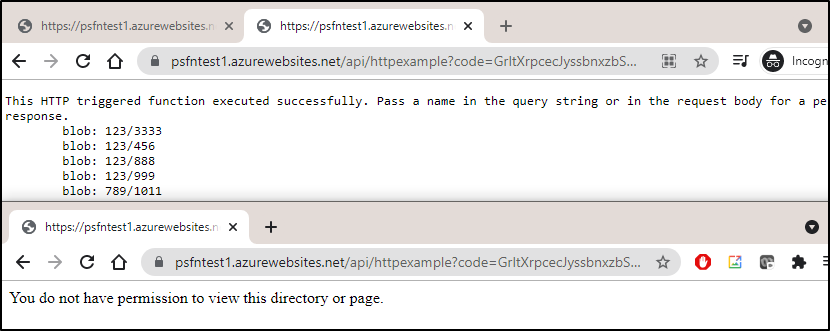

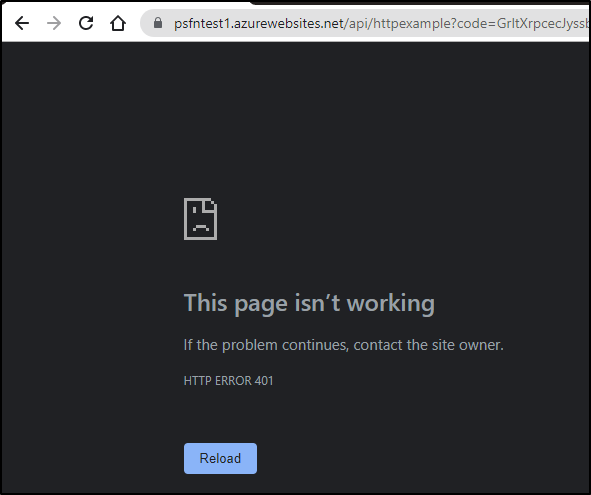

We can now see that when we don't add "?code=<api key>" it will fail, but passing it works:

We can achieve the same thing with a functions key header in our curl invocation:

$ curl --header "Content-Type: application/json" --header "x-functions-key: GrItXrpcasdfsadfsadfsadfsadfasdfasdfasdfasdfasasdfasdfasdf==" --request GET https://psfntest1.azurewebsites.net/api/httpexample

This HTTP triggered function executed successfully. Pass a name in the query string or in the request body for a personalized response.

blob: 123/3333

blob: 123/456

blob: 123/888

blob: 123/999

blob: 789/1011
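Typing the key on the command line leaves it in your shell history. A small sketch of one alternative is to read it from an environment variable. Both the variable name `FUNC_KEY` and the function name `call_with_key` are assumptions made for this example.

```shell
# Hypothetical wrapper: send the function key as a header, taken from $FUNC_KEY.
# Usage: FUNC_KEY=<api key> call_with_key <function-url>
call_with_key() {
  # Abort with a message if the key has not been provided
  : "${FUNC_KEY:?set FUNC_KEY to the function API key}"
  curl --fail --silent --show-error \
    --header "x-functions-key: ${FUNC_KEY}" \
    "$1"
}

# Example:
#   export FUNC_KEY='<api key>'
#   call_with_key https://psfntest1.azurewebsites.net/api/httpexample
```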

AAD Authentication

We can create an even tighter auth model by requiring auth via AAD.

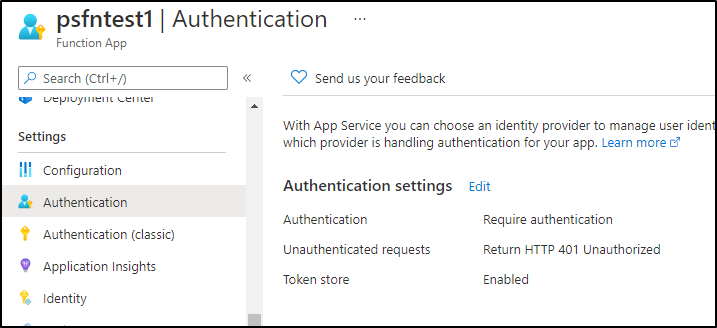

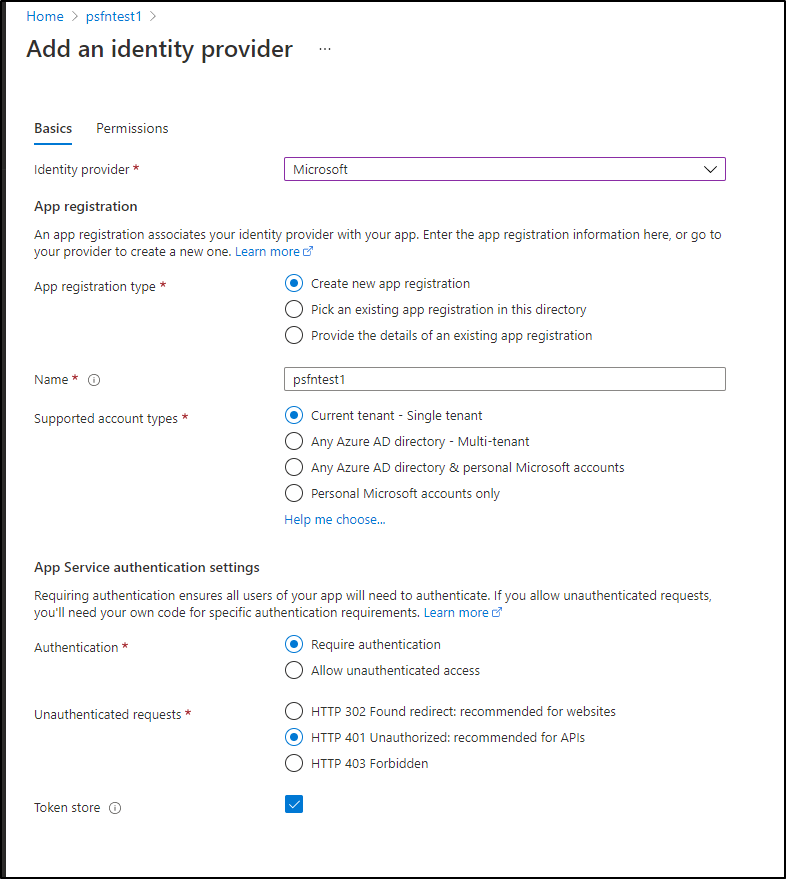

Here we use the Authentication area to require auth:

We can now see that auth is required:

which we confirm by refreshing the URL.

We can then add an IdP. There are several built in (Facebook, Google, etc.). We'll use Microsoft first:

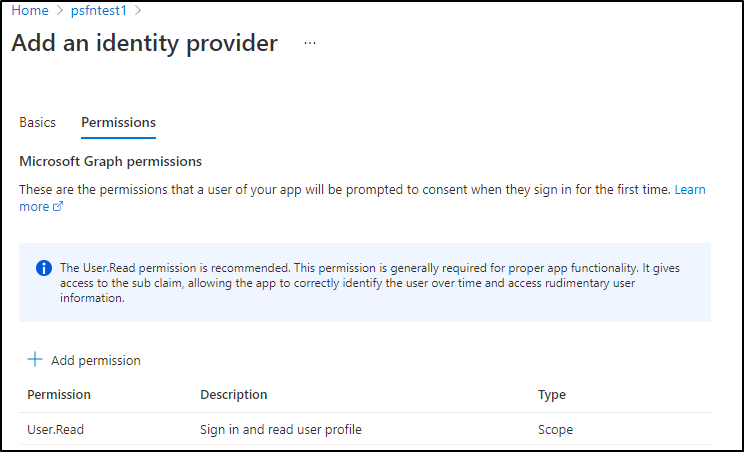

We can scope the permissions on MS Graph:

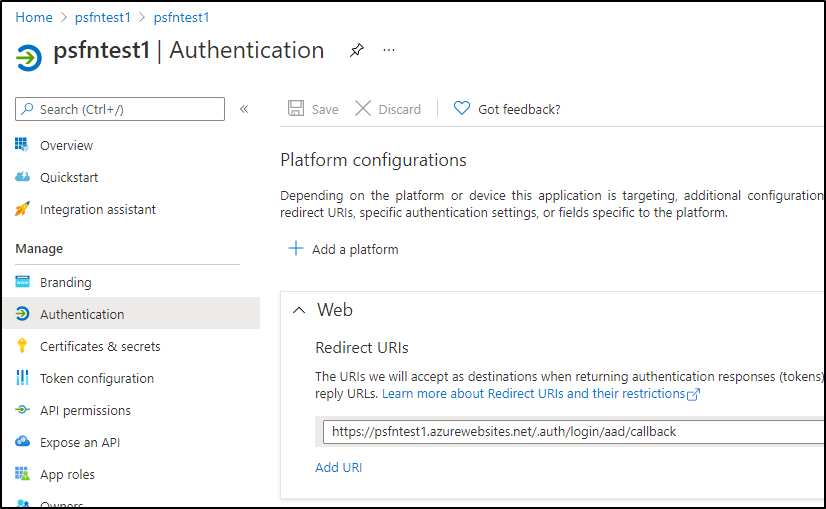

We can fetch the Redirect URI from the portal for the new App ID:

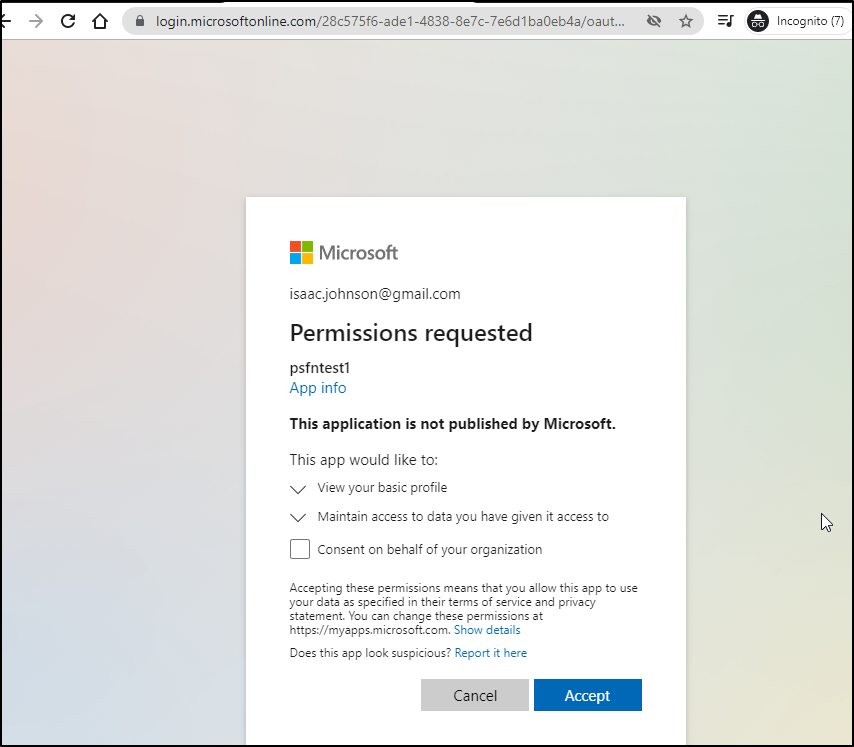

which, when used, confirms permission:

And now, just in that incognito window (which is authenticated), I can hit the HTTP function URL (and since function.json still requires an API key, that too is required):

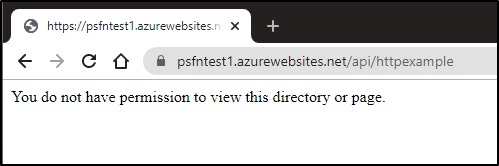

But we can see that in a different window (browser session), even with the same API key, it won't work:

Neither does the command line:

$ curl --header "Content-Type: application/json" --header "x-functions-key: Gasdfasdfasdfsadfasdfasdf==" --request GET https://psfntest1.azurewebsites.net/api/httpexample

You do not have permission to view this directory or page.

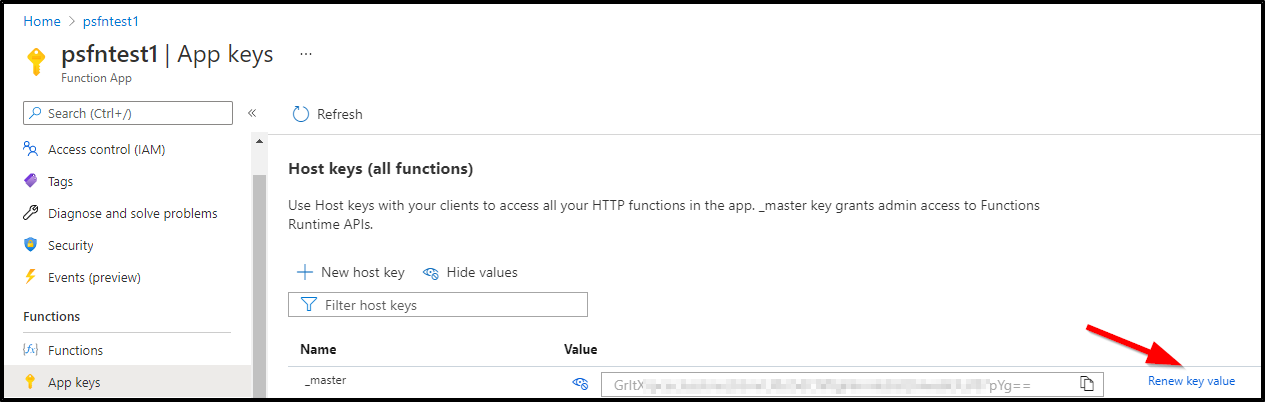

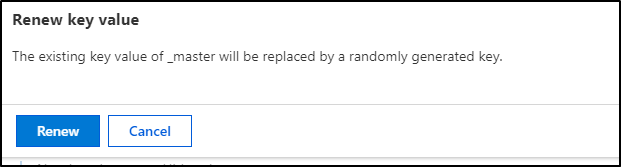

Rotating Keys

If you did some debugging (or blogging as the case may be) and ought to rotate the key, you can do so from the App Keys window:

And when done, we can see uses of the old key are rejected:

But the new key works just fine:

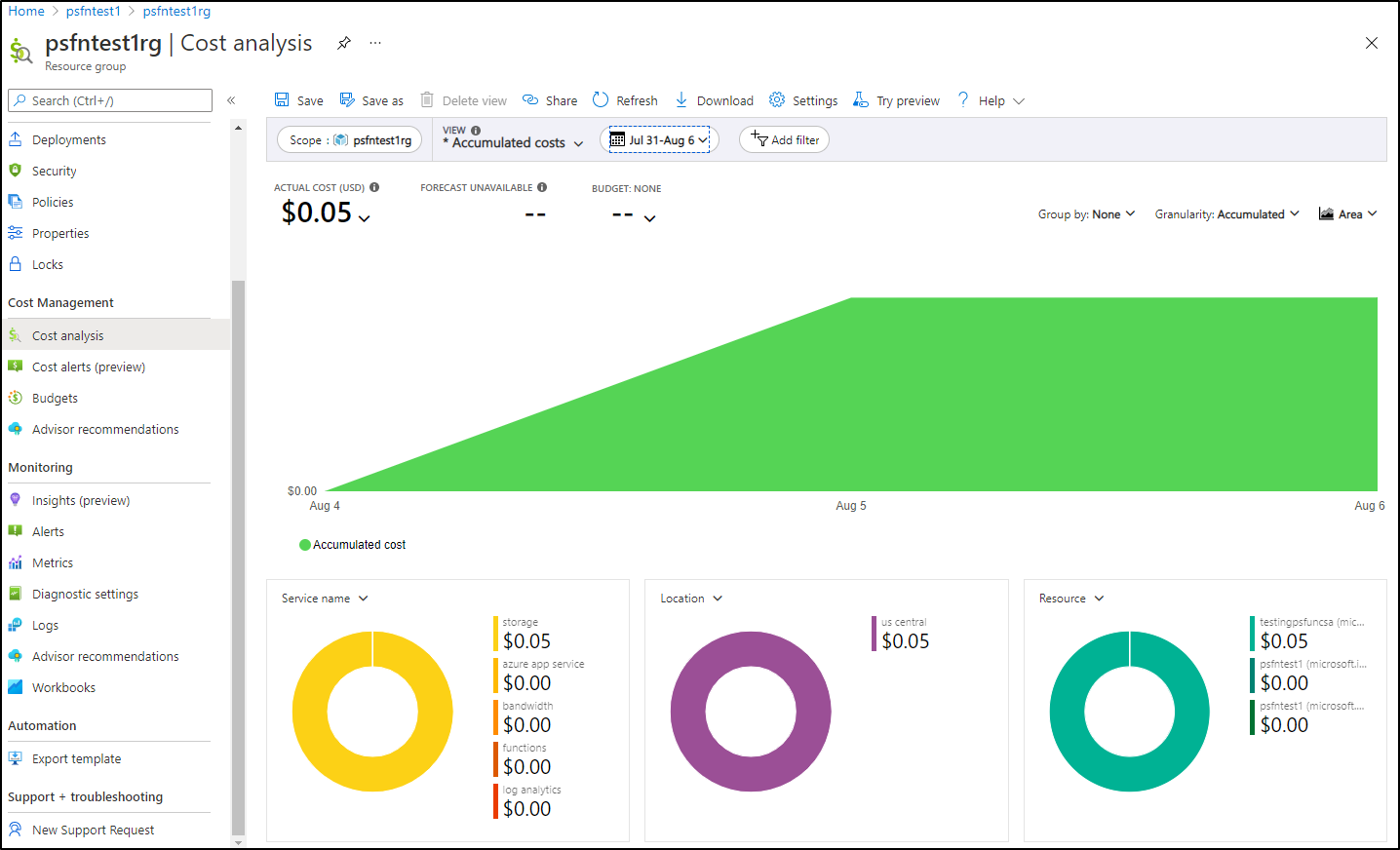

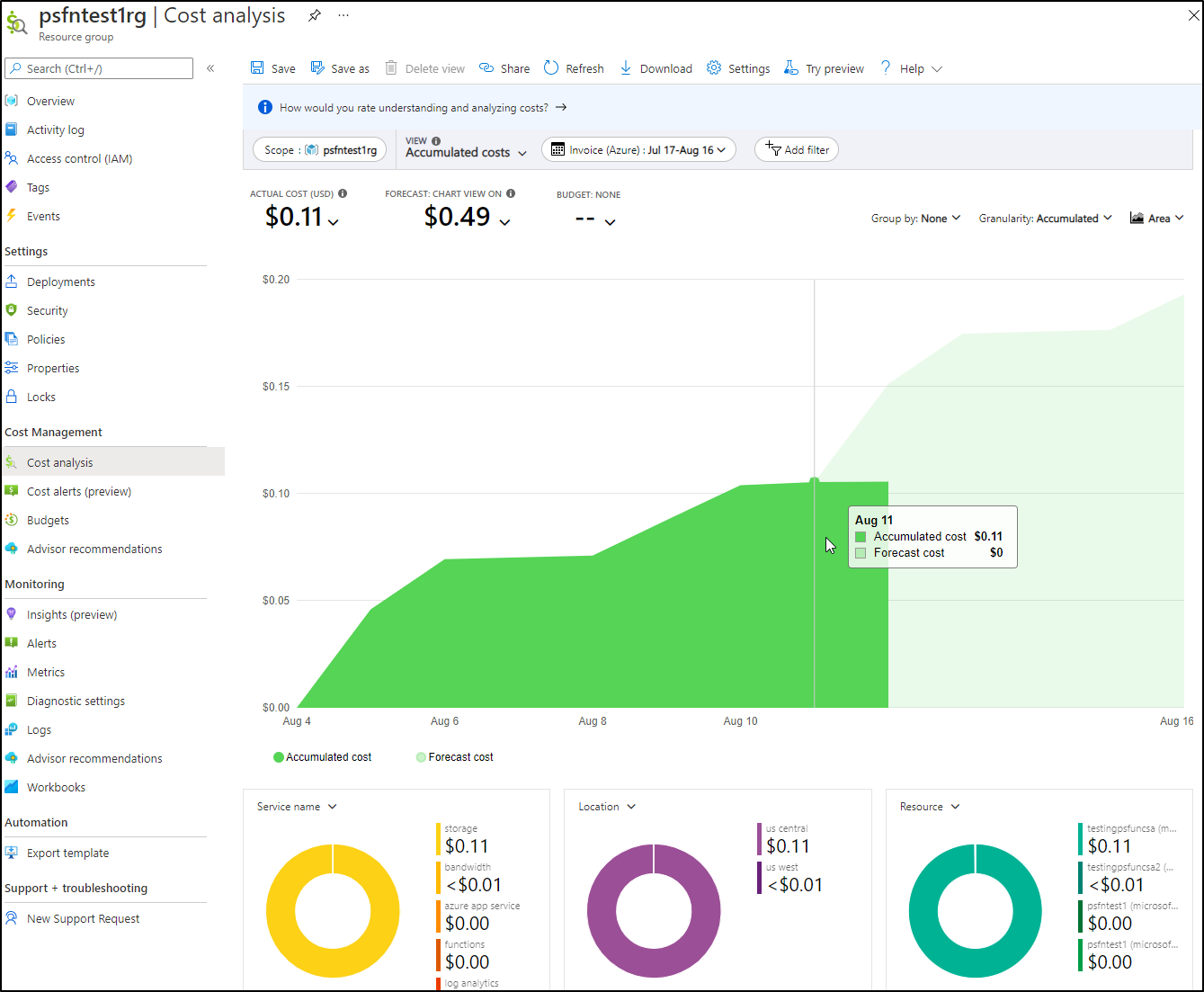

Cost

Running these functions (with testing) and multiple storage accounts and app service plans for a couple days has amounted to $0.05:

After a couple of weeks, we can see we are on target for $0.50 for the month.

Summary

We covered a lot of ground here. We changed our simple PowerShell function to show the contents of files, which could be useful for a web-based function that returns some calculated value or file. However, we wanted to do more, so we created a secondary storage account and changed our function to sync blobs over with Start-AzStorageBlobCopy.

Once we showed we could sync, we moved on to ways to invoke the function, from URLs in browsers to curl. Then we considered automation and showed both a Kubernetes CronJob and an Azure Event Grid trigger via a topic.

Lastly, we took on security considerations and addressed using API Tokens and adding AAD Authentication for federated IdP logins as well as rotating keys and using a mix of both.

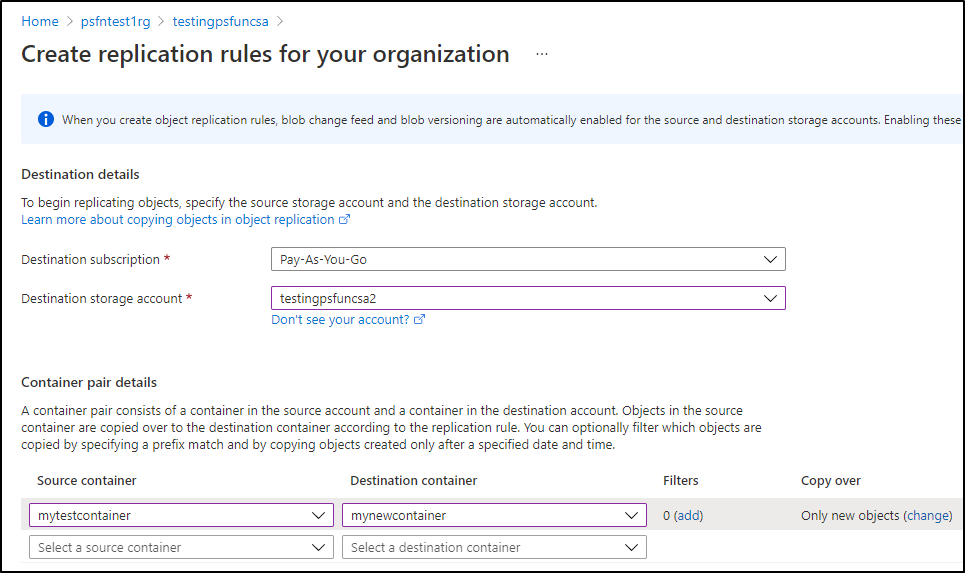

Now, if you are asking, "will it scale"? Chances are the answer is no, but this was to prove a point of access with files sync as a simple example. If I were attempting to really keep one storage account's container backed up to another, i would skip all this fun and just use a replication rule:

Hopefully I've shown a simple pattern using PowerShell, a language with which most (at least Windows) Ops teams are quite familiar, to create serverless, secure and automated cloud functions.