Harbor is an open-source container registry and a graduated CNCF project. It has matured to the point of having a solid Helm chart and deployment model. In the past, I tried to launch instances but, due to its heavier requirements, was unable to get it working. With KubeCon EU underway, I decided this was the week to sort these issues out and get it running.

We'll be using a simple on-prem K3s cluster (x86) and Nginx ingress to minimize dependencies. We will be creating some DNS entries, so plan to have a domain you can use to create subdomains.

Let's get started!

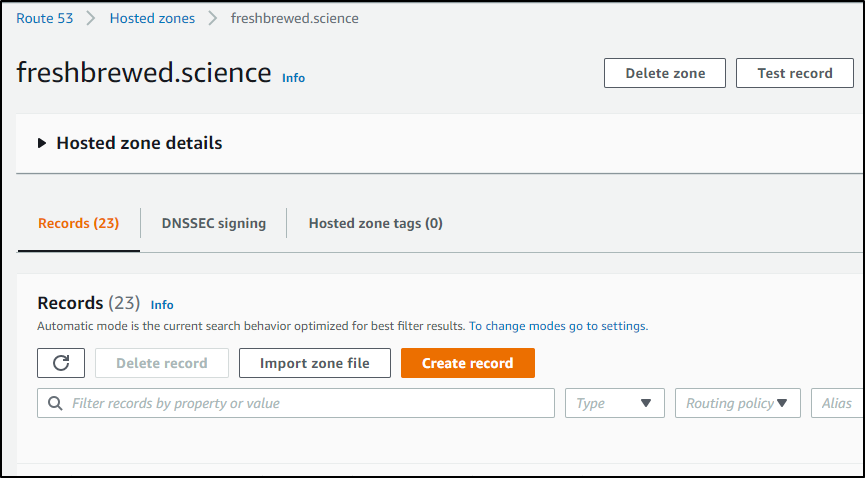

DNS Entries

Let's begin by getting our DNS entries sorted. I'm going to create a DNS entry for harbor in Route53.

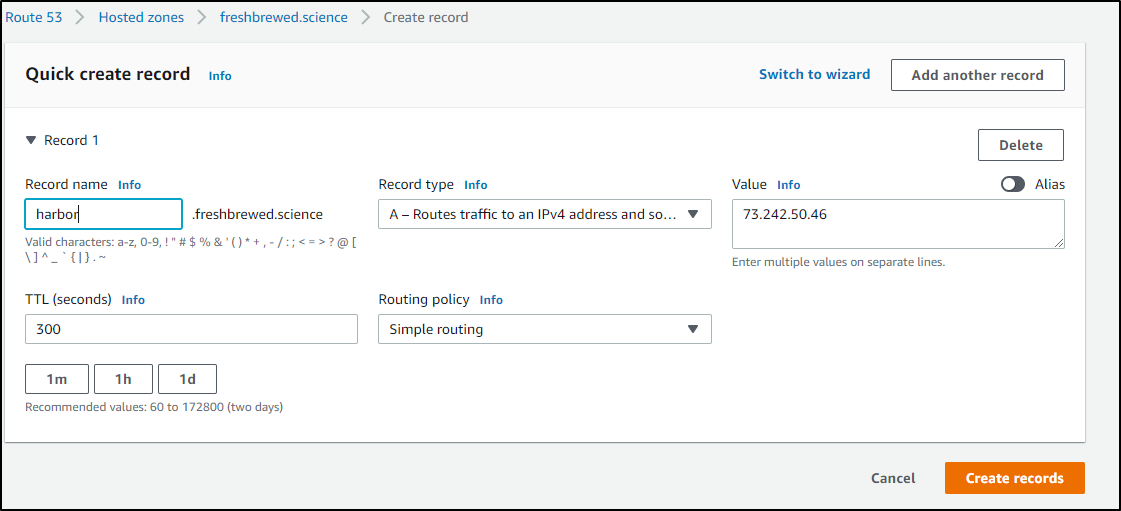

Go to Route53 and create a record:

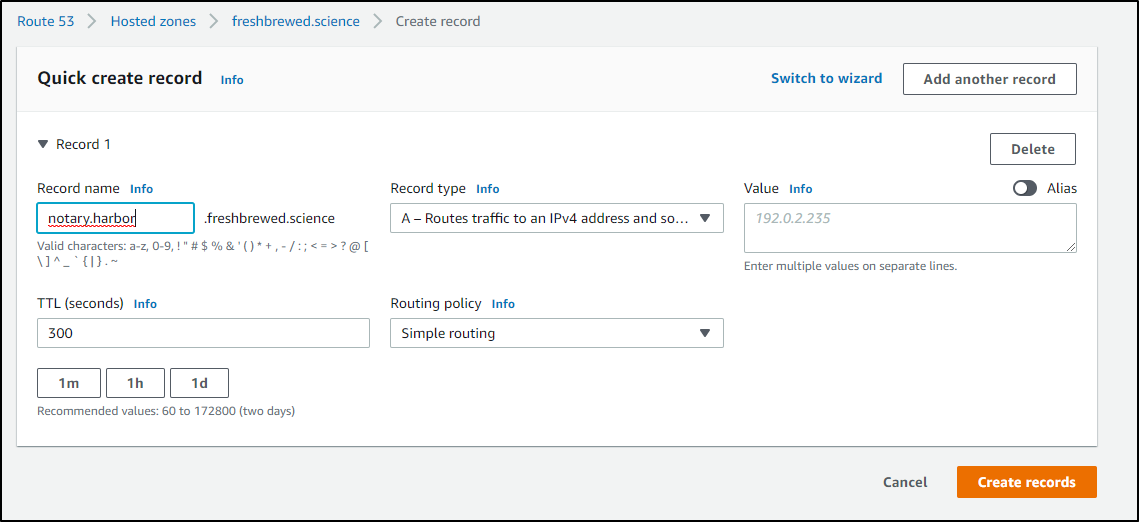

Next, let's create records for harbor, core and notary:

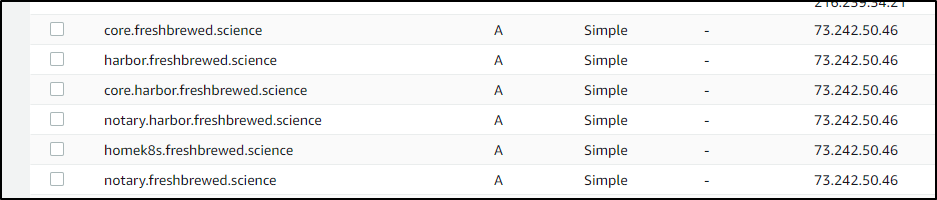

This might be wrong, and I may remove it later, but I am trying both core.freshbrewed.science and core.harbor.freshbrewed.science

and the resulting entries

Next we need to ensure cert-manager is working, so we will create the namespace and label it:

builder@DESKTOP-JBA79RT:~$ kubectl create namespace harbor-system

namespace/harbor-system created

builder@DESKTOP-JBA79RT:~$ kubectl label namespace --overwrite harbor-system app=kubed

namespace/harbor-system labeled

In my case, I don't have a ClusterIssuer watching all namespaces to issue certs, so let's create our certs directly:

$ kubectl get clusterissuer

NAME READY AGE

letsencrypt-prod True 129d

While I don't think I need "core", I'm going to create it now anyhow (FYI, I didn't in the end). I know I'll need harbor and notary created.

builder@DESKTOP-JBA79RT:~/Workspaces$ cat harbor.fb.science.yaml

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: harbor-fb-science
  namespace: default
spec:
  commonName: harbor.freshbrewed.science
  dnsNames:
  - harbor.freshbrewed.science
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: harbor.freshbrewed.science-cert

builder@DESKTOP-JBA79RT:~/Workspaces$ cat notary.fb.science.yaml

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: notary-fb-science
  namespace: default
spec:
  commonName: notary.freshbrewed.science
  dnsNames:
  - notary.freshbrewed.science
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: notary.freshbrewed.science-cert

builder@DESKTOP-JBA79RT:~/Workspaces$ cat core.fb.science.yaml

apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: core-fb-science
  namespace: default
spec:
  commonName: core.freshbrewed.science
  dnsNames:
  - core.freshbrewed.science
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: core.freshbrewed.science-cert
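
The three manifests above differ only in name and hostname, so they could also be generated from one template. A minimal sketch, assuming the same letsencrypt-prod ClusterIssuer and default namespace used above; make_cert is a hypothetical helper name, not part of cert-manager or kubectl:

```shell
#!/bin/sh
# make_cert NAME HOST: print a cert-manager Certificate manifest.
# make_cert is a hypothetical helper, not part of cert-manager or kubectl.
make_cert() {
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: $1
  namespace: default
spec:
  commonName: $2
  dnsNames:
  - $2
  issuerRef:
    kind: ClusterIssuer
    name: letsencrypt-prod
  secretName: $2-cert
EOF
}

# Print the manifest for the Harbor hostname.
# Pipe the output to "kubectl apply -f -" to create it on a cluster.
make_cert harbor-fb-science harbor.freshbrewed.science
```

Generating the manifest keeps the secret name tied to the hostname, which is the convention the hand-written files follow.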

Let's create and wait for the certs to get created

builder@DESKTOP-JBA79RT:~/Workspaces$ kubectl apply -f harbor.fb.science.yaml --validate=false

certificate.cert-manager.io/harbor-fb-science created

builder@DESKTOP-JBA79RT:~/Workspaces$ kubectl apply -f notary.fb.science.yaml --validate=false

certificate.cert-manager.io/notary-fb-science created

builder@DESKTOP-JBA79RT:~/Workspaces$ kubectl apply -f core.fb.science.yaml --validate=false

certificate.cert-manager.io/core-fb-science created

builder@DESKTOP-JBA79RT:~/Workspaces$ kubectl get certificate

NAME READY SECRET AGE

myk8s-tpk-best True myk8s.tpk.best-cert 32d

myk8s-tpk-pw True myk8s.tpk.pw-cert 16d

dapr-react-tpk-pw True dapr-react.tpk.pw-cert 16d

harbor-fb-science False harbor.freshbrewed.science-cert 22s

notary-fb-science False notary.freshbrewed.science-cert 14s

core-fb-science False core.freshbrewed.science-cert 7s

builder@DESKTOP-JBA79RT:~/Workspaces$ kubectl get certificate | grep fresh

harbor-fb-science True harbor.freshbrewed.science-cert 43s

notary-fb-science True notary.freshbrewed.science-cert 35s

core-fb-science False core.freshbrewed.science-cert 28s

builder@DESKTOP-JBA79RT:~/Workspaces$ kubectl get certificate | grep fresh

harbor-fb-science True harbor.freshbrewed.science-cert 48s

notary-fb-science True notary.freshbrewed.science-cert 40s

core-fb-science True core.freshbrewed.science-cert 33s
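
Rather than re-running kubectl get certificate until everything flips to True, kubectl wait can block on the Ready condition. A small sketch, assuming kubectl is on the PATH and pointed at the cluster; wait_for_certs is a hypothetical helper name:

```shell
#!/bin/sh
# wait_for_certs TIMEOUT NAME...: block until each Certificate is Ready.
# wait_for_certs is a hypothetical helper; it only wraps "kubectl wait".
wait_for_certs() {
  timeout="$1"
  shift
  for name in "$@"; do
    kubectl wait --for=condition=Ready "certificate/$name" --timeout="$timeout" || return 1
  done
}

# Example (needs access to the cluster):
# wait_for_certs 300s harbor-fb-science notary-fb-science core-fb-science
```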

And here are our certs

$ kubectl get secrets | grep cert | tail -n3

harbor.freshbrewed.science-cert kubernetes.io/tls 2 43s

notary.freshbrewed.science-cert kubernetes.io/tls 2 38s

core.freshbrewed.science-cert kubernetes.io/tls 2 30s

Installing Harbor

First we need to create a values file

$ cat harbor.values.yaml

expose:
  type: ingress
  tls:
    certSource: secret
    secret:
      secretName: harbor.freshbrewed.science-cert
      notarySecretName: notary.freshbrewed.science-cert
  ingress:
    annotations:
      cert-manager.io/cluster-issuer: letsencrypt-prod
    hosts:
      core: harbor.freshbrewed.science
      notary: notary.freshbrewed.science
harborAdminPassword: bm90IG15IHJlYWwgcGFzc3dvcmQK
externalURL: https://harbor.freshbrewed.science
secretKey: "bm90IG15IHJlYWwgc2VjcmV0IGVpdGhlcgo="
notary:
  enabled: true
metrics:
  enabled: true
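
As far as I can tell, the comments in the chart's values.yaml describe secretKey as a 16-character string, so the longer placeholder above may not be valid as-is; check the comments in your chart version. A sketch for generating a random 16-character key, with gen_secret_key as a hypothetical helper name:

```shell
#!/bin/sh
# gen_secret_key: print 16 random alphanumeric characters.
# Reads 512 random bytes, keeps only letters and digits, then trims to 16.
gen_secret_key() {
  head -c 512 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9' | cut -c1-16
}

key=$(gen_secret_key)
# Print a line that can be pasted into harbor.values.yaml.
echo "secretKey: \"$key\""
```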

Add the helm chart repo

builder@DESKTOP-JBA79RT:~/Workspaces$ helm repo add harbor https://helm.goharbor.io

"harbor" has been added to your repositories

builder@DESKTOP-JBA79RT:~/Workspaces$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "harbor" chart repository

...Successfully got an update from the "azure-samples" chart repository

...Successfully got an update from the "dapr" chart repository

...Successfully got an update from the "hashicorp" chart repository

...Successfully got an update from the "kedacore" chart repository

...Successfully got an update from the "nginx-stable" chart repository

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "datadog" chart repository

...Successfully got an update from the "datawire" chart repository

...Successfully got an update from the "openfaas" chart repository

...Successfully got an update from the "bitnami" chart repository

...Successfully got an update from the "stable" chart repository

Update Complete. ⎈Happy Helming!⎈

Install

$ helm upgrade --install harbor-registry harbor/harbor --values ./harbor.values.yaml

Release "harbor-registry" does not exist. Installing it now.

NAME: harbor-registry

LAST DEPLOYED: Wed May 5 06:42:58 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Please wait for several minutes for Harbor deployment to complete.

Then you should be able to visit the Harbor portal at https://harbor.freshbrewed.science

For more details, please visit https://github.com/goharbor/harbor

And we now can login

https://harbor.freshbrewed.science/harbor/sign-in?redirect_url=%2Fharbor%2Fprojects

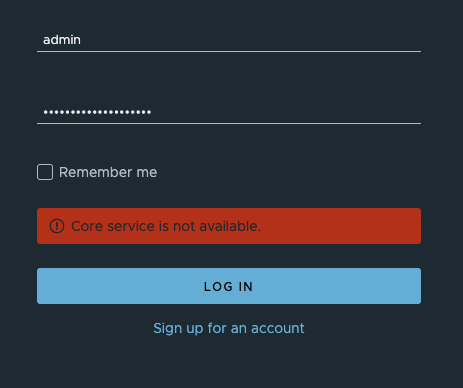

Working through issues

Unfortunately we get errors...

In checking, the first issue stemmed from harbor redis failing to launch

harbor-registry-harbor-jobservice-566476477d-9xflj 0/1 ContainerCreating 0 30m

harbor-registry-harbor-redis-0 0/1 ContainerCreating 0 30m

harbor-registry-harbor-trivy-0 0/1 ContainerCreating 0 30m

harbor-registry-harbor-portal-76bdcc7969-p2mgz 1/1 Running 0 30m

harbor-registry-harbor-chartmuseum-9f57db94d-w8lwg 1/1 Running 0 30m

harbor-registry-harbor-registry-8595485889-6dq49 2/2 Running 0 30m

harbor-registry-harbor-exporter-655dd658bb-mk5sj 0/1 CrashLoopBackOff 8 30m

harbor-registry-harbor-core-54875f69d8-6s76v 0/1 CrashLoopBackOff 8 30m

harbor-registry-harbor-notary-server-66ff6dd4c8-kjp8g 0/1 CrashLoopBackOff 9 30m

harbor-registry-harbor-notary-signer-5dff57c8f4-lvfc7 0/1 CrashLoopBackOff 9 30m

harbor-registry-harbor-database-0 0/1 CrashLoopBackOff 10 30m

I checked my PVCs and they all existed

$ kubectl get pvc | grep harbor

bitnami-harbor-registry Bound pvc-05b5d977-baa0-45b1-a421-7d9d79431551 5Gi RWO managed-nfs-storage 128d

bitnami-harbor-chartmuseum Bound pvc-cf46c907-2c05-4b2c-8831-cbb469d7a6d2 5Gi RWO managed-nfs-storage 128d

bitnami-harbor-jobservice Bound pvc-00febdcb-ccfd-4d1b-a6d8-081a4fd22456 1Gi RWO managed-nfs-storage 128d

data-bitnami-harbor-postgresql-0 Bound pvc-39a1c708-ae58-4546-bc2a-70326b5ffab6 8Gi RWO managed-nfs-storage 128d

redis-data-bitnami-harbor-redis-master-0 Bound pvc-73a7e833-90fb-41ab-b42c-7a1e7fd5aad3 8Gi RWO managed-nfs-storage 128d

data-bitnami-harbor-trivy-0 Bound pvc-aa741760-7dd5-4153-bd0e-d796c8e7abba 5Gi RWO managed-nfs-storage 128d

harbor-registry-harbor-jobservice Bound pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304 1Gi RWO managed-nfs-storage 35m

harbor-registry-harbor-registry Bound pvc-db3cbfd5-9446-4b22-8295-aa960518e539 5Gi RWO managed-nfs-storage 35m

harbor-registry-harbor-chartmuseum Bound pvc-e2f851ff-e95d-45a0-8d17-e6993e4ac066 5Gi RWO managed-nfs-storage 35m

database-data-harbor-registry-harbor-database-0 Bound pvc-1b7d9cd6-bd64-4574-b0a0-ba642aa025cc 1Gi RWO managed-nfs-storage 35m

data-harbor-registry-harbor-redis-0 Bound pvc-49b8855f-1b66-4fa2-b743-44601b44d6fe 1Gi RWO managed-nfs-storage 35m

data-harbor-registry-harbor-trivy-0 Bound pvc-2ca7d1a7-9538-453f-ba2b-11c1facd66de 5Gi RWO managed-nfs-storage 35m

and the events on the redis pod showed mount errors:

Output: Running scope as unit: run-r6b941188356647ecafd13c51979bf2d5.scope

mount: /var/lib/kubelet/pods/484a45bd-1c11-4a29-aab5-71293a7a0268/volumes/kubernetes.io~nfs/pvc-49b8855f-1b66-4fa2-b743-44601b44d6fe: bad option; for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program.

Warning FailedMount 12m (x7 over 28m) kubelet Unable to attach or mount volumes: unmounted volumes=[data], unattached volumes=[data]: timed out waiting for the condition

Warning FailedMount 3m43s (x17 over 28m) kubelet (combined from similar events): MountVolume.SetUp failed for volume "pvc-49b8855f-1b66-4fa2-b743-44601b44d6fe" : mount failed: exit status 32

Mounting command: systemd-run

Mounting arguments: --description=Kubernetes transient mount for /var/lib/kubelet/pods/484a45bd-1c11-4a29-aab5-71293a7a0268/volumes/kubernetes.io~nfs/pvc-49b8855f-1b66-4fa2-b743-44601b44d6fe --scope -- mount -t nfs 192.168.1.129:/volume1/k3snfs/default-data-harbor-registry-harbor-redis-0-pvc-49b8855f-1b66-4fa2-b743-44601b44d6fe /var/lib/kubelet/pods/484a45bd-1c11-4a29-aab5-71293a7a0268/volumes/kubernetes.io~nfs/pvc-49b8855f-1b66-4fa2-b743-44601b44d6fe

Output: Running scope as unit: run-r0936ebd44e8244afbd8108ad5a2f334a.scope

This clued me in that perhaps the slower NFS-backed storage class I used did not provide the volume in time. I rotated the redis pod and it came back just fine:

$ kubectl logs harbor-registry-harbor-redis-0

1:C 05 May 12:16:10.167 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

1:C 05 May 12:16:10.167 # Redis version=4.0.14, bits=64, commit=00000000, modified=0, pid=1, just started

1:C 05 May 12:16:10.167 # Configuration loaded

_._

_.-``__ ''-._

_.-`` `. `_. ''-._ Redis 4.0.14 (00000000/0) 64 bit

.-`` .-```. ```\/ _.,_ ''-._

( ' , .-` | `, ) Running in standalone mode

|`-._`-...-` __...-.``-._|'` _.-'| Port: 6379

| `-._ `._ / _.-' | PID: 1

`-._ `-._ `-./ _.-' _.-'

|`-._`-._ `-.__.-' _.-'_.-'|

| `-._`-._ _.-'_.-' | http://redis.io

`-._ `-._`-.__.-'_.-' _.-'

|`-._`-._ `-.__.-' _.-'_.-'|

| `-._`-._ _.-'_.-' |

`-._ `-._`-.__.-'_.-' _.-'

`-._ `-.__.-' _.-'

`-._ _.-'

`-.__.-'

1:M 05 May 12:16:10.168 # Server initialized

1:M 05 May 12:16:10.168 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.

1:M 05 May 12:16:10.169 * Ready to accept connections
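
The "bad option" message in the mount events above mentions a missing /sbin/mount.<type> helper, which on many distributions means the NFS client package is not installed on the node where the pod landed. That is a possible cause alongside a slow NFS backend, not a confirmed diagnosis for this cluster. A small check to run on each node, with has_nfs_helper as a hypothetical name:

```shell
#!/bin/sh
# has_nfs_helper: return 0 if a mount.nfs helper is available on this host,
# 1 otherwise.
has_nfs_helper() {
  if [ -x /sbin/mount.nfs ] || command -v mount.nfs >/dev/null 2>&1; then
    return 0
  fi
  return 1
}

if has_nfs_helper; then
  echo "mount.nfs found"
else
  # On Debian or Ubuntu the helper ships in the nfs-common package.
  echo "mount.nfs missing; install the NFS client package for this distribution"
fi
```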

I then rotated all the remaining hung pods…

$ kubectl get pods

...

harbor-registry-harbor-jobservice-566476477d-9xflj 0/1 ContainerCreating 0 33m

harbor-registry-harbor-trivy-0 0/1 ContainerCreating 0 33m

harbor-registry-harbor-portal-76bdcc7969-p2mgz 1/1 Running 0 33m

harbor-registry-harbor-chartmuseum-9f57db94d-w8lwg 1/1 Running 0 33m

harbor-registry-harbor-registry-8595485889-6dq49 2/2 Running 0 33m

harbor-registry-harbor-database-0 0/1 CrashLoopBackOff 10 33m

harbor-registry-harbor-exporter-655dd658bb-mk5sj 0/1 CrashLoopBackOff 9 33m

harbor-registry-harbor-notary-server-66ff6dd4c8-kjp8g 0/1 CrashLoopBackOff 10 33m

harbor-registry-harbor-core-54875f69d8-6s76v 0/1 CrashLoopBackOff 9 33m

harbor-registry-harbor-redis-0 1/1 Running 0 33s

harbor-registry-harbor-notary-signer-5dff57c8f4-lvfc7 1/1 Running 10 33m

builder@DESKTOP-JBA79RT:~/Workspaces$ kubectl delete pod harbor-registry-harbor-database-0 && kubectl delete pod harbor-registry-harbor-exporter-655dd658bb-mk5sj && kubectl delete pod harbor-registry-harbor-notary-server-66ff6dd4c8-kjp8g && kubectl delete pod harbor-registry-harbor-core-54875f69d8-6s76v && kubectl delete pod harbor-registry-harbor-trivy-0 && kubectl delete pod harbor-registry-harbor-jobservice-566476477d-9xflj && kubectl delete pod harbor-registry-harbor-notary-signer-5dff57c8f4-lvfc7
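
The long chain of delete commands can also be derived from the pod listing itself. A sketch, assuming the default column order of kubectl get pods (NAME, READY, STATUS); not_ready is a hypothetical helper name:

```shell
#!/bin/sh
# not_ready: read "kubectl get pods --no-headers" output on stdin and print
# the names of pods that are not Running or whose READY count is incomplete.
not_ready() {
  awk '{ split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) print $1 }'
}

# Demo on two lines copied from the listing above.
printf '%s\n' \
  'harbor-registry-harbor-database-0 0/1 CrashLoopBackOff 10 33m' \
  'harbor-registry-harbor-redis-0 1/1 Running 0 33s' | not_ready
# prints harbor-registry-harbor-database-0

# Against a live cluster (GNU xargs; -r skips the call on empty input):
# kubectl get pods --no-headers | grep '^harbor-registry-' | not_ready | xargs -r kubectl delete pod
```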

We are getting better…

harbor-registry-harbor-portal-76bdcc7969-p2mgz 1/1 Running 0 37m

harbor-registry-harbor-chartmuseum-9f57db94d-w8lwg 1/1 Running 0 37m

harbor-registry-harbor-registry-8595485889-6dq49 2/2 Running 0 37m

harbor-registry-harbor-redis-0 1/1 Running 0 4m34s

harbor-registry-harbor-notary-signer-5dff57c8f4-lvfc7 0/1 CrashLoopBackOff 10 37m

harbor-registry-harbor-trivy-0 0/1 ContainerCreating 0 2m24s

harbor-registry-harbor-jobservice-566476477d-hxsnt 0/1 ContainerCreating 0 2m24s

harbor-registry-harbor-database-0 0/1 CrashLoopBackOff 4 2m47s

harbor-registry-harbor-notary-server-66ff6dd4c8-rj285 1/1 Running 3 2m44s

harbor-registry-harbor-core-54875f69d8-xt9pf 0/1 Running 2 2m39s

harbor-registry-harbor-exporter-655dd658bb-btbb7 0/1 Running 2 2m47s

The main issue is the database: PostgreSQL does not like my NFS storage class, which I confirmed by testing with an off-the-shelf PostgreSQL helm chart. We will need to use a different storage class to solve this.

Switch default SC to local-path

We can swap with a patch statement:

kubectl patch storageclass managed-nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}' && kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}' && echo "switched back to local-storage"

Then remove the outstanding PVCs causing issue (mostly database)

$ kubectl delete pvc database-data-harbor-registry-harbor-database-0

$ kubectl delete pvc data-harbor-registry-harbor-trivy-0

$ kubectl delete pvc data-harbor-registry-harbor-redis-0

$ kubectl delete pvc data-bitnami-harbor-postgresql-0

$ kubectl delete pvc data-bitnami-harbor-trivy-0

Then try again

Note: I ended up just patching the Harbor chart template so I wouldn't need to patch storage classes:

$ helm pull harbor/harbor --untar

harbor/templates/database$ cat database-ss.yaml | head -n51 | tail -n4

# args: ["-c", "[ -e /var/lib/postgresql/data/postgresql.conf ] && [ ! -d /var/lib/postgresql/data/pgdata ] && mkdir -m 0700 /var/lib/postgresql/data/pgdata && mv /var/lib/postgresql/data/* /var/lib/postgresql/data/pgdata/ || true"]

args: ["-c", "[ ! -d /var/lib/postgresql/data/pgdata ] && mkdir -m 0700 /var/lib/postgresql/data/pgdata && [ -e /var/lib/postgresql/data/postgresql.conf ] && mv /var/lib/postgresql/data/* /var/lib/postgresql/data/pgdata/ || chmod 0700 /var/lib/postgresql/data/pgdata && ls -l /var/lib/postgresql/data || true"]

volumeMounts:

- name: database-data

install

$ helm upgrade --install harbor-registry --values ./harbor.values.yaml ./harbor
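
An alternative to changing the cluster default is to set the storage class per component through chart values. This is a sketch, assuming the chart version in use exposes persistence.persistentVolumeClaim.<component>.storageClass for the six components whose PVCs appear above (newer chart versions may differ); storage_class_flags is a hypothetical helper name:

```shell
#!/bin/sh
# storage_class_flags CLASS: print one --set flag per Harbor component that
# owns a PersistentVolumeClaim, pointing each at the given storage class.
storage_class_flags() {
  for c in registry chartmuseum jobservice database redis trivy; do
    printf '%s persistence.persistentVolumeClaim.%s.storageClass=%s ' '--set' "$c" "$1"
  done
}

# Show the flags that would be passed to helm.
storage_class_flags local-path
echo

# Example (word splitting of the flags is intended here):
# helm upgrade --install harbor-registry harbor/harbor --values ./harbor.values.yaml $(storage_class_flags local-path)
```

Existing PVCs keep their original class, so claims already bound to NFS still have to be deleted before reinstalling.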

In fact, even after I moved to the local-path class, the other services still seemed to choke on their existing NFS-backed PVCs…

harbor-registry-harbor-portal-76bdcc7969-qkpr8 1/1 Running 0 6m36s

harbor-registry-harbor-redis-0 1/1 Running 0 6m34s

harbor-registry-harbor-registry-f448867c4-h92gq 2/2 Running 0 6m35s

harbor-registry-harbor-trivy-0 1/1 Running 0 6m34s

harbor-registry-harbor-notary-server-b864fb677-rwk5d 1/1 Running 2 6m37s

harbor-registry-harbor-database-0 1/1 Running 0 6m37s

harbor-registry-harbor-exporter-655dd658bb-2pv48 1/1 Running 2 6m37s

harbor-registry-harbor-core-69d84dc44c-kjjff 1/1 Running 2 6m37s

harbor-registry-harbor-notary-signer-db48755d7-p8nsc 1/1 Running 0 2m56s

harbor-registry-harbor-jobservice-849cf67686-9k45r 0/1 ContainerCreating 0 110s

harbor-registry-harbor-chartmuseum-578c697bb-xqf5c 0/1 ContainerCreating 0 97s

the errors:

$ kubectl describe pod harbor-registry-harbor-jobservice-849cf67686-9k45r | tail -n7

Output: Running scope as unit: run-r94bd4319dede427ca96bdc606ca97e65.scope

mount: /var/lib/kubelet/pods/5904a5c9-6e9b-4ea9-8a64-e8e48f25d098/volumes/kubernetes.io~nfs/pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304: bad option; for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program.

Warning FailedMount 11s kubelet MountVolume.SetUp failed for volume "pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304" : mount failed: exit status 32

Mounting command: systemd-run

Mounting arguments: --description=Kubernetes transient mount for /var/lib/kubelet/pods/5904a5c9-6e9b-4ea9-8a64-e8e48f25d098/volumes/kubernetes.io~nfs/pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304 --scope -- mount -t nfs 192.168.1.129:/volume1/k3snfs/default-harbor-registry-harbor-jobservice-pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304 /var/lib/kubelet/pods/5904a5c9-6e9b-4ea9-8a64-e8e48f25d098/volumes/kubernetes.io~nfs/pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304

Output: Running scope as unit: run-re78628302ad34275bda8c731c9cb36c0.scope

mount: /var/lib/kubelet/pods/5904a5c9-6e9b-4ea9-8a64-e8e48f25d098/volumes/kubernetes.io~nfs/pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304: bad option; for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program.

And my PVCs

harbor-registry-harbor-jobservice Bound pvc-626f0e31-84fb-4ea2-9ae8-7456d0d65304 1Gi RWO managed-nfs-storage 25h

harbor-registry-harbor-registry Bound pvc-db3cbfd5-9446-4b22-8295-aa960518e539 5Gi RWO managed-nfs-storage 25h

harbor-registry-harbor-chartmuseum Bound pvc-e2f851ff-e95d-45a0-8d17-e6993e4ac066 5Gi RWO managed-nfs-storage 25h

local-path-pvc Bound pvc-931eedae-24e1-4958-b8b1-707820fd99f4 128Mi RWO local-path 15m

data-harbor-registry-harbor-redis-0 Bound pvc-61ddc767-92c4-4f1e-96c0-e86ce1d0d9ee 1Gi RWO local-path 6m51s

data-harbor-registry-harbor-trivy-0 Bound pvc-21362cc0-ee72-4c49-afbd-d93d432fb76e 5Gi RWO local-path 6m51s

database-data-harbor-registry-harbor-database-0 Bound pvc-27a306fc-0136-42fc-a8ac-4e868d0d2edf 1Gi RWO local-path 6m53s

To rotate, just delete the old release:

$ helm delete harbor-registry

manifest-15

manifest-16

manifest-17

release "harbor-registry" uninstalled

Then manually remove the PVCs (they won't auto-remove):

$ kubectl delete pvc harbor-registry-harbor-jobservice && kubectl delete pvc harbor-registry-harbor-registry && kubectl delete pvc harbor-registry-harbor-chartmuseum

persistentvolumeclaim "harbor-registry-harbor-jobservice" deleted

persistentvolumeclaim "harbor-registry-harbor-registry" deleted

persistentvolumeclaim "harbor-registry-harbor-chartmuseum" deleted

That finally worked:

$ kubectl get pods | tail -n11

harbor-registry-harbor-redis-0 1/1 Running 0 3m31s

harbor-registry-harbor-registry-86dbbfd48f-khljs 2/2 Running 0 3m31s

harbor-registry-harbor-trivy-0 1/1 Running 0 3m31s

harbor-registry-harbor-portal-76bdcc7969-sg6lv 1/1 Running 0 3m31s

harbor-registry-harbor-chartmuseum-559bd98f8f-t6k4z 1/1 Running 0 3m31s

harbor-registry-harbor-notary-server-779c6bddd5-kgczg 1/1 Running 2 3m31s

harbor-registry-harbor-notary-signer-c97648889-zxct6 1/1 Running 1 3m30s

harbor-registry-harbor-database-0 1/1 Running 0 3m31s

harbor-registry-harbor-core-7b4594d78d-tg5qs 1/1 Running 1 3m31s

harbor-registry-harbor-exporter-655dd658bb-ff8d7 1/1 Running 1 3m31s

harbor-registry-harbor-jobservice-95968c6d9-cdwfh 1/1 Running 1 3m31s

$ kubectl get pvc | grep harbor

redis-data-bitnami-harbor-redis-master-0 Bound pvc-73a7e833-90fb-41ab-b42c-7a1e7fd5aad3 8Gi RWO managed-nfs-storage 129d

data-harbor-registry-harbor-redis-0 Bound pvc-61ddc767-92c4-4f1e-96c0-e86ce1d0d9ee 1Gi RWO local-path 13m

data-harbor-registry-harbor-trivy-0 Bound pvc-21362cc0-ee72-4c49-afbd-d93d432fb76e 5Gi RWO local-path 13m

database-data-harbor-registry-harbor-database-0 Bound pvc-27a306fc-0136-42fc-a8ac-4e868d0d2edf 1Gi RWO local-path 13m

harbor-registry-harbor-registry Bound pvc-3405edb3-1d15-4aba-96eb-52c3297a8e53 5Gi RWO local-path 3m59s

harbor-registry-harbor-jobservice Bound pvc-0bf9d412-8485-4039-88be-54bf69b3efc7 1Gi RWO local-path 3m59s

harbor-registry-harbor-chartmuseum Bound pvc-4d993758-d4e9-49d1-a7cf-4f15c42623b6 5Gi RWO local-path 3m59s

and this time we can login

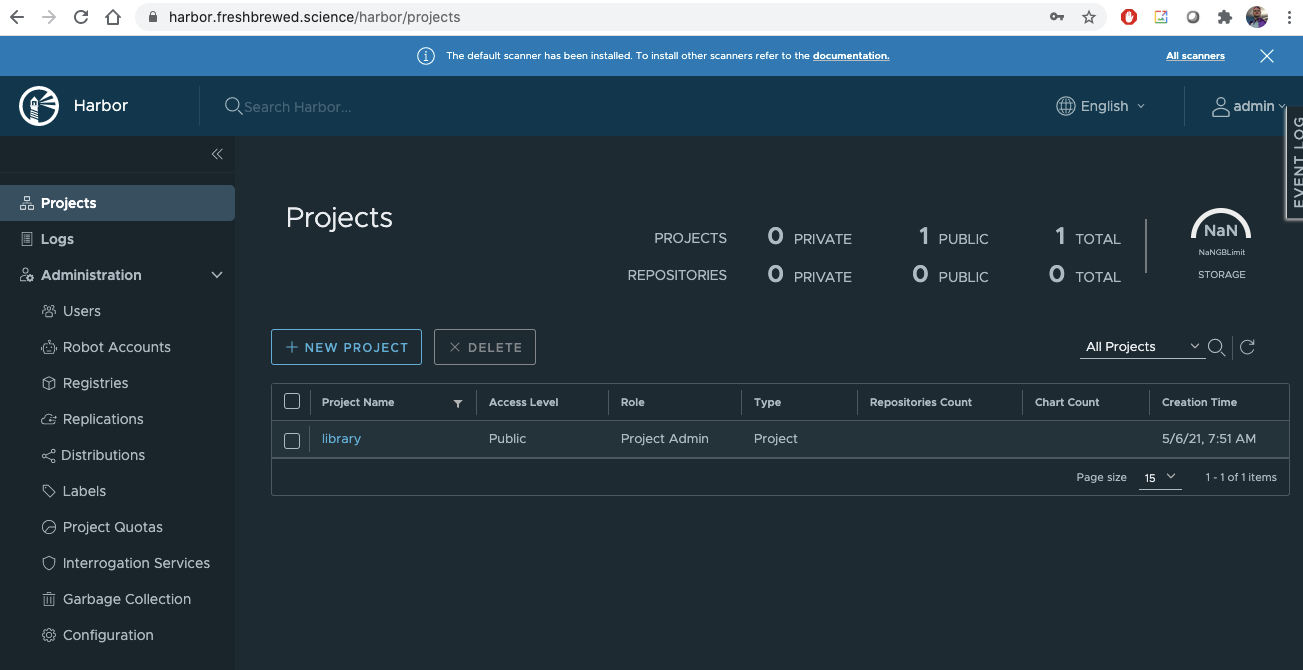

Using harbor

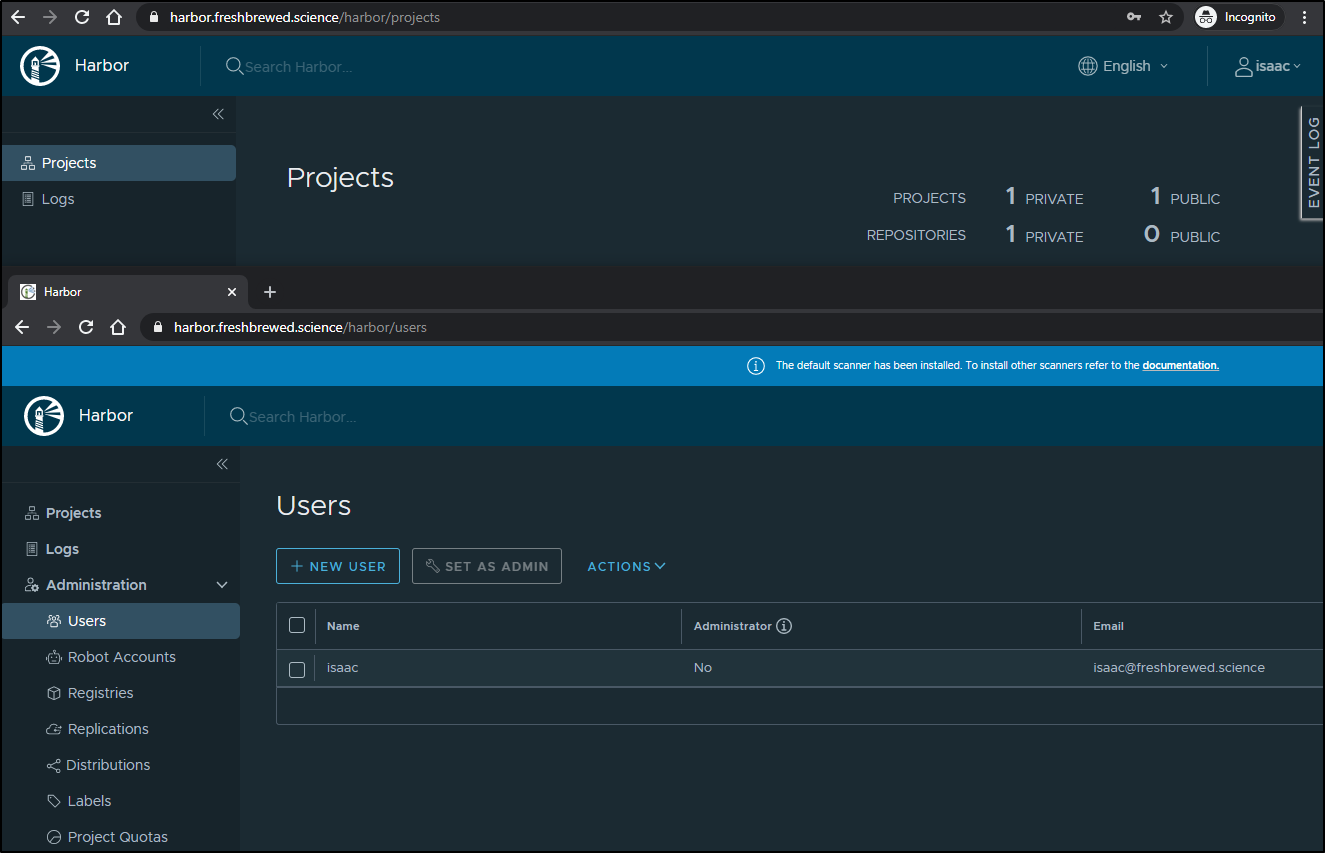

Once we login, we need to create a user that will push content into our registry.

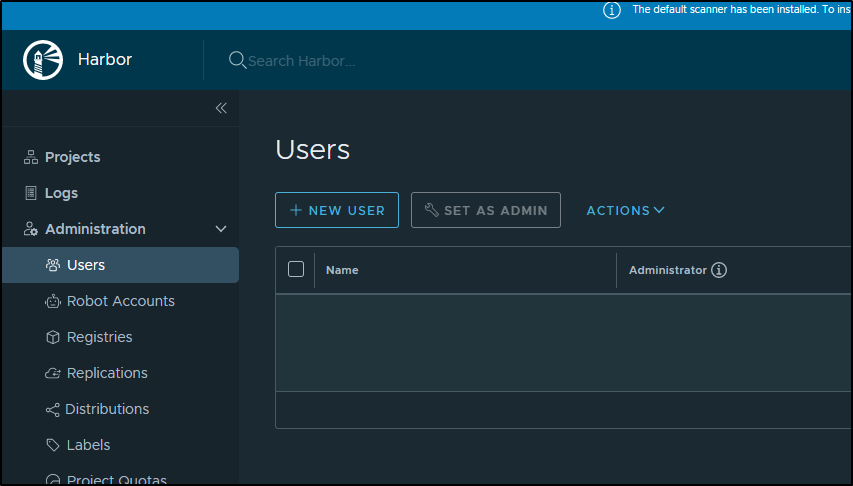

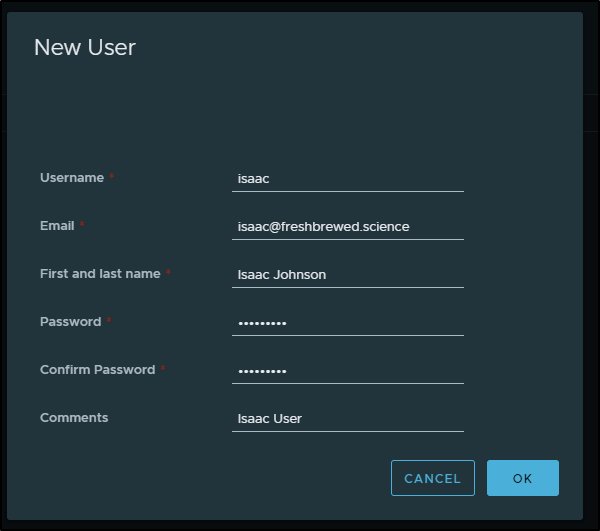

In Users (/harbor/users) create a new user:

We can just create a standard user:

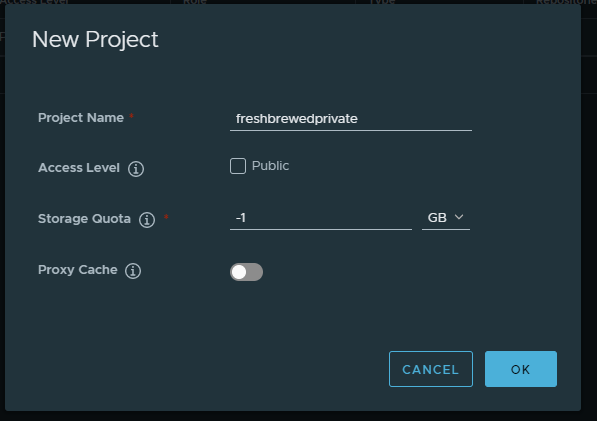

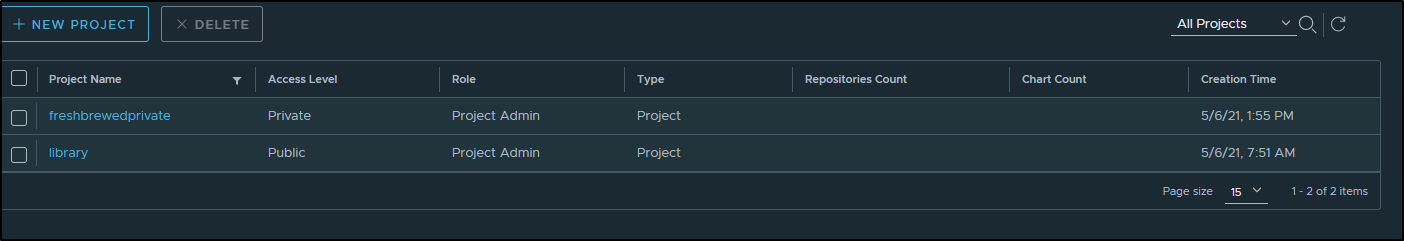

Next, let's create a project

and now we can see we have that

Testing Harbor

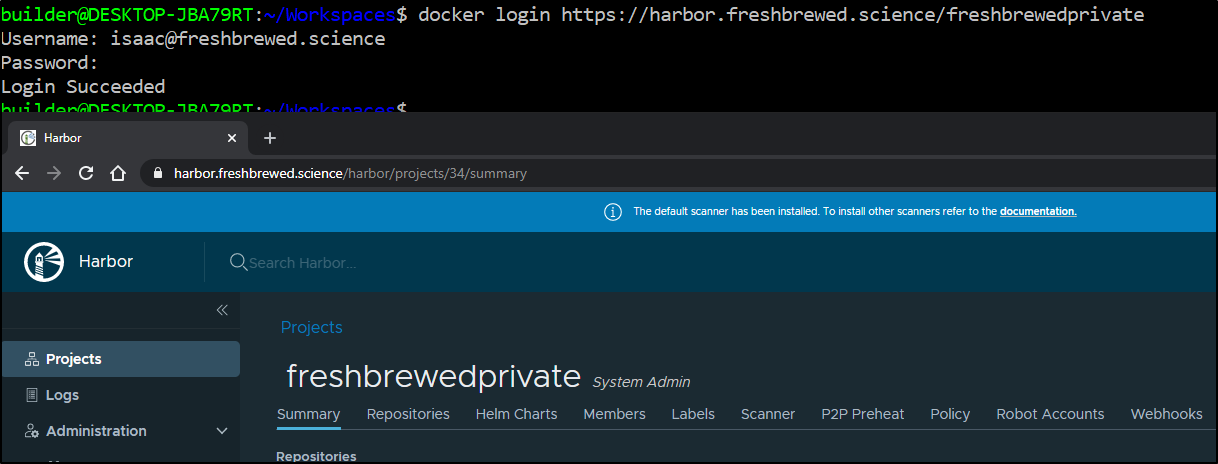

We can login with docker in a WSL command prompt

$ docker login https://harbor.freshbrewed.science/freshbrewedprivate

Username: isaac@freshbrewed.science

Password:

Login Succeeded

Note: You will need to log in to the project URL, not the root of Harbor, as in the command above.

Next, we can tag an image and push:

builder@DESKTOP-JBA79RT:~/Workspaces$ docker images | tail -n10

ijk8senv5cr.azurecr.io/freshbrewed/webapp latest 05bd0d1575ab 6 months ago 429MB

freshbrewed/haproxy 1.6.11 66ce07f6e5f6 6 months ago 207MB

freshbrewed/haproxy 1.6.11-20201029152918 66ce07f6e5f6 6 months ago 207MB

freshbrewed/haproxy latest 66ce07f6e5f6 6 months ago 207MB

<none> <none> 2fbb4b4d7f8d 6 months ago 106MB

127.0.0.1:5000/hello-world latest bf756fb1ae65 16 months ago 13.3kB

127.0.0.1:8080/hello-world latest bf756fb1ae65 16 months ago 13.3kB

hello-world latest bf756fb1ae65 16 months ago 13.3kB

localhost:5000/hello-world latest bf756fb1ae65 16 months ago 13.3kB

us.icr.io/freshbrewedcr/hw_repo 2 bf756fb1ae65 16 months ago 13.3kB

builder@DESKTOP-JBA79RT:~/Workspaces$ docker tag hello-world:latest harbor.freshbrewed.science/freshbrewedprivate/hello-world:latest

builder@DESKTOP-JBA79RT:~/Workspaces$ docker push harbor.freshbrewed.science/freshbrewedprivate/hello-world:latest

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/hello-world]

9c27e219663c: Preparing

unauthorized: unauthorized to access repository: freshbrewedprivate/hello-world, action: push: unauthorized to access repository: freshbrewedprivate/hello-world, action: push

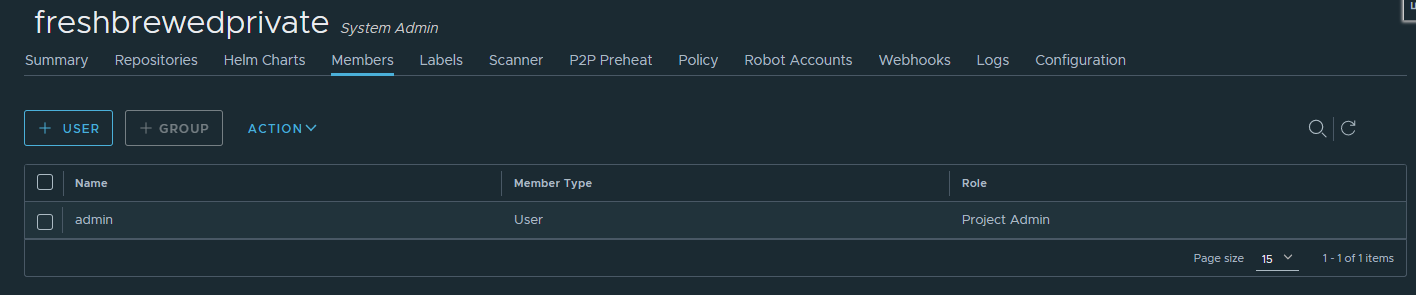

Ah, our user needs permission.

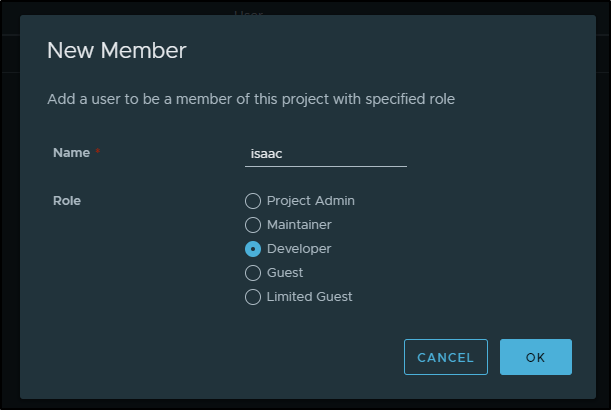

We can go to the members section of the project and add the user account:

then add our user as a developer

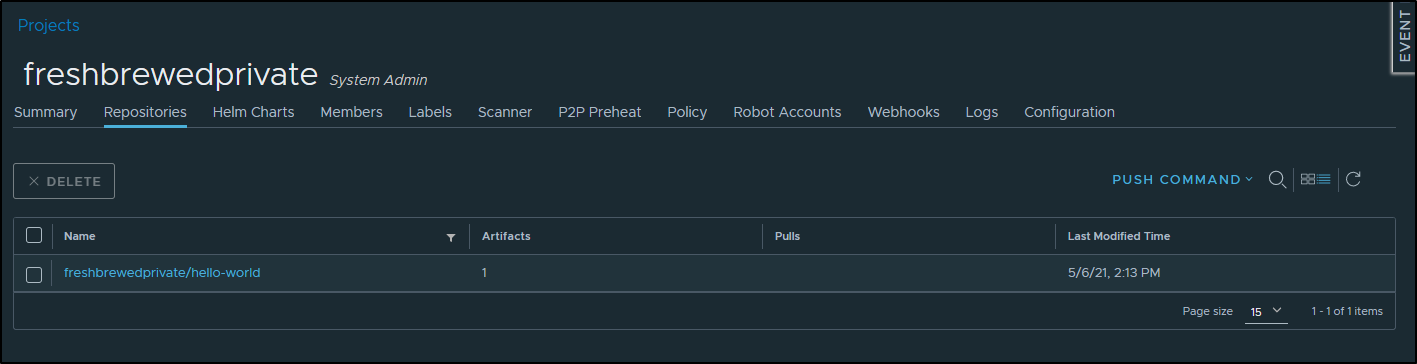

Now we can push

builder@DESKTOP-JBA79RT:~/Workspaces$ docker push harbor.freshbrewed.science/freshbrewedprivate/hello-world:latest

The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/hello-world]

9c27e219663c: Pushed

latest: digest: sha256:90659bf80b44ce6be8234e6ff90a1ac34acbeb826903b02cfa0da11c82cbc042 size: 525
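
The image reference used for tagging and pushing follows the pattern host/project/image:tag. A tiny sketch that builds it once so the tag and push commands cannot drift apart; harbor_ref is a hypothetical helper name:

```shell
#!/bin/sh
# harbor_ref HOST PROJECT IMAGE TAG: print the full image reference.
harbor_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

ref=$(harbor_ref harbor.freshbrewed.science freshbrewedprivate hello-world latest)
echo "$ref"

# Against a local Docker daemon:
# docker tag hello-world:latest "$ref" && docker push "$ref"
```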

And now we can see that reflected in Harbor

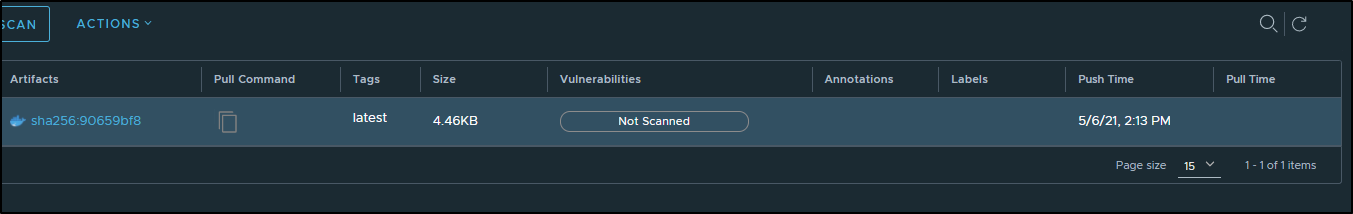

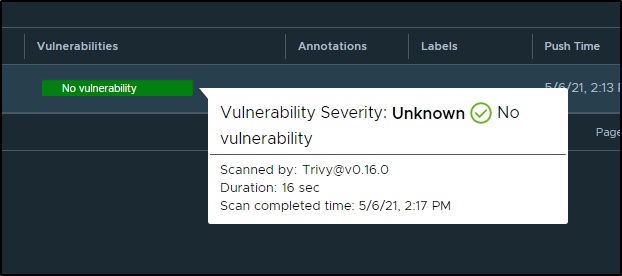

If we go to the hello-world repository now, we can scan the image we pushed:

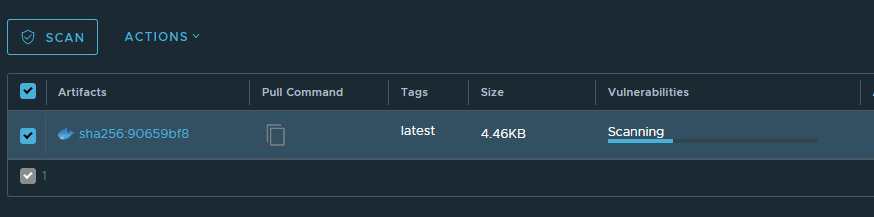

which has a nice scanning animation

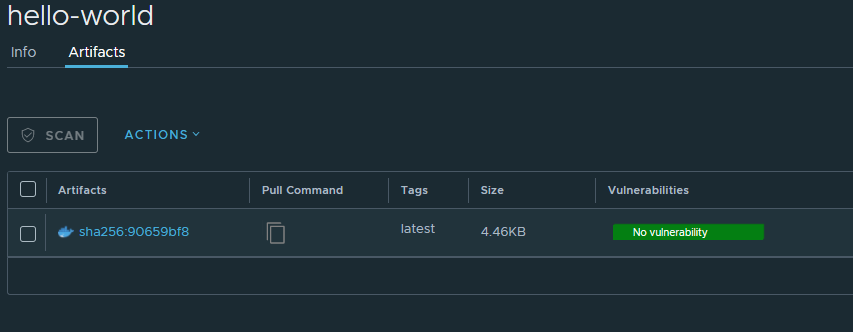

and then results:

If we wish to pull, we can copy the pull command from the pull command icon as well:

docker pull harbor.freshbrewed.science/freshbrewedprivate/hello-world@sha256:90659bf80b44ce6be8234e6ff90a1ac34acbeb826903b02cfa0da11c82cbc042

builder@DESKTOP-JBA79RT:~/Workspaces$ docker pull harbor.freshbrewed.science/freshbrewedprivate/hello-world@sha256:90659bf80b44ce6be8234e6ff90a1ac34acbeb826903b02cfa0da11c82cbc042

harbor.freshbrewed.science/freshbrewedprivate/hello-world@sha256:90659bf80b44ce6be8234e6ff90a1ac34acbeb826903b02cfa0da11c82cbc042: Pulling from freshbrewedprivate/hello-world

Digest: sha256:90659bf80b44ce6be8234e6ff90a1ac34acbeb826903b02cfa0da11c82cbc042

Status: Image is up to date for harbor.freshbrewed.science/freshbrewedprivate/hello-world@sha256:90659bf80b44ce6be8234e6ff90a1ac34acbeb826903b02cfa0da11c82cbc042

harbor.freshbrewed.science/freshbrewedprivate/hello-world@sha256:90659bf80b44ce6be8234e6ff90a1ac34acbeb826903b02cfa0da11c82cbc042

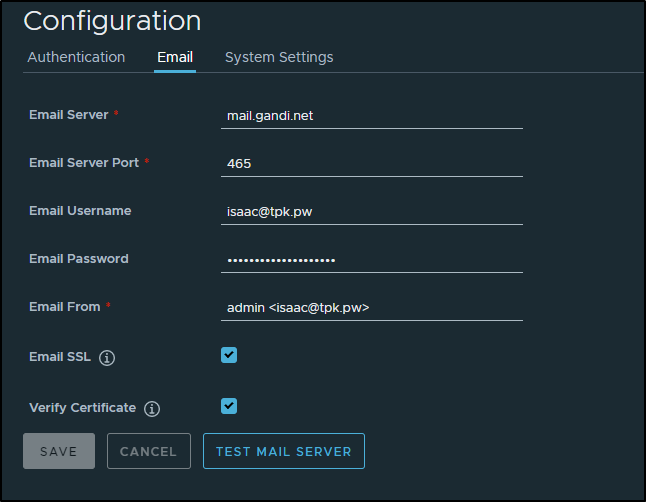

Also, you may wish to set up your outgoing mail server in Configuration if you want users to be able to reset their own passwords. Supposedly the mail server is used for that, but I have yet to see the feature actually work.

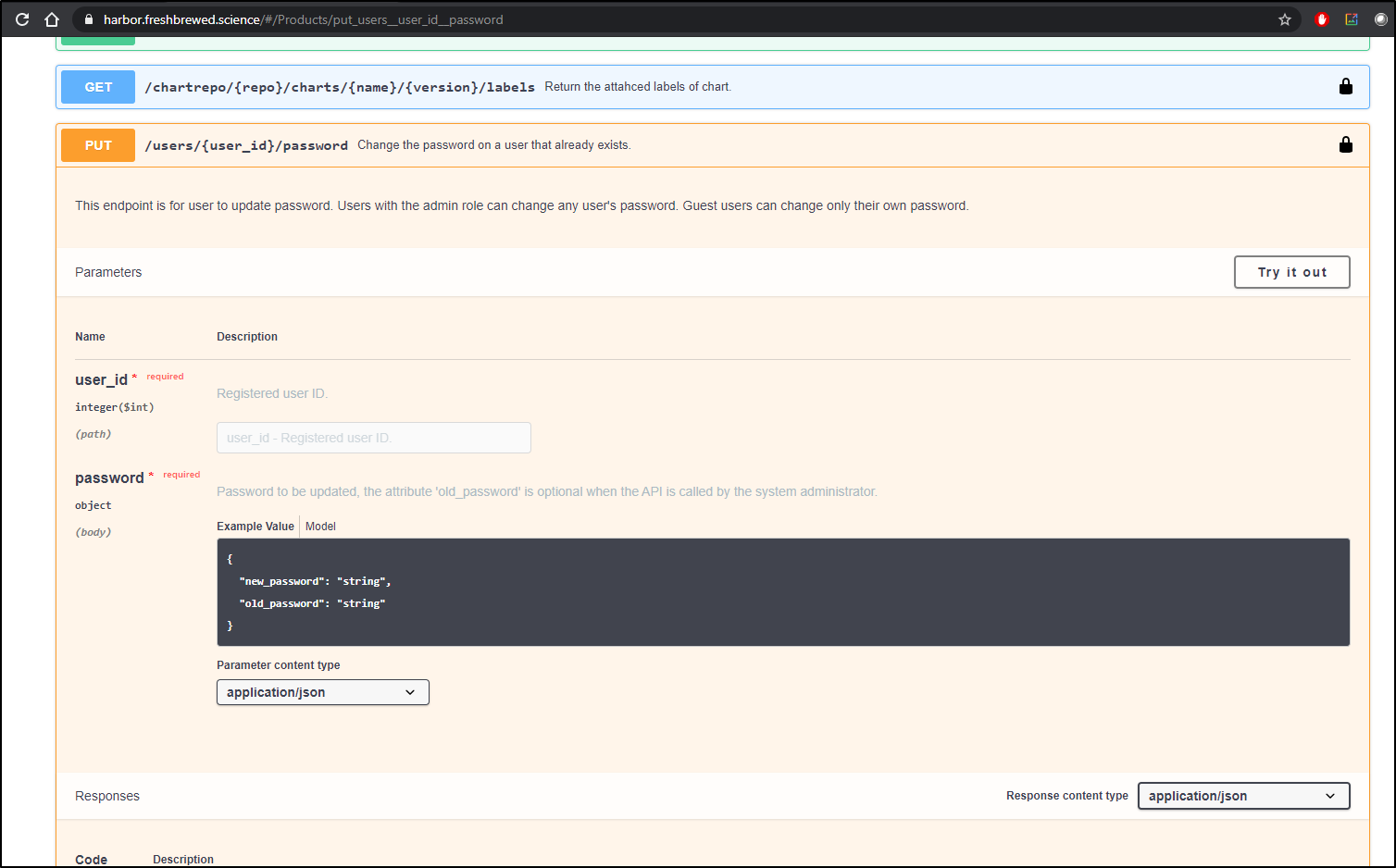

I've seen notes that /reset_password is a REST endpoint, but when I look at the 2.0 API Swagger, the only matching endpoint mirrors the UI, taking an old and a new password.

Users vs Admins

You can see how the view for users differs from admins, mostly around the whole "Administration" area

Summary

Harbor (goharbor.io) is a pretty nice, full-featured container registry. My issues installing it stemmed from its use of PostgreSQL, which did not like my NFS storage class. This likely would not affect a production instance and, moreover, the database can be externalized for a real production-level deployment.

Using a cluster of old Macs as a k3s cluster with local-path storage, it worked. It was slow, but it worked, and what I really wanted was a usable container store for on-prem work, which this satisfies.

Having user IDs and built-in container scanning with Trivy is a really great benefit.

We will see how this handles storage expansion, as I only saw about 5Gi allocated thus far for storage:

$ kubectl get pvc | grep harbor-registry

data-harbor-registry-harbor-redis-0 Bound pvc-61ddc767-92c4-4f1e-96c0-e86ce1d0d9ee 1Gi RWO local-path 24h

data-harbor-registry-harbor-trivy-0 Bound pvc-21362cc0-ee72-4c49-afbd-d93d432fb76e 5Gi RWO local-path 24h

database-data-harbor-registry-harbor-database-0 Bound pvc-27a306fc-0136-42fc-a8ac-4e868d0d2edf 1Gi RWO local-path 24h

harbor-registry-harbor-registry Bound pvc-3405edb3-1d15-4aba-96eb-52c3297a8e53 5Gi RWO local-path 24h

harbor-registry-harbor-jobservice Bound pvc-0bf9d412-8485-4039-88be-54bf69b3efc7 1Gi RWO local-path 24h

harbor-registry-harbor-chartmuseum Bound pvc-4d993758-d4e9-49d1-a7cf-4f15c42623b6 5Gi RWO local-path 24h
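
To see the total claimed across all Harbor volumes rather than reading the rows one by one, the capacity column can be summed. A sketch, assuming every capacity is expressed in Gi as in the listing above; total_gi is a hypothetical helper name:

```shell
#!/bin/sh
# total_gi: read "kubectl get pvc --no-headers" output on stdin and sum the
# CAPACITY column (column 4), assuming every value is expressed in Gi.
total_gi() {
  awk '{ sub(/Gi$/, "", $4); total += $4 } END { print total "Gi" }'
}

# Demo on the six claims listed above (volume IDs shortened).
printf '%s\n' \
  'data-harbor-registry-harbor-redis-0 Bound pvc-61ddc767 1Gi RWO local-path 24h' \
  'data-harbor-registry-harbor-trivy-0 Bound pvc-21362cc0 5Gi RWO local-path 24h' \
  'database-data-harbor-registry-harbor-database-0 Bound pvc-27a306fc 1Gi RWO local-path 24h' \
  'harbor-registry-harbor-registry Bound pvc-3405edb3 5Gi RWO local-path 24h' \
  'harbor-registry-harbor-jobservice Bound pvc-0bf9d412 1Gi RWO local-path 24h' \
  'harbor-registry-harbor-chartmuseum Bound pvc-4d993758 5Gi RWO local-path 24h' \
  | total_gi
# prints 18Gi

# Against a live cluster:
# kubectl get pvc --no-headers | grep harbor-registry | total_gi
```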

Harbor is a nice open-source offering, albeit a bit heavy for small team needs.