In our last blog entry I covered creating AzDO work items from a static feedback form and then using AWS SES and Azure Logic Apps to send email. However, we may want to ingest work from email. What if the external user who requested content has more feedback? How can we process that?

We'll assume you've covered the basics of SES setup from the last blog and jump straight into SES rule sets.

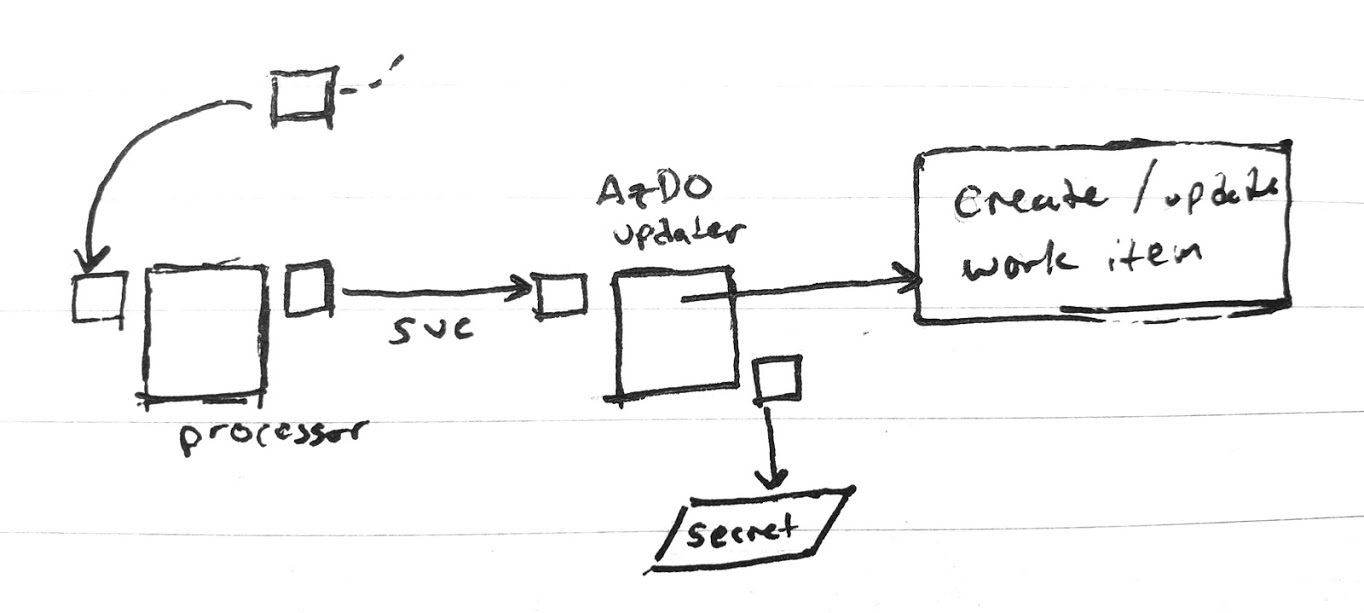

Design

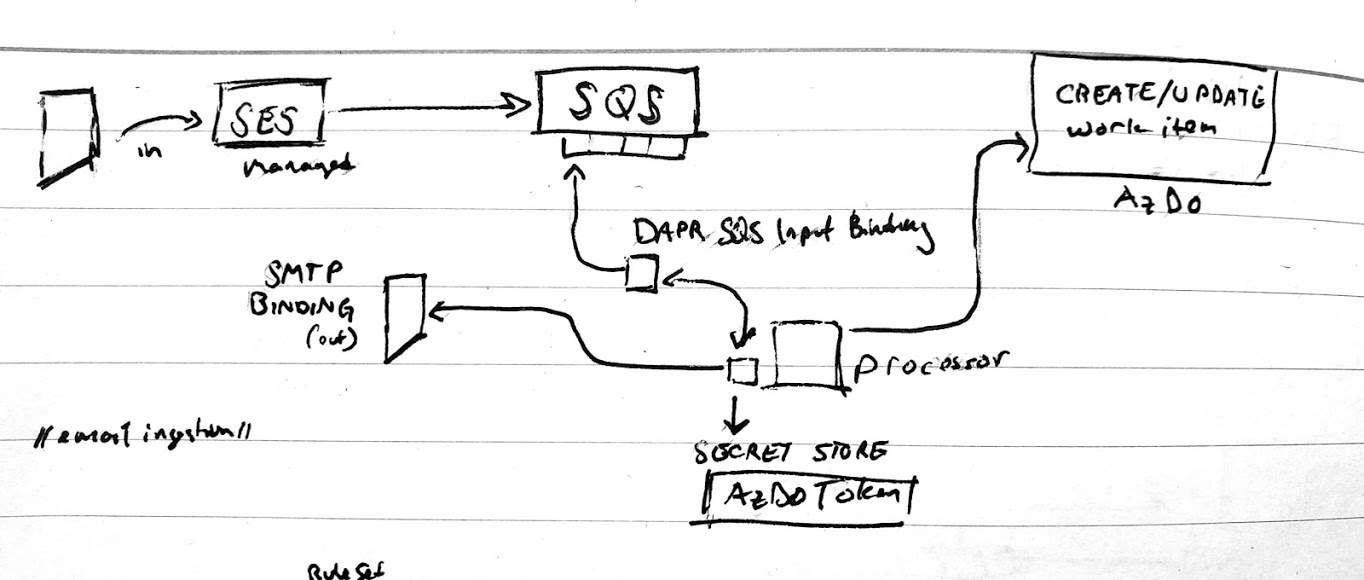

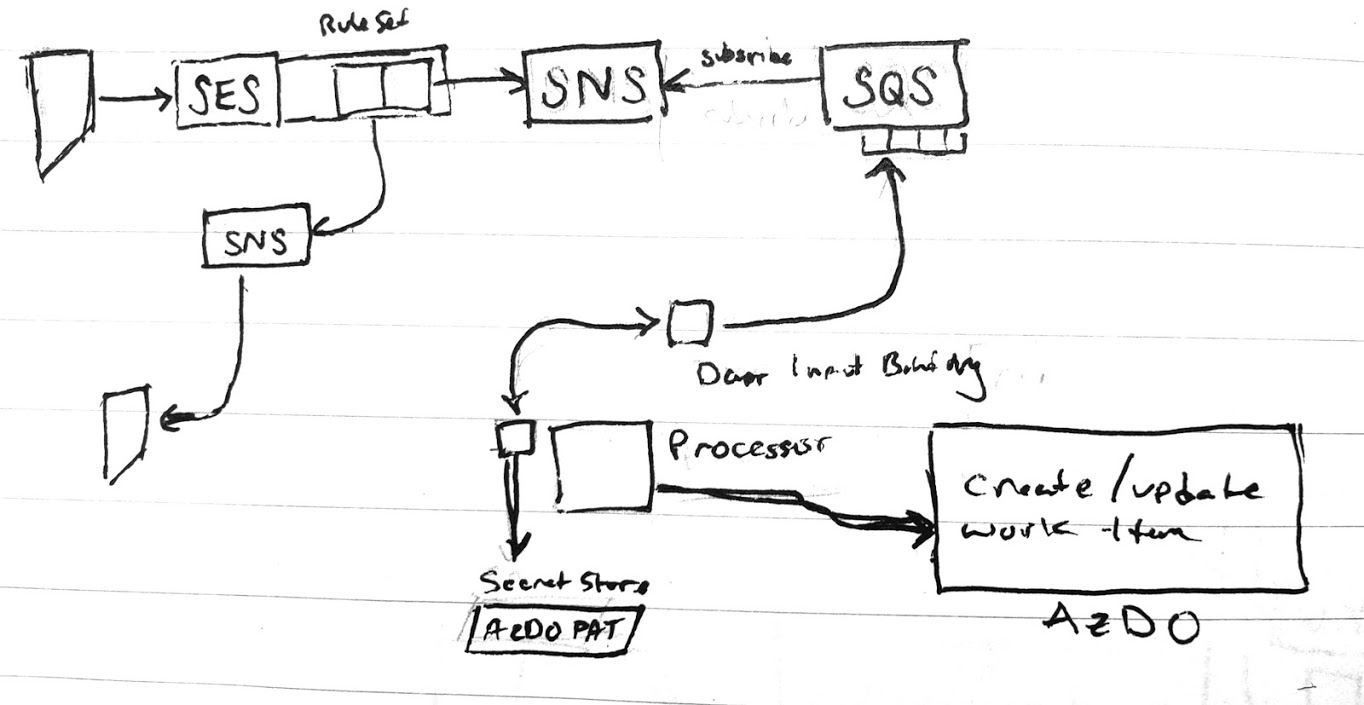

I envisioned it going one of two ways.

[1] We could trigger SQS from SES and put most of the logic in a single processor, triggered via a Dapr binding,

or [2] put SNS in the middle to handle notifications, so the processor just focuses on updating AzDO.

As I later realized, SES cannot write to SQS directly. Rather, SES can publish to an SNS topic, and an SQS queue can subscribe to that topic, which meant I needed to pursue option 2.

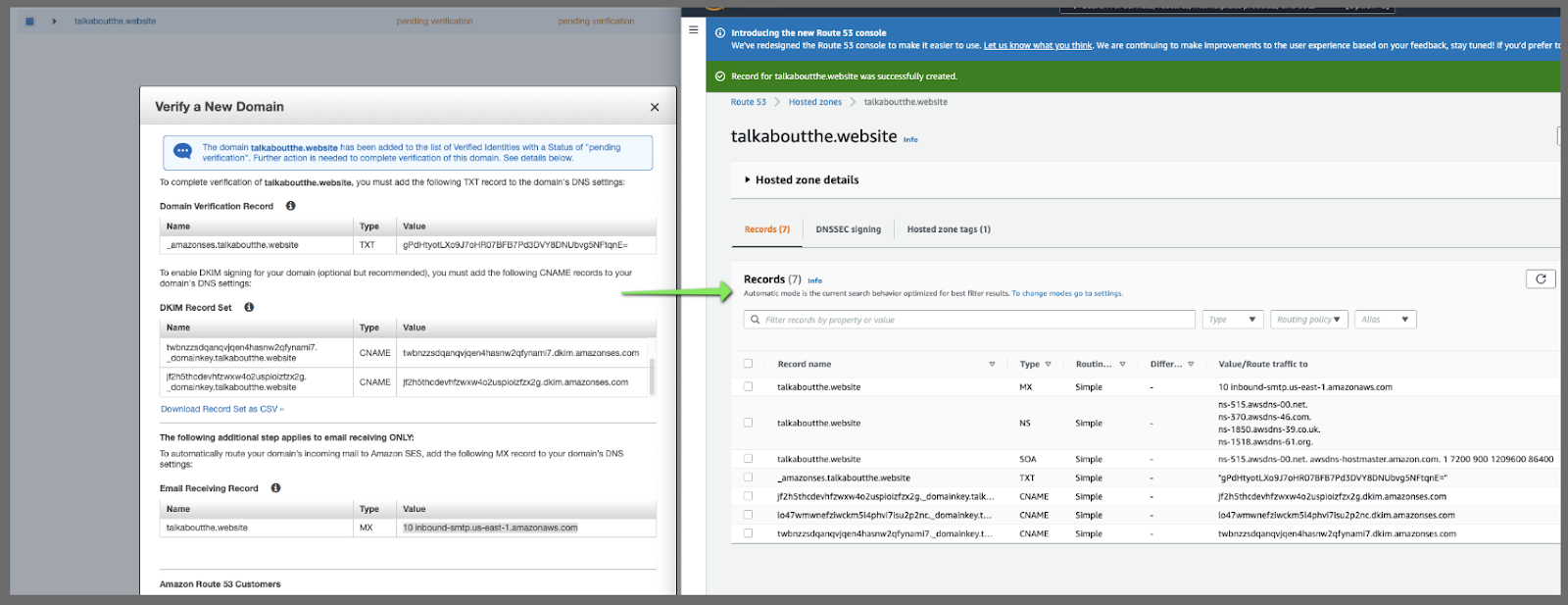

AWS SES Verified Domains

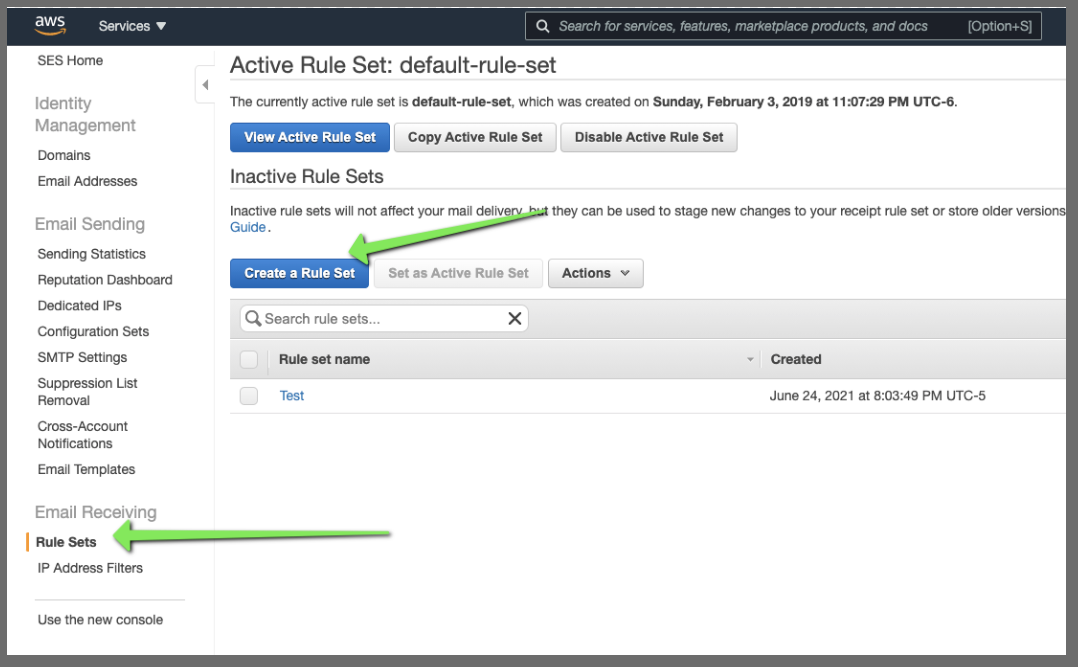

We can go to SES and create a rule set on verified domains to ingest and process emails.

From Rule Sets, we can set up the ingestion rule.

However, this assumes you route your email to AWS SES; namely, that an MX record pointing at SES exists.

We can follow this guide, but essentially I would need to set the MX record to inbound-smtp.us-east-1.amazonaws.com. So even though I set up a rule to process feedback, I can't selectively forward just those emails to SES, because I use Gandi for email on that domain.
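For reference, the MX change that guide describes can be written as a Route 53 change batch. This is a minimal sketch that assumes a Route 53-hosted zone, which is what the fresh domain below ends up using. `buildSesMxChangeBatch`, `example.com`, the TTL of 300 and the MX priority of 10 are my own placeholders. The printed JSON has the shape that `aws route53 change-resource-record-sets --hosted-zone-id <zone id> --change-batch file://mx.json` accepts.

```javascript
// Sketch: a Route 53 ChangeBatch that points a domain's MX record at the
// SES inbound SMTP endpoint for a region. All values are placeholders.
function buildSesMxChangeBatch(domain, region = 'us-east-1', ttl = 300) {
  return {
    Comment: `Route inbound mail for ${domain} to Amazon SES`,
    Changes: [
      {
        Action: 'UPSERT', // create the record, or replace it if it already exists
        ResourceRecordSet: {
          Name: domain,
          Type: 'MX',
          TTL: ttl,
          // MX values are "<priority> <mail server host>"
          ResourceRecords: [{ Value: `10 inbound-smtp.${region}.amazonaws.com` }],
        },
      },
    ],
  };
}

console.log(JSON.stringify(buildSesMxChangeBatch('example.com'), null, 2));
```

An MX record applies to the whole domain, which is why this can't be done selectively for a domain whose mail is already hosted elsewhere.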

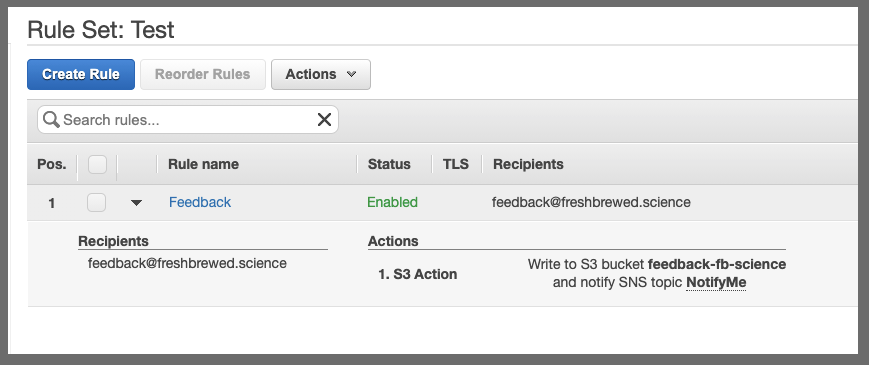

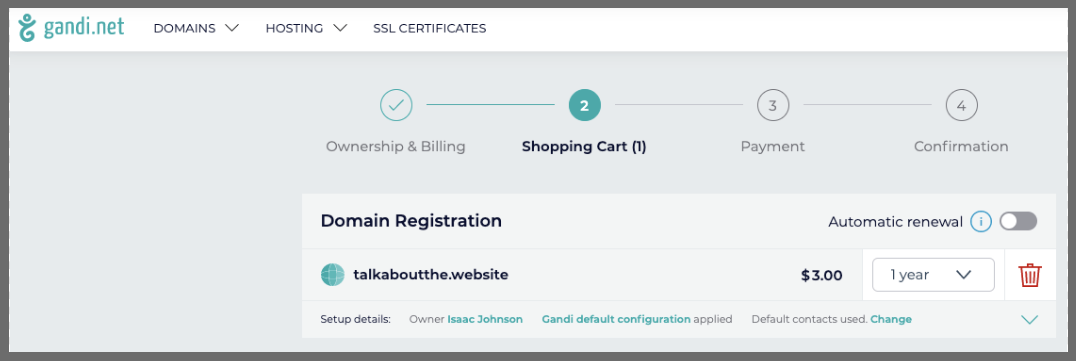

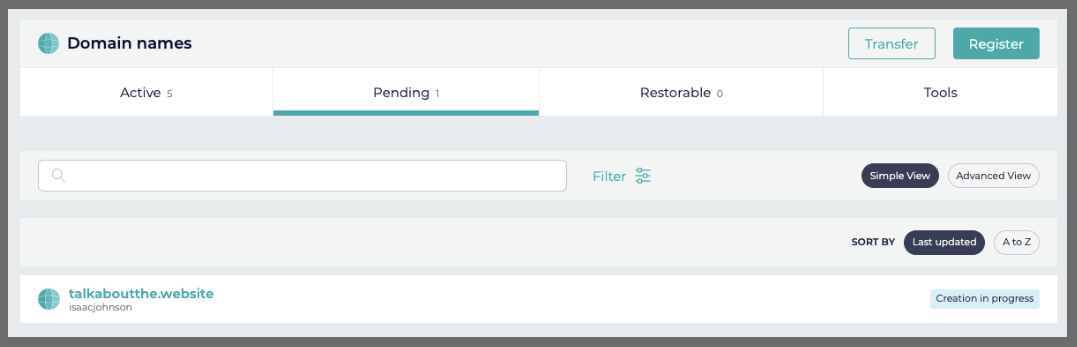

Setting up a fresh domain for emails

Let's purchase a fresh domain for this activity. I still prefer Gandi.net for this kind of thing.

Once the registration is out of the pending state:

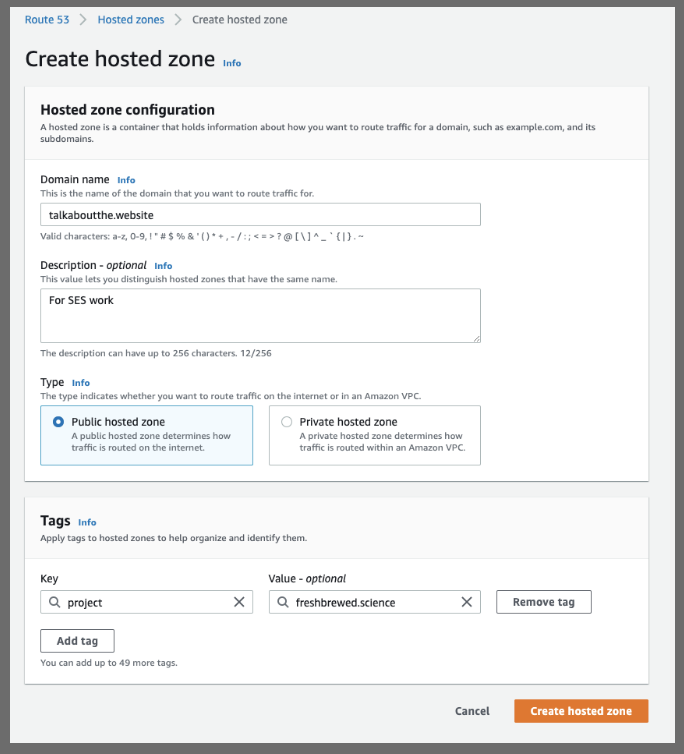

We can route this domain to AWS by creating a hosted zone in Route 53.

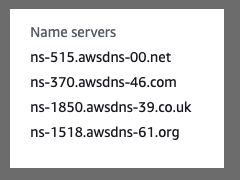

Once it's created, we see the name servers (NS) we'll need to set at Gandi,

which we can then change at Gandi to point to AWS.

Note: this can take 12-24 hours to propagate.
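While waiting on propagation, it can help to confirm that the registrar and the hosted zone agree. Below is a minimal sketch of that comparison. The helper names and name-server values are hypothetical. DNS names are case-insensitive and may carry a trailing dot, so the sketch normalizes them before comparing.

```javascript
// Sketch: check that the NS set at the registrar matches the NS Route 53
// assigned to the hosted zone, ignoring case and trailing dots.
function normalizeNs(name) {
  return name.trim().toLowerCase().replace(/\.$/, '');
}

function delegationMatches(registrarNs, hostedZoneNs) {
  const a = new Set(registrarNs.map(normalizeNs));
  const b = new Set(hostedZoneNs.map(normalizeNs));
  if (a.size !== b.size) return false;
  for (const ns of a) {
    if (!b.has(ns)) return false;
  }
  return true;
}

// Hypothetical values for illustration only
const fromRoute53 = ['ns-1.awsdns-01.org.', 'ns-2.awsdns-02.com.'];
const atGandi = ['NS-2.awsdns-02.com', 'ns-1.awsdns-01.org'];
console.log(delegationMatches(atGandi, fromRoute53)); // true
```

Once propagation finishes, Node's `require('dns').promises.resolveNs(domain)` returns the NS records the world currently sees. That result could be passed in as the registrar side.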

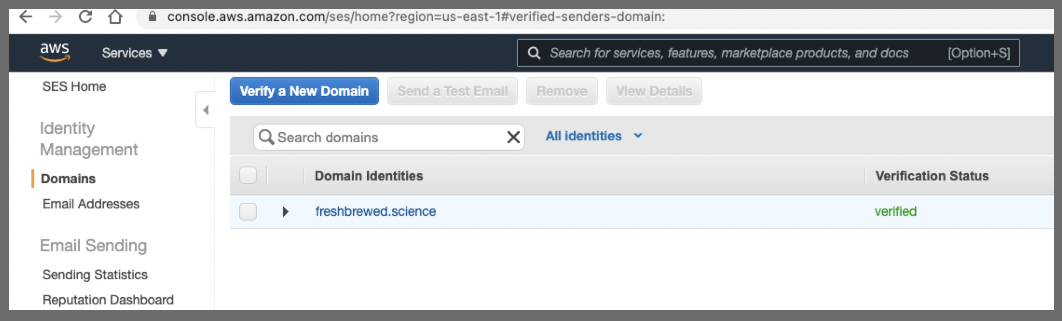

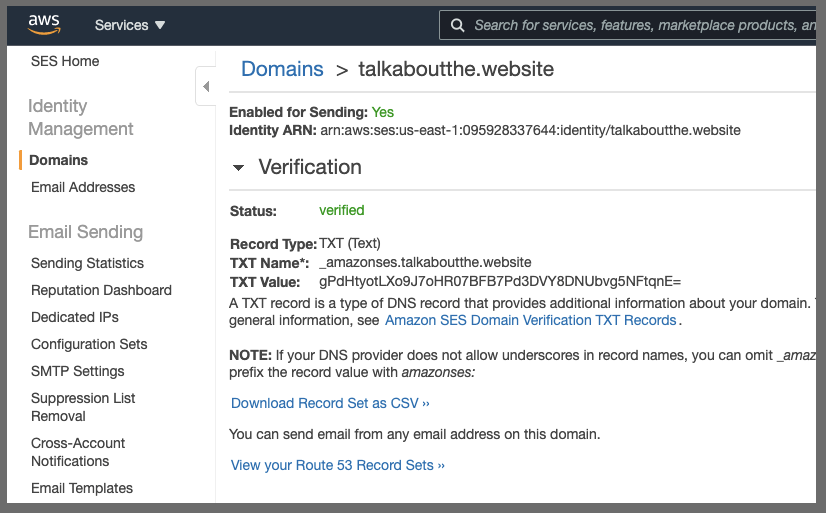

SES Domain Verification

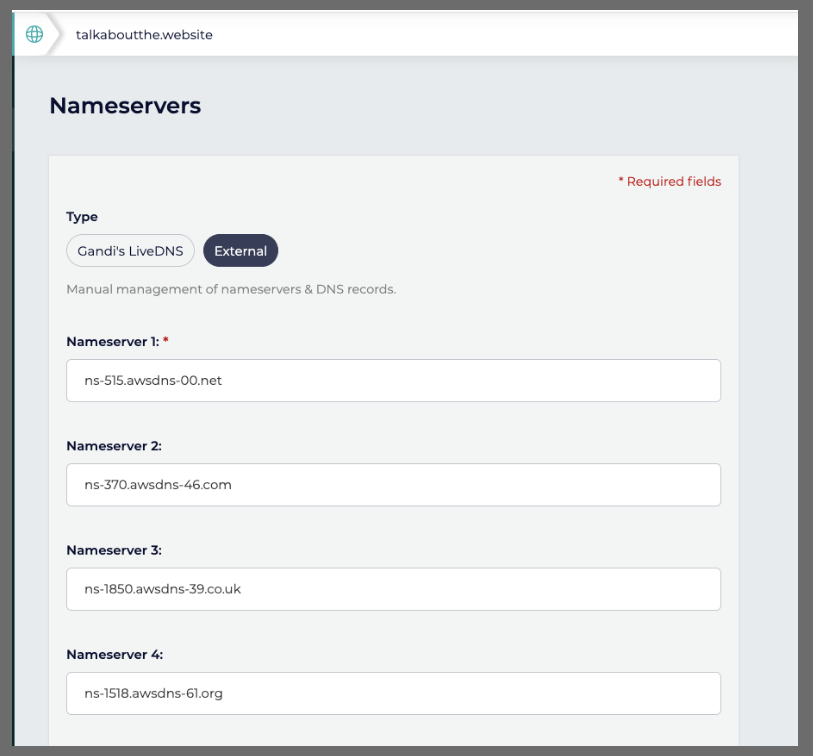

Next, in SES, we can verify the domain.

We will want to update Route 53 with these values. You can do it manually or click the "use Route53" button to automate it.

This will then show verified:
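The Route 53 button writes these records for you. For reference, a classic (v1) SES domain identity asks for records that follow a fixed pattern: a `_amazonses` TXT record that proves ownership, plus Easy DKIM CNAMEs. The sketch below only builds that record list. `sesVerificationRecords` and the token values are hypothetical placeholders, and the newer SES console may present verification differently.

```javascript
// Sketch of the DNS records a classic (v1) SES domain identity asks for.
// Tokens are placeholders; real ones come from the console or from the
// VerifyDomainIdentity (VerificationToken) and VerifyDomainDkim (DkimTokens)
// API responses.
function sesVerificationRecords(domain, verificationToken, dkimTokens) {
  const records = [
    // Domain ownership proof. Route 53 expects TXT values wrapped in double quotes.
    { name: `_amazonses.${domain}`, type: 'TXT', value: verificationToken },
  ];
  // Easy DKIM: one CNAME per token (SES normally returns three)
  for (const token of dkimTokens) {
    records.push({
      name: `${token}._domainkey.${domain}`,
      type: 'CNAME',
      value: `${token}.dkim.amazonses.com`,
    });
  }
  return records;
}

console.table(sesVerificationRecords('example.com', 'placeholderToken=', ['tokA', 'tokB', 'tokC']));
```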

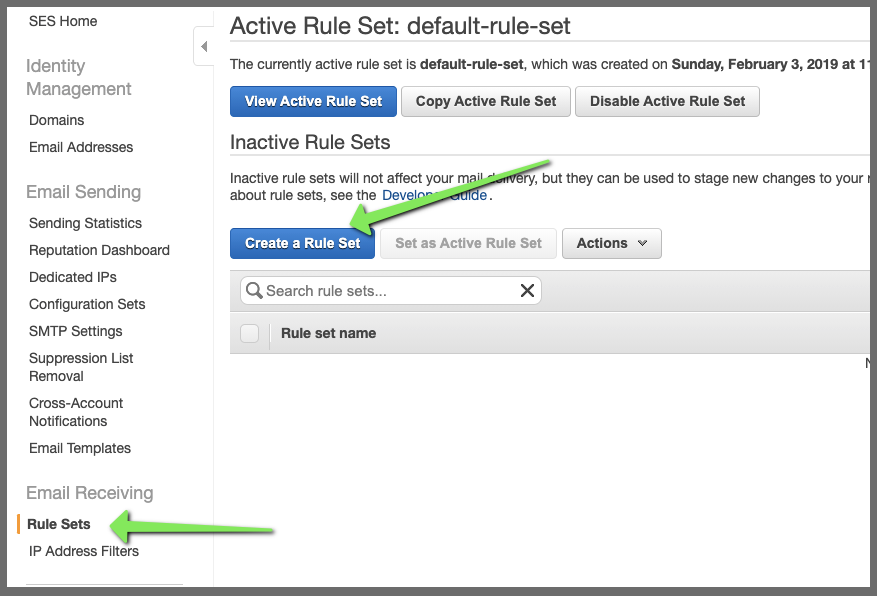

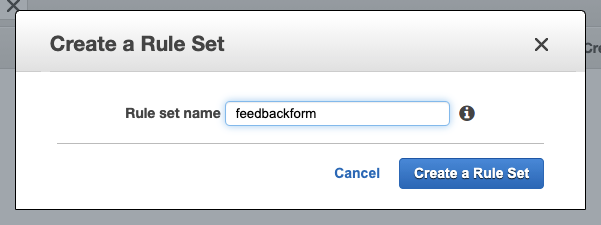

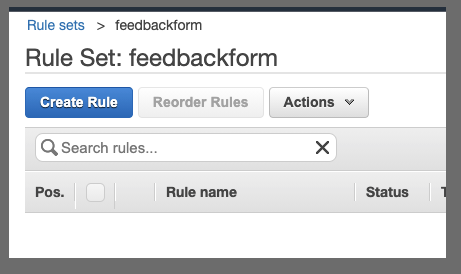

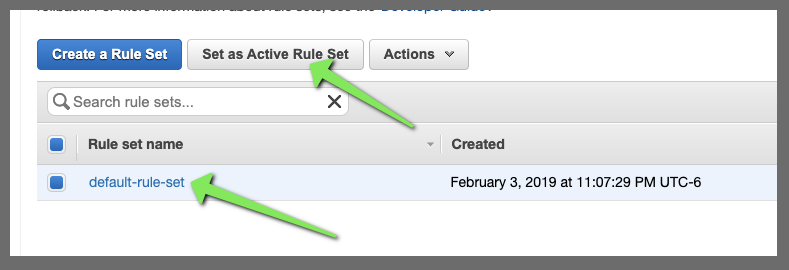

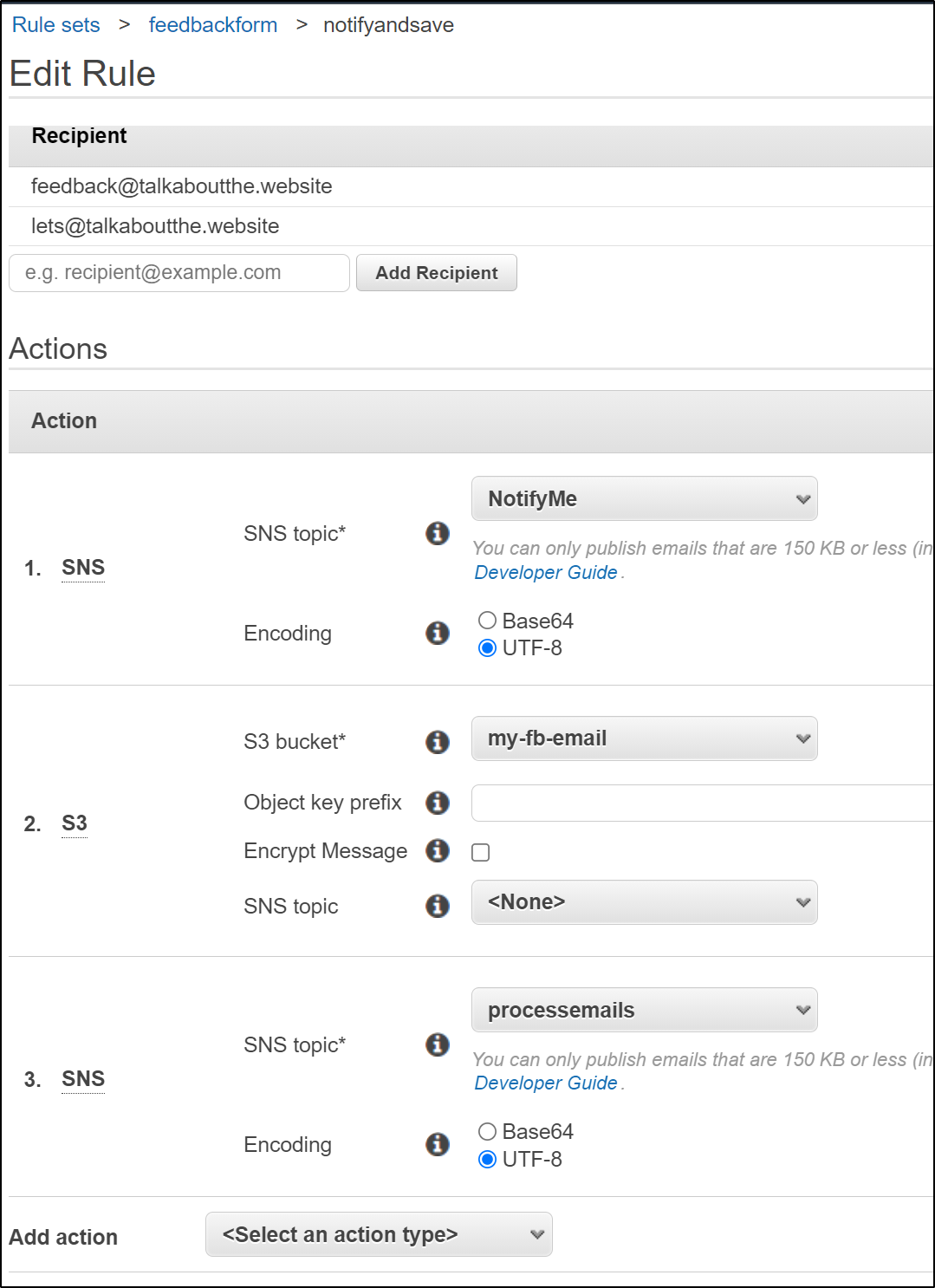

Creating a Rule Set

Next, let's create a rule set:

Give it a name,

then click on the rule set to create a rule.

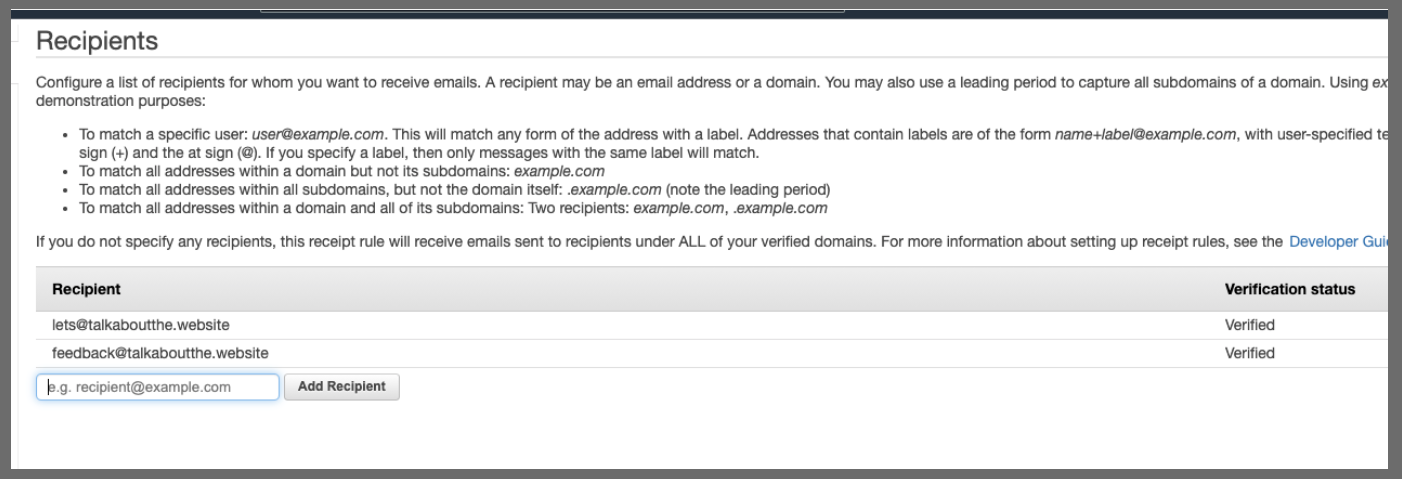

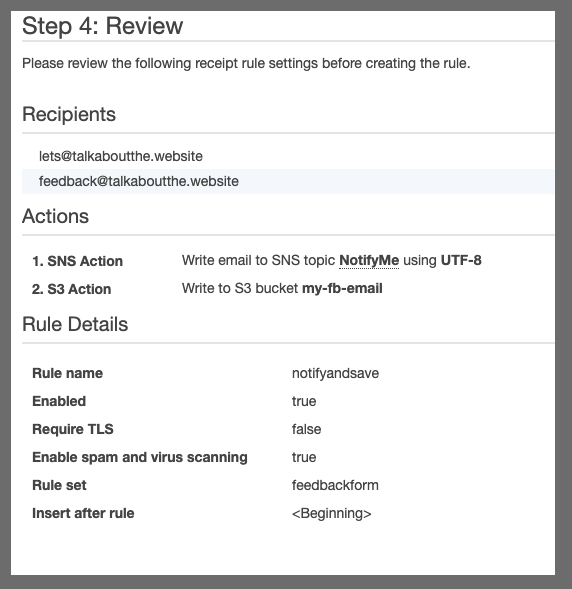

Next, add some recipient email addresses.

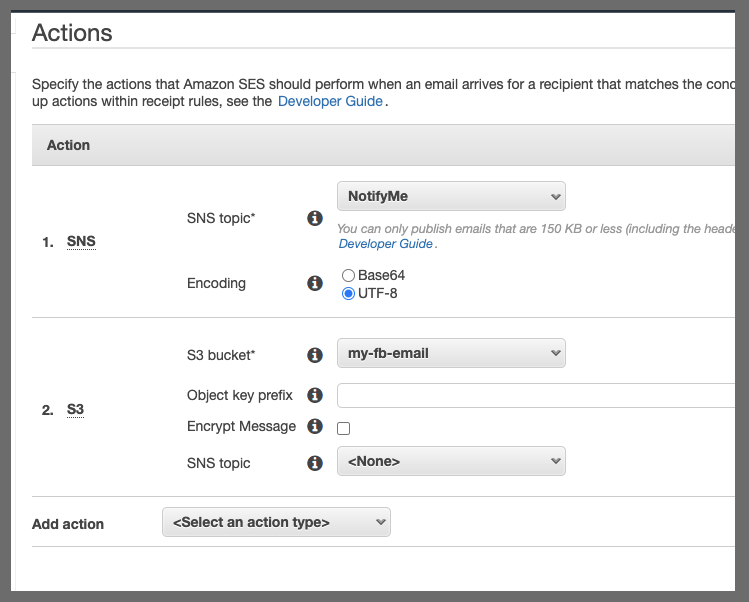

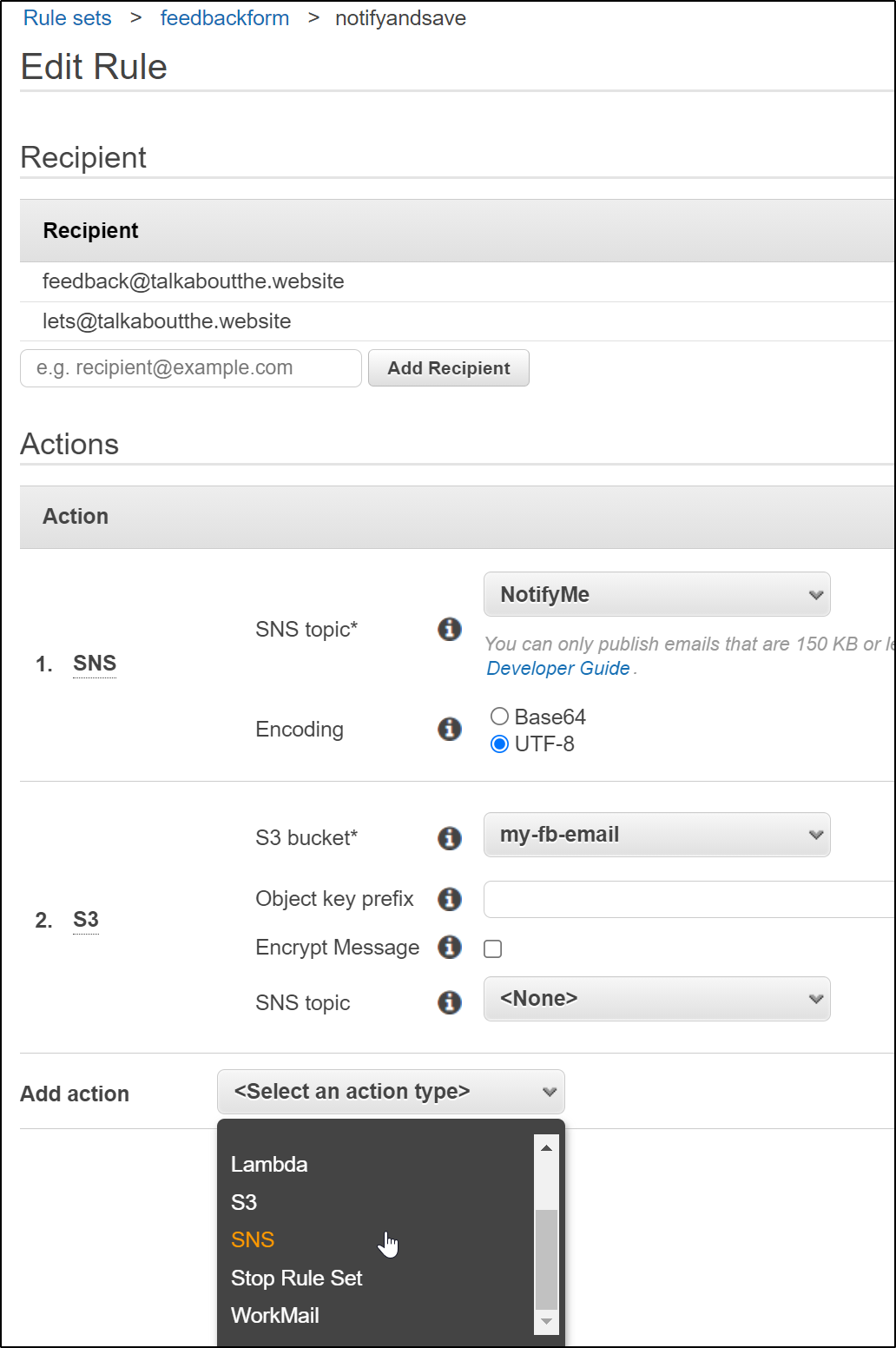

Next, we specify our actions. We can choose WorkMail, Lambda, S3, SNS, Bounce, or Add Header.

Here we are going to publish to an SNS topic (one that emails me) and then store the message in an S3 bucket.

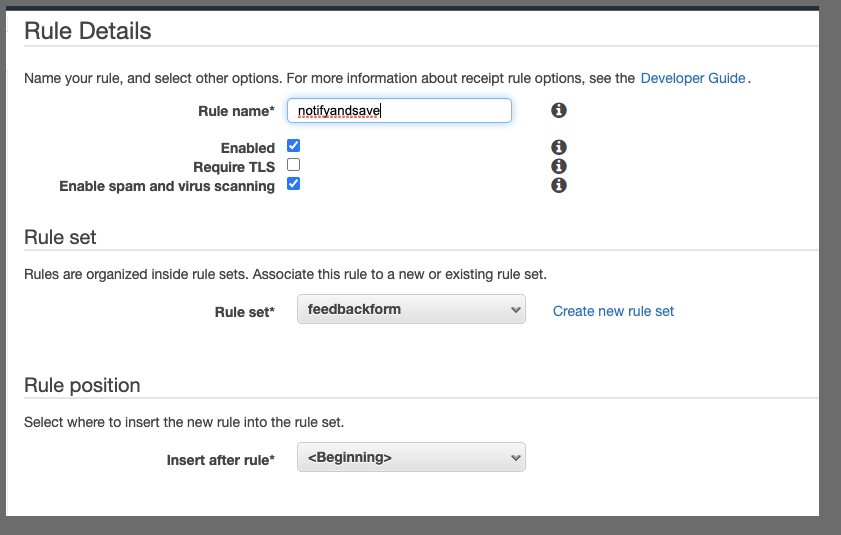

Then give the rule a name and choose where it sits in the rule set's order.

Review and save.

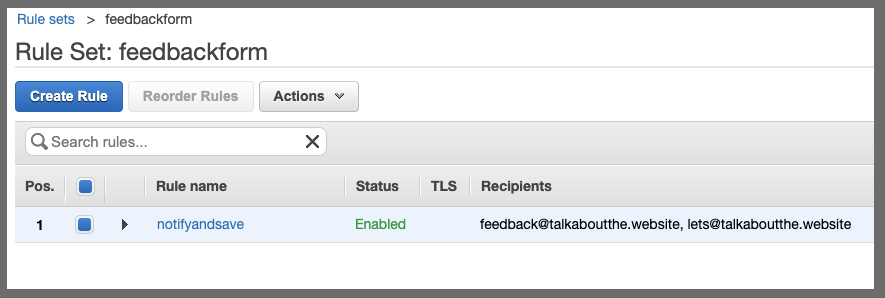

Lastly, we see the rule is enabled.
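The console steps above map onto the SES v1 `CreateReceiptRule` API. Below is a minimal sketch of the request parameters for a rule with these two actions. The rule set, rule, recipient, topic and bucket names are hypothetical placeholders. Actions run in the order they are listed. The optional `After` parameter places the new rule after an existing rule, which is the "where it sits" choice from the wizard.

```javascript
// Sketch of CreateReceiptRule parameters (SES v1 API) with the two actions
// used here: publish to SNS, then store the raw message in S3.
function buildReceiptRule({ ruleSetName, ruleName, recipients, topicArn, bucketName, after }) {
  const params = {
    RuleSetName: ruleSetName,
    Rule: {
      Name: ruleName,
      Enabled: true,
      ScanEnabled: true, // spam and virus scanning
      Recipients: recipients,
      Actions: [
        { SNSAction: { TopicArn: topicArn, Encoding: 'UTF-8' } },
        { S3Action: { BucketName: bucketName, ObjectKeyPrefix: 'incoming/' } },
      ],
    },
  };
  // Position relative to an existing rule in the same rule set
  if (after) params.After = after;
  return params;
}

const params = buildReceiptRule({
  ruleSetName: 'my-rule-set',
  ruleName: 'feedback-to-sns-and-s3',
  recipients: ['feedback@example.com'],
  topicArn: 'arn:aws:sns:us-east-1:123456789012:NotifyMe',
  bucketName: 'my-inbound-mail-bucket',
});
console.log(JSON.stringify(params, null, 2));
```

The same JSON could be fed to `aws ses create-receipt-rule --cli-input-json`. Creating the rule does not make its rule set active; that is a separate `SetActiveReceiptRuleSet` call, as the verification step shows.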

Verification

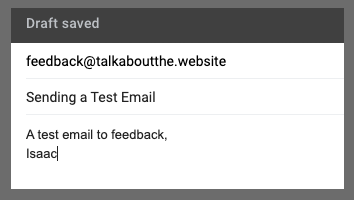

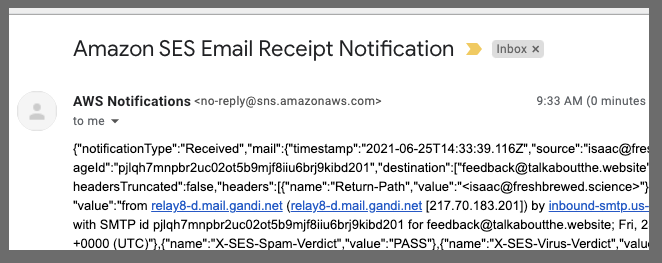

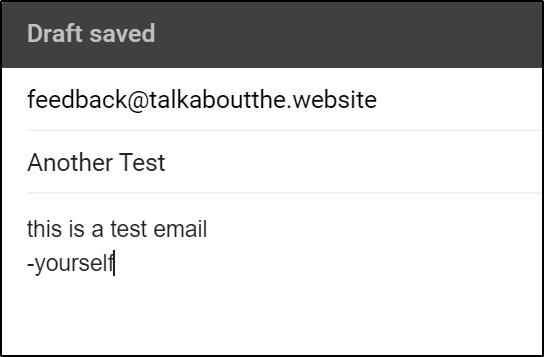

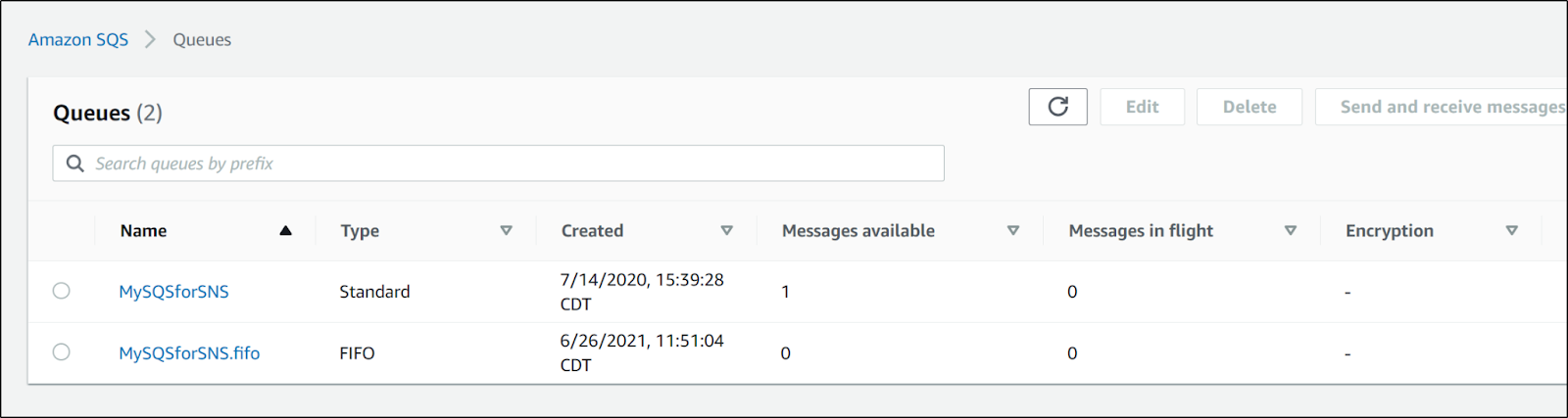

I'll send a quick test email

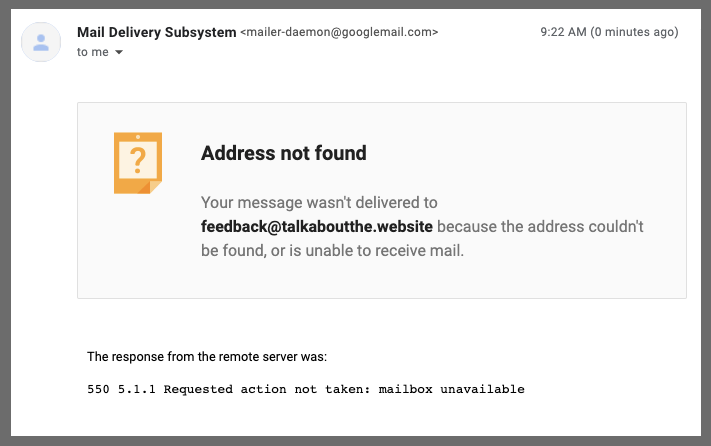

Doing this right away generated an error.

I later realized you need to set the rule set as ACTIVE before it is used.

Once activated, it worked:

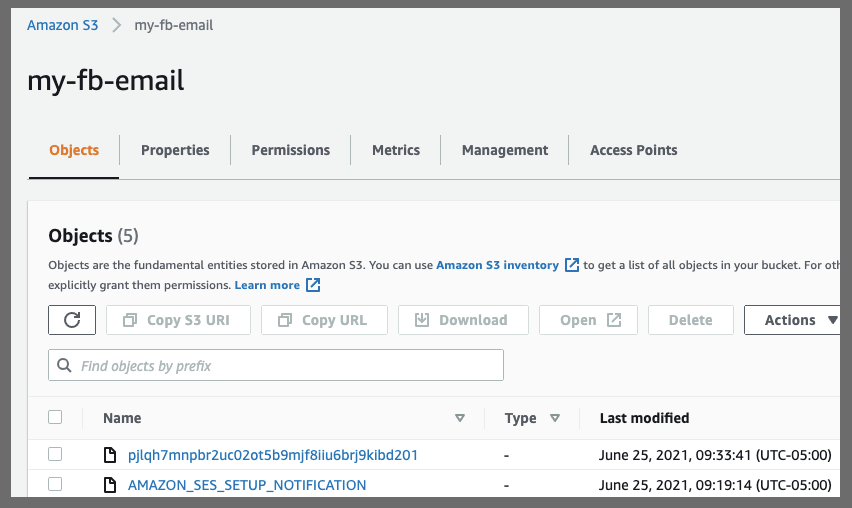

and I can see the S3 bucket was updated:

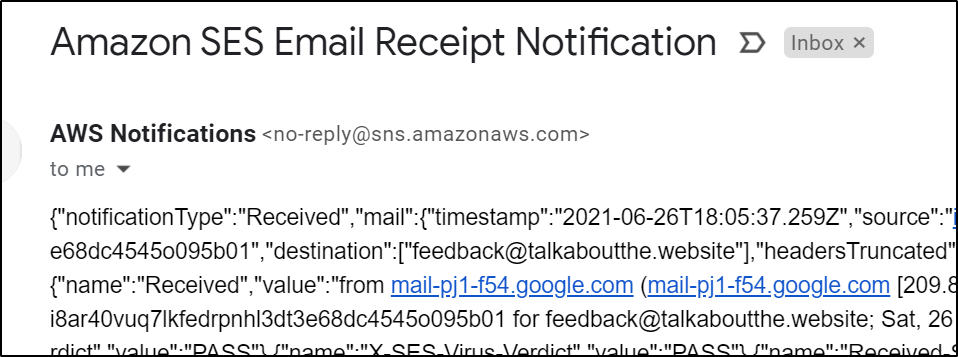

SNS to SQS

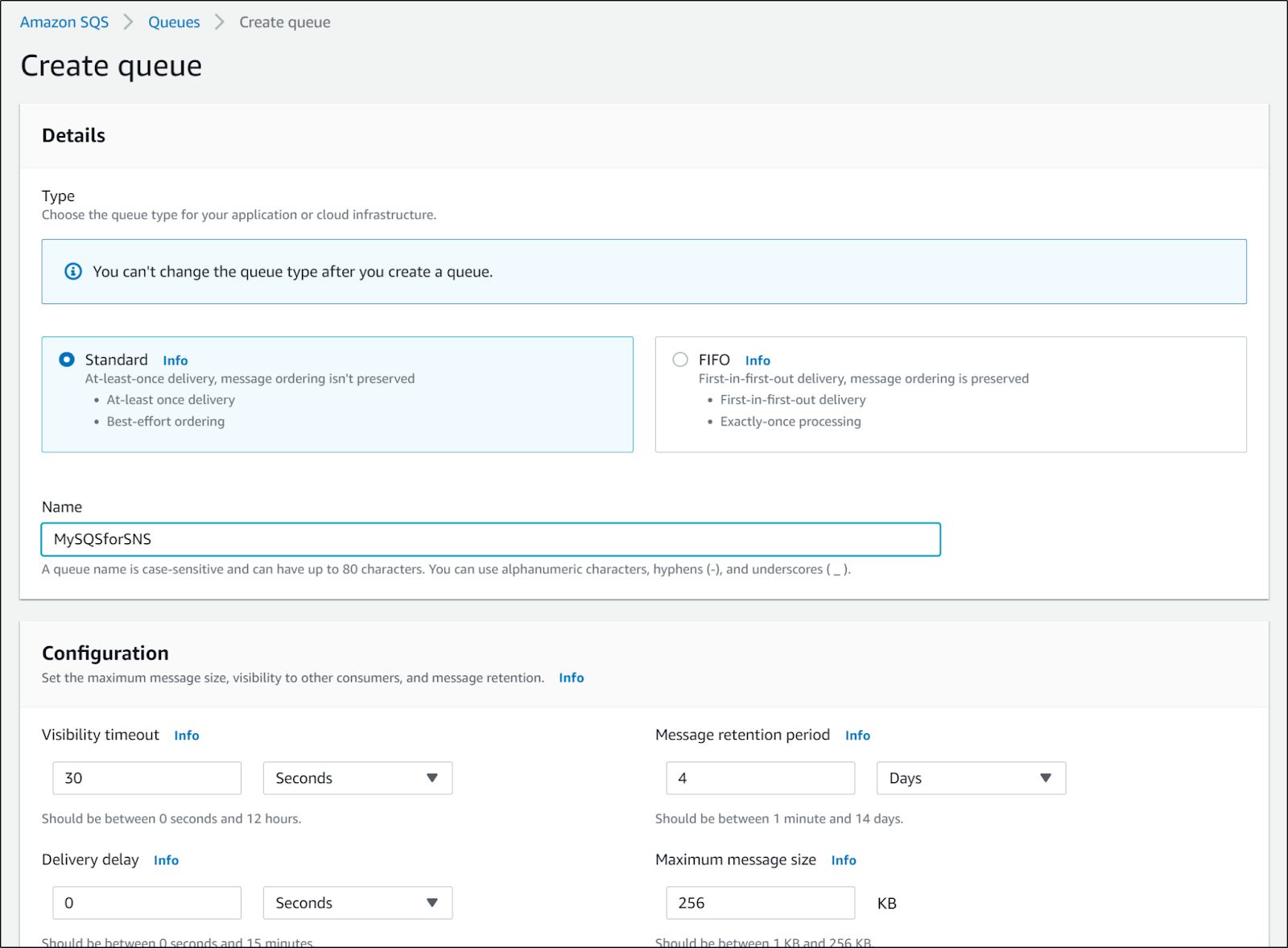

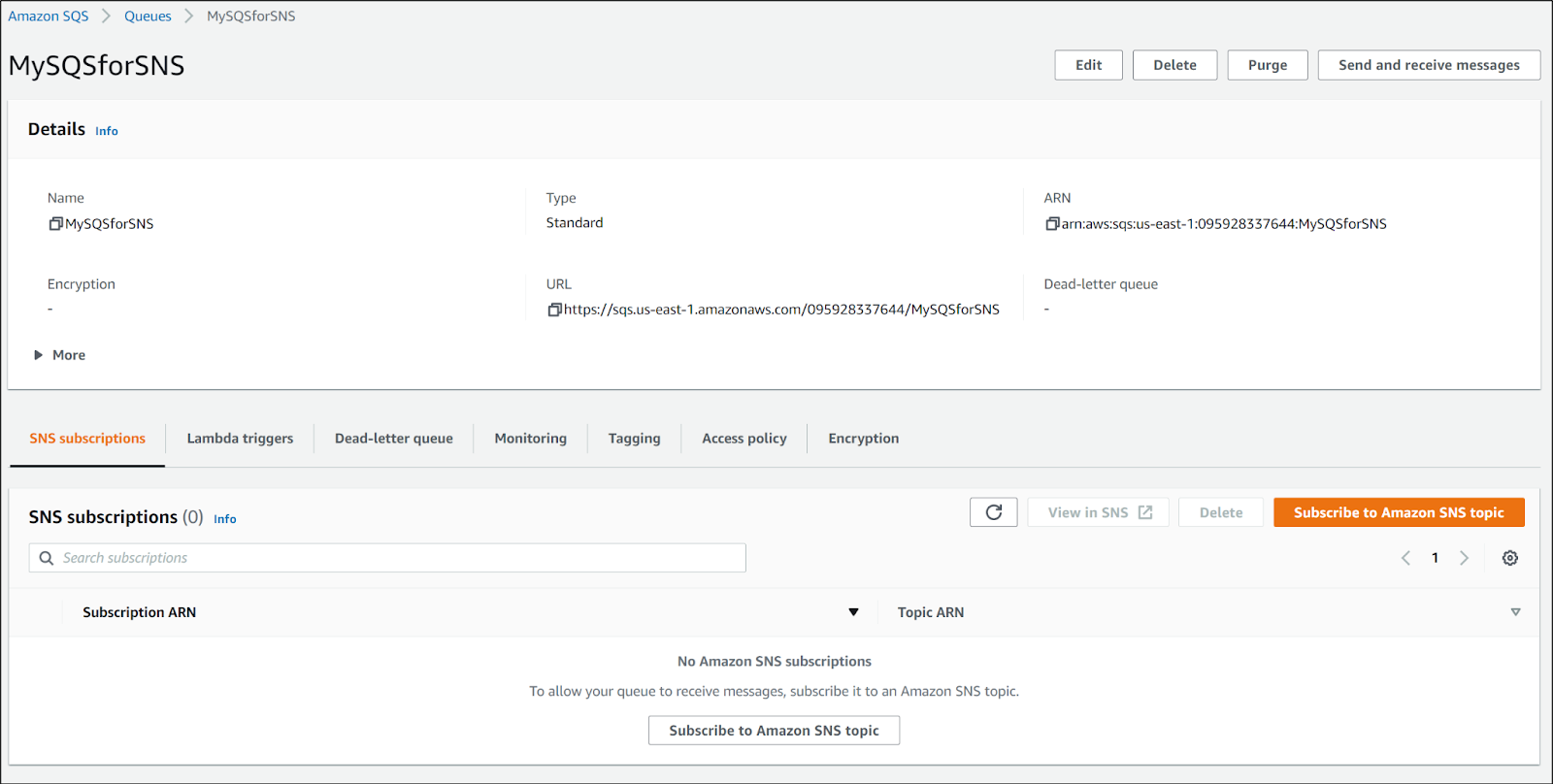

First, let's create an SQS queue for this work.

We'll leave the default settings, save for the name.

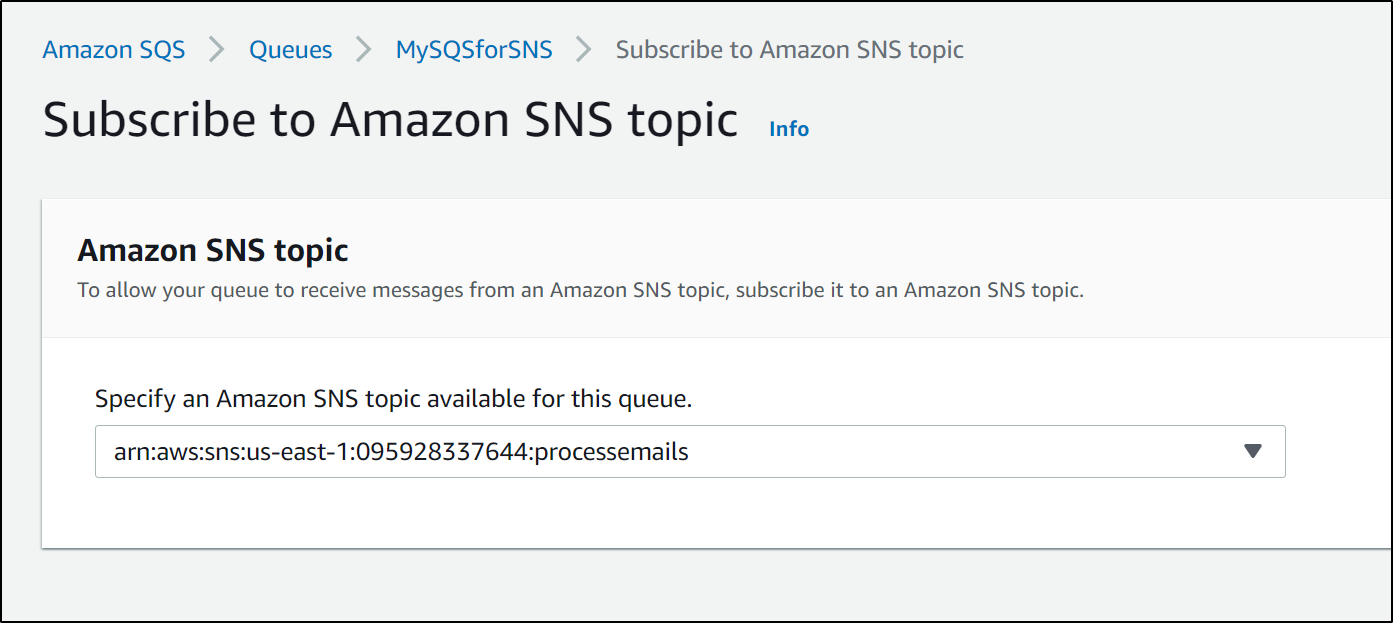

Once it's created, we can see there is a "subscribe to SNS topic" button on the lower right.

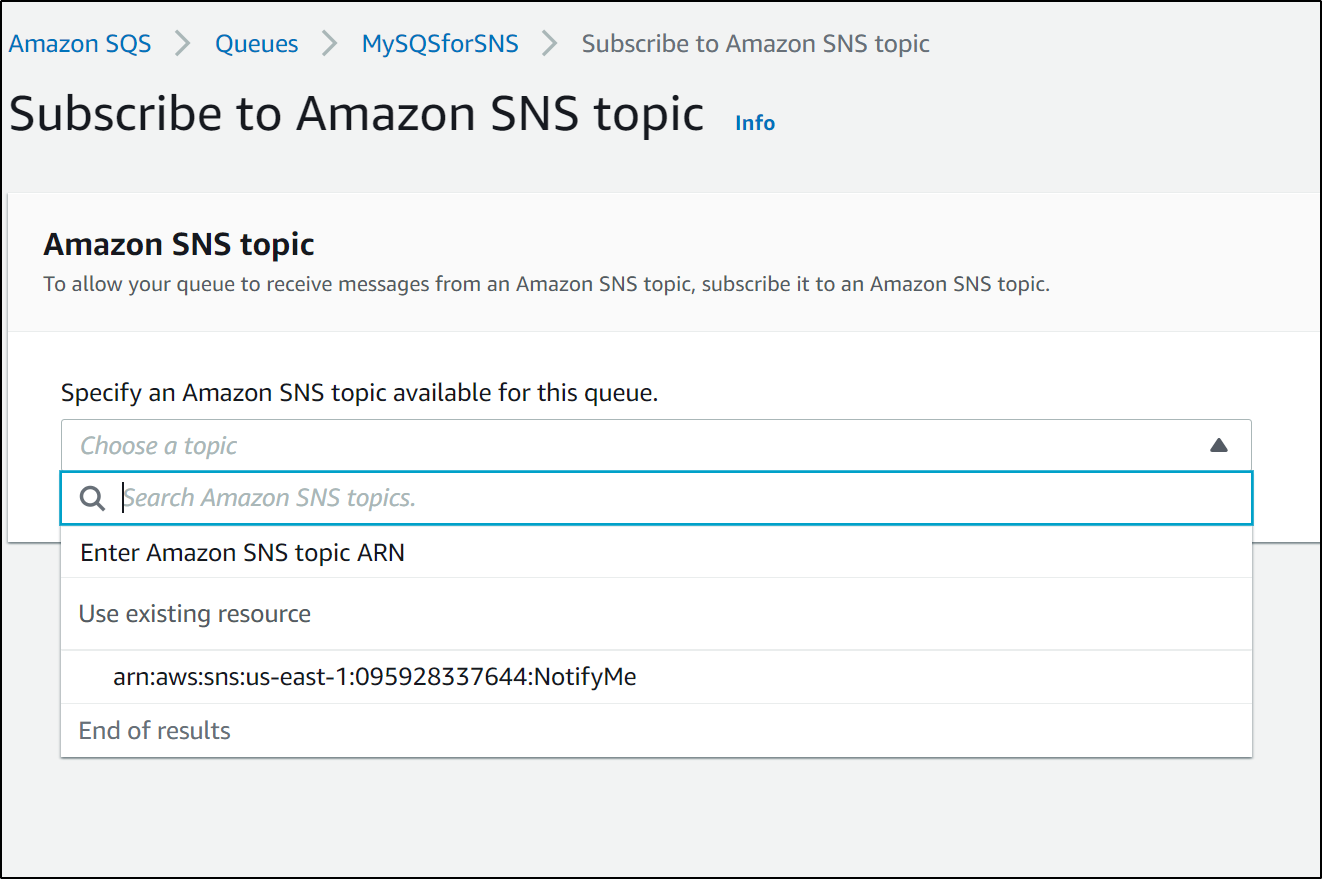

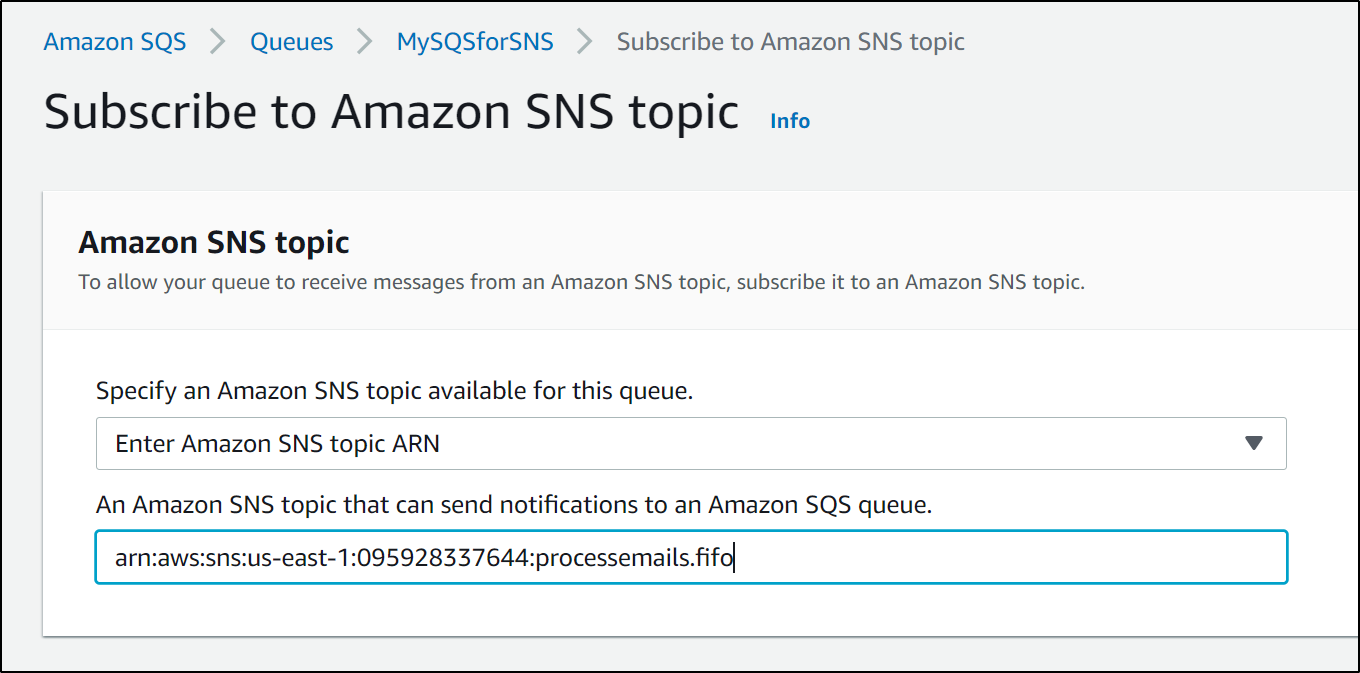

We could just subscribe to the existing topic (NotifyMe):

However, since I use that topic for a variety of things, I don't want every notification sent from Dapr and others to propagate to the queue.

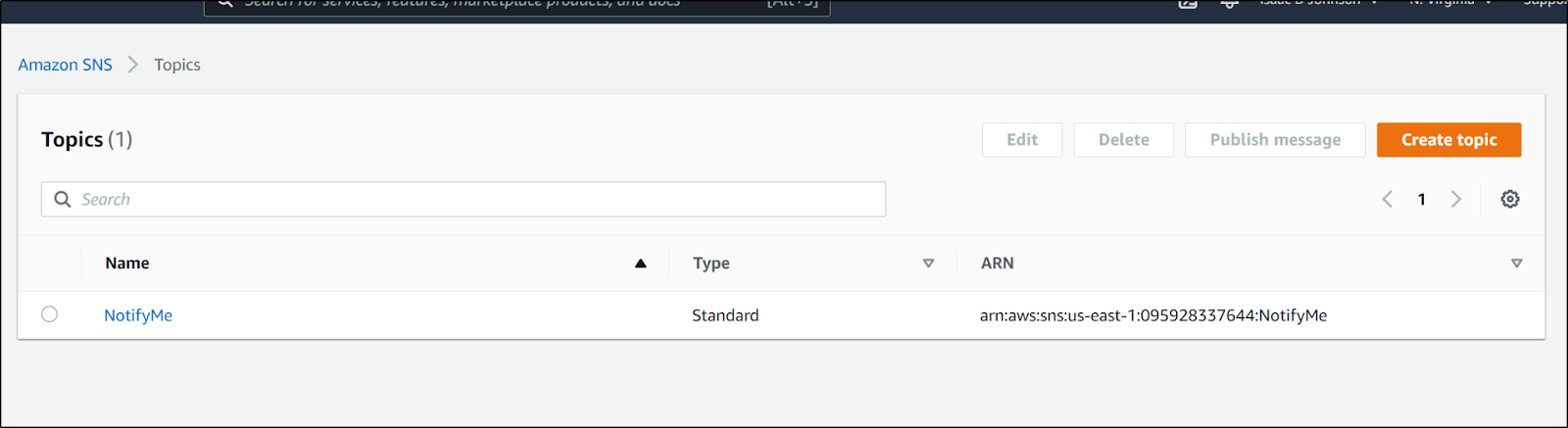

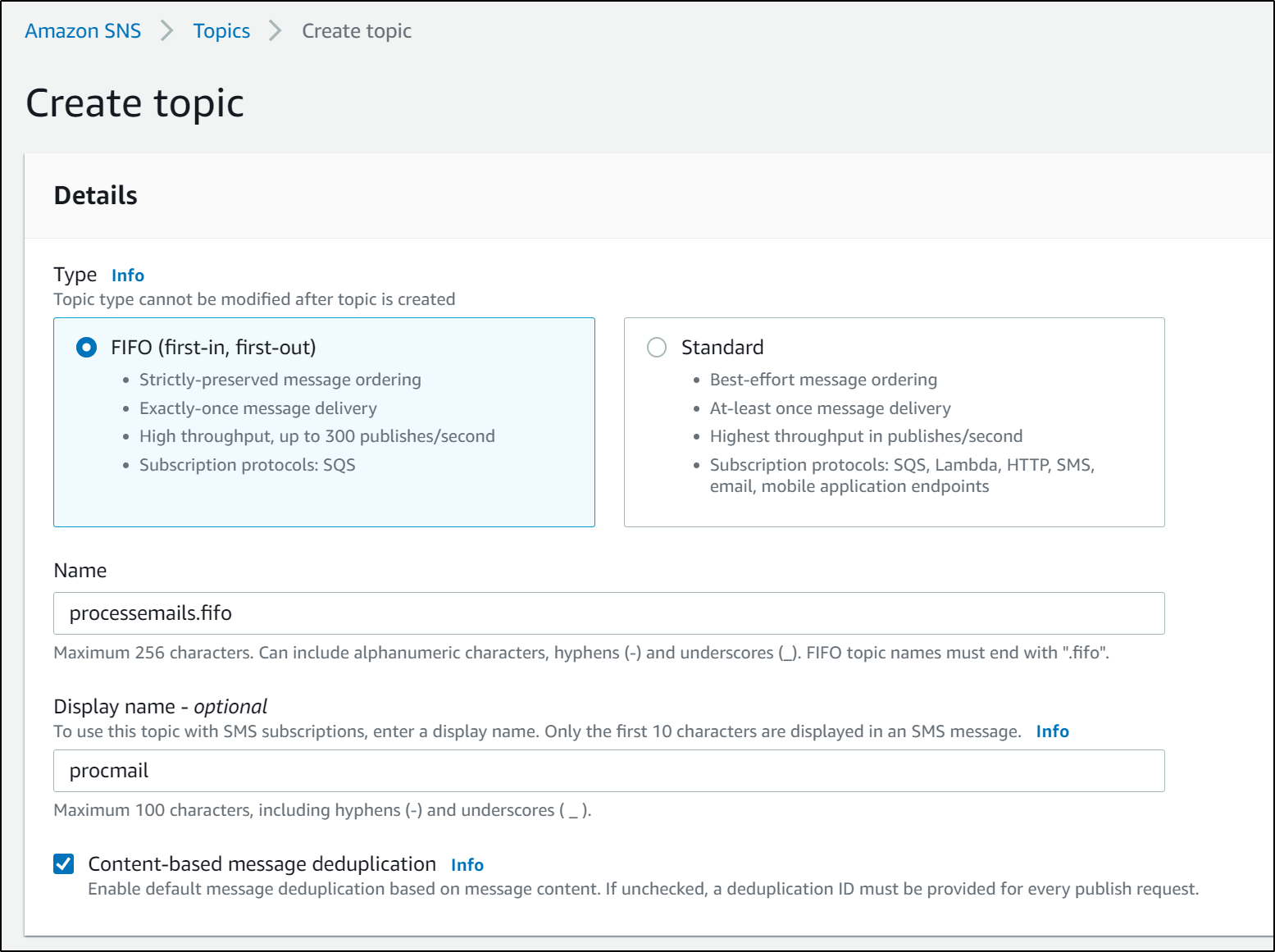

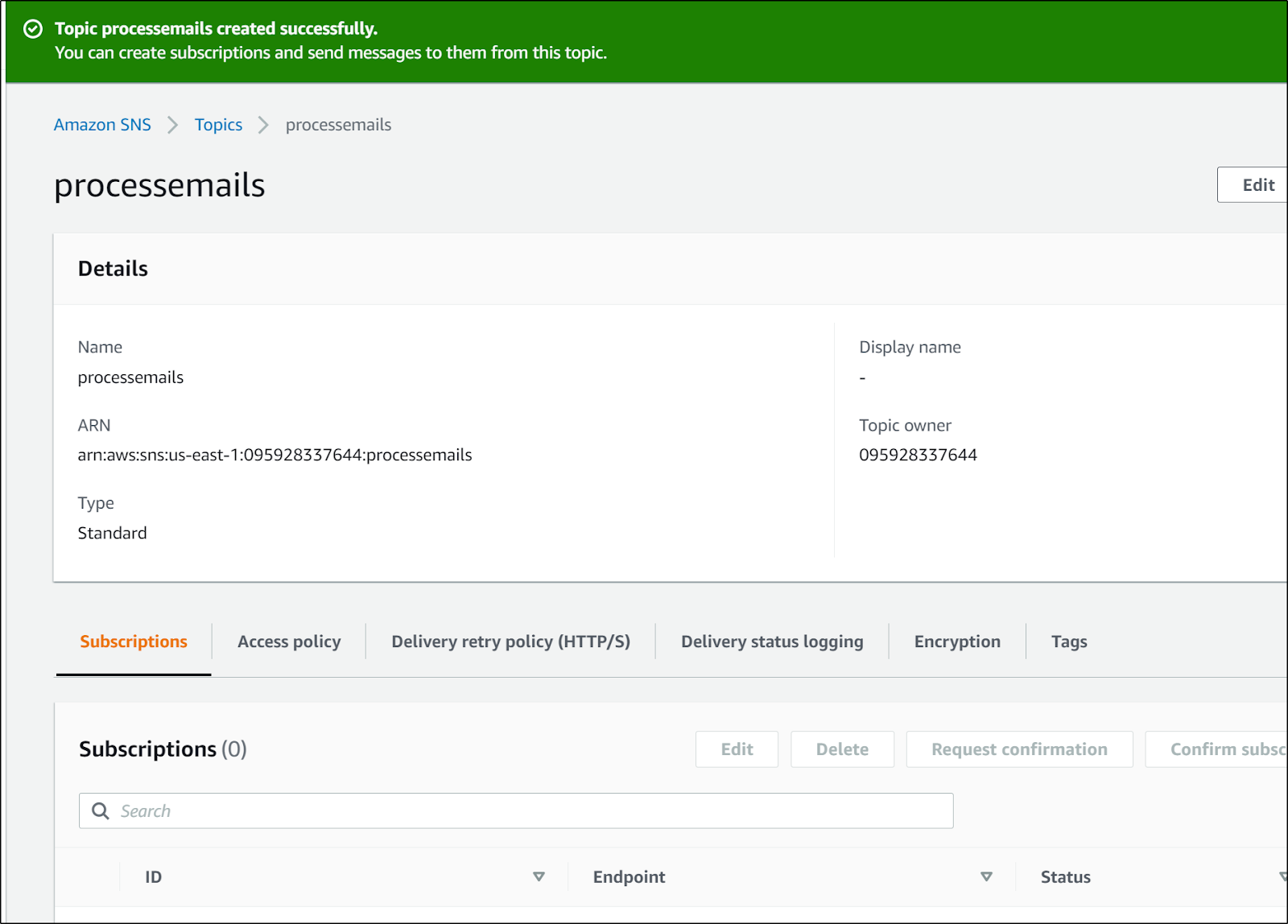

Let's create a new SNS topic

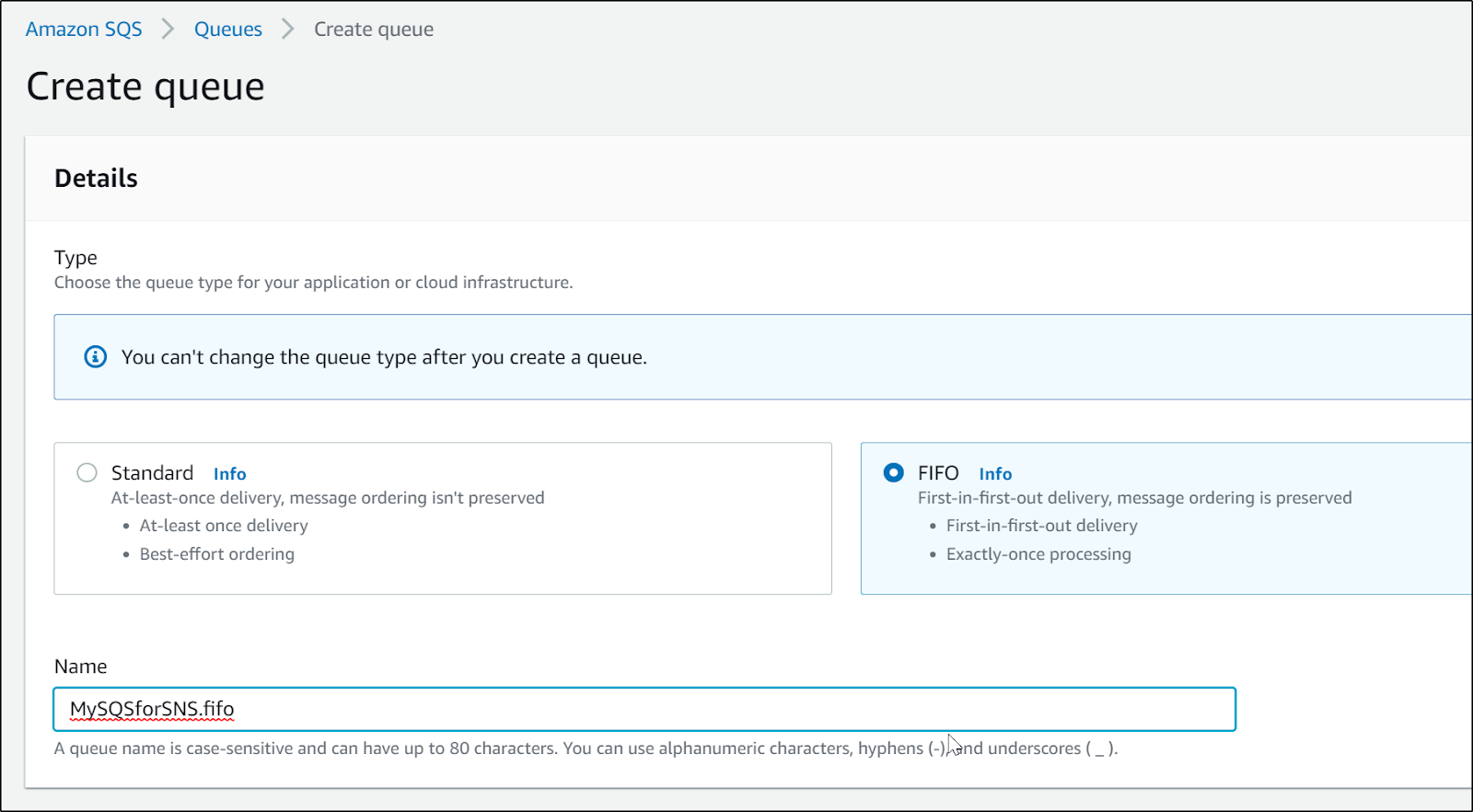

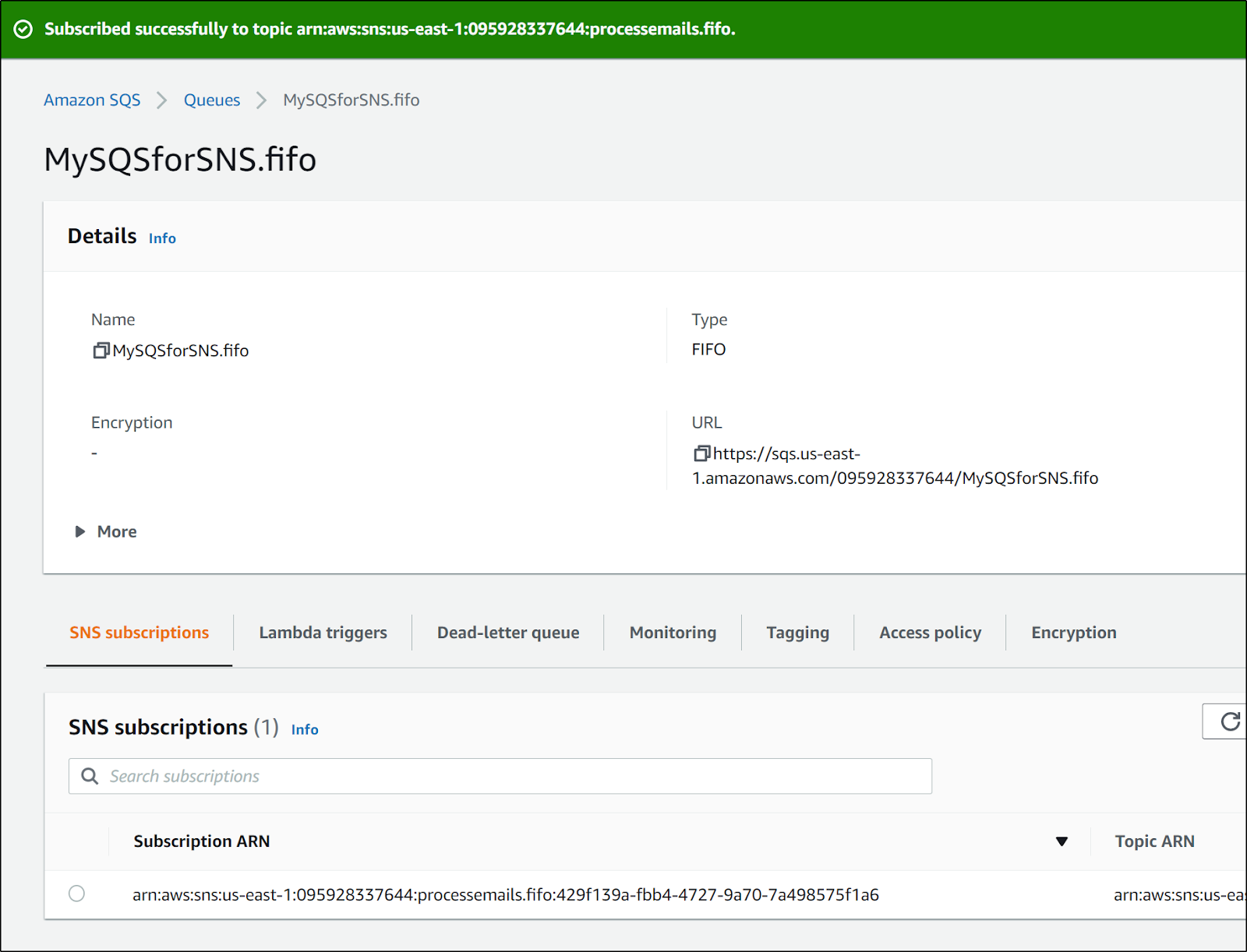

Note: these first steps show an attempt to use a First-In-First-Out (FIFO) topic and queue, which we soon discover does not work with SES. To see the solution, skip ahead to "Working Topic".

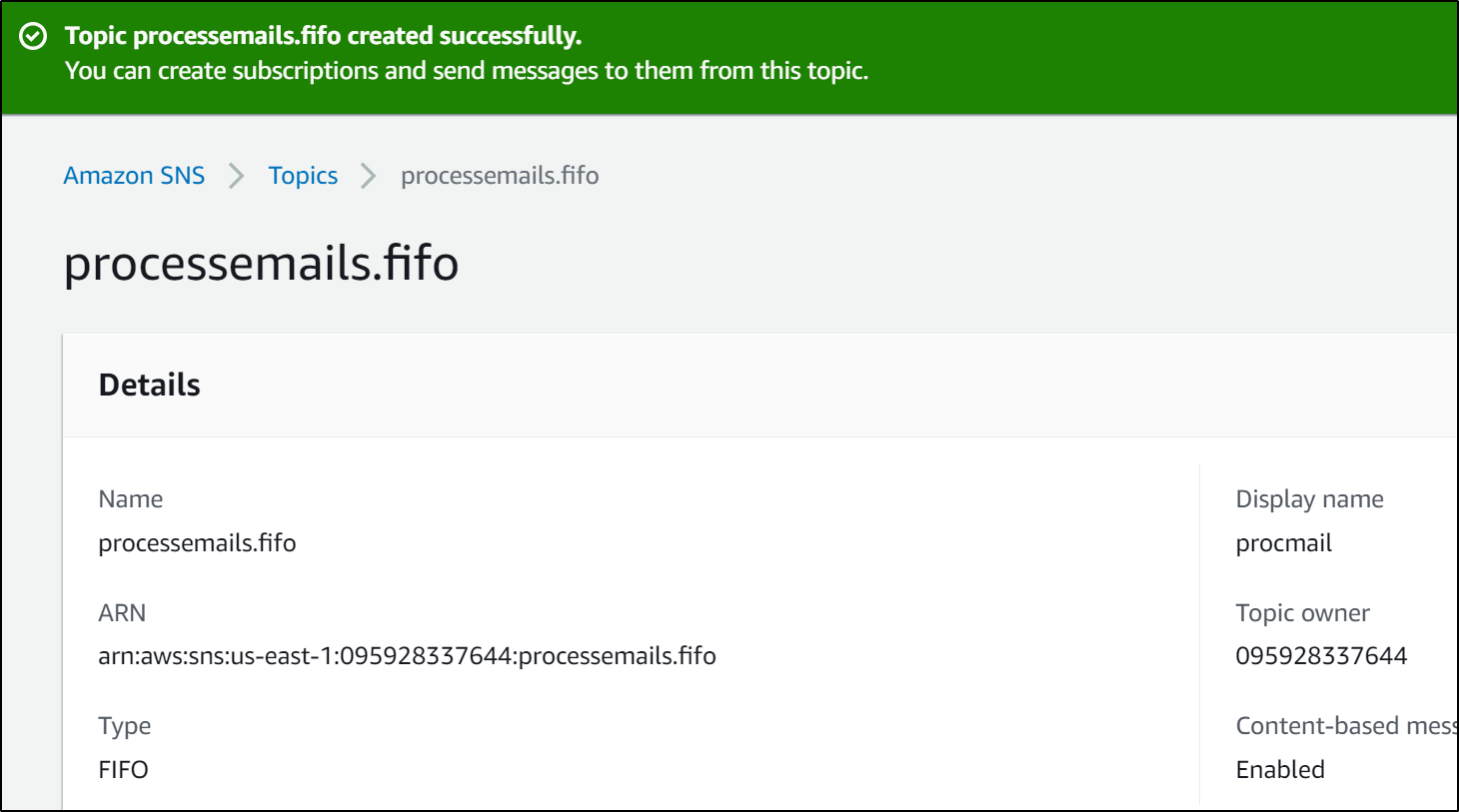

Let's create a basic FIFO SNS topic

which created https://console.aws.amazon.com/sns/v3/home?region=us-east-1#/topic/arn:aws:sns:us-east-1:095928337644:processemails.fifo

That didn't work, since a standard queue cannot subscribe to a FIFO SNS topic; the types need to match (standard for standard, FIFO for FIFO). Thus we need to create a new FIFO SQS queue,

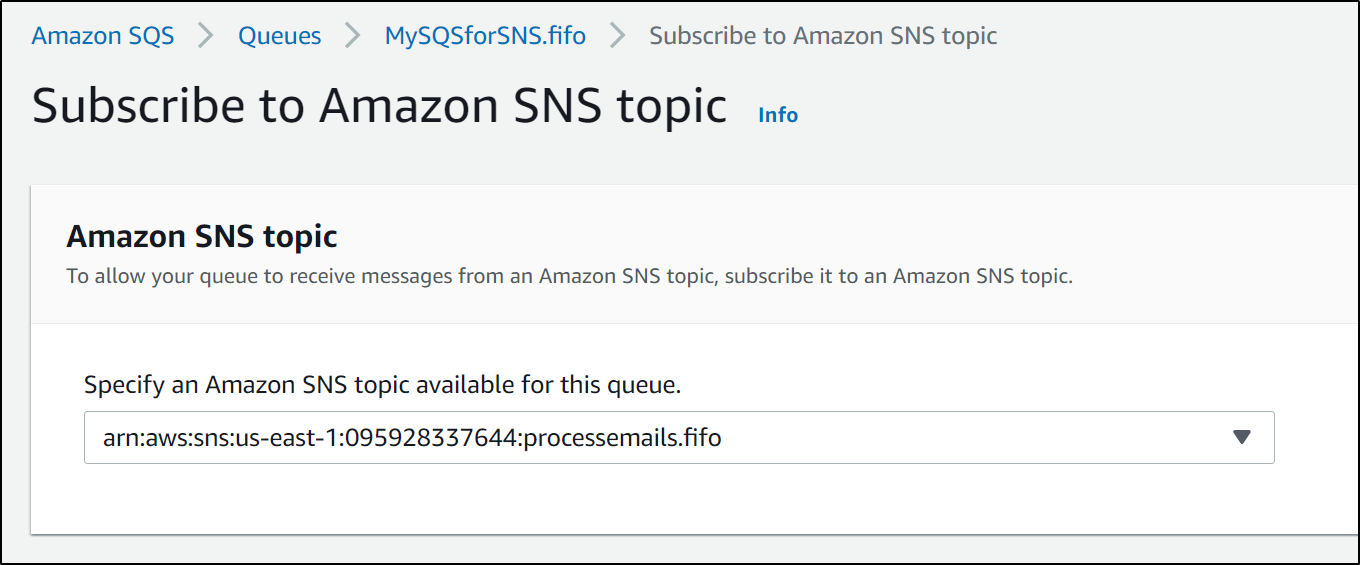

which we can now subscribe to the SNS topic,

and we see a confirmation.

Lastly, we need to go back to SES and add an action for this new topic to the rule in our active rule set.

Add our topic and save.

Unfortunately, that doesn't work. SES only supports standard SNS topics, not FIFO topics (see: https://forums.aws.amazon.com/thread.jspa?messageID=969163 ).

Working Topic

Let's create a standard SNS topic

and we can subscribe our Standard SQS to it
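Behind the "subscribe to SNS topic" button, the queue also needs an access policy that lets the topic send to it. As I understand it, the console adds a statement along these lines for you. The sketch below builds an equivalent policy document using placeholder ARNs (`123456789012` is a dummy account ID). The policy would be applied as the queue's `Policy` attribute, a JSON string, via `SetQueueAttributes`.

```javascript
// Sketch: an SQS queue policy that lets exactly one SNS topic deliver to the queue.
function buildQueuePolicyForTopic(queueArn, topicArn) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Sid: 'AllowSnsTopicToSendMessage',
        Effect: 'Allow',
        Principal: { Service: 'sns.amazonaws.com' },
        Action: 'sqs:SendMessage',
        Resource: queueArn,
        // Only accept messages that originate from this specific topic
        Condition: { ArnEquals: { 'aws:SourceArn': topicArn } },
      },
    ],
  };
}

const policy = buildQueuePolicyForTopic(
  'arn:aws:sqs:us-east-1:123456789012:MySQSforSNS',
  'arn:aws:sns:us-east-1:123456789012:processemails'
);
// SetQueueAttributes expects the policy serialized as a JSON string
console.log(JSON.stringify({ Policy: JSON.stringify(policy) }));
```

Scoping the condition to one topic ARN keeps other topics, such as the shared NotifyMe topic, from writing into this queue.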

Lastly, let's edit the default rule set to add a third step that publishes to the SNS topic.

Verification

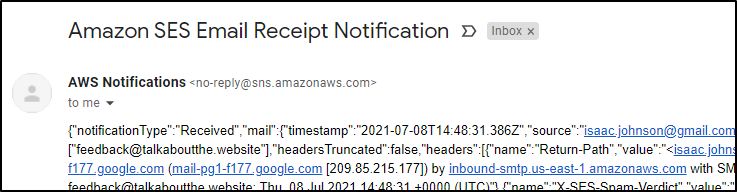

Now, when we send an email to be processed by SES,

we can see via our SES->SNS (NotifyMe) notification that it was received,

and we now see a message waiting for us in the queue.

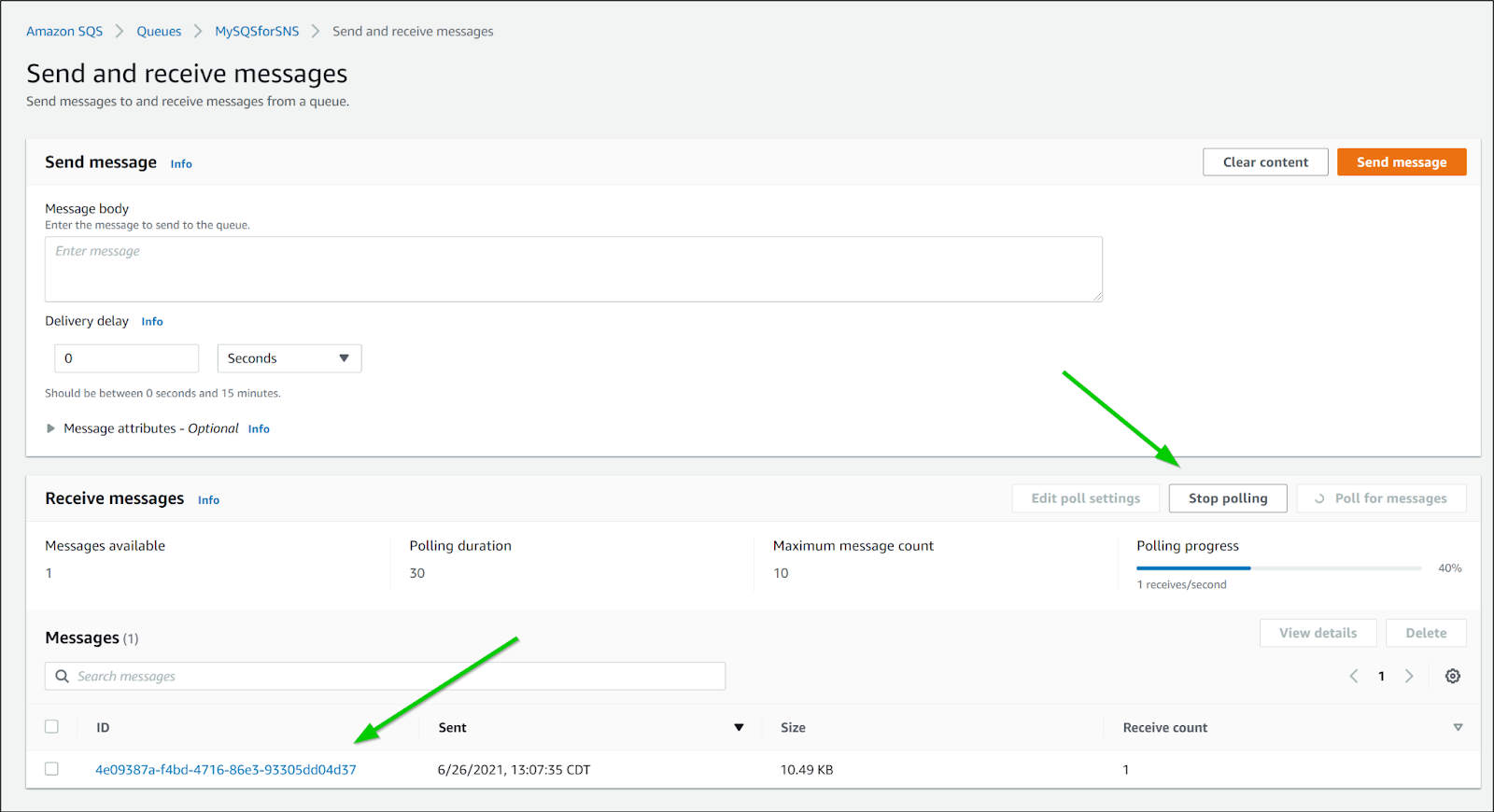

And if we go to the SQS, we can use "Send/Receive" messages to poll our queue and view the pending message

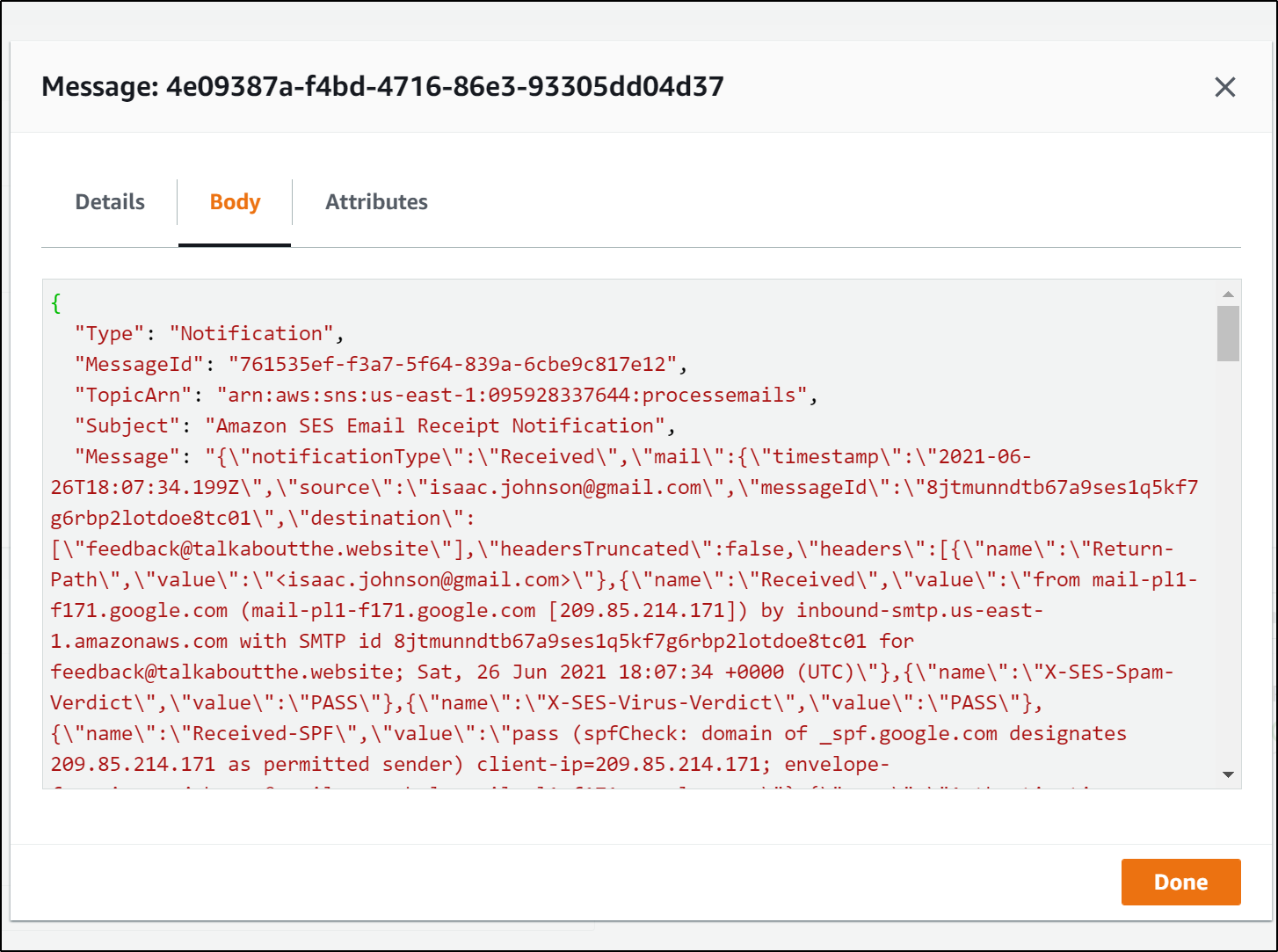

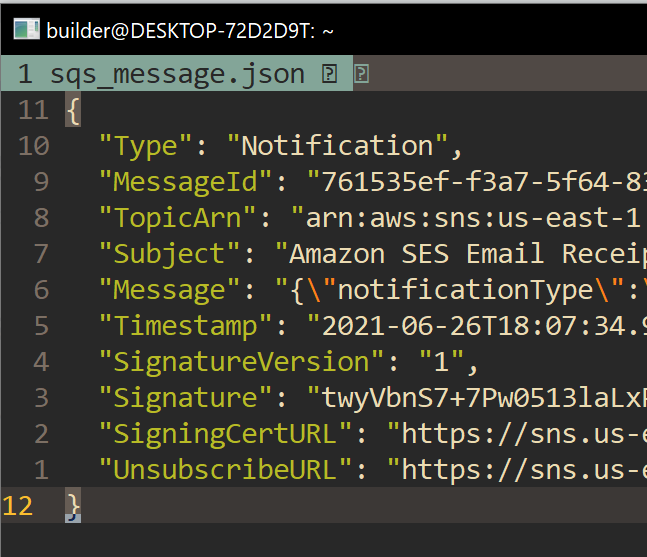

If we open it in an editor, we can see it has a few fields.

The "Message" field is actually embedded JSON, so we can get to the email we sent with jq:

```shell
$ cat sqs_message.json | jq -r .Message | jq -r .content | tail -n 20
From: Isaac Johnson <isaac.johnson@gmail.com>
Date: Sat, 26 Jun 2021 13:07:21 -0500
Message-ID: <CAEccrgWUaetvL2QQOr--JRh_+aqmu5PJT=Bm1f041Y8X-Ls9CQ@mail.gmail.com>
Subject: Another Test
To: feedback@talkaboutthe.website
Content-Type: multipart/alternative; boundary="00000000000055ec4005c5af2137"

--00000000000055ec4005c5af2137
Content-Type: text/plain; charset="UTF-8"

Yet another test
-yourself

--00000000000055ec4005c5af2137
Content-Type: text/html; charset="UTF-8"

<div dir="ltr">Yet another test<div>-yourself</div></div>

--00000000000055ec4005c5af2137--
```
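The double unwrap that the jq pipeline above performs can also be done in Node. This is a minimal sketch under two assumptions. First, the SNS subscription does not use raw message delivery, so the SQS body is the SNS envelope, as seen here. Second, the SES rule publishes the email content in the notification. `extractRawEmail` and the sample payload are hypothetical.

```javascript
// Sketch: SQS body (SNS envelope) -> "Message" (SES notification, a JSON string)
// -> "content" (the raw MIME email). Equivalent to: jq -r .Message | jq -r .content
function extractRawEmail(sqsBody) {
  // Accept either the raw string or an already-parsed object (e.g. req.body)
  const envelope = typeof sqsBody === 'string' ? JSON.parse(sqsBody) : sqsBody;
  const notification = JSON.parse(envelope.Message);
  if (notification.notificationType !== 'Received' || typeof notification.content !== 'string') {
    throw new Error('not an SES receipt notification with inline content');
  }
  return {
    from: notification.mail.source,
    destination: notification.mail.destination,
    mime: notification.content,
  };
}

// Tiny hypothetical payload with the same nesting as the real message
const sample = JSON.stringify({
  Type: 'Notification',
  Message: JSON.stringify({
    notificationType: 'Received',
    mail: { source: 'someone@example.com', destination: ['feedback@example.com'] },
    content: 'Subject: Hi\r\n\r\nHello',
  }),
});
console.log(extractRawEmail(sample).mime);
```

Inside an Express handler that uses body-parser, this would be `extractRawEmail(req.body)`, because the envelope has already been parsed into an object.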

Since the content is just a MIME-encoded message, we can unpack it with munpack (from the mpack package):

```shell
$ sudo apt update
$ sudo apt install mpack
$ munpack -f -t mimemessage.txt
part1 (text/plain)
part2 (text/html)
$ cat part1
Yet another test
-yourself
$ cat part2
<div dir="ltr">Yet another test<div>-yourself</div></div>
```
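If you would rather not shell out to munpack, a rough version of the same split can be sketched in plain Node. This is a simplified illustration, not a replacement for a real MIME library. It handles no nested multiparts, no base64 or quoted-printable decoding, and no encoded-word headers. `splitMultipart` and the sample email are hypothetical.

```javascript
// Minimal sketch: split a multipart/alternative email into { contentType, body }
// parts, roughly what munpack produced as part1/part2.
function splitMultipart(rawEmail) {
  const text = rawEmail.replace(/\r\n/g, '\n');
  const headerEnd = text.indexOf('\n\n'); // first blank line ends the headers
  if (headerEnd === -1) throw new Error('no header/body separator found');
  const headers = text.slice(0, headerEnd);
  const body = text.slice(headerEnd + 2);

  const boundary = headers.match(/boundary="?([^";\n]+)"?/i);
  if (!boundary) return [{ contentType: 'text/plain', body: body.trim() }];

  const parts = [];
  // Parts are separated by "--<boundary>"; the last delimiter is "--<boundary>--"
  for (const chunk of body.split('--' + boundary[1]).slice(1)) {
    if (chunk.startsWith('--')) break; // closing delimiter reached
    const sep = chunk.indexOf('\n\n');
    if (sep === -1) continue;
    const type = chunk.slice(0, sep).match(/content-type:\s*([^;\n]+)/i);
    parts.push({
      contentType: type ? type[1].trim().toLowerCase() : 'text/plain',
      body: chunk.slice(sep + 2).trim(),
    });
  }
  return parts;
}

const sampleEmail = [
  'From: Someone <someone@example.com>',
  'Content-Type: multipart/alternative; boundary="XYZ"',
  '',
  '--XYZ',
  'Content-Type: text/plain; charset="UTF-8"',
  '',
  'Yet another test',
  '',
  '--XYZ',
  'Content-Type: text/html; charset="UTF-8"',
  '',
  '<div dir="ltr">Yet another test</div>',
  '',
  '--XYZ--',
  '',
].join('\r\n');

for (const part of splitMultipart(sampleEmail)) {
  console.log(`${part.contentType}: ${part.body}`);
}
```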

Dapr SQS Binding

You can follow along, as I've made the code in these examples public in a GitHub repo: https://github.com/idjohnson/daprSQSMimeWorker

When creating the binding, if you don't need a session token, you'll still need to specify a blank one with double quotes:

```yaml
apiVersion: dapr.io/v1alpha1
kind: Component
metadata:
  name: mysqsforsns
  namespace: default
spec:
  type: bindings.aws.sqs
  version: v1
  metadata:
  - name: queueName
    value: MySQSforSNS
  - name: region
    value: us-east-1
  - name: accessKey
    value: AKIA*********************
  - name: secretKey
    # redacted; quoted because a bare leading * is YAML alias syntax
    value: "**************************************************"
  - name: sessionToken
    value: ""
```

```shell
$ kubectl apply -f sqsbinding.yaml
component.dapr.io/mysqsforsns created
```

Containerized Microservice to Parse MIME payloads

Next, we need to build a Docker image to receive events from the SQS input binding. This will be very similar to our k8s watcher.

Our Dockerfile:

```dockerfile
FROM node:14

# Create app directory
WORKDIR /usr/src/app

# Install app dependencies
# A wildcard is used to ensure both package.json AND package-lock.json are copied
# where available (npm@5+)
COPY package*.json ./

RUN npm install
# If you are building your code for production
# RUN npm ci --only=production

# Bundle app source
COPY . .

EXPOSE 8080
CMD [ "node", "app.js" ]
```

And the app.js:

```javascript
const express = require('express')
const bodyParser = require('body-parser')

const app = express()
app.use(bodyParser.json())

const port = 8080

// Dapr delivers events from the input binding as a POST to /<binding name>
app.post('/mysqsforsns', (req, res) => {
  console.log(req.body)
  res.status(200).send()
})

app.listen(port, () => console.log(`sqs event consumer app listening on port ${port}!`))
```

And the package.json:

```json
{
  "name": "daprsqsevents",
  "version": "1.0.0",
  "description": "trigger on sqs events",
  "main": "app.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "author": "Isaac Johnson",
  "license": "MIT",
  "dependencies": {
    "body-parser": "^1.19.0",
    "express": "^4.17.1"
  }
}
```

Building the image:

```shell
$ docker build -f Dockerfile -t nodesqswatcher .
Sending build context to Docker daemon 22.02kB
Step 1/7 : FROM node:14
14: Pulling from library/node
199ebcd83264: Pull complete
ddbb155879c0: Pull complete
c194bbaa3d8b: Pull complete
6154ac87d7f3: Pull complete
0c283e88ced7: Pull complete
dba101298560: Pull complete
1d8bfd4e555f: Pull complete
757e41ffbdcc: Pull complete
6e055c4b8721: Pull complete
Digest: sha256:d8f90b676efb1260957a4170a9a0843fc003b673ae164f22df07eaee9bbc6223
Status: Downloaded newer image for node:14
---> 29aef864d313
Step 2/7 : WORKDIR /usr/src/app
---> Running in 01fe318ebde2
Removing intermediate container 01fe318ebde2
---> 40bc6e5d473d
Step 3/7 : COPY package*.json ./
---> 19e629184892
Step 4/7 : RUN npm install
---> Running in e4410a0adb56
npm WARN daprsqsevents@1.0.0 No repository field.
added 50 packages from 37 contributors and audited 50 packages in 2.847s
found 0 vulnerabilities
Removing intermediate container e4410a0adb56
---> fcaea1679a70
Step 5/7 : COPY . .
---> cf42f861c2b2
Step 6/7 : EXPOSE 8080
---> Running in 5d687a33a3df
Removing intermediate container 5d687a33a3df
---> 8642f833906e
Step 7/7 : CMD [ "node", "app.js" ]
---> Running in c6ae604ce3f2
Removing intermediate container c6ae604ce3f2
---> 6ecb886f0dc9
Successfully built 6ecb886f0dc9
Successfully tagged nodesqswatcher:latest
Use 'docker scan' to run Snyk tests against images to find vulnerabilities and learn how to fix them
```

Now we tag and push to our private Harbor registry:

```shell
$ docker tag nodesqswatcher:latest harbor.freshbrewed.science/freshbrewedprivate/nodesqswatcher:latest
$ docker push harbor.freshbrewed.science/freshbrewedprivate/nodesqswatcher:latest
The push refers to repository [harbor.freshbrewed.science/freshbrewedprivate/nodesqswatcher]
7bd7a161b478: Pushed
55ce6e69dc92: Pushed
db52d43fd298: Pushed
e7a46d8010f7: Pushed
2826100b114f: Pushed
c1e89830a17d: Pushed
c69ef78c4a6a: Pushed
d7aef11e5baf: Pushed
2c6cd9a0820c: Pushed
50c78a431907: Pushed
cccb1829e343: Pushed
14ce51dbae6e: Pushed
3125b578849a: Pushed
latest: digest: sha256:9850056227fcb8db060111ffe6fc25306eeeceb6237d9be0b75e954733539f78 size: 3049
```

Lastly, we just need to deploy it with a deployment YAML:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nodesqswatcher
  name: nodesqswatcher-deployment
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nodesqswatcher
  template:
    metadata:
      annotations:
        dapr.io/app-id: nodesqswatcher
        dapr.io/app-port: "8080"
        dapr.io/config: appconfig
        dapr.io/enabled: "true"
      labels:
        app: nodesqswatcher
    spec:
      containers:
      - env:
        - name: PORT
          value: "8080"
        image: harbor.freshbrewed.science/freshbrewedprivate/nodesqswatcher:latest
        imagePullPolicy: Always
        name: nodesqswatcher
        ports:
        - containerPort: 8080
          protocol: TCP
      imagePullSecrets:
      - name: myharborreg
```

```shell
$ kubectl apply -f sqswatcher.dep.yaml
deployment.apps/nodesqswatcher-deployment created

$ kubectl get pods -l app=nodesqswatcher
NAME                                        READY   STATUS              RESTARTS   AGE
nodesqswatcher-deployment-8ffdbb99c-n75ts   0/2     ContainerCreating   0          27s

$ kubectl get pods -l app=nodesqswatcher
NAME                                        READY   STATUS    RESTARTS   AGE
nodesqswatcher-deployment-8ffdbb99c-n75ts   2/2     Running   0          78s
```

We can check the logs to see that our Dapr-enabled pod has indeed pulled the entry from SQS:

```shell
$ kubectl logs nodesqswatcher-deployment-8ffdbb99c-n75ts nodesqswatcher
sqs event consumer app listening on port 8080!
{
  Type: 'Notification',
  MessageId: '761535ef-f3a7-5f64-839a-6cbe9c817e12',
  TopicArn: 'arn:aws:sns:us-east-1:095928337644:processemails',
  Subject: 'Amazon SES Email Receipt Notification',
  Message: '{"notificationType":"Received","mail":{"timestamp":"2021-06-26T18:07:34.199Z","source":"isaac.johnson@gmail.com","messageId":"8jtmunndtb67a9ses1q5kf7g6rbp2lotdoe8tc01","destination":["feedback@....
```

Next, let's update the Dockerfile to pull in the MIME unpacker (munpack, from the mpack package):

```dockerfile
RUN apt-get update && apt-get install -y mpack
```

Testing

```shell
$ kubectl get pods -l app=nodesqswatcher
NAME                                        READY   STATUS              RESTARTS   AGE
nodesqswatcher-deployment-8ffdbb99c-n75ts   2/2     Running             0          3h29m
nodesqswatcher-deployment-88dc6879d-cr4vr   0/2     ContainerCreating   0          22s

$ kubectl get pods -l app=nodesqswatcher
NAME                                        READY   STATUS    RESTARTS   AGE
nodesqswatcher-deployment-88dc6879d-cr4vr   2/2     Running   0          11m
```

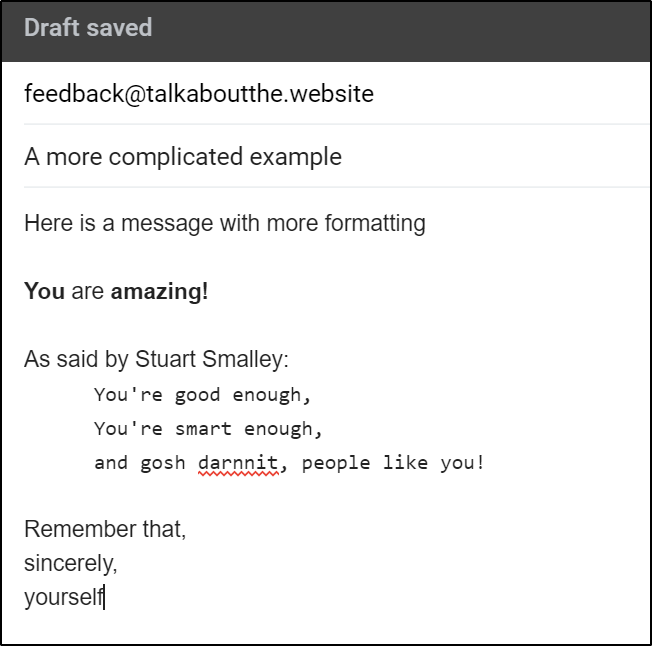

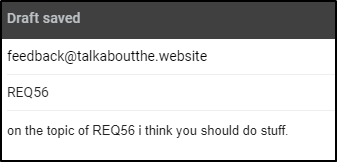

With the service running, let's send a test email,

and we can see it come through the system and show up in the pod's logs:

```shell
$ kubectl logs `kubectl get pods -l app=nodesqswatcher | tail -n1 | awk '{ print $1 }' | tr -d '\n'` nodesqswatcher | tail -n20
data:
Here is a message with more formatting
*You* are *amazing!*
As said by Stuart Smalley:
You're good enough,
You're smart enough,
and gosh darnnit, people like you!
Remember that,
sincerely,
yourself
data2:
<div dir="ltr">Here is a message with more formatting<div><br></div><div>�<b>You</b> are <b>amazing!</b></div><div><b><br></b></div><div>As said by S�tuart Smalley:</div><blockquote style="margin:0 0 0 40px;border:none;padd�ing:0px"><div><font face="monospace">You're good enough,</font></div>�<div><font face="monospace">You're smart enough,</font></div><div><fo�nt face="monospace">and gosh darnnit, people like you!</font></div></bloc�kquote><div><br></div><div>Remember that,</div><div>sincerely,</div><div>yo�urself</div></div>
```

I was concerned about the strange characters in the output, so to see whether it was just a logging problem, I hopped on the pod to check:

```shell
$ kubectl exec -it nodesqswatcher-deployment-fdfb8f5f4-tpbsh -- bash
Defaulting container name to nodesqswatcher.
Use 'kubectl describe pod/nodesqswatcher-deployment-fdfb8f5f4-tpbsh -n default' to see all of the containers in this pod.
root@nodesqswatcher-deployment-fdfb8f5f4-tpbsh:/usr/src/app# ls
Dockerfile  asdf  messageExample  mimefileraw  package-lock.json  part1  runit  sqswatcher.dep.yaml
app.js  fromPodmimefile  mimefile  node_modules  package.json  part2  sqsbinding.yaml
root@nodesqswatcher-deployment-fdfb8f5f4-tpbsh:/usr/src/app# cat part1
Here is a message with more formatting
*You* are *amazing!*
As said by Stuart Smalley:
You're good enough,
You're smart enough,
and gosh darnnit, people like you!
Remember that,
sincerely,
yourself
root@nodesqswatcher-deployment-fdfb8f5f4-tpbsh:/usr/src/app# cat part2
<div dir="ltr">Here is a message with more formatting<div><br></div><div><b>You</b> are <b>amazing!</b></div><div><b><br></b></div><div>As said by Stuart Smalley:</div><blockquote style="margin:0 0 0 40px;border:none;padding:0px"><div><font face="monospace">You're good enough,</font></div><div><font face="monospace">You're smart enough,</font></div><div><font face="monospace">and gosh darnnit, people like you!</font></div></blockquote><div><br></div><div>Remember that,</div><div>sincerely,</div><div>yourself</div></div>
```

This looks pretty good.

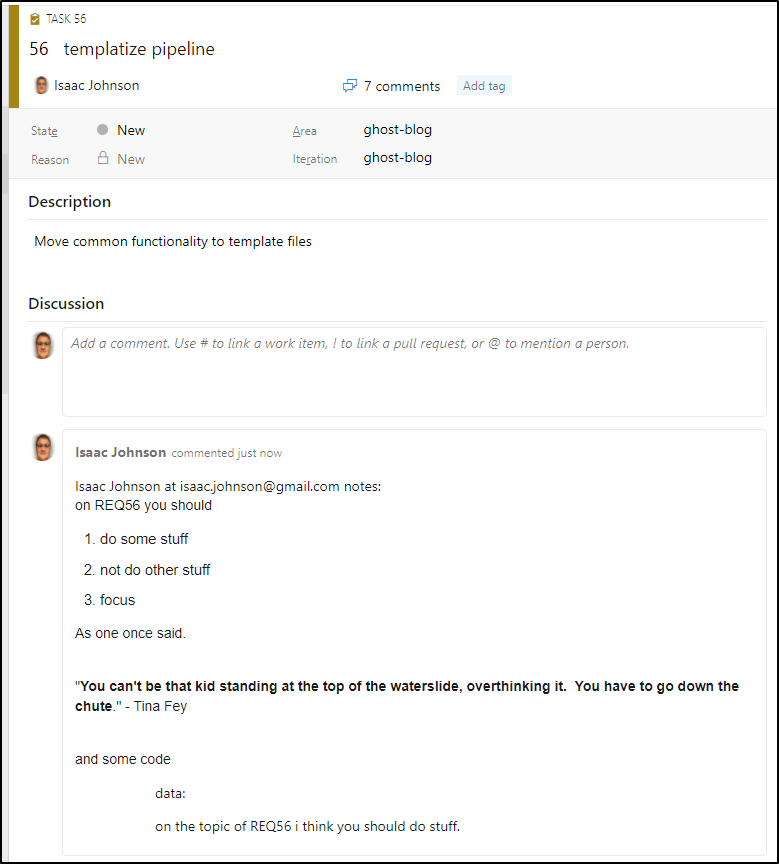

Updating AzDO Work Items

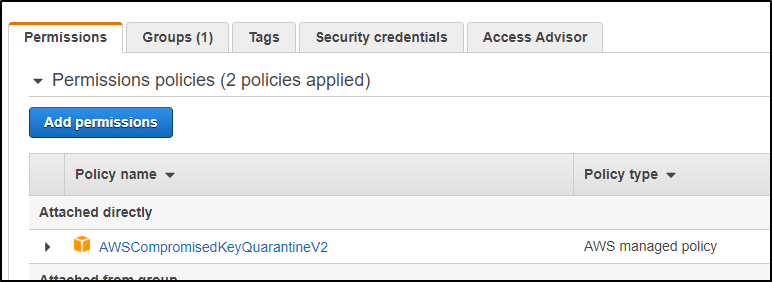

A quick side note: I know I tend to lean toward Azure over big orange. But when I errantly committed an IAM key stored in the YAML binding to a public GitHub repo, even though I corrected it within minutes, not only did AWS immediately quarantine the key:

but I also got a call from a human in Washington State asking about it within 30 minutes. I find that pretty damn good of them, watching out for bonehead moves from users.

AzDO setup

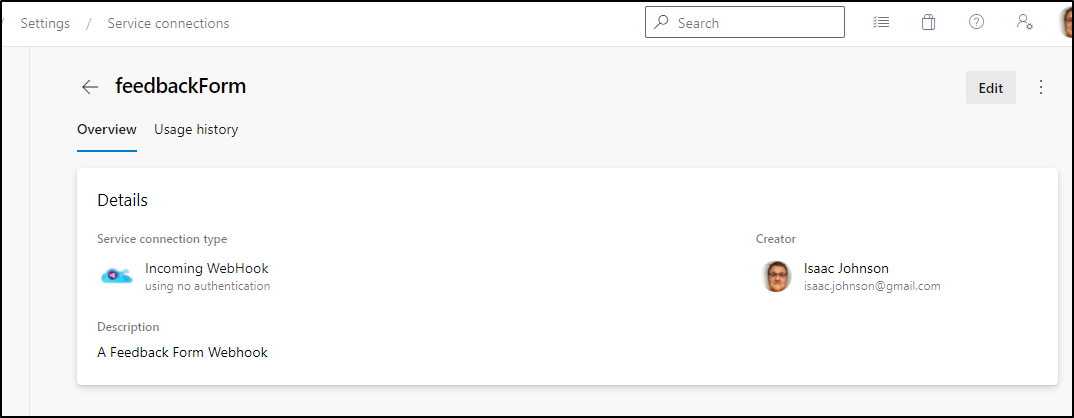

Our next step is to pivot back to AzDO for a moment and set up a webhook to process updates to existing work items.

As you may recall, we set up a webhook for ingesting work items (WIs) from a web form.

This allows us to trigger things without a persistent PAT. We want to follow this model for updates as well.

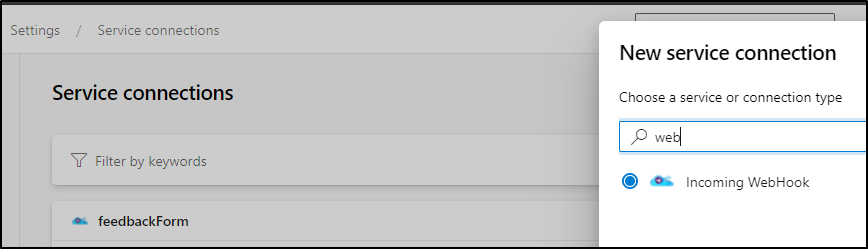

Rather than overload the existing webhook, let's add a new one.

Create the new webhook in Service Connections

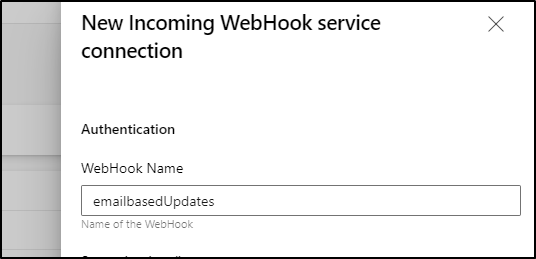

We can give it a name that clues us in to its usage later.

I'm going to leverage the current webhook repo and just make a branch:

```shell
$ git checkout webhookprocessor
Branch 'webhookprocessor' set up to track remote branch 'webhookprocessor' from 'origin'.
Switched to a new branch 'webhookprocessor'
$ git pull
Already up to date.
$ git checkout -b emailwebhookprocessor
Switched to a new branch 'emailwebhookprocessor'
```

We can write the pipeline to use it as such:

# Payload as sent by webhook

resources:

webhooks:

- webhook: emailbasedUpdates

connection: emailbasedUpdates

pool:

vmImage: ubuntu-latest

variables:

- group: AZDOAutomations

steps:

- script: |

echo Add other tasks to build, test, and deploy your project.

echo "fromname: ${{ parameters.emailbasedUpdates.fromname }}"

echo "fromemail: ${{ parameters.emailbasedUpdates.fromemail }}"

echo "workitem: ${{ parameters.emailbasedUpdates.workitem }}"

echo "comment: ${{ parameters.emailbasedUpdates.comment }}"

cat >rawDescription <<EOOOOL

${{ parameters.emailbasedUpdates.comment }}

EOOOOL

cat rawDescription | sed ':a;N;$!ba;s/\n/<br\/>/g' | sed "s/'/\\\\'/g"> inputDescription

echo "input description: `cat inputDescription`"

cat >$(Pipeline.Workspace)/updatewi.sh <<EOL

set -x

export AZURE_DEVOPS_EXT_PAT=$(AZDOTOKEN)

az boards work-item update --org https://dev.azure.com/princessking --id ${{ parameters.emailbasedUpdates.workitem }} --discussion '${{ parameters.emailbasedUpdates.fromname }} at ${{ parameters.emailbasedUpdates.fromemail }} notes: `cat inputDescription | tr -d '\n'`' > azresp.json

EOL

chmod u+x updatewi.sh

echo "updatewi.sh:"

cat $(Pipeline.Workspace)/updatewi.sh

echo "have a nice day."

displayName: 'Check webhook payload'

- task: AzureCLI@2

displayName: 'Create feature ticket'

inputs:

azureSubscription: 'My-Azure-SubNew'

scriptType: 'bash'

scriptLocation: 'scriptPath'

scriptPath: '$(Pipeline.Workspace)/updatewi.sh'

- script: |

sudo apt-get update

sudo apt-get install -y s-nail || true

set -x

export WIID=${{ parameters.emailbasedUpdates.workitem }}

export USERTLD=`echo "${{ parameters.emailbasedUpdates.fromemail }}" | sed 's/^.*@//'`

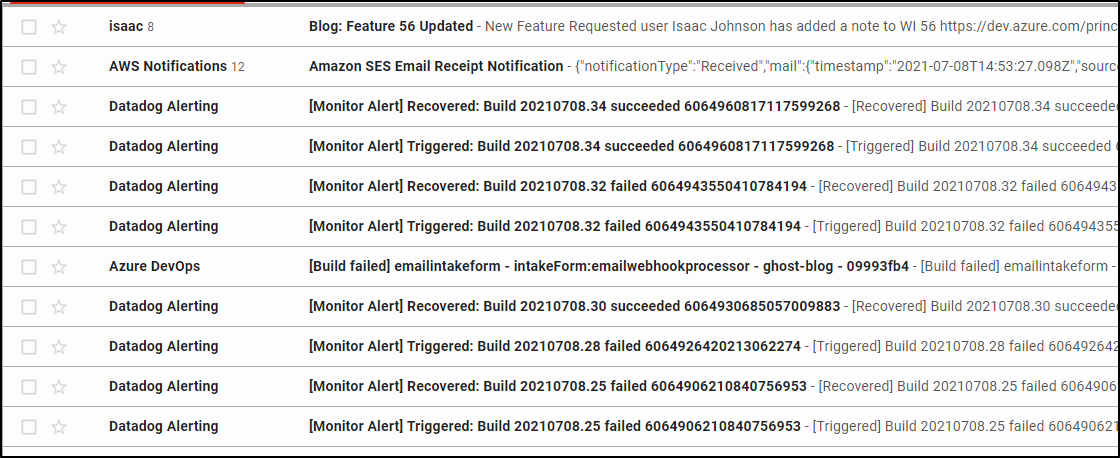

if [[ "$USERTLD" == "dontemailme.com" ]]; then

# do not CC user

echo "<h1>New Feature Requested</h1><p>user ${{ parameters.emailbasedUpdates.fromname }} has added a note to WI ${{ parameters.emailbasedUpdates.workitem }} </p><p>https://dev.azure.com/princessking/ghost-blog/_workitems/edit/$WIID/</p><br/><br/>Kind Regards,<br/>Isaac Johnson" | s-nail -s "Blog: Feature $WIID Updated" -M "text/html" -S smtp=email-smtp.us-east-1.amazonaws.com:587 -S smtp-use-starttls -S ssl-verify=ignore -S smtp-auth=login -S smtp-auth-user=$(SMTPAUTHUSER) -S smtp-auth-password=$(SMPTAUTHPASS) -c isaac.johnson@gmail.com -r isaac@freshbrewed.science isaac.johnson@gmail.com

else

# may not work

echo "<h1>New Feature Requested</h1><p>user ${{ parameters.emailbasedUpdates.fromname }} has added a note to WI ${{ parameters.emailbasedUpdates.workitem }}</p><p>https://dev.azure.com/princessking/ghost-blog/_workitems/edit/$WIID/</p><br/><br/>Kind Regards,<br/>Isaac Johnson" | s-nail -s "Blog: Feature $WIID Updated" -M "text/html" -S smtp=email-smtp.us-east-1.amazonaws.com:587 -S smtp-use-starttls -S ssl-verify=ignore -S smtp-auth=login -S smtp-auth-user=$(SMTPAUTHUSER) -S smtp-auth-password=$(SMPTAUTHPASS) -c ${{ parameters.emailbasedUpdates.fromemail }} -r isaac@freshbrewed.science isaac.johnson@gmail.com

fi

cat >valuesLA.json <<EOLA

{

"requestorEmail": "${{ parameters.emailbasedUpdates.fromemail }}",

"emailSummary": "Updated WI $WIID",

"workitemID": "$WIID",

"workitemURL": "https://dev.azure.com/princessking/ghost-blog/_workitems/edit/$WIID/",

"desitinationEmail": "isaac.johnson@gmail.com"

}

EOLA

#curl -v -X POST -d @valuesLA.json -H "Content-type: application/json" "https://prod-34.eastus.logic.azure.com:443/workflows/851f197339fa4f09b1517ebf712efdcc/triggers/manual/paths/invoke?api-version=2016-10-01&sp=%2Ftriggers%2Fmanual%2Frun&sv=1.0&sig=asdfasdfasdfasdfasdfasdfasdf"

displayName: 'Email Me'Then verify with curl:

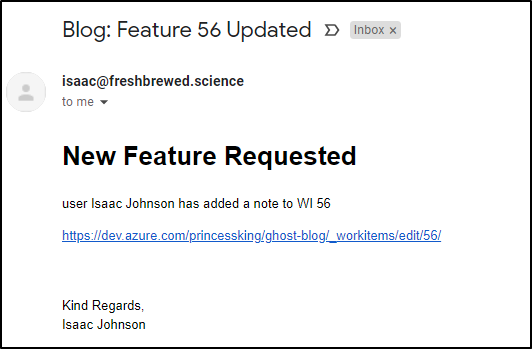

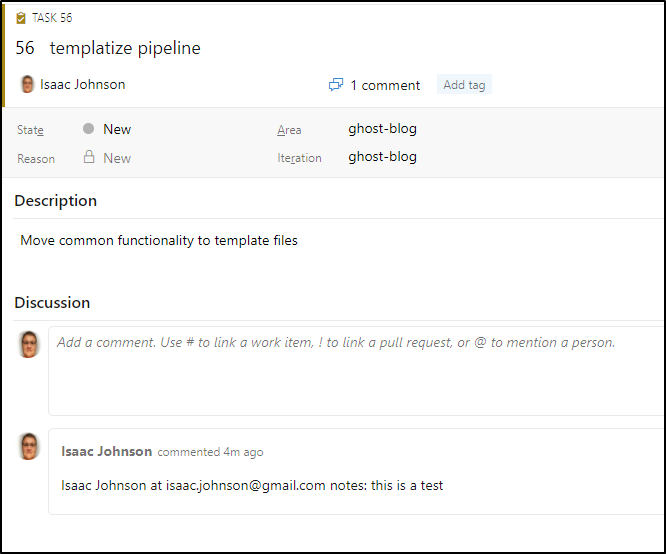

```shell
$ curl -X POST -H 'Content-Type: application/json' -d '{"fromname":"Isaac Johnson","fromemail":"isaac.johnson@gmail.com","workitem":"56","comment":"this is a test"}' 'https://dev.azure.com/princessking/_apis/public/distributedtask/webhooks/emailbasedUpdates?api-version=6.0-preview'
```

which shows we triggered the email notifications from SES.

And lastly, we can verify that it worked.

Updating Containerized NodeJS App

Tying this into the Node.js app:

```javascript
// using @ronomon/mime
// (app is the Express app from the earlier app.js)
const https = require('https')
const MIME = require('@ronomon/mime')

app.post('/mysqsforsns', (req, res) => {
  // The SQS body is the SNS envelope; "Message" holds the SES notification as a JSON string
  var mymsg = JSON.parse(req.body.Message);
  //console.log(mymsg.content);

  // "content" is the raw MIME email
  var buf = Buffer.from(mymsg.content, 'utf8')
  var mime = new MIME.Message(buf);
  /*
  console.log(mime.body.toString());
  console.log(mime.to);
  console.log(mime.from[0].name.toString());
  console.log(mime.from[0].email.toString());
  console.log(mime.parts[0].body.toString());
  console.log(mime.parts[0].contentType.value);
  */

  // work item references look like REQ56
  const re = /REQ(\d+)/i;

  mime.parts.forEach(element => {
    console.log("element...");
    console.log(element.contentType.value);
    console.log(element.body.toString());
    if (element.contentType.value === "text/plain") {
      console.log("HERE IS PLAIN")
    }

    var str = element.body.toString();
    if (re.test(str)) {
      console.log("MATCHED")
      var found = str.match(re);
      console.log(found[1]);

      // due to all the complicated messages we may get, just base64 it and send that as the payload
      let strbuff = Buffer.from(str, 'utf8')
      let b64data = strbuff.toString('base64')

      const data = JSON.stringify({
        fromname: mime.from[0].name.toString(),
        fromemail: mime.from[0].email.toString(),
        workitem: found[1],
        comment: b64data
      });

      console.log("str--------")
      console.log(str)
      console.log("b64data--------")
      console.log(b64data)

      const options = {
        hostname: 'dev.azure.com',
        port: 443,
        path: '/princessking/_apis/public/distributedtask/webhooks/emailbasedUpdates?api-version=6.0-preview',
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          // byte length, not character count, in case names contain non-ASCII text
          'Content-Length': Buffer.byteLength(data)
        }
      }

      // azReq/azRes so the outbound request doesn't shadow Express's req/res
      const azReq = https.request(options, azRes => {
        console.log(`statusCode: ${azRes.statusCode}`)
        azRes.on('data', d => {
          process.stdout.write(d)
        })
      })

      azReq.on('error', error => {
        console.error(error)
      })

      azReq.write(data)
      azReq.end()
    }
  });

  res.status(200).send()
})
```

The key thing I worked out over time is that escaping the text to pass it in the JSON payload was overly challenging. In the end, to keep it simple (KISS), I just base64-encoded it and sent it along.
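Pulled out of the handler, the request-building part can be sketched as a small pure function. This is an illustration under assumptions. `buildWebhookRequest` and the `AZDO_ORG`/`AZDO_WEBHOOK` environment variables are hypothetical; reading names from the environment is one way to externalize the webhook name, as the next steps suggest. The defaults are the org and webhook name used in this post. The sketch sets `Content-Length` with `Buffer.byteLength`, because a string's `.length` counts UTF-16 code units rather than bytes.

```javascript
// Sketch: build the AzDO incoming-webhook request (options + body) the container sends.
function buildWebhookRequest({ fromname, fromemail, workitem, comment }, env = process.env) {
  const org = env.AZDO_ORG || 'princessking';
  const webhook = env.AZDO_WEBHOOK || 'emailbasedUpdates';

  const body = JSON.stringify({
    fromname,
    fromemail,
    workitem: String(workitem),
    // base64 sidesteps JSON and shell escaping of arbitrary email text;
    // the pipeline reverses it with `base64 --decode`
    comment: Buffer.from(comment, 'utf8').toString('base64'),
  });

  const options = {
    hostname: 'dev.azure.com',
    port: 443,
    path: `/${encodeURIComponent(org)}/_apis/public/distributedtask/webhooks/` +
      `${encodeURIComponent(webhook)}?api-version=6.0-preview`,
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      // bytes, not characters: names and bodies may contain non-ASCII text
      'Content-Length': Buffer.byteLength(body),
    },
  };
  return { options, body };
}

// Example with a non-ASCII sender name; {} means "use the defaults"
const { options, body } = buildWebhookRequest(
  { fromname: 'Zo\u00eb Example', fromemail: 'zoe@example.com', workitem: 56, comment: "It's great!\nThanks" },
  {}
);
console.log(options.path);
console.log(options.headers['Content-Length'], body.length);
```

The result plugs into `https.request(options, callback).end(body)`.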

Because the comment now arrives base64-encoded, I needed to update my emailwebhook pipeline to decode it on the fly:

```yaml
steps:
- script: |
    echo Add other tasks to build, test, and deploy your project.
    echo "fromname: ${{ parameters.emailbasedUpdates.fromname }}"
    echo "fromemail: ${{ parameters.emailbasedUpdates.fromemail }}"
    echo "workitem: ${{ parameters.emailbasedUpdates.workitem }}"

    # passed in value is b64 encoded
    echo ${{ parameters.emailbasedUpdates.comment }} | base64 --decode > rawDescription
    set -x
    echo "comment"
    cat rawDescription

    cat rawDescription | sed ':a;N;$!ba;s/\n/<br\/>/g' | sed "s/'/\'/g" | sed 's/"/\"/g' > inputDescription
    echo "input description: `cat inputDescription`"

    cat >$(Pipeline.Workspace)/updatewi.sh <<EOL
    set -x
    export AZURE_DEVOPS_EXT_PAT=$(AZDOTOKEN)
    az boards work-item update --org https://dev.azure.com/princessking --id ${{ parameters.emailbasedUpdates.workitem }} --discussion '${{ parameters.emailbasedUpdates.fromname }} at ${{ parameters.emailbasedUpdates.fromemail }} notes: `cat inputDescription | tr -d '\n'`' > azresp.json
    EOL

    # the script lives in $(Pipeline.Workspace), not the default working directory
    chmod u+x $(Pipeline.Workspace)/updatewi.sh
    echo "updatewi.sh:"
    cat $(Pipeline.Workspace)/updatewi.sh
    echo "have a nice day."
  displayName: 'Check webhook payload'
```

This line here:

```shell
cat rawDescription | sed ':a;N;$!ba;s/\n/<br\/>/g' | sed "s/'/\'/g" | sed 's/"/\"/g' > inputDescription
```

joins the lines with `<br/>` (removing the newlines) and is meant to neutralize single and double quotes that might trip up the az CLI.
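One caveat with that line: bash treats a backslash literally inside a single-quoted string, so `\'` cannot escape a quote inside the generated `--discussion '...'` argument. A common POSIX idiom is to replace each `'` with `'\''`, which closes the quote, adds an escaped quote, and reopens it. Below is a minimal sketch of that transform in Node, together with the newline-to-`<br/>` step. `escapeForSingleQuotes` is a hypothetical helper, not part of the repo, and its output is meant to sit between the existing single quotes.

```javascript
// Sketch: make email text safe to place between the single quotes of a bash
// argument such as --discussion '...'. Each ' becomes '\'' (close quote,
// escaped quote, reopen). Newlines become <br/>, roughly like the sed loop.
function escapeForSingleQuotes(text) {
  return text
    .replace(/\r?\n/g, '<br/>')
    .replace(/'/g, "'\\''");
}

const note = "You're good enough,\nYou're smart enough";
console.log("--discussion '" + escapeForSingleQuotes(note) + "'");
// --discussion 'You'\''re good enough,<br/>You'\''re smart enough'
```

Base64 still does the heavy lifting in transit. This step only matters at the point where the text is pasted into a shell command.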

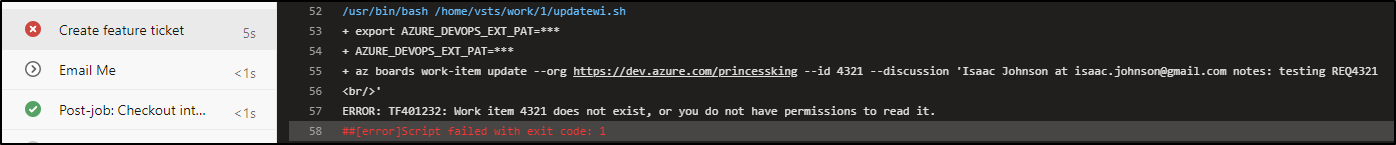

Note: if you reference a non-existent work item, the pipeline will fail (as it should).

Testing

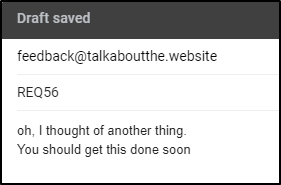

and I quickly see that SES was triggered:

And I can see the pod kicked off:

```shell
$ kubectl logs nodesqswatcher-deployment-7b6d8c67dd-r8t7k nodesqswatcher
sqs event consumer app listening on port 8080!
--000000000000956f6405c69dbfd5
Content-Type: text/plain; charset="UTF-8"

oh, I thought of another thing.
You should get this done soon

--000000000000956f6405c69dbfd5
Content-Type: text/html; charset="UTF-8"

<div dir="ltr">oh, I thought of another thing.<div>You should get this done soon</div></div>

--000000000000956f6405c69dbfd5--
[ { name: '', email: 'feedback@talkaboutthe.website' } ]
Isaac Johnson
isaac.johnson@gmail.com
oh, I thought of another thing.
You should get this done soon
text/plain
element...
text/plain
oh, I thought of another thing.
You should get this done soon
HERE IS PLAIN
element...
text/html
<div dir="ltr">oh, I thought of another thing.<div>You should get this done soon</div></div>
[ { name: 'Isaac Johnson', email: 'isaac.johnson@gmail.com' } ]
mimefile created
stdout: part1 (text/plain)
part2 (text/html)
data:
oh, I thought of another thing.
You should get this done soon
data2:
<div dir="ltr">oh, I thought of another thing.<div>You should get this done soon</div></div>
```

But let's try mentioning it in the body instead of just the subject.

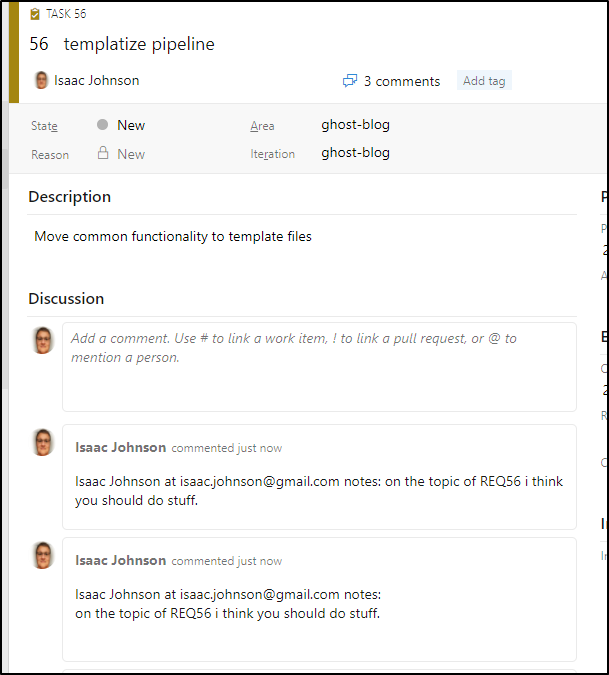

and we can see it updated. But since the code runs the match on every MIME part (and there is both a text and an HTML part), it updated the work item twice.
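One way to avoid the double update is to scan a single representation of each email and de-duplicate the IDs before calling the webhook. This is a minimal sketch that assumes the parts have been mapped to plain `{ contentType, body }` objects; with @ronomon/mime those values would come from `element.contentType.value` and `element.body.toString()`. `workItemIdsFromParts` is hypothetical. Because it uses `matchAll` with a global regex, it also collects several `REQ<n>` references in one email, which touches on the multiple-tickets idea below.

```javascript
// Sketch: prefer the text/plain part, fall back to text/html, and return each
// referenced work item ID once, in order of first appearance.
function workItemIdsFromParts(parts) {
  const preferred =
    parts.find((p) => p.contentType === 'text/plain') ||
    parts.find((p) => p.contentType === 'text/html');
  if (!preferred) return [];
  const ids = new Set();
  for (const m of preferred.body.matchAll(/REQ(\d+)/gi)) {
    ids.add(m[1]);
  }
  return [...ids];
}

const parts = [
  { contentType: 'text/plain', body: 'See REQ56 and req57, also REQ56 again' },
  { contentType: 'text/html', body: '<div>See REQ56 and req57</div>' },
];
console.log(workItemIdsFromParts(parts)); // [ '56', '57' ]
```

Each returned ID would then get exactly one webhook call.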

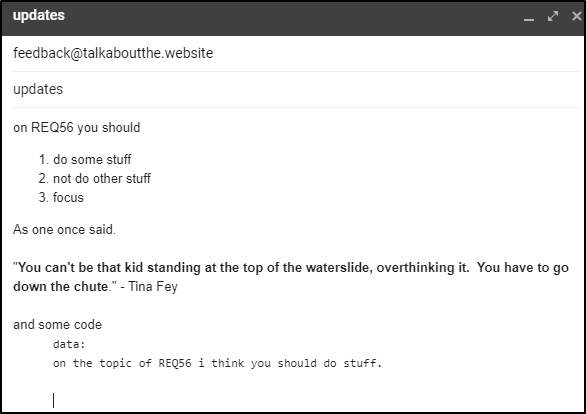

We can try a more complicated example as well

and we can see it set (albeit twice) in the ticket

Next steps:

I would want to externalize the webhook name so I would not need to rebuild the container to change it.

It might also be nice to handle email attachments.

It would also be nice to add more smarts to the parsing, for instance, handling multiple tickets.

And it certainly needs to be made more robust, with more unit tests, before going live.

Summary

We created a brand new domain and associated it with AWS Simple Email Service (SES). We tied that to one SNS topic for notifications and to another SNS topic that feeds an SQS queue. With messages landing in the queue, we were able to use Dapr to trigger a containerized microservice that parses each email and, if it references a work item, updates that work item with the email body as a comment.

By leveraging Webhooks, we avoided needing to use Personal Access Tokens (PAT) anywhere in the flow.

This system, when paired with our email ingestion form, can function as (at least the start of) an externalized support system that lets external users create and update work items without needing to be provided any access to the underlying DevOps system.

In fact, switching this to Github issues or JIRA tickets would be just a matter of changing CLIs in the container. The rest (SES, SNS, SQS, Dapr) would largely remain unchanged.

One could imagine moving the AzDO updater itself to an external service (say, if the webhook model did not satisfy an organization's security policies).

One nice thing was that throughout the testing, I had Datadog alerts set up to give me a heads-up on passes and fails:

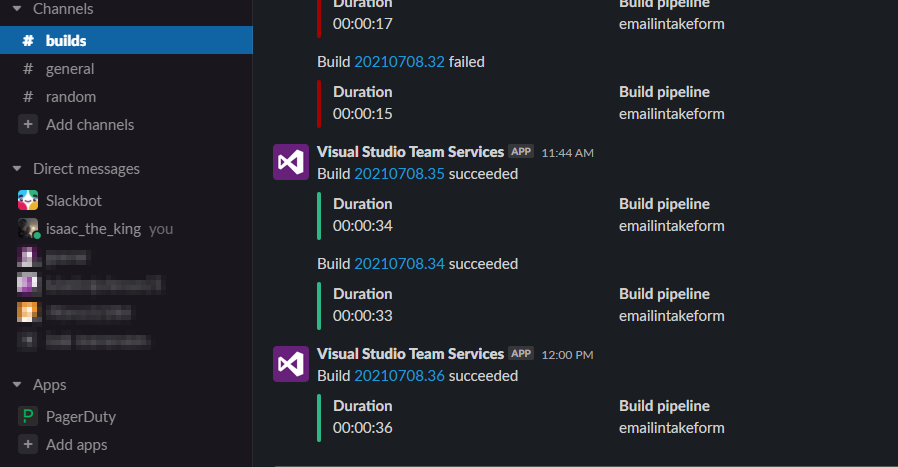

and I saw updates from our webhook in Slack as well:

I only use Teams sometimes because it is still rather lousy at handling multiple organizations, requiring me to sign in and out between home and work.