Published: Mar 12, 2024 by Isaac Johnson

Material for MkDocs is an actively developed documentation framework. It’s open source (MIT) and has 17.5k stars and 3.2k forks on GitHub. Its Getting Started page makes for quite an impressive landing page.

One can start using it as code simply by cloning the repo and running:

$ git clone https://github.com/squidfunk/mkdocs-material.git

$ pip install -e mkdocs-material

Today we’ll dig into Docker before pivoting to Kubernetes, YAML and Helm. We’ll even have some fun with a new chart that can dynamically populate cluster details!

MkDocs with Docker

Let’s start with Docker

I’ll make a directory for our docs

builder@builder-T100:~/mkdocs$ pwd

/home/builder/mkdocs

builder@builder-T100:~/mkdocs$ mkdir otherdocs

builder@builder-T100:~/mkdocs$ echo "# Howdy" > ./otherdocs/test.md

builder@builder-T100:~/mkdocs$

Create a basic configuration file

builder@builder-T100:~/mkdocs$ cat mkdocs.yml

site_name: My Documentation

docs_dir: otherdocs

Now start

$ docker run -d -p 4120:8000 --name=mkdocs -v /home/builder/mkdocs:/docs:rw --restart unless-stopped squidfunk/mkdocs-material

8a6409051b61df42fca4a2b08625e898c115cbe9916b60c1ff3d92da0d708996

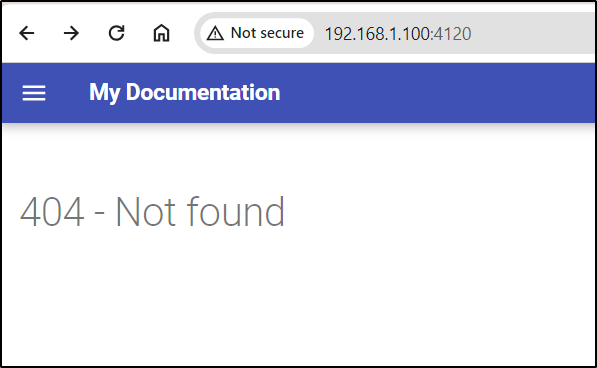

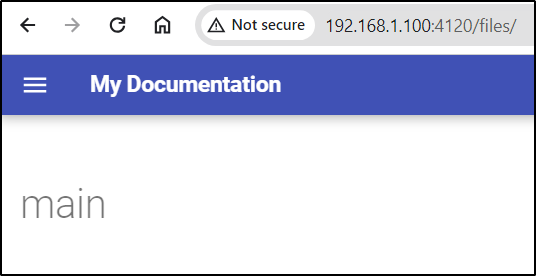

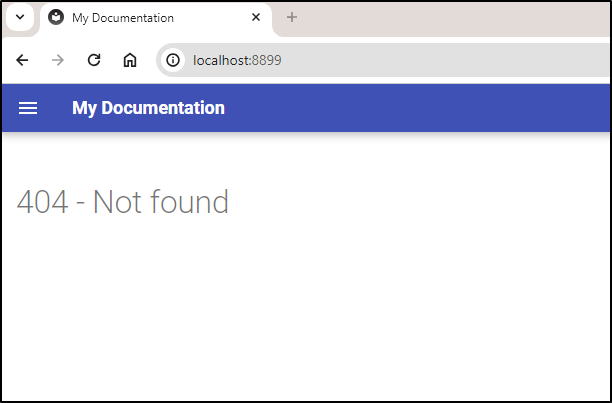

The root returns a 404 Not Found, as there is no index.md yet

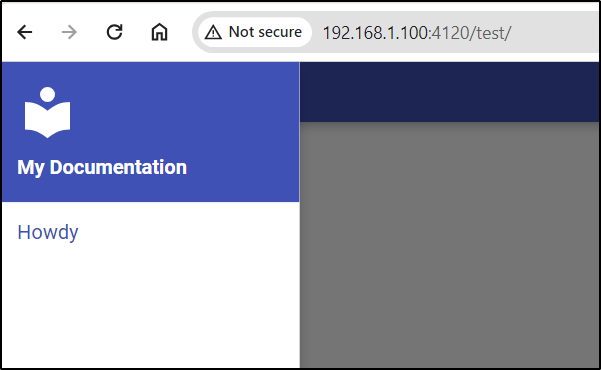

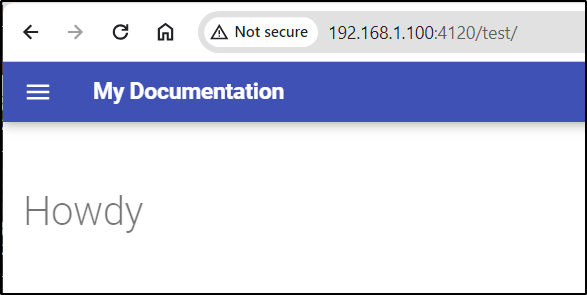

I can see the “Howdy” file and its contents

I’ll make something a bit better

$ echo -e "## My Introduction\n\nHi Buddy\n" > index.md

$ echo "# About FB Docs\n\n- Docs are fun\n-Docs are cool\n" > about.md

Then we can think about exposing a directory, so I’ll add some files there

builder@builder-T100:~/mkdocs$ mkdir files

builder@builder-T100:~/mkdocs$ echo "1" > files/1.md

builder@builder-T100:~/mkdocs$ echo "2" > files/2.md

Now to use them

$ cat mkdocs.yml

site_name: My Documentation

docs_dir: otherdocs

nav:

  - Introduction: 'index.md'

  - 'about.md'

  - 'Issue Tracker': 'https://example.com/'

  - 'Files': '/files/'

I quickly realized I had neglected to put them in ‘otherdocs’, so I fixed that

builder@builder-T100:~/mkdocs$ mv index.md otherdocs/

builder@builder-T100:~/mkdocs$ mv about.md otherdocs/

builder@builder-T100:~/mkdocs$ mv files otherdocs/

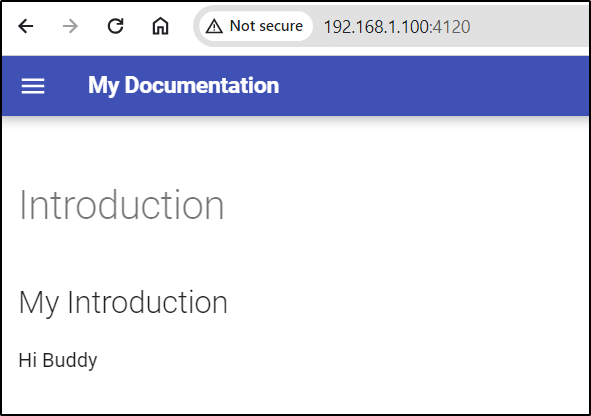

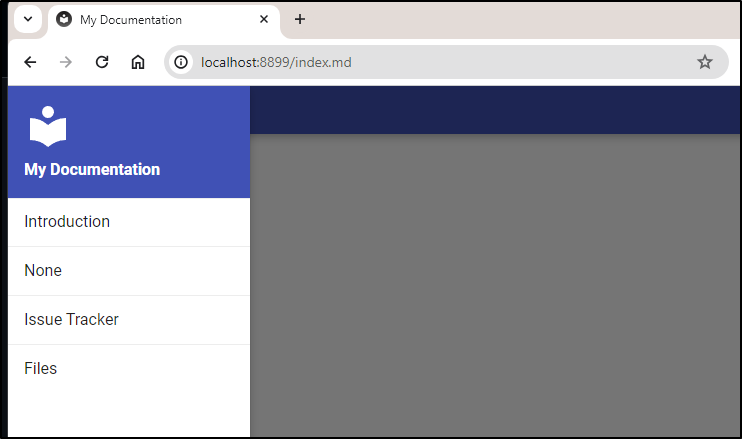

The Introduction page worked

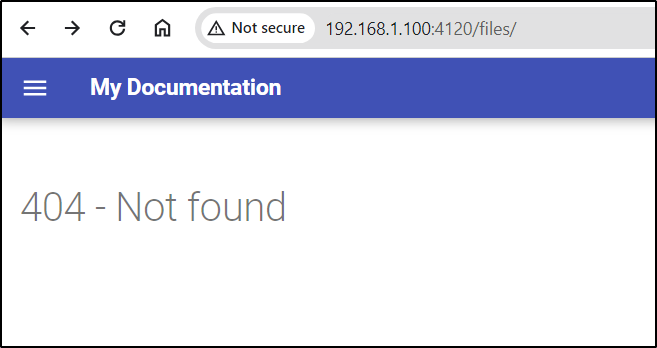

but files doesn’t work

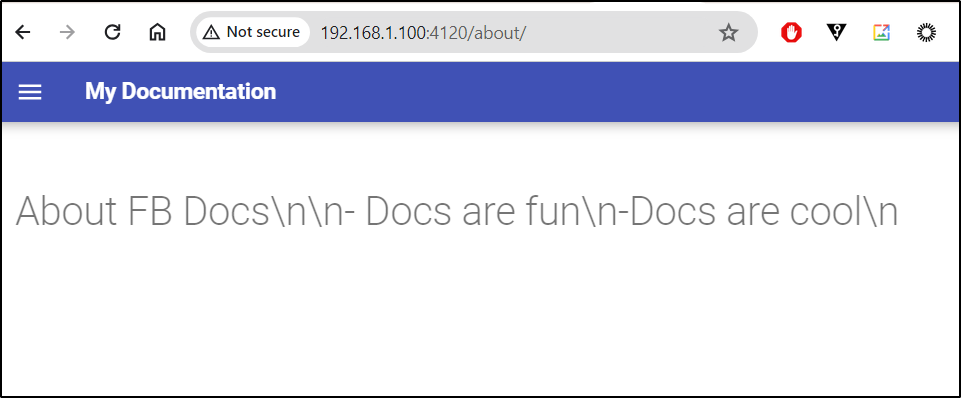

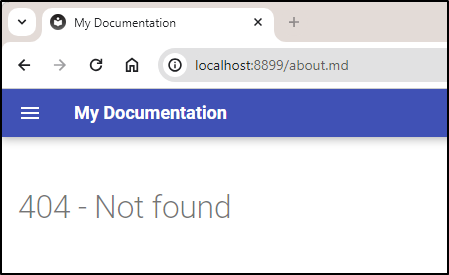

and the about page didn’t work

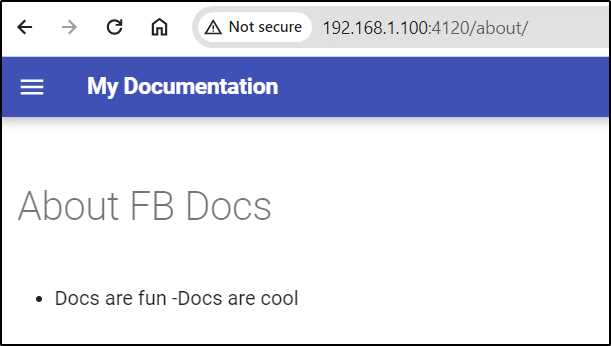

That was an easy fix: the first echo was missing the -e flag, so the \n sequences were never expanded

builder@builder-T100:~/mkdocs$ echo -e "# About FB Docs\n\n- Docs are fun\n-Docs are cool\n" > otherdocs/about.md
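A minimal sketch of a more portable way to write that file, assuming a POSIX shell: `printf` expands `\n` itself, so it does not depend on whether `echo` honors `-e`. The `write_about` helper is hypothetical, and it writes both bullets with a space after the dash so they render as list items.

```shell
# Hypothetical helper: write the about page with printf, which expands \n
# in its format string in bash, dash and other POSIX shells alike.
write_about() {
  # $1 is the path of the Markdown file to create
  printf '# About FB Docs\n\n- Docs are fun\n- Docs are cool\n' > "$1"
}

# Example usage against a throwaway file
about_file=$(mktemp)
write_about "$about_file"
cat "$about_file"
```

Pointing the helper at `otherdocs/about.md` would replace the echo above.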

And then create an index.md

builder@builder-T100:~/mkdocs/otherdocs/files$ echo "# main" > index.md

Kubernetes

At first I thought of making a simple deployment with a PVC

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: mkdocs-pvc

spec:

  accessModes:

    - ReadWriteOnce

  storageClassName: ""

  resources:

    requests:

      storage: 5Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: mkdocs

spec:

  replicas: 1

  selector:

    matchLabels:

      app: mkdocs

  template:

    metadata:

      labels:

        app: mkdocs

    spec:

      containers:

      - name: mkdocs

        image: squidfunk/mkdocs-material

        ports:

        - containerPort: 8000

        volumeMounts:

        - name: mkdocs-storage

          mountPath: /docs

      volumes:

      - name: mkdocs-storage

        persistentVolumeClaim:

          claimName: mkdocs-pvc

But then I realized I would need to supply the mkdocs.yml file

So I changed that to use a ConfigMap instead

$ cat mkdocs.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: mkdocs-config

data:

mkdocs.yml: |

site_name: My Documentation

docs_dir: otherdocs

nav:

- Introduction: 'index.md'

- 'about.md'

- 'Issue Tracker': 'https://example.com/'

- 'Files': '/files/'

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: mkdocs-files-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mkdocs-deployment

spec:

replicas: 1

selector:

matchLabels:

app: mkdocs

template:

metadata:

labels:

app: mkdocs

spec:

containers:

- name: mkdocs

image: squidfunk/mkdocs-material

ports:

- containerPort: 8000

volumeMounts:

- name: config-volume

mountPath: /docs/mkdocs.yml

subPath: mkdocs.yml

- name: files-volume

mountPath: /docs/otherdocs

volumes:

- name: config-volume

configMap:

name: mkdocs-config

- name: files-volume

persistentVolumeClaim:

claimName: mkdocs-files-pvc

---

apiVersion: v1

kind: Service

metadata:

name: mkdocs-service

spec:

selector:

app: mkdocs

ports:

- protocol: TCP

port: 80

targetPort: 8000

type: LoadBalancer

Now create

$ kubectl create ns mkdocs

namespace/mkdocs created

$ kubectl apply -f ./mkdocs.yaml -n mkdocs

configmap/mkdocs-config created

persistentvolumeclaim/mkdocs-files-pvc created

deployment.apps/mkdocs-deployment created

service/mkdocs-service created

That’s now running

$ kubectl get pods -n mkdocs

NAME READY STATUS RESTARTS AGE

mkdocs-deployment-746dc6857-rc6zt 1/1 Running 0 22s

I can now test accessing the service

$ kubectl port-forward svc/mkdocs-service 8899:80 -n mkdocs

Forwarding from 127.0.0.1:8899 -> 8000

Forwarding from [::1]:8899 -> 8000

Handling connection for 8899

Handling connection for 8899

Handling connection for 8899

While my files are absent, I can at least see the configuration is good

Populating content with cronjobs

Let’s do something interesting. How about a report of node details?

We’ll start by creating a CronJob that gets the node details, converts to markdown and then stores into a configmap

$ cat nodeDetailsCron.yaml

apiVersion: batch/v1

kind: CronJob

metadata:

name: node-details-cronjob

spec:

schedule: "*/5 * * * *"

jobTemplate:

spec:

template:

spec:

containers:

- name: node-details

image: bitnami/kubectl:latest

command:

- /bin/sh

- -c

- |

NODE_DETAILS=$(kubectl get nodes -o json | jq -r '.items[] | {name: .metadata.name, status: .status.conditions[] | select(.type == "Ready") .status, age: .metadata.creationTimestamp, version: .status.nodeInfo.kubeletVersion} | @tsv' | awk 'BEGIN{print "|Name|Status|Age|Version|\n|---|---|---|---|"} {print "|" $1 "|" $2 "|" $3 "|" $4 "|"}')

echo -e "apiVersion: v1\nkind: ConfigMap\nmetadata:\n name: mkdirs-node-details\ndata:\n node-details.md: |\n # Node Details\n\n $NODE_DETAILS" | kubectl apply -f -

restartPolicy: OnFailure

$ kubectl apply -f ./nodeDetailsCron.yaml -n mkdocs

cronjob.batch/node-details-cronjob created

This initially failed due to missing RBAC permissions

$ kubectl logs node-details-cronjob-28479895-zxxmj -n mkdocs

Error from server (Forbidden): nodes is forbidden: User "system:serviceaccount:mkdocs:default" cannot list resource "nodes" in API group "" at the cluster scope

error: error parsing STDIN: error converting YAML to JSON: yaml: line 9: did not find expected comment or line break

I’ll grant our pod SA those permissions and try again

$ cat crdsNodeDetails.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: node-list-role

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["list"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: node-list-rolebinding

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: node-list-role

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

namespace: mkdocs

name: configmap-reader

rules:

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["get","update","list","patch","create","delete"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: read-configmap

namespace: mkdocs

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

roleRef:

kind: Role

name: configmap-reader

apiGroup: rbac.authorization.k8s.io

$ kubectl apply -f ./crdsNodeDetails.yaml

clusterrole.rbac.authorization.k8s.io/node-list-role unchanged

clusterrolebinding.rbac.authorization.k8s.io/node-list-rolebinding unchanged

role.rbac.authorization.k8s.io/configmap-reader created

rolebinding.rbac.authorization.k8s.io/read-configmap created

$ kubectl delete -f nodeDetailsCron.yaml -n mkdocs

cronjob.batch "node-details-cronjob" deleted

$ kubectl apply -f nodeDetailsCron.yaml -n mkdocs

cronjob.batch/node-details-cronjob created

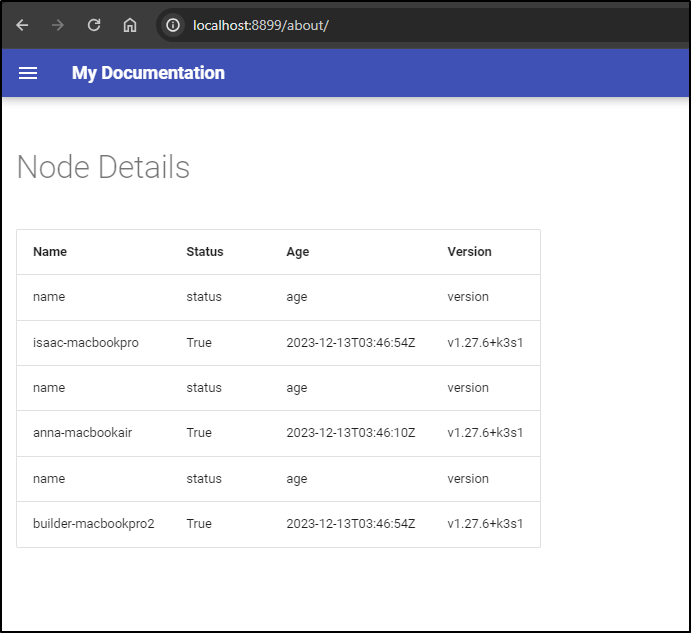

I can now see it worked and created a configmap

$ kubectl get cm -n mkdocs

NAME DATA AGE

kube-root-ca.crt 1 55m

mkdocs-config 1 55m

mkdirs-node-details 1 2m49s

$ kubectl get cm mkdirs-node-details -n mkdocs -o yaml

apiVersion: v1

data:

node-details.md: |

# Node Details

|Name|Status|Age|Version|

|---|---|---|---|

|name|status|age|version|

|isaac-macbookpro|True|2023-12-13T03:46:54Z|v1.27.6+k3s1|

|name|status|age|version|

|anna-macbookair|True|2023-12-13T03:46:10Z|v1.27.6+k3s1|

|name|status|age|version|

|builder-macbookpro2|True|2023-12-13T03:46:54Z|v1.27.6+k3s1|

kind: ConfigMap

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"v1","data":{"node-details.md":"# Node Details\n\n|Name|Status|Age|Version|\n|---|---|---|---|\n|name|status|age|version|\n|isaac-macbookpro|True|2023-12-13T03:46:54Z|v1.27.6+k3s1|\n|name|status|age|version|\n|anna-macbookair|True|2023-12-13T03:46:10Z|v1.27.6+k3s1|\n|name|status|age|version|\n|builder-macbookpro2|True|2023-12-13T03:46:54Z|v1.27.6+k3s1|\n"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"mkdirs-node-details","namespace":"mkdocs"}}

creationTimestamp: "2024-02-24T17:30:41Z"

name: mkdirs-node-details

namespace: mkdocs

resourceVersion: "45236354"

uid: e82f4e4d-c696-4946-bb8b-51ef92b5c660
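The manifest the job pipes into `kubectl apply` only parses if the Markdown is indented under the `|` block scalar. A minimal sketch of that step in isolation, assuming a POSIX shell with `sed`; `render_configmap` is a hypothetical helper (not part of the job above), and it only prints the manifest rather than applying it.

```shell
# Hypothetical helper: wrap Markdown from stdin in a ConfigMap manifest.
render_configmap() {
  # $1 is the ConfigMap name
  printf 'apiVersion: v1\nkind: ConfigMap\nmetadata:\n  name: %s\ndata:\n  node-details.md: |\n' "$1"
  # Indent every content line by four spaces so it sits inside the block scalar
  sed 's/^/    /'
}

# Example usage with a tiny table; in the job, the output would be piped to kubectl apply -f -
printf '# Node Details\n\n|Name|Status|\n|---|---|\n' | render_configmap mkdirs-node-details
```

Keeping the indentation in one place means a multi-line table cannot drift out of the block scalar, which is the kind of mistake that surfaces as a YAML parse error.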

At first I thought I could mount the generated file directly into the PVC-backed otherdocs directory

$ cat mkdocs.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: mkdocs-config

data:

mkdocs.yml: |

site_name: My Documentation

docs_dir: otherdocs

nav:

- Introduction: 'index.md'

- 'about.md'

- 'Issue Tracker': 'https://example.com/'

- 'Files': '/files/'

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: mkdocs-files-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mkdocs-deployment

spec:

replicas: 1

selector:

matchLabels:

app: mkdocs

template:

metadata:

labels:

app: mkdocs

spec:

containers:

- name: mkdocs

image: squidfunk/mkdocs-material

ports:

- containerPort: 8000

volumeMounts:

- name: config-volume

mountPath: /docs/mkdocs.yml

subPath: mkdocs.yml

- name: config-volume-nodedetails

mountPath: /docs/otherdocs/about.md

subPath: node-details.md

- name: files-volume

mountPath: /docs/otherdocs

volumes:

- name: config-volume

configMap:

name: mkdocs-config

- name: config-volume-nodedetails

configMap:

name: mkdirs-node-details

- name: files-volume

persistentVolumeClaim:

claimName: mkdocs-files-pvc

---

apiVersion: v1

kind: Service

metadata:

name: mkdocs-service

spec:

selector:

app: mkdocs

ports:

- protocol: TCP

port: 80

targetPort: 8000

type: LoadBalancer

That did not work

$ kubectl exec -it mkdocs-deployment-746dc6857-7vmh5 -n mkdocs -- /bin/sh

/docs # ls

mkdocs.yml otherdocs

/docs # ls otherdocs/

/docs #

However, I can create a symlink at startup to solve this

$ cat mkdocs.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: mkdocs-config

data:

mkdocs.yml: |

site_name: My Documentation

docs_dir: otherdocs

nav:

- Introduction: 'index.md'

- 'about.md'

- 'Issue Tracker': 'https://example.com/'

- 'Files': '/files/'

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: mkdocs-files-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mkdocs-deployment

spec:

replicas: 1

selector:

matchLabels:

app: mkdocs

template:

metadata:

labels:

app: mkdocs

spec:

containers:

- name: mkdocs

image: squidfunk/mkdocs-material

ports:

- containerPort: 8000

volumeMounts:

- name: config-volume

mountPath: /docs/mkdocs.yml

subPath: mkdocs.yml

- name: config-volume-nodedetails

mountPath: /docs/about.md

subPath: node-details.md

- name: files-volume

mountPath: /docs/otherdocs

lifecycle:

postStart:

exec:

command: ["/bin/sh", "-c", "ln -s /docs/about.md /docs/otherdocs/about.md"]

volumes:

- name: config-volume

configMap:

name: mkdocs-config

- name: config-volume-nodedetails

configMap:

name: mkdirs-node-details

- name: files-volume

persistentVolumeClaim:

claimName: mkdocs-files-pvc

---

apiVersion: v1

kind: Service

metadata:

name: mkdocs-service

spec:

selector:

app: mkdocs

ports:

- protocol: TCP

port: 80

targetPort: 8000

type: LoadBalancer

$ kubectl delete deployment mkdocs-deployment -n mkdocs && kubectl apply -f ./mkdocs.yaml -n mkdocs

deployment.apps "mkdocs-deployment" deleted

configmap/mkdocs-config unchanged

persistentvolumeclaim/mkdocs-files-pvc unchanged

deployment.apps/mkdocs-deployment created

service/mkdocs-service unchanged

Then test

$ kubectl port-forward svc/mkdocs-service 8899:80 -n mkdocs

Forwarding from 127.0.0.1:8899 -> 8000

Forwarding from [::1]:8899 -> 8000

Handling connection for 8899

Handling connection for 8899

This worked, but would only update if I manually rotated the pod

$ kubectl get pods -n mkdocs

NAME READY STATUS RESTARTS AGE

mkdocs-deployment-84594df66-gl4ft 1/1 Running 0 103m

node-details-cronjob-28480044-bknhk 0/1 Completed 0 3m1s

node-details-cronjob-28480045-57xqc 0/1 Completed 0 2m1s

node-details-cronjob-28480046-rdn64 0/1 Completed 0 61s

node-details-cronjob-28480047-8f556 0/1 ContainerCreating 0 1s

I’ll update the RBAC rules to let the job’s pod rotate other pods in the namespace

$ cat crdsNodeDetails.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: node-list-role

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["list"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: node-list-rolebinding

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: node-list-role

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

namespace: mkdocs

name: configmap-reader

rules:

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["get","update","list","patch","create","delete"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: read-configmap

namespace: mkdocs

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

roleRef:

kind: Role

name: configmap-reader

apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

namespace: mkdocs

name: podrotater

rules:

- apiGroups: [""]

resources: ["pods"]

verbs: ["get","update","list","patch","create","delete"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: podrotater-role

namespace: mkdocs

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

roleRef:

kind: Role

name: podrotater

apiGroup: rbac.authorization.k8s.io

$ kubectl apply -f crdsNodeDetails.yaml

clusterrole.rbac.authorization.k8s.io/node-list-role unchanged

clusterrolebinding.rbac.authorization.k8s.io/node-list-rolebinding unchanged

role.rbac.authorization.k8s.io/configmap-reader unchanged

rolebinding.rbac.authorization.k8s.io/read-configmap unchanged

role.rbac.authorization.k8s.io/podrotater created

rolebinding.rbac.authorization.k8s.io/podrotater-role created

I’ll then update the CronJob to bounce the pods after each update

$ cat nodeDetailsCron.yaml

apiVersion: batch/v1

kind: CronJob

metadata:

name: node-details-cronjob

spec:

schedule: "* * * * *"

jobTemplate:

spec:

template:

spec:

containers:

- name: node-details

image: bitnami/kubectl:latest

command:

- /bin/sh

- -c

- |

NODE_DETAILS=$(kubectl get nodes -o json | jq -r '.items[] | {name: .metadata.name, status: .status.conditions[] | select(.type == "Ready") .status, age: .metadata.creationTimestamp, version: .status.nodeInfo.kubeletVersion} | (keys_unsorted, [.[]]) | @tsv' | awk 'BEGIN{print "|Name|Status|Age|Version|\n |---|---|---|---|"} {print " |" $1 "|" $2 "|" $3 "|" $4 "|"}')

echo "apiVersion: v1\nkind: ConfigMap\nmetadata:\n name: mkdirs-node-details\ndata:\n node-details.md: |\n # Node Details\n\n $NODE_DETAILS" | kubectl apply -f -

kubectl delete pods -l app=mkdocs

restartPolicy: OnFailure

$ kubectl delete -f ./nodeDetailsCron.yaml -n mkdocs && sleep 1 && kubectl apply -f ./nodeDetailsCron.yaml -n mkdocs

cronjob.batch "node-details-cronjob" deleted

cronjob.batch/node-details-cronjob created

I tried a readinessProbe:

readinessProbe:

exec:

command: ["/bin/sh", "-c", "ln -s /docs/about.md /docs/otherdocs/about.md && exit 0"]

initialDelaySeconds: 5

periodSeconds: 2

timeoutSeconds: 1

successThreshold: 2

failureThreshold: 10

But that didn’t seem to work

NAME READY STATUS RESTARTS AGE

mkdocs-deployment-55545bdf9-dcvsd 0/1 Running 0 53s

I had to remove the old symbolic links stuck in the PV as they were blocking the probes

$ kubectl exec -it mkdocs-deployment-69c8dd564d-fcg8t -n mkdocs -- /bin/sh

/docs # rm -f ./otherdocs/about.md

/docs # rm -f ./otherdocs/about.m

/docs # exit
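One likely reason the `ln -s` probe never reported Ready: `ln -s` fails when the destination already exists, so only its first run can succeed, while `successThreshold: 2` needs two in a row. A minimal sketch, assuming a POSIX shell and a scratch directory standing in for `/docs` (the paths and variable names are illustrative only):

```shell
# Scratch directory standing in for /docs and /docs/otherdocs
workdir=$(mktemp -d)
printf '# Node Details\n' > "$workdir/about.md"
mkdir "$workdir/otherdocs"

# ln -s is not repeatable: the second call fails because the link already exists
first=0;  ln -s "$workdir/about.md" "$workdir/otherdocs/about.md" || first=$?
second=0; ln -s "$workdir/about.md" "$workdir/otherdocs/about.md" 2>/dev/null || second=$?

# After clearing the stale link, cp -f can run any number of times
rm -f "$workdir/otherdocs/about.md"
third=0;  cp -f "$workdir/about.md" "$workdir/otherdocs/about.md" || third=$?
fourth=0; cp -f "$workdir/about.md" "$workdir/otherdocs/about.md" || fourth=$?

echo "ln -s exit codes: $first, $second; cp -f exit codes: $third, $fourth"
```

A probe command has to be safe to run repeatedly, which is why a copy suits a probe better than a bare `ln -s`.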

I tweaked the deployment to copy the file and exit, this time in a startupProbe.

$ cat mkdocs.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: mkdocs-config

data:

mkdocs.yml: |

site_name: My Documentation

docs_dir: otherdocs

nav:

- Introduction: 'index.md'

- 'about.md'

- 'Issue Tracker': 'https://example.com/'

- 'Files': '/files/'

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: mkdocs-files-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mkdocs-deployment

spec:

replicas: 1

selector:

matchLabels:

app: mkdocs

template:

metadata:

labels:

app: mkdocs

spec:

containers:

- name: mkdocs

image: squidfunk/mkdocs-material

ports:

- name: app-port

containerPort: 8000

volumeMounts:

- name: config-volume

mountPath: /docs/mkdocs.yml

subPath: mkdocs.yml

- name: config-volume-nodedetails

mountPath: /docs/about.md

subPath: node-details.md

- name: files-volume

mountPath: /docs/otherdocs

startupProbe:

exec:

command: ["/bin/sh", "-c", "cp -f /docs/about.md /docs/otherdocs/about.md && exit 0"]

periodSeconds: 2

timeoutSeconds: 1

failureThreshold: 10

volumes:

- name: config-volume

configMap:

name: mkdocs-config

- name: config-volume-nodedetails

configMap:

name: mkdirs-node-details

- name: files-volume

persistentVolumeClaim:

claimName: mkdocs-files-pvc

---

apiVersion: v1

kind: Service

metadata:

name: mkdocs-service

spec:

selector:

app: mkdocs

ports:

- protocol: TCP

port: 80

targetPort: 8000

type: LoadBalancer

$ kubectl delete deployment mkdocs-deployment -n mkdocs && kubectl apply -f ./mkdocs.yaml -n mkdocs

deployment.apps "mkdocs-deployment" deleted

configmap/mkdocs-config unchanged

persistentvolumeclaim/mkdocs-files-pvc unchanged

deployment.apps/mkdocs-deployment created

service/mkdocs-service unchanged

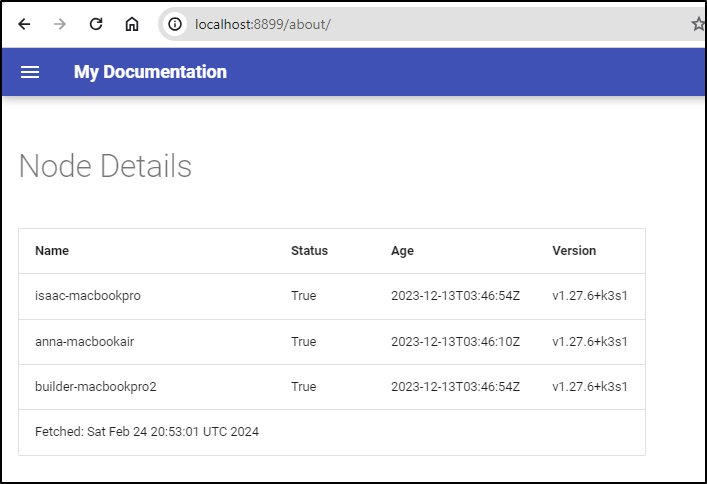

This came up without issue

$ kubectl get pods -n mkdocs

NAME READY STATUS RESTARTS AGE

mkdocs-deployment-5c9c78f64d-gwxv4 1/1 Running 0 4m15s

I’ll hop in to check

$ kubectl port-forward svc/mkdocs-service 8899:80 -n mkdocs

Forwarding from 127.0.0.1:8899 -> 8000

Forwarding from [::1]:8899 -> 8000

Handling connection for 8899

Handling connection for 8899

This worked

I’ll add back the cron

$ kubectl apply -f nodeDetailsCron.yaml

cronjob.batch/node-details-cronjob created

Which works

Improvements

I had a couple more issues I wanted to contend with. There was a blip while the pods cycled, so I think a PodDisruptionBudget (PDB) and a higher replica count would help.

You’ll notice the replica count is now set to 2 and there is a PDB

$ cat mkdocs.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: mkdocs-config

data:

mkdocs.yml: |

site_name: My Documentation

docs_dir: otherdocs

nav:

- Introduction: 'index.md'

- 'about.md'

- 'Issue Tracker': 'https://example.com/'

- 'Files': '/files/'

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: mkdocs-files-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: mkdocs-deployment

spec:

replicas: 2

selector:

matchLabels:

app: mkdocs

template:

metadata:

labels:

app: mkdocs

spec:

containers:

- name: mkdocs

image: squidfunk/mkdocs-material

ports:

- name: app-port

containerPort: 8000

volumeMounts:

- name: config-volume

mountPath: /docs/mkdocs.yml

subPath: mkdocs.yml

- name: config-volume-nodedetails

mountPath: /docs/about.md

subPath: node-details.md

- name: files-volume

mountPath: /docs/otherdocs

startupProbe:

exec:

command: ["/bin/sh", "-c", "cp -f /docs/about.md /docs/otherdocs/about.md && exit 0"]

periodSeconds: 2

timeoutSeconds: 1

failureThreshold: 10

volumes:

- name: config-volume

configMap:

name: mkdocs-config

- name: config-volume-nodedetails

configMap:

name: mkdirs-node-details

- name: files-volume

persistentVolumeClaim:

claimName: mkdocs-files-pvc

---

apiVersion: v1

kind: Service

metadata:

name: mkdocs-service

spec:

selector:

app: mkdocs

ports:

- protocol: TCP

port: 80

targetPort: 8000

type: LoadBalancer

---

apiVersion: policy/v1

kind: PodDisruptionBudget

metadata:

name: mkdocs-pdb

namespace: mkdocs

spec:

minAvailable: 1

selector:

matchLabels:

app: mkdocs

$ kubectl apply -f mkdocs.yaml

configmap/mkdocs-config unchanged

persistentvolumeclaim/mkdocs-files-pvc unchanged

deployment.apps/mkdocs-deployment configured

service/mkdocs-service unchanged

poddisruptionbudget.policy/mkdocs-pdb created

I noticed some errors in the cron

pod "mkdocs-deployment-5c9c78f64d-v79w7" deleted

E0224 20:29:02.004499 27 reflector.go:147] vendor/k8s.io/client-go/tools/watch/informerwatcher.go:146: Failed to watch *unstructured.Unstructured: unknown

E0224 20:29:03.335497 27 reflector.go:147] vendor/k8s.io/client-go/tools/watch/informerwatcher.go:146: Failed to watch *unstructured.Unstructured: unknown

E0224 20:29:05.576290 27 reflector.go:147] vendor/k8s.io/client-go/tools/watch/informerwatcher.go:146: Failed to watch *unstructured.Unstructured: unknown

E0224 20:29:11.370601 27 reflector.go:147] vendor/k8s.io/client-go/tools/watch/informerwatcher.go:146: Failed to watch *unstructured.Unstructured: unknown

So I updated the RBAC rules

$ cat crdsNodeDetails.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: node-list-role

rules:

- apiGroups: [""]

resources: ["nodes"]

verbs: ["list"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: node-list-rolebinding

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: node-list-role

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

namespace: mkdocs

name: configmap-reader

rules:

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["get","update","list","patch","create","delete"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: read-configmap

namespace: mkdocs

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

roleRef:

kind: Role

name: configmap-reader

apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

namespace: mkdocs

name: podrotater

rules:

- apiGroups: [""]

resources: ["pods"]

verbs: ["get","update","list","patch","create","delete"]

- apiGroups: [""]

resources: [""]

resourceNames: ["assets-prod", "assets-test"]

verbs: ["get", "patch"]

- apiGroups: ["*"]

resources: ["*"]

verbs: ["list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: podrotater-role

namespace: mkdocs

subjects:

- kind: ServiceAccount

name: default

namespace: mkdocs

roleRef:

kind: Role

name: podrotater

apiGroup: rbac.authorization.k8s.io

$ kubectl apply -f crdsNodeDetails.yaml

clusterrole.rbac.authorization.k8s.io/node-list-role unchanged

clusterrolebinding.rbac.authorization.k8s.io/node-list-rolebinding unchanged

role.rbac.authorization.k8s.io/configmap-reader unchanged

rolebinding.rbac.authorization.k8s.io/read-configmap unchanged

role.rbac.authorization.k8s.io/podrotater configured

rolebinding.rbac.authorization.k8s.io/podrotater-role unchanged

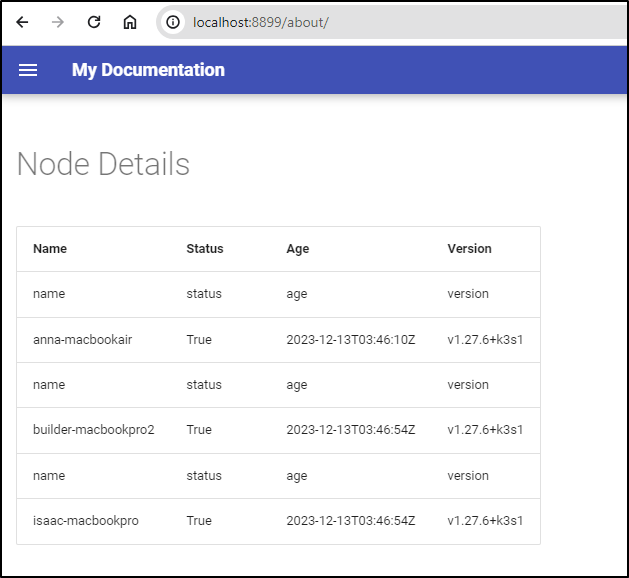

I also cleaned up the cron markdown output

$ cat nodeDetailsCron.yaml

apiVersion: batch/v1

kind: CronJob

metadata:

name: node-details-cronjob

spec:

schedule: "* * * * *"

jobTemplate:

spec:

template:

spec:

containers:

- name: node-details

image: bitnami/kubectl:latest

command:

- /bin/sh

- -c

- |

NODE_DETAILS=$(kubectl get nodes -o json | jq -r '.items[] | {name: .metadata.name, status: .status.conditions[] | select(.type == "Ready") .status, age: .metadata.creationTimestamp, version: .status.nodeInfo.kubeletVersion} | ([.[]]) | @tsv' | awk 'BEGIN{print "|Name|Status|Age|Version|\n |---|---|---|---|"} {print " |" $1 "|" $2 "|" $3 "|" $4 "|"}')

echo "apiVersion: v1\nkind: ConfigMap\nmetadata:\n name: mkdirs-node-details\ndata:\n node-details.md: |\n # Node Details\n\n $NODE_DETAILS\n Fetched: $(date)"

echo "apiVersion: v1\nkind: ConfigMap\nmetadata:\n name: mkdirs-node-details\ndata:\n node-details.md: |\n # Node Details\n\n $NODE_DETAILS\n Fetched: $(date)" | kubectl apply -f -

kubectl delete pods -l app=mkdocs

restartPolicy: OnFailure

I could now see the RBAC changes solved the errors in the cron logs

$ kubectl logs node-details-cronjob-28480137-shg7q -n mkdocs

apiVersion: v1

kind: ConfigMap

metadata:

name: mkdirs-node-details

data:

node-details.md: |

# Node Details

|Name|Status|Age|Version|

|---|---|---|---|

|isaac-macbookpro|True|2023-12-13T03:46:54Z|v1.27.6+k3s1|

|anna-macbookair|True|2023-12-13T03:46:10Z|v1.27.6+k3s1|

|builder-macbookpro2|True|2023-12-13T03:46:54Z|v1.27.6+k3s1|

Fetched: Sat Feb 24 20:57:01 UTC 2024

configmap/mkdirs-node-details configured

pod "mkdocs-deployment-5c9c78f64d-r84zg" deleted
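The table-building stage of that pipeline can be tried without a cluster. A minimal sketch, assuming tab-separated rows like the `@tsv` output above and a POSIX `awk`; `tsv_to_markdown` is a hypothetical name, and unlike the job's awk it splits on tabs explicitly with `-F '\t'`, so a value containing spaces would stay in one column.

```shell
# Hypothetical helper: turn tab-separated node rows on stdin into a Markdown table
tsv_to_markdown() {
  awk -F '\t' '
    BEGIN { print "|Name|Status|Age|Version|"; print "|---|---|---|---|" }
    { print "|" $1 "|" $2 "|" $3 "|" $4 "|" }
  '
}

# Example usage with one row copied from the log output above
printf 'isaac-macbookpro\tTrue\t2023-12-13T03:46:54Z\tv1.27.6+k3s1\n' | tsv_to_markdown
```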

I now have a nice working system:

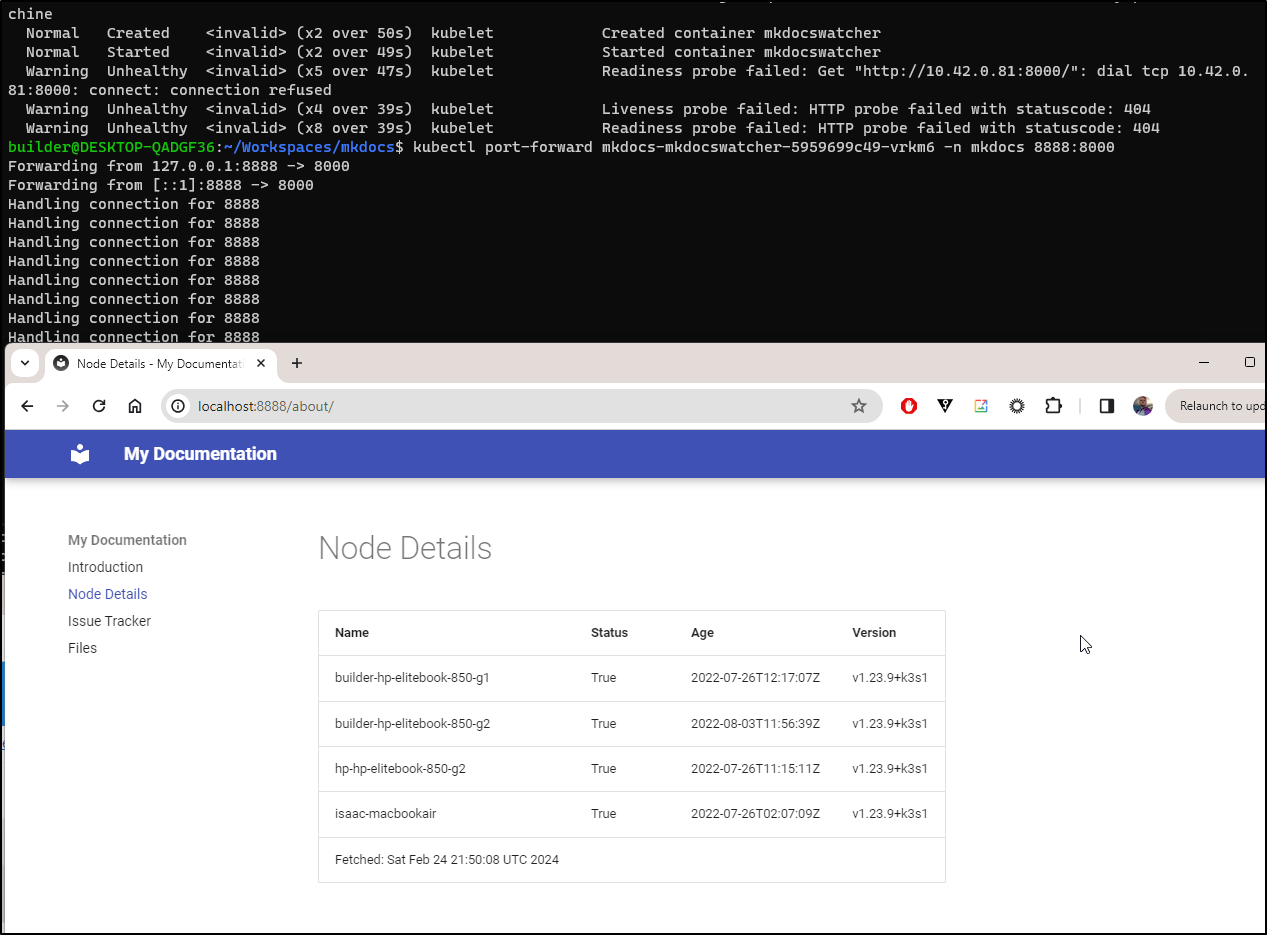

Helm

However, it is still a mess of Kubernetes YAML

I built a Helm chart and installed it.

$ helm install mkdocs --create-namespace -n mkdocs ./mkdocsWatcher/

NAME: mkdocs

LAST DEPLOYED: Sat Feb 24 15:39:30 2024

NAMESPACE: mkdocs

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace mkdocs -l "app.kubernetes.io/name=mkdocswatcher,app.kubernetes.io/instance=mkdocs" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace mkdocs $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace mkdocs port-forward $POD_NAME 8080:$CONTAINER_PORT

With the default 5min cron, it took 5 min to get my first error

NAME READY STATUS RESTARTS AGE

mkdocs-mkdocswatcher-cronjob-28480185-vp2b7 0/1 Completed 0 101s

mkdocs-mkdocswatcher-756bbcf6c5-sgpk8 0/1 ErrImagePull 0 6m32s

From the describe

Warning Failed <invalid> (x3 over 14s) kubelet Failed to pull image "squidfunk:mkdocs-material": rpc error: code = Unknown desc = failed to pull and unpack image "docker.io/library/squidfunk:mkdocs-material": failed to resolve reference "docker.io/library/squidfunk:mkdocs-material": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed

While I see some probe failures, I can see that it is working

I fixed the probes by defining proper readiness and liveness probes: the readiness probe checks for the file created by the startupProbe, and the liveness probe checks that python is running

containers:

- name: {{ .Chart.Name }}

securityContext:

{{- toYaml .Values.securityContext | nindent 12 }}

image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"

imagePullPolicy: {{ .Values.image.pullPolicy }}

ports:

- name: http

containerPort: {{ .Values.service.port }}

protocol: TCP

readinessProbe:

exec:

command: ["/bin/sh", "-c", "test -f /docs/otherdocs/about.md"]

initialDelaySeconds: 5

periodSeconds: 5

failureThreshold: 3

livenessProbe:

exec:

command: ["/bin/sh", "-c", "pgrep python"]

initialDelaySeconds: 15

periodSeconds: 20

failureThreshold: 3

startupProbe:

exec:

command: ["/bin/sh", "-c", "cp -f /docs/about.md /docs/otherdocs/about.md && exit 0"]

periodSeconds: 2

timeoutSeconds: 1

failureThreshold: 10

volumeMounts:

- name: config-volume

mountPath: /docs/mkdocs.yml

subPath: mkdocs.yml

- name: config-volume-nodedetails

mountPath: /docs/about.md

subPath: node-details.md

- name: files-volume

mountPath: /docs/otherdocs

resources:

{{- toYaml .Values.resources | nindent 12 }}

A quick check showed my image reference was wrong (squidfunk:mkdocs-material instead of squidfunk/mkdocs-material). I updated the values yaml

image:

repository: squidfunk/mkdocs-material

pullPolicy: IfNotPresent

# Overrides the image tag whose default is the chart appVersion.

tag: "latest"
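The failed pull is consistent with how Docker-style image references are expanded: a name without a slash is treated as an official image under `docker.io/library`, and whatever follows the colon is the tag. A deliberately simplified sketch, assuming a POSIX shell; the `normalize_image` helper is hypothetical and ignores custom registries, ports and digests.

```shell
# Hypothetical helper: expand a short image reference the way the error message shows
normalize_image() {
  ref=$1
  # Split off the tag at the colon, defaulting to "latest" when there is none
  case "$ref" in
    *:*) repo=${ref%%:*}; tag=${ref##*:} ;;
    *)   repo=$ref; tag=latest ;;
  esac
  # Names without a slash land in the "library" namespace on Docker Hub
  case "$repo" in
    */*) printf 'docker.io/%s:%s\n' "$repo" "$tag" ;;
    *)   printf 'docker.io/library/%s:%s\n' "$repo" "$tag" ;;
  esac
}

# Example usage: the broken reference, then the corrected repository
normalize_image "squidfunk:mkdocs-material"
normalize_image "squidfunk/mkdocs-material"
```

The first call reproduces the `docker.io/library/squidfunk:mkdocs-material` name from the kubelet warning, which does not exist.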

And re-ran the install

$ helm upgrade --install mkdocs --create-namespace -n mkdocs ./mkdocsWatcher/

Release "mkdocs" has been upgraded. Happy Helming!

NAME: mkdocs

LAST DEPLOYED: Sat Feb 24 15:48:53 2024

NAMESPACE: mkdocs

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace mkdocs -l "app.kubernetes.io/name=mkdocswatcher,app.kubernetes.io/instance=mkdocs" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace mkdocs $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace mkdocs port-forward $POD_NAME 8080:$CONTAINER_PORT

This is now looking good

$ kubectl get pods -n mkdocs

NAME READY STATUS RESTARTS AGE

mkdocs-mkdocswatcher-cronjob-28480185-vp2b7 0/1 Completed 0 14m

mkdocs-mkdocswatcher-cronjob-28480190-4pxf8 0/1 Completed 0 9m30s

mkdocs-mkdocswatcher-cronjob-28480195-fsp7c 0/1 Completed 0 4m30s

mkdocs-mkdocswatcher-774496dc87-sv4gr 1/1 Running 0 74s

Ingress

We’ve usually set A records in AWS, but let’s use Azure here.

$ az account set --subscription "Pay-As-You-Go"

$ az network dns record-set a add-record -g idjdnsrg -z tpk.pw -n mkdocwatcher -a 75.73.224.240

{

"ARecords": [

{

"ipv4Address": "75.73.224.240"

}

],

"TTL": 3600,

"etag": "c40f5a9f-c895-4070-8188-d390e4d898eb",

"fqdn": "mkdocwatcher.tpk.pw.",

"id": "/subscriptions/d955c0ba-13dc-44cf-a29a-8fed74cbb22d/resourceGroups/idjdnsrg/providers/Microsoft.Network/dnszones/tpk.pw/A/mkdocwatcher",

"name": "mkdocwatcher",

"provisioningState": "Succeeded",

"resourceGroup": "idjdnsrg",

"targetResource": {},

"type": "Microsoft.Network/dnszones/A"

}

I’ll then define and apply an ingress

$ cat ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: azuredns-tpkpw

ingress.kubernetes.io/ssl-redirect: "true"

kubernetes.io/ingress.class: nginx

kubernetes.io/tls-acme: "true"

nginx.ingress.kubernetes.io/ssl-redirect: "true"

nginx.org/websocket-services: mkdocs-mkdocswatcher

name: mkdocs-mkdocswatcher

spec:

rules:

- host: mkdocwatcher.tpk.pw

http:

paths:

- backend:

service:

name: mkdocs-mkdocswatcher

port:

number: 8000

path: /

pathType: ImplementationSpecific

tls:

- hosts:

- mkdocwatcher.tpk.pw

secretName: mkdocs-mkdocswatcher-tls

$ kubectl apply -f ./ingress.yaml -n mkdocs

ingress.networking.k8s.io/mkdocs-mkdocswatcher created

Once I saw the cert created

$ kubectl get cert -n mkdocs

NAME READY SECRET AGE

mkdocs-mkdocswatcher-tls True mkdocs-mkdocswatcher-tls 97s

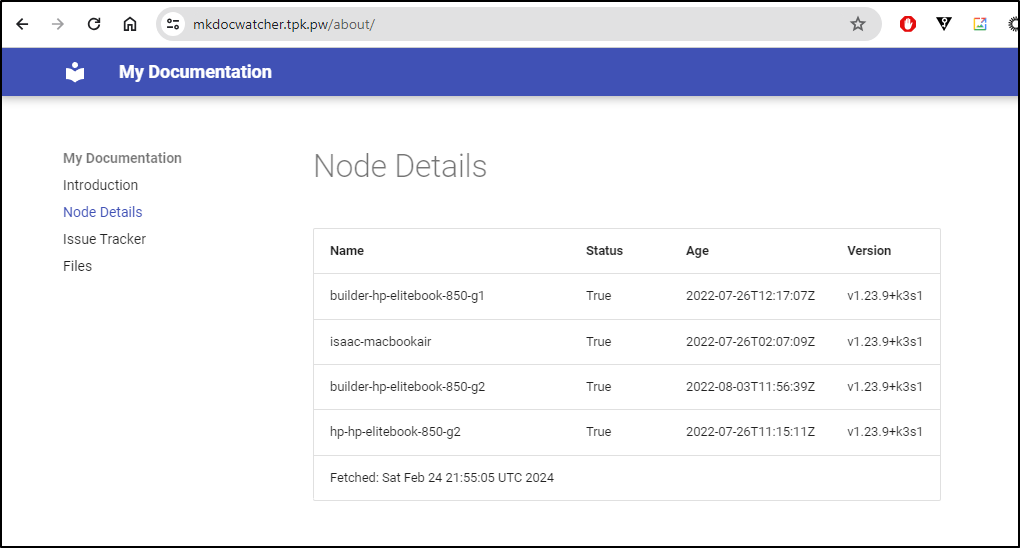

I could go to https://mkdocwatcher.tpk.pw/about/ and pull up the details
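Rather than re-running `kubectl get cert` until READY flips to True, `kubectl wait` can block on the certificate's Ready condition. This is a sketch under the assumption that cert-manager's Certificate resource is the only one in the cluster answering to `certificate`. The `wait_for_cert` wrapper name and the 300s default timeout are my own choices.

```shell
# wait_for_cert NAME NAMESPACE [TIMEOUT]
# Block until the cert-manager Certificate reports Ready, or time out.
# Hypothetical wrapper around `kubectl wait`; the timeout defaults to 300s.
wait_for_cert() (
  name=$1
  ns=$2
  timeout=${3:-300s}
  kubectl wait --for=condition=Ready "certificate/$name" -n "$ns" --timeout="$timeout"
)
```

Here that would be `wait_for_cert mkdocs-mkdocswatcher-tls mkdocs`.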

I now have something I’m satisfied enough with to share.

I uploaded the chart to a new public Github repo at https://github.com/idjohnson/mkdocClusterWatcher.

Uploading helm to a CR

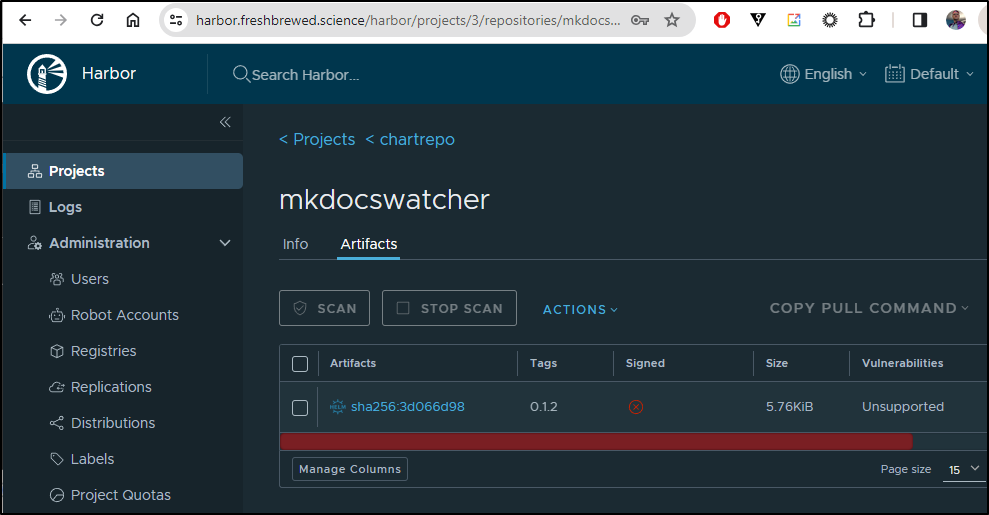

I’ll package my charts

$ helm package mkdocswatcher

Successfully packaged chart and saved it to: /home/builder/Workspaces/mkdocClusterWatcher/mkdocswatcher-0.1.2.tgz

Then push to Harbor

$ helm push mkdocswatcher-0.1.2.tgz oci://harbor.freshbrewed.science/chartrepo

Pushed: harbor.freshbrewed.science/chartrepo/mkdocswatcher:0.1.2

Digest: sha256:3d066d988f44bf1bbbf38a7ec0ad232dd146d552bbb02dd1a5366e4e995fd91d
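The package and push steps can be chained so the tarball name never has to be typed by hand. The sketch below parses the "Successfully packaged chart" line shown above to find the path. It assumes that message format stays the same and that you have already authenticated to the registry (for example with `helm registry login`, which is not shown in this post). Both function names are hypothetical.

```shell
# packaged_path
# Read `helm package` output on stdin and print the tarball path it reports.
packaged_path() {
  sed -n 's/^Successfully packaged chart and saved it to: //p'
}

# package_and_push CHART_DIR OCI_REPO
# Package CHART_DIR, then push the resulting tarball to OCI_REPO.
# Hypothetical helper; assumes a prior registry login.
package_and_push() (
  chart_dir=$1
  repo=$2
  tarball=$(helm package "$chart_dir" | packaged_path)
  # Stop early if the output did not contain a path we recognize
  [ -n "$tarball" ] || { echo "helm package produced no tarball" >&2; exit 1; }
  helm push "$tarball" "$repo"
)
```

The equivalent of the two commands above would be `package_and_push mkdocswatcher oci://harbor.freshbrewed.science/chartrepo`.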

We can check that we can pull it down if needed

builder@DESKTOP-QADGF36:~/Workspaces/mkdocClusterWatcher$ cd tmp/

builder@DESKTOP-QADGF36:~/Workspaces/mkdocClusterWatcher/tmp$ helm pull oci://harbor.freshbrewed.science/chartrepo/mkdocswatcher --version 0.1.2

Pulled: harbor.freshbrewed.science/chartrepo/mkdocswatcher:0.1.2

Digest: sha256:3d066d988f44bf1bbbf38a7ec0ad232dd146d552bbb02dd1a5366e4e995fd91d

builder@DESKTOP-QADGF36:~/Workspaces/mkdocClusterWatcher/tmp$ ls -ltra

total 16

drwxr-xr-x 6 builder builder 4096 Feb 24 16:37 ..

-rw-r--r-- 1 builder builder 5225 Feb 24 16:38 mkdocswatcher-0.1.2.tgz

drwxr-xr-x 2 builder builder 4096 Feb 24 16:38 .

Or install using the Harbor CR chart repo

$ helm upgrade --install mkdocs --create-namespace -n mkdocs oci://harbor.freshbrewed.science/chartrepo/mkdocswatcher

Pulled: harbor.freshbrewed.science/chartrepo/mkdocswatcher:0.1.2

Digest: sha256:3d066d988f44bf1bbbf38a7ec0ad232dd146d552bbb02dd1a5366e4e995fd91d

Release "mkdocs" has been upgraded. Happy Helming!

NAME: mkdocs

LAST DEPLOYED: Sat Feb 24 16:39:19 2024

NAMESPACE: mkdocs

STATUS: deployed

REVISION: 5

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace mkdocs -l "app.kubernetes.io/name=mkdocswatcher,app.kubernetes.io/instance=mkdocs" -o jsonpath="{.items[0].metadata.name}")

export CONTAINER_PORT=$(kubectl get pod --namespace mkdocs $POD_NAME -o jsonpath="{.spec.containers[0].ports[0].containerPort}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl --namespace mkdocs port-forward $POD_NAME 8080:$CONTAINER_PORT

I can also go to Harbor and see the chart is there
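The push, pull, and install outputs above all report the same `sha256` digest, which is a quick way to confirm that the artifact pulled is the one pushed. A minimal sketch for pulling that value out of saved output follows. The `extract_digest` name and the `push.log` and `pull.log` file names are hypothetical.

```shell
# extract_digest
# Read helm push/pull output on stdin and print the sha256 digest it reports.
# Hypothetical helper; matches lines of the form "Digest: sha256:<hex>".
extract_digest() {
  sed -n 's/^Digest: \(sha256:[0-9a-f][0-9a-f]*\)$/\1/p'
}
```

With the outputs saved to files, `[ "$(extract_digest < push.log)" = "$(extract_digest < pull.log)" ] && echo "digests match"` compares the two.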

Doing it fresh

Since I blasted my old cluster, let’s do it all again. However, this time, we can use the chart

I’ll clone the repo and copy the values file locally

builder@LuiGi17:~/Workspaces$ git clone https://github.com/idjohnson/mkdocClusterWatcher.git

Cloning into 'mkdocClusterWatcher'...

remote: Enumerating objects: 31, done.

remote: Counting objects: 100% (31/31), done.

remote: Compressing objects: 100% (27/27), done.

remote: Total 31 (delta 4), reused 27 (delta 3), pack-reused 0

Receiving objects: 100% (31/31), 22.54 KiB | 427.00 KiB/s, done.

Resolving deltas: 100% (4/4), done.

builder@LuiGi17:~/Workspaces$ cd mkdocClusterWatcher/

builder@LuiGi17:~/Workspaces/mkdocClusterWatcher$ ls

LICENSE README.md mkdocswatcher yaml

builder@LuiGi17:~/Workspaces/mkdocClusterWatcher$ cp ./mkdocswatcher/values.yaml ./
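If you would rather not clone the repo, the chart's default values can also be read straight from an OCI registry with `helm show values`. This is a sketch, assuming a Helm version with OCI support and that the chart is still published at the Harbor path used earlier. The `fetch_default_values` helper name is hypothetical.

```shell
# fetch_default_values OCI_REF VERSION [OUTFILE]
# Write the chart's default values to OUTFILE (values.yaml by default).
# Hypothetical helper around `helm show values`.
fetch_default_values() (
  ref=$1
  version=$2
  out=${3:-values.yaml}
  helm show values "$ref" --version "$version" > "$out"
)
```

For example: `fetch_default_values oci://harbor.freshbrewed.science/chartrepo/mkdocswatcher 0.1.2`.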

I can now tweak the values that are unique for my deployment

$ cat values.yaml

mkdocsConfig: |
  site_name: Freshbrewed Documentation
  docs_dir: otherdocs
  nav:
    - Introduction: 'index.md'
    - 'about.md'
    - 'Issue Tracker': 'https://example.com/'
    - 'Files': '/files/'
ingress:
  enabled: true
  className: ""
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
  hosts:
    - host: mkdocwatcher.tpk.pw
      paths:
        - path: /
          pathType: ImplementationSpecific
  tls:
    - secretName: mkdockwatcher-tls
      hosts:
        - mkdocwatcher.tpk.pw
cronjob:
  schedule: "*/5 * * * *"
pvc:
  storageClassName: managed-nfs-storage
  size: 5Gi

Then install

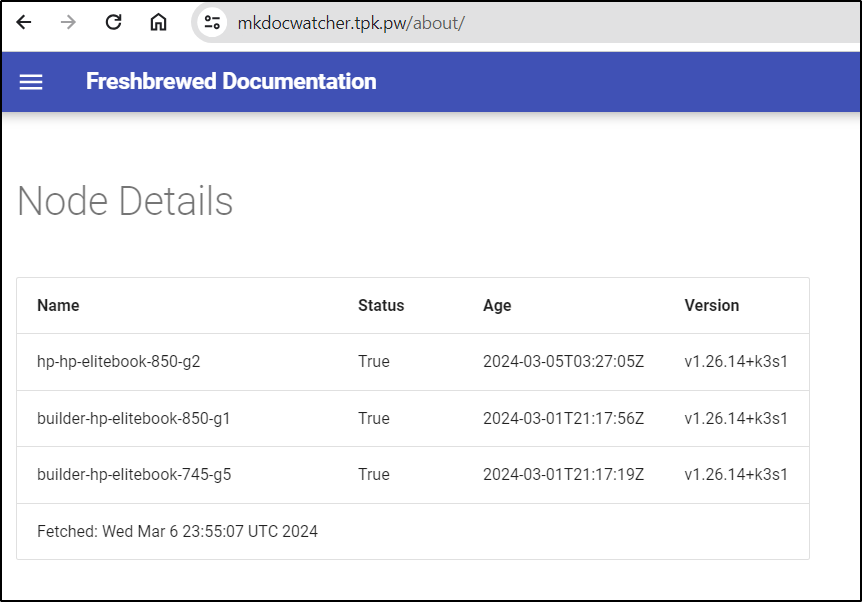

$ helm install mkdocswatcher -n mkdocswatcher --create-namespace -f ./values.yaml ./mkdocswatcher/

NAME: mkdocswatcher

LAST DEPLOYED: Wed Mar 6 17:52:30 2024

NAMESPACE: mkdocswatcher

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

https://mkdocwatcher.tpk.pw/

This worked!
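A quick way to confirm this from the shell is to poll the URL until it answers with a 200. The sketch below assumes `curl` is installed. The helper names, retry count, and delay are my own choices, not part of the chart.

```shell
# site_status URL
# Print the HTTP status code returned for URL.
site_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# wait_for_site URL [TRIES] [DELAY_SECONDS]
# Succeed as soon as URL returns 200; fail after TRIES attempts.
# Hypothetical helper; defaults to 10 tries, 5 seconds apart.
wait_for_site() (
  url=$1
  tries=${2:-10}
  delay=${3:-5}
  i=0
  while [ "$i" -lt "$tries" ]; do
    [ "$(site_status "$url")" = "200" ] && exit 0
    i=$((i + 1))
    sleep "$delay"
  done
  exit 1
)
```

For this deployment: `wait_for_site https://mkdocwatcher.tpk.pw/ && echo "site is up"`.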

However, I realized that one of the mistakes I had made on the former cluster was leaving that cron running on a fast schedule.

I can tweak that to run at 15 minutes past the hour, every 4 hours:

$ cat values.yaml

mkdocsConfig: |
  site_name: Freshbrewed Documentation
  docs_dir: otherdocs
  nav:
    - Introduction: 'index.md'
    - 'about.md'
    - 'Issue Tracker': 'https://example.com/'
    - 'Files': '/files/'
ingress:
  enabled: true
  className: ""
  annotations:
    cert-manager.io/cluster-issuer: azuredns-tpkpw
    ingress.kubernetes.io/ssl-redirect: "true"
    kubernetes.io/ingress.class: nginx
    kubernetes.io/tls-acme: "true"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
  hosts:
    - host: mkdocwatcher.tpk.pw
      paths:
        - path: /
          pathType: ImplementationSpecific
  tls:
    - secretName: mkdockwatcher-tls
      hosts:
        - mkdocwatcher.tpk.pw
cronjob:
  schedule: "15 */4 * * *"
pvc:
  storageClassName: managed-nfs-storage
  size: 5Gi

$ helm upgrade --install mkdocswatcher -n mkdocswatcher --create-namespace -f ./values.yaml ./mkdocswatcher/

Release "mkdocswatcher" has been upgraded. Happy Helming!

NAME: mkdocswatcher

LAST DEPLOYED: Wed Mar 6 18:06:43 2024

NAMESPACE: mkdocswatcher

STATUS: deployed

REVISION: 3

NOTES:

1. Get the application URL by running these commands:

https://mkdocwatcher.tpk.pw/

And we see it has been adjusted

$ kubectl get cronjob -n mkdocswatcher

NAME                    SCHEDULE       SUSPEND   ACTIVE   LAST SCHEDULE   AGE
mkdocswatcher-cronjob   15 */4 * * *   False     0        2m40s           7m57s
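In a cron expression the second field is the hour, and `*/4` matches every fourth hour counting from 0. Combined with `15` in the minute field, the job fires at 00:15, 04:15, 08:15 and so on. The small helper below, with a hypothetical name, expands a step value into the hours it matches, which is handy when choosing a different cadence.

```shell
# step_hours STEP
# Print the hours (0-23) matched by a cron hour field of the form */STEP.
# Hypothetical helper for illustration only.
step_hours() (
  step=$1
  h=0
  out=
  while [ "$h" -lt 24 ]; do
    # Append the hour, space-separated
    out="${out:+$out }$h"
    h=$((h + step))
  done
  echo "$out"
)

step_hours 4   # prints: 0 4 8 12 16 20
step_hours 6   # prints: 0 6 12 18
```

So a six-hour cadence would be written as `15 */6 * * *`.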

Summary

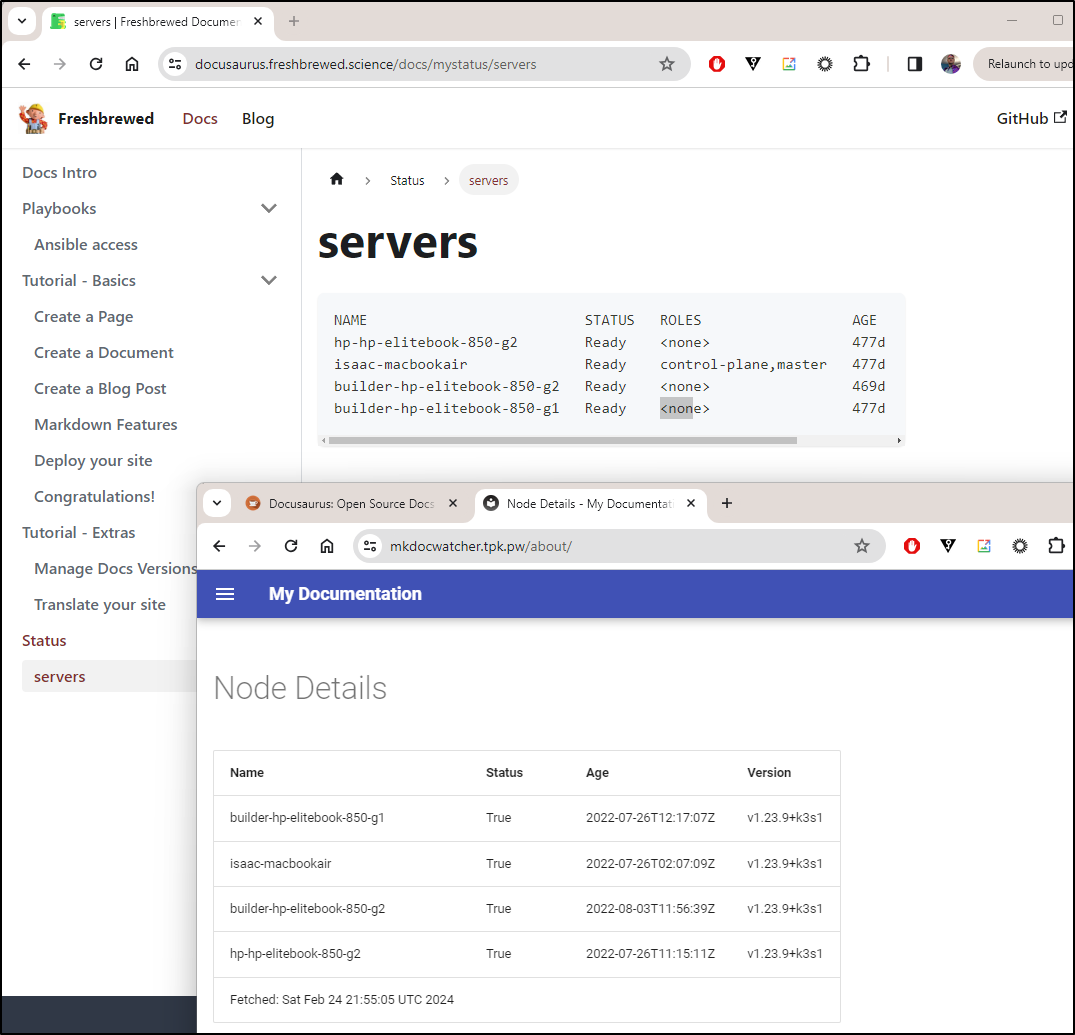

This was really quite fun. I had started this blog one afternoon thinking I would just cover a few different related Open-Source apps like I often do for smaller tools. However, the more I played with MkDocs, the more I wanted to “try one more thing”. We often have needs for generated documentation. Back in November, I put out a two-part writeup on Docusaurus. In Part 1 I covered auto-generated content using configmaps as well (which is still running).

We can see they both serve similar content now

But I might lean more into the MkDocs version as it’s significantly lighter weight.